Protecto AI Guardrails

Make Your AI Features Safe

Safeguard your AI with Protecto AI guardrails, preventing sensitive data leaks and ensuring compliance

- Trusted by Engineering Teams Building AI at Scale

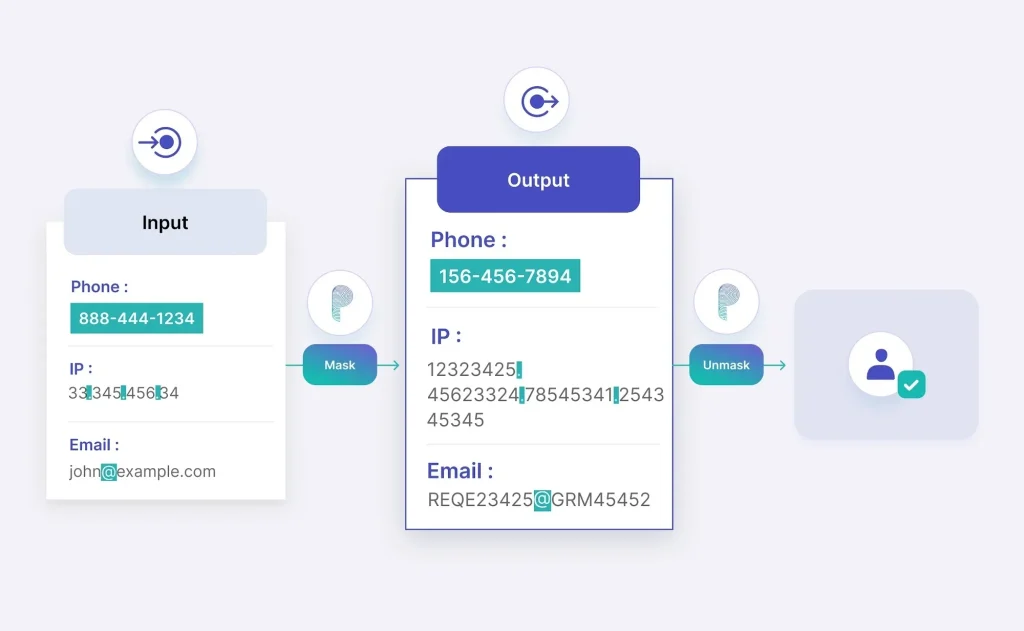

Accuracy-Preserving Masking

Our unique data masking technology keeps your data safe without compromising LLM accuracy.


Precision PII/PHI Detection

Our custom models identify and redact sensitive data with pinpoint accuracy, preserving the context and meaning of your AI interactions.

Protection Across AI Lifecycle

Integrate our security guardrails into prompts, responses, and data ingestion for RAG pipelines and agents.

Role-based Access to PII/PHI

Enable role-based access to sensitive data in RAG/Agent applications. Grant authorized users access to the original data when needed, while maintaining control and security.
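To illustrate the idea, here is a minimal Python sketch of role-gated unmasking. It assumes that masked text carries placeholders such as `<PERSON_1>` and that a mapping from each placeholder to its original value is available. The role names, the placeholder format, and `render_for_user` are hypothetical and do not represent Protecto's API.

```python
import re

# Hypothetical roles that are allowed to see original values.
AUTHORIZED_ROLES = {"clinician", "compliance_officer"}

# Assumed placeholder format, e.g. <PERSON_1>, <SSN_2>, <EMAIL_ADDRESS_3>.
PLACEHOLDER = re.compile(r"<[A-Z][A-Z_]*_\d+>")


def render_for_user(masked_text: str, mapping: dict[str, str], role: str) -> str:
    """Return original values to authorized roles; everyone else sees masked text."""
    if role not in AUTHORIZED_ROLES:
        return masked_text
    # Swap each known placeholder for its original value; unknown ones stay masked.
    return PLACEHOLDER.sub(lambda m: mapping.get(m.group(0), m.group(0)), masked_text)


masked = "Patient <PERSON_1> was diagnosed with <CONDITION_1>."
mapping = {"<PERSON_1>": "Jane Doe", "<CONDITION_1>": "asthma"}
print(render_for_user(masked, mapping, role="analyst"))    # stays masked
print(render_for_user(masked, mapping, role="clinician"))  # original values restored
```

Keeping the check at render time means the stored data and the LLM context stay masked; only the final view shown to an authorized user is unmasked.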

Learn how to build customer trust in your AI

Safeguard Your AI Features

Protecto safeguards your AI against sensitive data leaks. Ensure safety, privacy, and compliance, and uphold your reputation.

Protect Your Users

Our AI Guardrails equip your AI applications with the features needed to protect your customers' sensitive information.

Build Customer Trust and Adoption

Empower your customers to confidently share sensitive data with your AI, backed by our robust security features

Low Latency

Scans and filters PII/PHI, other sensitive data, and harmful content in real time, delivering the low latency your AI interactions demand.

Proactive Safety Checks

Filter out hate speech, profanity, and other harmful content before it reaches your users. Safeguard your brand reputation

Meet Compliance

Meet the requirements of privacy regulations (HIPAA, GDPR, DPDP, CPRA, etc.) by tightly managing sensitive personal data in your AI.

Develop AI Without PII Risks

Use your data for AI training and RAG/Agent development without exposing PII/PHI, while maintaining AI accuracy.

Curious about building trust in your AI?

Why Protecto?

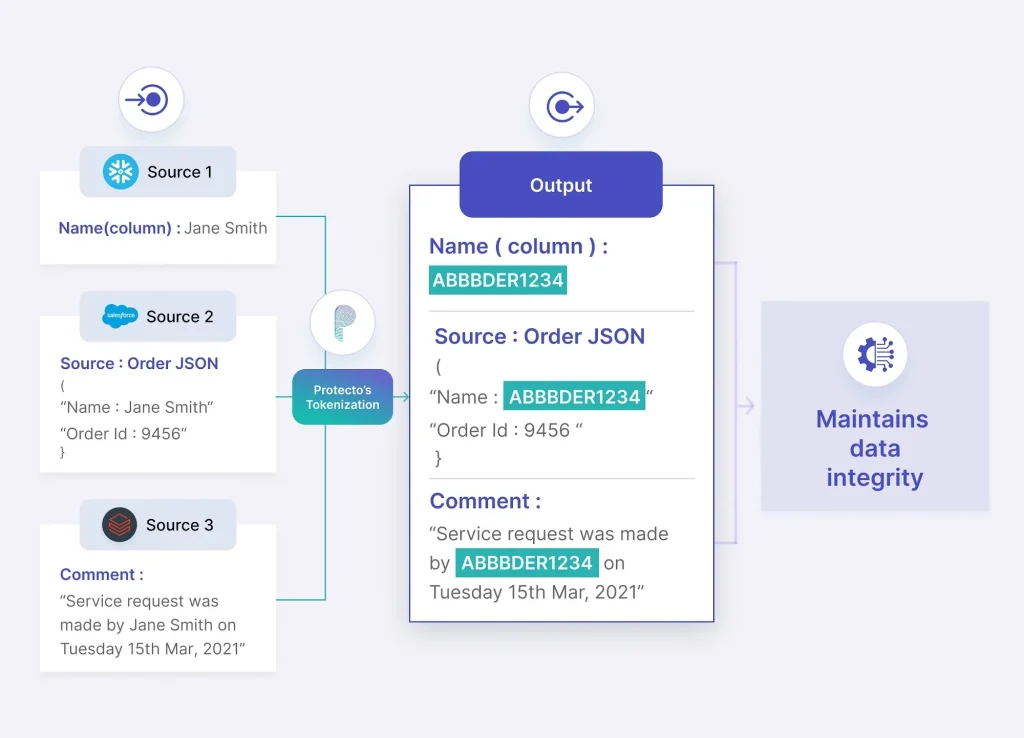

Protecto is the only data masking tool that identifies and masks sensitive data while preserving its consistency, format, and type. Our easy-to-integrate APIs ensure safe analytics, statistical analysis, and RAG without exposing PII/PHI
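As a rough illustration of what "consistency, format, and type" can mean, here is a minimal Python sketch of consistent, format-preserving masking. It assumes a secret key and uses HMAC-SHA256 as a deterministic source of replacement characters, so the same input always produces the same masked output, and digits, letters, and separators stay in their positions. This is an illustrative technique, not Protecto's algorithm; `mask_value` and the key are hypothetical, and unlike tokenization this mapping is one-way.

```python
import hashlib
import hmac
import string


def _keystream(key: bytes, value: str, length: int) -> bytes:
    """Derive at least `length` pseudo-random bytes from the key and the value."""
    data = value.encode("utf-8")
    stream = b""
    counter = 0
    while len(stream) < length:
        stream += hmac.new(key, data + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return stream


def mask_value(value: str, key: bytes) -> str:
    """Replace each ASCII digit/letter with one of the same class; keep everything else."""
    stream = _keystream(key, value, len(value))
    out = []
    for ch, byte in zip(value, stream):
        if ch in string.digits:
            out.append(string.digits[byte % 10])
        elif ch in string.ascii_lowercase:
            out.append(string.ascii_lowercase[byte % 26])
        elif ch in string.ascii_uppercase:
            out.append(string.ascii_uppercase[byte % 26])
        else:
            out.append(ch)  # separators, spaces, and non-ASCII characters are kept as-is
    return "".join(out)


key = b"demo-secret-key"  # hypothetical key; keep real keys in a secrets manager
print(mask_value("555-123-4567", key))  # still looks like a phone number
print(mask_value("Alice Smith", key))   # still two capitalized words
```

Because the output depends only on the key and the input, the same customer name masks to the same value across documents, which keeps joins, counts, and references to that entity consistent for downstream analytics or an LLM.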

Easy to Integrate APIs

Our turnkey APIs are designed for seamless integration with your existing systems and infrastructure, enabling you to go live in minutes.

Data Protection at Scale

Tokenize data through real-time and asynchronous APIs, accommodating high data volumes without compromising performance.

On-Premises or SaaS

Deploy Protecto on your servers or consume it as SaaS. Either way, you get the full benefits, including multitenancy.

Pay as You Go

Scale effortlessly and protect more data sources with our flexible, simplified pricing model

Preserve LLM Accuracy

Don't sacrifice accuracy for security. Our data masking tool is the only one that preserves your hard-earned LLM accuracy

Want to try Protecto in a sandbox?

Frequently Asked Questions

Not all guardrails preserve accuracy. Our AI guardrails are designed to maintain the accuracy and integrity of AI models while providing protection. By carefully balancing security with performance, the guardrails can mask or redact sensitive data without altering the context or meaning of the AI’s output.

AI guardrails can flag a range of inappropriate content, including hate speech and offensive language. They can mask PII, PHI, and any custom entities. Additionally, they can flag competitor mentions and any other material that could pose a risk to your brand or violate ethical standards. This ensures that AI-driven features produce safe and reliable outputs.

Tokenization involves replacing sensitive data with a token or placeholder; the original data can only be retrieved by presenting the corresponding token to the tokenization system that issued it. Encryption, on the other hand, is the process of transforming sensitive data into a scrambled form that can only be read and understood by using a unique decryption key.
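The tokenization side of this comparison can be sketched in a few lines of Python. This is a minimal illustration, assuming an in-memory dictionary serves as the vault; a production system would persist and secure the vault and restrict who may detokenize. `TokenVault`, its methods, and the `tok_` prefix are hypothetical names, not Protecto's API.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to original values."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random: it has no mathematical relationship to the value,
        # so, unlike ciphertext, it cannot be reversed with a key.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # guard against an unlikely collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault that issued the token can return the original value.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("123-45-6789")
print(token)                    # a random token such as tok_ followed by 16 hex characters
print(vault.detokenize(token))  # 123-45-6789
```

With encryption, anyone holding the key can recover the data from the ciphertext alone; with tokenization, the token is useless without access to the vault, which is why systems that only see tokens can fall outside much of the compliance scope.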

No, tokenization is a widely recognized and accepted method of pseudonymization. It is an advanced technique for safeguarding individuals’ identities while preserving the functionality of the original data. Cloud-based tokenization providers offer organizations the ability to completely eliminate identifying data from their environments, thereby reducing the scope and cost of compliance measures.

Tokenization is commonly used as a security and privacy-preserving measure to protect sensitive data while still allowing certain operations to be performed on the data without exposing the actual sensitive information. Various types of structured and unstructured data that contain Personally Identifiable Information (PII), transaction data, Personal Information (PI), health records, etc. can be tokenized.

Our guardrail APIs are optimized for low latency, ensuring that they operate quickly and efficiently, even in high-demand environments. This means your AI features can process requests rapidly without experiencing significant delays.