Data is moving faster than your controls. In 2024, AI data privacy/security incidents jumped 56.4%, and 82% of breaches involve cloud systems: the same lanes your LLMs, agents, and RAG pipelines speed through every day.

If you’re shipping GenAI inside a regulated org, you need guardrails that protect PII/PHI and IP without crushing context or tanking accuracy. Use this guide to:

- Map the 2025 risk surface across prompts, RAG, agents, logs, and vendors.

- Pinpoint where breaches are actually happening—and why visibility drops.

- Replace brittle regex/DLP with AI-native guardrails.

- Put numbers behind progress with KPIs and a 90/180/365‑day runbook you can operationalize.

The 2025 AI Privacy Landscape: What Changed—and Why It Matters

Malicious actors are developing more sophisticated tools every day, so the privacy and compliance landscape keeps shifting. Here is what has changed.

The new risk surface created by GenAI at scale

GenAI multiplies your risk surface: LLMs, agents, and RAG touch the same record across prompts, retrieval, tools, and logs, often in seconds. Touchpoints expand across storage, prompts/context windows, logs/telemetry, analytics warehouses, and vendor APIs/model gateways.

Real‑world messiness includes unstructured docs, multimodal inputs, schema drift, and live agent streams. The challenge is protecting PII/PHI and IP without crushing context or tanking accuracy.

For example, a contact-center agent transcribes a call, and the account numbers the caller reads out flow into embeddings, vendor APIs, and debug logs.

By the numbers: trends to anchor your risk posture

AI privacy/security incidents rose 56.4% in 2024. Third‑party attribution has doubled to roughly 30% of breaches, while staffing shortages persist in over half of breached orgs. Nearly half of all breaches involve customer PII.

- 26% of orgs admit sensitive data hits public AI; only 17% block/scan via technical controls.

- Cybercrime costs are projected to reach $10.5T in 2025, driving up both the frequency and the impact of breaches.

Anchor actions: map AI data flows, stand up an egress proxy, and enforce masking on capture.
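As a minimal sketch of "masking on capture", assuming Python's standard `logging` module and a hypothetical rule that any run of 8 to 17 digits is an account number, a filter can rewrite each record before any handler writes it to disk, a log pipeline, or a vendor:

```python
import logging
import re

# Hypothetical rule: any run of 8 to 17 digits is treated as an account number.
# Expect false positives (order IDs, timestamps); tune the pattern per institution.
ACCOUNT_RE = re.compile(r"\b\d{8,17}\b")


def _mask_digits(match: re.Match) -> str:
    """Keep the last four digits so support staff can still confirm an account."""
    digits = match.group(0)
    return "*" * (len(digits) - 4) + digits[-4:]


class MaskOnCaptureFilter(logging.Filter):
    """Masks account numbers in a record before any handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg % args first, so args get masked too
        record.msg = ACCOUNT_RE.sub(_mask_digits, message)
        record.args = ()  # args are already merged into msg
        return True  # keep the record; only its contents change


logger = logging.getLogger("transcripts")
logger.addFilter(MaskOnCaptureFilter())
```

A logger-level filter only sees records created by that logger; attaching the same filter to each handler instead also covers records propagated from child loggers.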

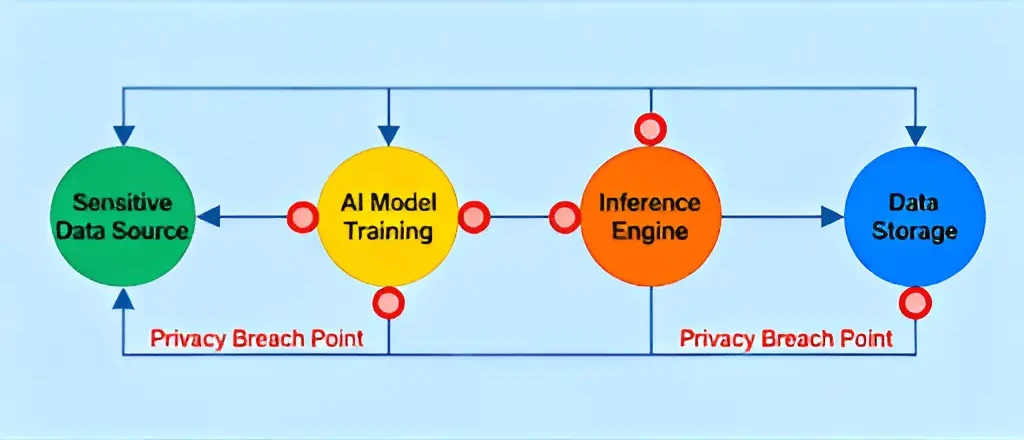

Where breaches are actually happening

Misconfigured AI storage and over-permissive IAM: AI training data, prompt logs, or embeddings often sit in misconfigured cloud buckets with overly broad IAM roles. A single slip can expose regulated data to unauthorized users or attackers.

Public LLM misuse by employees: Employees paste sensitive tickets or patient details into public LLMs, sending PHI/PII outside your controlled environment. This creates compliance and data residency risks you can’t monitor or undo.

Leaky RAG connectors: Retrieval systems often bypass document ACLs, exposing restricted content. Embeddings stored without isolation or encryption can still leak identifiers through reconstruction.

Weak vendor controls and missing DPAs: Third-party LLM APIs and vector DBs may log prompts or replicate data without contractual limits. Without DPAs or BAAs, you risk uncontrolled data use and regulatory non-compliance.

Why traditional privacy tools struggle

Regex redaction and static DLP break on unstructured, multimodal, and streaming data, and black‑box models obscure where data goes. You need AI-native guardrails that preserve utility: reversible masking, entropy‑based redaction, egress proxies, and model‑gateway policies with full audit.
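One common reading of "entropy-based redaction" is to flag tokens whose characters look random (API keys, session tokens) instead of matching a fixed regex list. A minimal sketch, assuming whitespace-separated tokens and illustrative thresholds (20 characters, 3.5 bits per character) that you would tune against labeled data:

```python
import math
from collections import Counter


def shannon_entropy(token: str) -> float:
    """Bits per character of the token's own character distribution."""
    if not token:
        return 0.0
    n = len(token)
    return -sum((c / n) * math.log2(c / n) for c in Counter(token).values())


def redact_high_entropy(text: str, min_len: int = 20, threshold: float = 3.5) -> str:
    """Replace long, random-looking tokens; leave ordinary words alone.

    Thresholds are illustrative assumptions. Splitting on whitespace also
    collapses runs of spaces and newlines in the output.
    """
    tokens = []
    for token in text.split():
        if len(token) >= min_len and shannon_entropy(token) >= threshold:
            tokens.append("[REDACTED_SECRET]")
        else:
            tokens.append(token)
    return " ".join(tokens)
```

Long natural-language words repeat letters and score well below random strings, which is why length and entropy together catch secrets that no regex anticipated.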

In short: focus on the few controls that move risk fast—map touchpoints, minimize data by default, and operationalize guardrails that protect without breaking model accuracy.

How AI Infringes on Privacy: Key Mechanisms Explained

Excessive collection and purpose creep

Pipelines often over‑collect across prompts, vector stores, and logs, then reuse that data for “quality” or “fine‑tuning” without a lawful basis. That is classic purpose creep. Counter it with minimization: define data contracts per use case, drop fields at ingest, and set retention to days, not months.
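A data contract can be as simple as an allowlist of fields plus a retention window per use case. This sketch assumes records arrive as dicts; `DataContract`, `apply_contract`, and the `support_summary` contract are hypothetical names for illustration:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataContract:
    """Hypothetical per-use-case contract: which fields may enter, and for how long."""
    use_case: str
    allowed_fields: frozenset
    retention_days: int


def apply_contract(record: dict, contract: DataContract, now: datetime) -> dict:
    # Minimization at ingest: anything the contract does not name is dropped.
    kept = {k: v for k, v in record.items() if k in contract.allowed_fields}
    # Retention travels with the record as an explicit expiry timestamp.
    kept["_expires_at"] = (now + timedelta(days=contract.retention_days)).isoformat()
    kept["_use_case"] = contract.use_case
    return kept


SUPPORT_SUMMARY = DataContract(
    use_case="support_summary",
    allowed_fields=frozenset({"ticket_id", "issue_text", "product"}),
    retention_days=7,
)

record = {"ticket_id": "T-1", "issue_text": "Card declined", "ssn": "123-45-6789", "product": "cards"}
clean = apply_contract(record, SUPPORT_SUMMARY, datetime.now(timezone.utc))
# clean keeps ticket_id, issue_text, and product; "ssn" never reaches the pipeline
```

Because the contract is an allowlist, a new upstream field is dropped by default until someone deliberately adds it.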

Inference and re-identification attacks

Inference attacks derive sensitive traits (health, income, location) from “anonymous” features and model outputs. Model inversion and membership inference can expose training artifacts even without direct dataset access; run red‑team tests before launch.
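A simple pre-launch red-team check is a loss-threshold membership inference test: if the model's loss on known training records is reliably lower than on held-out records, an attacker can guess who was in the training set. This sketch assumes you already have per-example losses from your own evaluation harness; the function names are hypothetical:

```python
from typing import Sequence


def membership_advantage(member_losses: Sequence[float],
                         nonmember_losses: Sequence[float],
                         threshold: float) -> float:
    """Attack guesses 'member' when loss <= threshold; returns TPR minus FPR."""
    tpr = sum(loss <= threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss <= threshold for loss in nonmember_losses) / len(nonmember_losses)
    return tpr - fpr


def best_advantage(member_losses: Sequence[float], nonmember_losses: Sequence[float]) -> float:
    """Attacker's best case: try every observed loss as the threshold.

    Near 0 means losses do not separate members from non-members;
    values approaching 1 mean the model leaks training-set membership.
    """
    candidates = sorted(set(member_losses) | set(nonmember_losses))
    return max(membership_advantage(member_losses, nonmember_losses, t) for t in candidates)
```

What advantage counts as acceptable for launch is a policy choice for your review board; this only measures it.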

Memorization, leakage, and unwanted retention

LLMs tend to memorize unique strings (emails, MRNs, account numbers) and can later leak them through prompts, outputs, or agent tool-chain traces.

Telemetry, debug dumps, and analytics warehouses amplify exposure; AI privacy incidents rose 56.4% in 2024, while 26% of orgs paste sensitive data into public AI and only 17% block/scan.

Data poisoning and adversarial manipulation

Poisoned training or RAG corpora (label flips, hidden payloads) skew outputs, trigger exfiltration, or seed hallucinations. Mitigate with document hygiene, signed datasets, and canary PII to detect taint early.
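Signed datasets let an indexer refuse documents that changed after review. This sketch uses HMAC-SHA256 from Python's standard library as a stand-in for signatures (an assumption: a production setup might prefer asymmetric signatures so indexers never hold the signing key), and the manifest format is hypothetical:

```python
import hashlib
import hmac


def sign_document(key: bytes, content: bytes) -> str:
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def build_manifest(key: bytes, docs: dict) -> dict:
    """Map doc_id -> HMAC tag, produced when the corpus is reviewed and approved."""
    return {doc_id: sign_document(key, body) for doc_id, body in docs.items()}


def verify_before_indexing(key: bytes, docs: dict, manifest: dict) -> list:
    """Return doc_ids that are new or altered since approval; index none of them."""
    rejected = []
    for doc_id, body in docs.items():
        expected = manifest.get(doc_id)
        # compare_digest avoids leaking how many leading characters matched
        if expected is None or not hmac.compare_digest(expected, sign_document(key, body)):
            rejected.append(doc_id)
    return rejected
```

A document edited to carry a hidden payload fails verification even if its ID is unchanged, so taint is caught before it reaches the index.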

Profiling and automated decisioning

Eligibility decisions (credit, jobs, care) raise privacy and fairness stakes; you’ll need explainability, documentation, and contestability on tap. Keep decision logs and feature/label lineage to honor DSRs and regulator queries.

Multimodal and streaming specifics

Voice, images, OCR, and screen streams can expose PII/PHI in real time; contact centers are hot zones where masking must be low‑latency and context‑aware.

With 82% of breaches involving cloud, enforce real‑time masking on the edge and standardize egress through a governed proxy.

Link to deeper dive

See “How Can AI Infringe on Privacy? Key Mechanisms Explained” for step‑by‑step defenses and implementation examples.

In short, if it hits your prompt, it hits your risk surface; deploy real‑time masking, strict data contracts, and governed egress now to preserve privacy without tanking model accuracy.

Top AI Data Privacy Risks Facing Organizations in 2025

A practical risk taxonomy for GenAI programs

Map risks so security, data, and product speak the same language—and prioritize fast.

- Data lifecycle (collection, retention, logs)

- Model/algorithmic (training, inference, explainability)

- Human/process (usage, approvals, prompt libraries)

- Vendor/third‑party (models, plugins, annotators)

- Cloud/posture (storage, IAM, gateways)

- Regulatory/sovereignty (DSRs, transfers, residency)

Score simply: 1) rate impact and likelihood from 1 to 5, 2) multiply the two, 3) tackle scores of 15–25 first, 4) assign owners and SLAs.
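The scoring steps translate directly into code. A minimal sketch with a hypothetical `Risk` record; the default SLA and any ratings you feed it are illustrative, not recommendations:

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    impact: int      # 1 (minor) to 5 (severe)
    likelihood: int  # 1 (rare) to 5 (expected)
    owner: str = "unassigned"
    sla_days: int = 30  # hypothetical default remediation SLA

    @property
    def score(self) -> int:
        if not (1 <= self.impact <= 5 and 1 <= self.likelihood <= 5):
            raise ValueError(f"{self.name}: ratings must be between 1 and 5")
        return self.impact * self.likelihood


def triage(risks):
    """Rank by score, then split out the 15 to 25 band to tackle first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [r for r in ranked if r.score >= 15], [r for r in ranked if r.score < 15]
```

Keeping scores in code (or a shared sheet generated from it) lets security, data, and product argue about the ratings rather than the method.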

“If it’s in a prompt, it’s in scope for privacy.”

High-impact risks to prioritize

Public LLM misuse by employees: 26% of orgs admit sensitive data goes into public AI, yet only 17% block or scan that use with technical controls. Picture this: a CSM pastes an SSN into a chatbot, and now it sits in third‑party logs.

Shadow data sitting in docs, wikis, and mailboxes ends up in ungoverned RAG indexes and embeddings. Cloud misconfigurations amplify blast radius; 82% of breaches involve cloud services. Black‑box opacity slows incident detection, DSR fulfillment, and lawful basis checks.

“You can’t secure what you can’t see—or audit.”

Model and data-specific attack classes

- Inversion, membership inference, and extraction targeting sensitive training artifacts.

- Poisoning of training sets and retrieval corpora; prompt injection via content or connectors.

- Adversarial inputs that bypass naive masking and leak secrets through chain‑of‑thought or tool calls.

Bias, discrimination, and fairness as privacy risk

Biased outputs can reveal or infer protected attributes, creating privacy-adjacent harms and legal exposure.

Expect sector audits, and keep decision logs and traceable features ready to back explainability claims.

Staffing and operating model gaps

Skills shortages and manual review won’t scale against a 56.4% rise in AI incidents.

Automate with policy‑as‑code, role‑aware redaction, and shared KPIs across security, data, legal, and product.

“Shadow data is breach fuel.”

Link to deeper dive

See “Top AI Data Privacy Risks Facing Organizations” for control playbooks, checklists, and example policies.

In short: focus on public LLM use, vendors, and cloud posture first, then harden models and eliminate shadow data—quick wins that reduce real risk fast.

AI Data Privacy Breaches: Major Incidents & What They Teach Us

Breaches in 2025 follow a predictable playbook: sensitive data flows into AI, visibility drops, and attackers or accidents do the rest.

Picture this: a contact center transcript streams to a model while account numbers quietly land in “debug” logs—no masking, no guardrails.

Pattern analysis across sectors

- Healthcare: PHI exposure via transcription agents, EHR integrations, and misconfigured data lakes.

- Financial services: PII leaks in RAG-based advisory tools and long‑lived logs with account identifiers.

- Retail/CPG: Supplier and customer data slipping into vendor fine‑tuning pipelines and third‑party plugin leaks.

“Shadow data isn’t invisible to attackers—only to you.”

Common root causes and compounding factors

- Over‑permissive access, lack of reversible masking, and missing egress controls at model gateways.

- Inadequate vendor DPAs and weak audit trails that stall forensics and regulatory reporting.

- Unvetted prompt libraries and no prompt‑injection defenses across RAG inputs and tool outputs.

Cost, scale, and time‑to‑detect

- AI privacy/security incidents rose 56.4% in 2024, signaling accelerating risk.

- Nearly half of breaches expose customer PII, driving remediation and notification costs.

- 82% of breaches involve cloud storage or processing—AI’s data gravity amplifies blast radius.

- 30% of breaches are attributed to third parties, reflecting vendor and plugin sprawl.

- Cybercrime costs are projected at $10.5T in 2025; AI‑fueled breaches are among the fastest‑rising.

Detection often lags while AI‑enabled attacks move at machine speed—automation isn’t optional.

Lessons learned you can apply now

- “Treat logs as production data”: mask at capture, not after the fact, and allow masked values to be revealed only through RBAC-gated reversal.

- Enforce strict data contracts for RAG inputs and embeddings; validate and scrub what gets indexed.

- Establish “least data” patterns for prompts, tools, and intermediate context; block/scan/proxy public LLM use.

AI Data Privacy Statistics & Trends for 2025

- AI privacy/security incidents jumped 56.4% in 2024, with 233 reported cases spanning breaches, bias, misinformation, and misuse.

- Incident mix is shifting: leakage remains dominant, while bias/discrimination and misinformation events are rising as models scale across teams.

- Nearly half of all breaches now involve customer PII, elevating notification, litigation, and remediation costs.

- 26% of orgs admit sensitive data goes into public AI, yet only 17% block/scan/proxy that usage with technical controls.

- Global cybercrime costs are projected to hit $10.5T in 2025, with AI‑powered breaches among the fastest‑rising cost drivers.

- PII-heavy cases face higher penalties, class‑action risk, and brand damage; cloud concentration compounds incident scale and response scope.

- AI incidents trend pricier due to cross‑tool logs, copies in analytics, and model retraining costs.

Guardrails That Preserve Privacy and Accuracy: Governance and Technical Controls

AI incidents jumped 56.4% in 2024 and 82% of breaches now involve cloud, so guardrails can’t be optional; they’re table stakes.

Data-first controls that actually work in AI pipelines

Use policy-as-code with discovery/classification across unstructured stores so minimization and retention travel with the data.

Apply reversible masking and tokenization that keep format and entity types intact; use entropy-based masking to avoid over/under-redaction.

Run async tokenization for high‑throughput jobs; prefer format‑preserving approaches so validations and downstream joins don’t break.
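Reversible, format-preserving tokenization can be sketched with a token vault: each value maps to a random token of the same length and character class, keeping the last four digits so validations and joins still work. This is a vault-based sketch, not format-preserving encryption such as NIST FF1, and `TokenVault` is a hypothetical class; a real deployment would persist the mapping in a protected store and guard `detokenize` with access control:

```python
import secrets


class TokenVault:
    """Hypothetical vault for reversible, format-preserving digit tokens.

    Not format-preserving encryption: tokens are random and the mapping is stored.
    """

    def __init__(self) -> None:
        self._forward: dict = {}  # real value -> token (same input, same token, so joins work)
        self._reverse: dict = {}  # token -> real value

    def tokenize(self, value: str) -> str:
        if not value.isdigit() or len(value) < 8:
            raise ValueError("expects a digit string of at least 8 characters")
        if value in self._forward:
            return self._forward[value]
        while True:
            # Randomize everything except the last four digits, keeping the length.
            prefix = "".join(secrets.choice("0123456789") for _ in range(len(value) - 4))
            token = prefix + value[-4:]
            if token != value and token not in self._reverse:
                break
        self._forward[value] = token
        self._reverse[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # A real deployment gates this call with RBAC/ABAC and writes an audit event.
        return self._reverse[token]
```

Tokens from this sketch will usually fail a Luhn check, so a downstream card validator would need the generator adjusted to emit Luhn-valid tokens.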

In-line protections for prompts, training, and streams

- Add real-time PII/PHI detection and masking for prompts, tools, and agent transcripts. Picture a contact-center stream that redacts SSNs in under 100 ms without losing context.

- Route public LLM traffic through an egress proxy for DLP scanning, secrets filtering, and role‑aware reveal—26% of orgs paste sensitive data into public AI, but only 17% block/scan.

- Standardize policies via model gateways so redaction, rate limits, and audit are consistent across providers.
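An egress proxy ultimately makes a per-request decision: allow, mask, or block. This minimal sketch assumes a US SSN pattern, a hypothetical API-key shape, and a flag saying whether the destination vendor is approved; role-aware reveal and full DLP are out of scope:

```python
import re
from dataclasses import dataclass

# US SSN shape, excluding area numbers 000, 666, 9xx and all-zero group/serial.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
# Hypothetical API-key shape; real deployments use each provider's documented formats.
SECRET_RE = re.compile(r"\b(?:sk|api)[-_][A-Za-z0-9]{16,}\b")


@dataclass
class EgressDecision:
    action: str     # "allow", "mask", or "block"
    payload: str    # what actually leaves the network ("" when blocked)
    findings: list


def inspect_outbound(prompt: str, destination_approved: bool) -> EgressDecision:
    findings = []
    if SECRET_RE.search(prompt):
        findings.append("secret")
    if SSN_RE.search(prompt):
        findings.append("ssn")
    # Secrets never leave; sensitive data never goes to an unapproved destination.
    if "secret" in findings or (findings and not destination_approved):
        return EgressDecision("block", "", findings)
    if findings:
        return EgressDecision("mask", SSN_RE.sub("[SSN]", prompt), findings)
    return EgressDecision("allow", prompt, findings)
```

Returning the findings alongside the action gives the proxy what it needs for audit logs and user-facing "why was this blocked" messages.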

Model-aware risk controls

- Enforce prompt‑injection defenses (allowlists, input validation, content filters) and retrieval hygiene (dedupe, MIME checks, Tika/OCR sanity).

- Scrub training/RAG corpora for sensitive terms; add poisoning‑resistance checks and dataset diffing before indexing.

- Use privacy‑preserving training where fit: synthetic data to de-risk coverage, selective fine‑tuning, and differential privacy for high‑sensitivity attributes.
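For agents, an allowlist means the model can only propose tool calls; code decides whether they run. A minimal sketch with a hypothetical tool registry, where every argument must fully match a per-argument pattern, so instructions smuggled in through retrieved content cannot invoke unlisted tools or stuff extra payload into arguments:

```python
import re

# Hypothetical tool registry: tool name -> {argument name -> validation pattern}
ALLOWED_TOOLS = {
    "lookup_order": {"order_id": re.compile(r"ORD-\d{6}")},
    "search_kb": {"query": re.compile(r"[\w\s?.,'-]{1,200}")},
}


class ToolCallRejected(Exception):
    pass


def validate_tool_call(name: str, args: dict) -> dict:
    """Reject any model-proposed tool call unless it matches the allowlist exactly."""
    schema = ALLOWED_TOOLS.get(name)
    if schema is None:
        raise ToolCallRejected(f"tool not allowlisted: {name}")
    if set(args) != set(schema):
        raise ToolCallRejected(f"unexpected or missing arguments for {name}: {sorted(args)}")
    for arg, pattern in schema.items():
        value = args[arg]
        if not isinstance(value, str) or not pattern.fullmatch(value):
            raise ToolCallRejected(f"argument {arg!r} failed validation for {name}")
    return args
```

This does not detect injected text itself; it limits what injected text can make the agent do, which is the part you can enforce deterministically.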

Access, audit, and accountability

- Implement fine‑grained RBAC/ABAC, just‑in‑time access, and approval workflows for de‑masking keys.

- Capture immutable audit trails for data flows, masking events, and reveal operations with searchable provenance.

- Continuously test with privacy unit tests, canary PII, and shadow deployments to validate policies before rollout.
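Immutable audit trails are often approximated with hash chaining, where each entry commits to the hash of the one before it. This sketch makes tampering detectable, not impossible (pair it with write-once storage for real immutability); the class and event fields are hypothetical:

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class HashChainedAuditLog:
    """Append-only log; editing or deleting an earlier entry breaks verify()."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": datetime.now(timezone.utc).isoformat(), "event": event, "prev": prev}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("ts", "event", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

Periodically anchoring the latest hash somewhere the log writer cannot modify (a separate account or a ticket) also makes truncation of the newest entries detectable.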

Vendor and third‑party governance baked into the stack

- Contract for data‑use limits, retention, subprocessor transparency, and breach SLAs; 30% of breaches trace to third parties.

- Add technical sandboxing, traffic isolation, automated telemetry, anomaly detection, and independent attestations for ongoing assurance.

- Bottom line: ship fast and safe by building “least data” defaults, masking at capture, and enforcing policy at every hop, then verify with continuous tests and immutable audit.

Reference Architectures for Safe GenAI: LLMs, Agents, and RAG

Baseline architecture for privacy-first LLM apps

Start with a privacy-first architecture that assumes 82% of breaches involve cloud systems and 30% trace to third parties—design for least data, least trust.

Ingestion → pre-processing guardrails (PII/PHI detection, entropy-based masking) → tokenization/masking (format-preserving, async) → policy engine (policy-as-code, retention) → model gateway (standardized redaction, provider isolation) → post-processing and logging (immutable audit).

“Mask at capture, reveal by role, log everything”: every reveal goes through the reversible-masking keys, guarded by strict RBAC/ABAC, just-in-time (JIT) access, and HSM/KMS-backed key management.
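Just-in-time, approval-bound access to de-masking can be modeled as short-lived grants that a second person approves. A minimal sketch; `RevealBroker` is hypothetical, and key storage in an HSM/KMS is not shown:

```python
import time
from dataclasses import dataclass, field


@dataclass
class RevealGrant:
    user: str
    field_name: str
    approved_by: str
    expires_at: float  # epoch seconds


@dataclass
class RevealBroker:
    """Hypothetical broker that only allows de-masking under an unexpired, approved grant."""
    grants: list = field(default_factory=list)

    def grant(self, user, field_name, approved_by, ttl_seconds=900, now=None):
        if approved_by == user:
            raise PermissionError("self-approval is not allowed")
        now = time.time() if now is None else now
        g = RevealGrant(user, field_name, approved_by, now + ttl_seconds)
        self.grants.append(g)
        return g

    def can_reveal(self, user, field_name, now=None) -> bool:
        now = time.time() if now is None else now
        return any(g.user == user and g.field_name == field_name and g.expires_at > now
                   for g in self.grants)
```

Grants expire on their own, so nobody has to remember to revoke access after an investigation closes.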

RAG-specific patterns

RAG lives or dies on document hygiene, and the stakes keep rising: AI-related incidents rose 56.4% in 2024.

Governed pipelines: classification, sensitive-term scrubbing, deduplication, and indexing policies that block shadow data and stale caches.

Query-time controls: retrieval filtering, redaction before context assembly, traceable citations, and deny-lists to stop prompt-layer contamination. “If it’s not governed, it’s not getting indexed.”
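Query-time controls work best in a fixed order: enforce the source document's ACL, then redact, then assemble context with citations. A minimal sketch, assuming each chunk carries the group ACL copied from its source document at index time and using an email pattern as the example redaction:

```python
import re
from dataclasses import dataclass

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset  # copied from the source document's ACL at index time
    score: float               # retrieval similarity


def assemble_context(candidates, user_groups: frozenset, k: int = 3) -> str:
    """Filter by ACL first, then rank, redact, and keep top-k with citations."""
    visible = [c for c in candidates if c.allowed_groups & user_groups]
    visible.sort(key=lambda c: c.score, reverse=True)
    parts = [f"[{c.doc_id}] {EMAIL_RE.sub('[EMAIL]', c.text)}" for c in visible[:k]]
    return "\n".join(parts)
```

Filtering before ranking matters: a restricted chunk that scores highest must never occupy a top-k slot, or it can leak through truncation bugs or debug logs of the candidate list.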

Live agent and contact center flows

Picture this: a customer reads their card number aloud and your agent transcript streams to the model in 120 ms.

Low-latency streaming redaction for audio/text with fallback policies when confidence drops; cache-safe, zero-reveal transcripts for QA.
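A streaming redactor has to handle numbers split across chunks, such as a card number spoken over two transcript segments. This sketch, assuming a 13 to 19 digit card pattern with optional space or hyphen separators, holds back only a trailing run of digits and separators until the run ends, so ordinary text passes through without waiting:

```python
import re

# Assumed card pattern: 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
RUN_CHARS = set("0123456789 -")


class StreamingRedactor:
    """Redacts card-like numbers even when they arrive split across chunks."""

    def __init__(self) -> None:
        self._pending = ""

    def feed(self, chunk: str) -> str:
        self._pending += chunk
        # Hold back the trailing run of digits/separators: it may continue next chunk.
        cut = len(self._pending)
        while cut > 0 and self._pending[cut - 1] in RUN_CHARS:
            cut -= 1
        ready, self._pending = self._pending[:cut], self._pending[cut:]
        return CARD_RE.sub("[CARD]", ready)

    def flush(self) -> str:
        """Call at end of stream to release whatever is still held."""
        ready, self._pending = self._pending, ""
        return CARD_RE.sub("[CARD]", ready)
```

The sketch does not cap buffer growth for an endless digit run, and spoken digits transcribed as words ("four one one one") need a separate normalization step before this pattern can see them.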

Handle accents, OCR errors, and code-switching via phonetic models, post-OCR cleanup, and bilingual entity detectors—without dropping accuracy.

Multimodal and enterprise integration

Keep context; drop risk.

Image/screen redaction, structured-to-unstructured joins, and ERP/CRM connectors that minimize fields by default.

On-prem, VPC, and hybrid options for sovereignty; traffic isolation for vendor plugins to shrink third‑party blast radius.

Resilience, performance, and accuracy trade-offs

Design to be fast and safe.

Latency budgets with guarded caching (no sensitive payloads), circuit breakers, and egress proxies for public LLMs; tune batch sizes for async tokenization.
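A circuit breaker keeps a failing provider from blowing the latency budget. In this sketch, assuming a synchronous call and an injectable clock for testing, the breaker opens after repeated failures and serves a fallback instead of retrying; the fallback should be a safe response, not a silent reroute of the prompt to an unvetted public LLM:

```python
import time


class CircuitBreaker:
    """Stops calling a failing provider for `cooldown` seconds after repeated errors."""

    def __init__(self, max_failures=3, cooldown=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                return fallback()  # open: skip the provider entirely
            # Half-open: allow one trial call; one more failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

Because the fallback is chosen by the caller, the same breaker can serve a canned "try again shortly" reply for chat and a queued retry for batch jobs.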

Quality metrics: utility loss from masking, FP/FN rates, downstream model accuracy, masked-token coverage per 1,000 prompts, and leakage findings trends. “Accuracy is non-negotiable—privacy is how you keep it in production.”
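Several of these metrics can be computed from a labeled evaluation set. This sketch assumes exact span matching against reviewer labels and reads "masked-token coverage" as the share of tokens masked, with leakage normalized per 1,000 prompts; both are illustrative choices, and `PromptEval` is a hypothetical record type:

```python
from dataclasses import dataclass


@dataclass
class PromptEval:
    """One labeled prompt: spans a reviewer marked sensitive vs. spans the masker masked."""
    gold_spans: set      # (start, end) tuples a reviewer labeled sensitive
    masked_spans: set    # (start, end) tuples the masker actually masked
    total_tokens: int
    masked_tokens: int
    leaked: bool         # a sensitive value reached the model or logs unmasked


def privacy_metrics(evals) -> dict:
    tp = sum(len(e.gold_spans & e.masked_spans) for e in evals)
    fp = sum(len(e.masked_spans - e.gold_spans) for e in evals)  # over-redaction
    fn = sum(len(e.gold_spans - e.masked_spans) for e in evals)  # missed PII
    return {
        "false_positives": fp,
        "false_negatives": fn,
        "recall": tp / (tp + fn) if tp + fn else 1.0,
        "masked_token_coverage": sum(e.masked_tokens for e in evals)
        / sum(e.total_tokens for e in evals),
        "leaks_per_1000_prompts": 1000 * sum(e.leaked for e in evals) / len(evals),
    }
```

Tracking false positives next to recall keeps the utility side visible: a masker can reach perfect recall by masking everything, and the false-positive count shows what that costs.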

A tight, governable path—ingest clean, mask early, gateway consistently—lets you ship reliable GenAI while shrinking breach likelihood and detection time.

Runbook and Maturity Roadmap: From Assessment to Continuous Assurance

AI privacy/security incidents jumped 56.4% in 2024, and 82% of breaches now involve the cloud—don’t wait for the perfect architecture to start shipping guardrails.

“Start small, ship weekly, and measure everything” is how you move from risk to policy-as-code reality.

90-day foundations

- Inventory AI use cases, data flows, and vendors; classify sensitive data in sources and logs, then tag by lawful basis and retention.

- Stand up a policy engine with baseline reversible masking/redaction for prompts, RAG, and streams that preserves model context.

- Implement an egress proxy for public LLMs to block/scan with role-aware reveal—26% of orgs paste sensitive data into public AI, but only 17% scan or block.

180-day scale-up

- Extend guardrails to training/fine‑tuning; harden retrieval corpora and embeddings with deduplication, sensitive-term scrubbing, and async tokenization.

- Deploy continuous monitoring: leakage tests, canary PII, and drift detection across policies and data; alert on anomalies in minutes, not weeks.

- Tighten vendor governance with DPAs, breach SLAs, and technical sandboxing—third‑party attribution has doubled to 30% of breaches.
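Canary PII works by planting unique, synthetic values (in a test ticket, a RAG corpus, or a fine-tuning set) and then scanning outputs and logs for them; any hit is a leakage finding. A minimal sketch with hypothetical canary formats; the `.invalid` top-level domain is reserved, so canary emails cannot reach a real mailbox:

```python
import secrets


def make_canary(kind: str = "email") -> str:
    """Synthetic, unique PII-shaped value that should never appear in any output."""
    tag = secrets.token_hex(6)
    if kind == "email":
        return f"canary.{tag}@example.invalid"
    return f"CANARY-MRN-{tag.upper()}"


def scan_for_canaries(outputs, canaries):
    """Return (output_index, canary) for every planted canary found in an output or log line."""
    return [(i, c) for i, text in enumerate(outputs) for c in canaries if c in text]
```

Running the scan on a schedule against model outputs, telemetry, and analytics exports turns it into the continuous leakage test described above.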

365-day continuous assurance

- Automate DSR fulfillment across AI datasets; integrate model cards and decision logs so audits don’t stall delivery.

- Run red‑team exercises for inversion/poisoning and tabletop breach simulations with legal and PR to compress response time.

- Establish KPIs/KRIs and board reporting (masked‑token coverage, leakage findings per 1,000 prompts, vendor risk scores); iterate with new regulations.

Roles, budget, and ownership

- Define RACI across security, data, legal, and product; set monthly reviews and change-control gates.

- Close expertise gaps with targeted hires and upskilling; prefer automation over headcount for repeatable controls.

- “Treat logs as production data—mask at capture, not after,” and make access to de‑mask keys just‑in‑time and approval‑bound.

Where to go deeper

- Examples of AI Privacy Issues in the Real World

- AI Data Privacy Statistics & Trends for 2025

- Challenges in Ensuring AI Data Privacy Compliance

Build for least data: minimize what enters prompts, standardize controls through a gateway, and prove outcomes with metrics, so your guardrails safeguard both data and AI accuracy. The immediate moves: deploy an egress proxy, baseline masking, and leakage tests; everything else scales from there.

Conclusion

Privacy isn’t a speed bump—it’s how you ship GenAI with confidence. When you align controls to where data actually flows, you reduce breach risk, speed audits, and keep model accuracy intact.

Build for outcomes, not checklists: guardrails that are AI‑native, measurable, and invisible to your users until they need to be visible to your auditors.

FAQs:

1. What is AI data privacy and why is it important in 2025?

AI data privacy refers to protecting sensitive information like PII, PHI, and intellectual property from exposure when using AI systems such as LLMs, agents, and RAG pipelines. With AI incidents rising 56.4% in 2024, safeguarding data is essential to reduce breach risks and maintain regulatory compliance.

2. How do AI data privacy breaches typically happen?

Breaches often occur through misconfigured cloud storage, public LLM misuse, leaky RAG connectors, and weak vendor data controls. These vulnerabilities can cause unauthorized access, data leakage, and compliance failure.

3. What are the biggest AI data privacy risks for organizations?

Top risks include shadow data in ungoverned RAG indexes, over‑permissive IAM settings, inference attacks, and inadequate guardrails for multimodal or streaming data. Addressing these risks quickly can prevent costly data breaches.

4. What technical controls help improve AI data privacy?

Effective controls include reversible masking, egress proxies for public AI traffic, policy‑as‑code enforcement, prompt‑injection defenses, and immutable audit trails. These AI‑native guardrails protect sensitive data without compromising model accuracy.