AI is now woven into everyday work. Customer teams rely on chat assistants, developers use copilots, and analysts ask models to sift through knowledge bases. The biggest shift in 2025 is not a single law or headline. It is the move from occasional audits to continuous, technical controls that run wherever data flows.

The state of AI data privacy in 2025

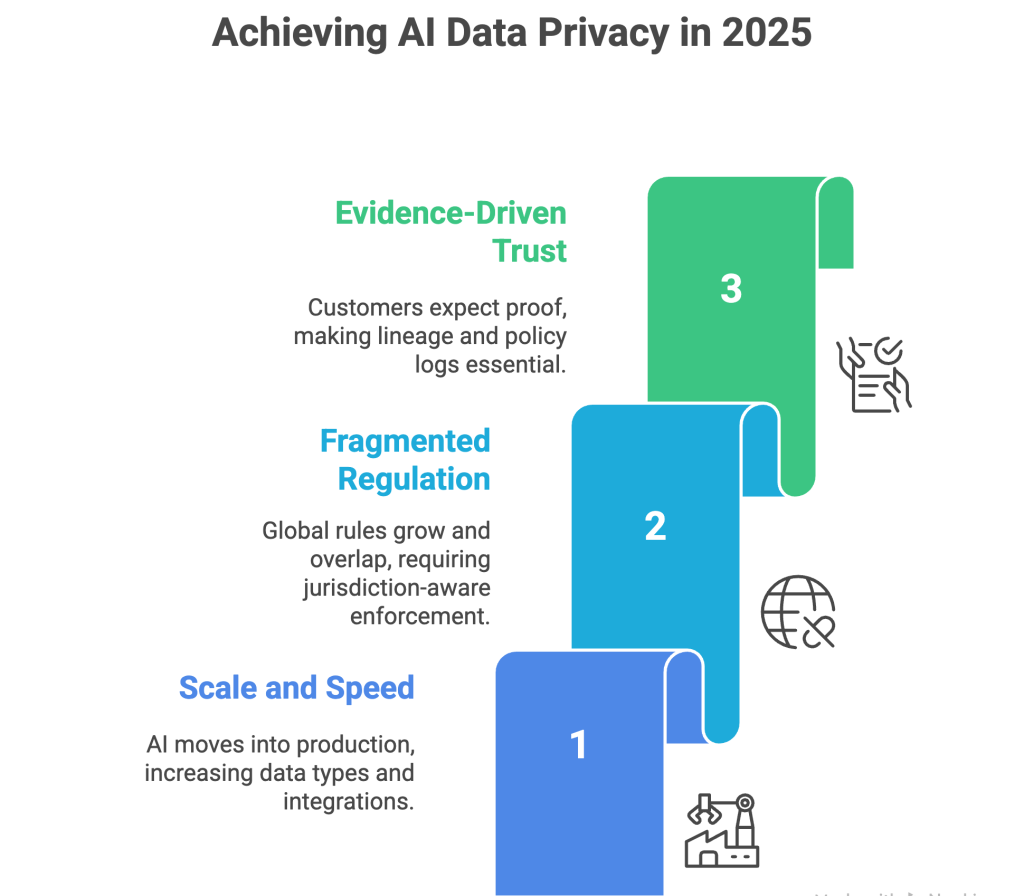

The privacy conversation has matured. Instead of debating whether AI should be adopted, most teams are asking how to deploy it safely, how to prove controls work, and how to keep up as new features ship every week. Three realities define the moment.

- Scale and speed: AI moved from pilots to production across sales, support, HR, finance, and operations. That means more data types, more integrations, and more logs. Risk expands wherever controls are thin.

- Fragmented regulation: Global rules continue to grow and overlap. The practical takeaway is simple: you need jurisdiction-aware enforcement and repeatable evidence, not one-off interpretations.

- Evidence-driven trust: Customers, auditors, and partners now expect proof, not promises. Lineage, policy logs, and measurable outcomes have become the new currency of trust.

Against this backdrop, the most useful AI data privacy trends are the ones that turn principles into daily routines.

Trend 1. Continuous compliance replaces annual checklists

Compliance used to be a periodic activity. In 2025 it is continuous. High-level policies still matter, but the center of gravity has moved into the stack. That means automated discovery of sensitive fields, policy as code, runtime enforcement, and live dashboards with clear metrics.

What to change in practice

- Classify and tag datasets at ingestion, not weeks later

- Enforce purpose and residency rules in code paths and gateways

- Export enforcement events, masking rates, and violations to your SIEM

- Keep lineage that ties datasets, policies, users, and time together

A control plane such as Protecto discovers sensitive data, applies tokenization and redaction, enforces policy at prompts and APIs, and records every decision with policy version and context, which turns audits into exports rather than interviews.
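To make "classify and tag at ingestion" and "export enforcement events to your SIEM" concrete, here is a minimal Python sketch. The detector patterns, the `POLICY_VERSION` string, and the event field names are hypothetical illustrations, not Protecto's API or any SIEM's schema, and forwarding the JSON line to a collector is left out.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical detectors for illustration; real classifiers use richer rules and ML models.
DETECTORS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}

POLICY_VERSION = "2025.1"  # hypothetical policy version identifier


def classify_column(values, threshold=0.8):
    """Return the first tag whose detector matches at least `threshold` of the non-empty values."""
    sample = [v for v in values if v]
    if not sample:
        return None
    for tag, pattern in DETECTORS.items():
        hits = sum(1 for v in sample if pattern.match(v))
        if hits / len(sample) >= threshold:
            return tag
    return None


def tag_dataset(name, columns):
    """Tag every column at ingestion and build a JSON event line for the SIEM."""
    tags = {col: classify_column(vals) for col, vals in columns.items()}
    event = {
        "event_type": "dataset_classified",  # hypothetical event schema
        "dataset": name,
        "policy_version": POLICY_VERSION,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "column_tags": tags,
        "sensitive_columns": sorted(c for c, t in tags.items() if t),
    }
    return tags, json.dumps(event, sort_keys=True)
```

For example, `tag_dataset("support_tickets", {"requester": ["ana@example.com"], "subject": ["Refund request"]})` tags `requester` as `email` and leaves `subject` untagged; the returned JSON line carries the policy version so each classification can be traced later.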

Trend 2. Privacy by design becomes a build standard

Privacy by design is no longer a slogan. It is a set of controls that teams expect to find in every workflow. The big change is placing safeguards where risk begins, rather than after the fact.

Must-have control points

- Ingestion and ETL: Classify and tokenize identifiers; reject files with secrets

- Retrieval and vector stores: Redact sensitive entities before indexing and apply retrieval filters

- LLM gateway: Pre-prompt redaction, output filtering, and tool allow lists

- APIs: Response schemas and scopes with rate limits and anomaly detection

- Logging: Redact by default and shorten retention

These steps are straightforward and close the most common leak paths.
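Two of these control points, pre-prompt redaction at the gateway and rejecting files that contain secrets at ingestion, can be sketched with Python regular expressions alone. The patterns below are illustrative assumptions and will miss many real-world formats; production systems typically pair pattern matching with trained entity recognizers.

```python
import re

# Illustrative patterns only; production redaction needs broader, locale-aware detection.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),  # 13 to 16 digits, optional separators
]

# Strings that look like credentials; a file containing any of them is rejected at ingestion.
SECRET_PATTERNS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # shape of an AWS access key ID
    re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
]


def redact_prompt(text):
    """Replace detected PII with typed placeholders before the prompt leaves the gateway."""
    counts = {}
    for label, pattern in PII_PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


def has_secrets(content):
    """Return True if the content appears to contain credentials."""
    return any(p.search(content) for p in SECRET_PATTERNS)
```

The per-label counts are the kind of masking-rate signal that can feed the dashboards and SIEM exports described under Trend 1.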

Trend 3. Multimodal AI raises new privacy questions

Models increasingly accept and generate text, images, audio, and video. Multimodal inputs carry hidden identifiers, from faces and voices to screen captures that expose names, emails, and record numbers. The privacy challenge shifts from detecting strings to removing sensitive features across modalities.

What to implement

- Image pipelines that blur faces and on-screen identifiers before indexing

- Audio pipelines that redact names, numbers, and addresses in transcripts and remove voice prints when not required

- Video pipelines that apply both image and audio redaction steps

- Consistent purpose tags and residency controls across modalities

The rule is simple. If you would redact it in text, you should protect the equivalent feature in audio or video.
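For the audio pipeline, one approach is to redact the word-level transcript and derive the time spans to silence in the recording. This sketch assumes a hypothetical transcript format (words with start and end times in seconds) and a list of names supplied by an upstream entity recognizer. It covers names and digit-bearing words only, not addresses or voiceprint removal.

```python
import re
from dataclasses import dataclass


@dataclass
class Word:
    """One word of a transcript with timestamps in seconds (hypothetical format)."""
    text: str
    start: float
    end: float


HAS_DIGIT = re.compile(r"\d")


def redact_transcript(words, names, merge_gap=0.05):
    """Mask names and digit-bearing words; return redacted text plus audio spans to mute."""
    blocked = {n.lower() for n in names}  # names assumed to come from an upstream entity recognizer
    out, mute = [], []
    for w in words:
        token = w.text.strip(".,?!").lower()
        if token in blocked or HAS_DIGIT.search(token):
            out.append("[REDACTED]")
            # Merge back-to-back spans so the audio is cut as few times as possible.
            if mute and w.start - mute[-1][1] < merge_gap:
                mute[-1] = (mute[-1][0], w.end)
            else:
                mute.append((w.start, w.end))
        else:
            out.append(w.text)
    return " ".join(out), mute
```

The returned spans would then be handed to whatever audio tooling you use to silence or bleep those segments, so the text and audio stay consistent.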

Trend 4. RAG is powerful and risky

Retrieval-augmented generation (RAG) lets models ground answers in your documents and knowledge bases. If those sources contain unredacted PII, PHI, or secrets, retrieval can surface them in normal answers, often without anyone noticing.

Controls that work

- Redact entities before documents are embedded or indexed

- Tag sources with purpose and sensitivity to drive retrieval filters

- Apply output scanning to strip sensitive values returned by the model

- Maintain lineage that shows which sources and chunks were used

This is a classic case where a little prevention avoids a noisy incident later.
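The four controls can be sketched together as a small in-memory index. Everything here is hypothetical and simplified: `GuardedIndex`, the three sensitivity tiers, and the word-overlap scoring stand in for a real vector store and embedding similarity, and only email addresses are redacted.

```python
import re
from dataclasses import dataclass

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LEVELS = {"public": 0, "internal": 1, "restricted": 2}  # hypothetical sensitivity tiers


def redact_entities(text):
    """Used before indexing and again on model output (emails only in this sketch)."""
    return EMAIL.sub("[EMAIL]", text)


@dataclass
class Chunk:
    chunk_id: str
    source: str
    text: str
    purpose: str
    sensitivity: str


class GuardedIndex:
    """Hypothetical in-memory stand-in for a vector store with privacy controls."""

    def __init__(self):
        self.chunks = []
        self.lineage = []  # (query, chunk_id, source) for every chunk served

    def add(self, chunk_id, source, text, purpose, sensitivity):
        # Redact before embedding or indexing, so raw values never reach the store.
        self.chunks.append(Chunk(chunk_id, source, redact_entities(text), purpose, sensitivity))

    def retrieve(self, query, purpose, max_level, k=3):
        # Retrieval filter: only chunks tagged with the caller's purpose and an allowed tier.
        allowed = [c for c in self.chunks
                   if c.purpose == purpose and LEVELS[c.sensitivity] <= LEVELS[max_level]]
        # Toy relevance score (shared words); a real system ranks by embedding similarity.
        terms = set(query.lower().split())
        ranked = sorted(allowed, key=lambda c: len(terms & set(c.text.lower().split())),
                        reverse=True)[:k]
        self.lineage.extend((query, c.chunk_id, c.source) for c in ranked)
        return ranked
```

Running `redact_entities` over the model's answer as well gives a second line of defense if something slipped past indexing, and the lineage list answers which sources and chunks backed a given response.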

Trend 5. Agent workflows need tool-layer guardrails

Agents call tools such as databases, search, calendars, or ticketing systems. The risk is not only in language output but also in what tools return and how results are joined. Prompt injection can trigger unwanted tool use or expose data meant for a narrow audience.

Protective steps

- Restrict tools to an allow list tied to the task and user role

- Use scoped credentials that limit fields and queries

- Validate tool responses against schemas before the agent sees them

- Log agent plans and tool calls with policy context for reviews

Agents move fast. Controls must match that speed.
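Here is a minimal sketch of three of these steps: a role-based tool allow list, schema validation that strips undeclared fields before the agent sees a response, and an audit entry per call with policy context. Role names, tool names, schemas, and the policy version are hypothetical. Scoped credentials would be enforced by the backing systems and are not shown.

```python
import json
from datetime import datetime, timezone

# Hypothetical role-to-tool allow list.
TOOL_ALLOW_LIST = {
    "support_agent": {"lookup_order", "create_ticket"},
    "analyst": {"run_report"},
}

# Hypothetical response schemas: the only fields (and types) the agent may see.
RESPONSE_SCHEMAS = {
    "lookup_order": {"order_id": str, "status": str, "eta_days": int},
    "create_ticket": {"ticket_id": str},
}

AUDIT_LOG = []  # JSON lines; in practice these would go to your log pipeline


class ToolPolicyError(Exception):
    pass


def call_tool(role, tool_name, tool_fn, policy_version="2025.1", **kwargs):
    """Check the allow list, call the tool, and validate its response before the agent sees it."""
    allowed = tool_name in TOOL_ALLOW_LIST.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool_name,
        "args": sorted(kwargs),  # argument names only, to keep values out of the log
        "allowed": allowed,
        "policy_version": policy_version,
    }))
    if not allowed:
        raise ToolPolicyError(f"{role} may not call {tool_name}")
    schema = RESPONSE_SCHEMAS.get(tool_name)
    if schema is None:
        raise ToolPolicyError(f"no response schema registered for {tool_name}")
    raw = tool_fn(**kwargs)
    # Fail closed on missing or mistyped fields; drop any field the schema does not declare.
    cleaned = {}
    for name, expected_type in schema.items():
        if not isinstance(raw.get(name), expected_type):
            raise ToolPolicyError(f"{tool_name} returned invalid field {name!r}")
        cleaned[name] = raw[name]
    return cleaned
```

Failing closed means a tool that starts returning extra data, such as a customer email, is trimmed back to the declared schema instead of silently widening what the agent can see.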

Trend 6. Purpose limitation and minimization become operational

Purpose tags are the link between legal terms and code paths. In 2025, successful teams attach purpose at ingestion and enforce it at runtime. Data used for support does not wander into marketing. Fields that are not needed are dropped or tokenized.

How to make this real

- Maintain a small catalog of allowed purposes with clear descriptions

- Tag datasets and requests with purpose and block mismatches automatically

- Use automated impact analysis to show how dropping or tokenizing fields affects accuracy

- Record every allow or deny decision with purpose and policy version

Minimization saves you twice: less data to protect and fewer paths to review.
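Purpose checks and minimization can share one code path. This sketch assumes a hypothetical purpose catalog that lists the fields each purpose may use. A request whose purpose is not among the dataset's tagged purposes is denied, unneeded fields are dropped (tokenizing them would be the alternative), and every allow or deny decision is recorded with the policy version.

```python
from dataclasses import dataclass

# Hypothetical purpose catalog: each purpose lists the only fields it may use.
PURPOSE_CATALOG = {
    "support": {"description": "Resolve customer tickets", "fields": {"ticket_id", "order_id", "issue"}},
    "marketing": {"description": "Aggregate campaign analytics", "fields": {"region", "segment"}},
}
POLICY_VERSION = "2025.1"  # hypothetical


@dataclass(frozen=True)
class Decision:
    allowed: bool
    request_purpose: str
    policy_version: str
    dropped_fields: tuple = ()


DECISIONS = []  # every allow or deny decision, for audit


def enforce(record, dataset_purposes, request_purpose):
    """Block purpose mismatches, then drop every field the purpose does not need."""
    if request_purpose not in PURPOSE_CATALOG or request_purpose not in dataset_purposes:
        decision = Decision(False, request_purpose, POLICY_VERSION)
        DECISIONS.append(decision)
        return None, decision
    needed = PURPOSE_CATALOG[request_purpose]["fields"]
    minimized = {k: v for k, v in record.items() if k in needed}
    dropped = tuple(sorted(set(record) - needed))
    decision = Decision(True, request_purpose, POLICY_VERSION, dropped)
    DECISIONS.append(decision)
    return minimized, decision
```

With this shape, a support ticket requested for marketing is denied outright, and a support request for the same ticket never sees the email or phone fields.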

Trend 7. PETs move from research to routine

Privacy enhancing technologies are now everyday tools rather than niche experiments. The most practical stack for many teams blends tokenization and contextual redaction with selective use of differential privacy and federated learning where needed.

When to use which

- Deterministic tokenization for identifiers where joins matter

- Contextual redaction for free text in tickets, notes, and PDFs

- Differential privacy for published aggregates and dashboards

- Federated learning when training across regions or partners

- Secure enclaves and multi-party computation for sensitive joint analysis

Pick the lightest tool that achieves real protection, then add more advanced methods as risk or scale grows.
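Two of the lighter techniques fit in a few lines of Python's standard library. The sketch below shows deterministic tokenization as a keyed HMAC-SHA256 (the same input and key always give the same token, so joins survive) and the Laplace mechanism for a noisy count. The `tok_` prefix, the 16-character truncation, and the parameter names are illustrative assumptions. This tokenization is one-way; a reversible scheme would also need a vault that maps tokens back to values.

```python
import hashlib
import hmac
import random


def tokenize(value, key):
    """Deterministic tokenization via keyed HMAC-SHA256: same value and key give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated to 16 hex chars (64 bits) for readability; keep more for very large tables.
    return "tok_" + digest[:16]


def dp_count(true_count, epsilon, rng=random):
    """Laplace mechanism for a counting query, whose sensitivity is 1."""
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise
```

Smaller `epsilon` values add more noise and give stronger privacy. The HMAC key must be stored and rotated like any other secret, since anyone holding it can recompute tokens for guessed values.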

Trend 8. Vendors and egress need continuous governance

Most stacks include cloud services, model hosts, analytics tools, and integration partners. Vendor risk now evolves daily, which means your program must watch where data goes, not just what contracts say.

Steps that reduce exposure

- Maintain allow lists for outbound endpoints and block unknown destinations

- Require no-retention options, subprocessor visibility, and regional controls

- Verify practice against promises by inspecting actual traffic

- Keep an inventory of connectors and rotate keys on a schedule

This is an area where small gaps can cause big headaches. Automation pays off quickly.
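The first and last steps lend themselves to simple automated checks. This sketch, with hypothetical destinations and a hypothetical 90-day rotation window, checks an observed outbound URL against an allow list (exact host or subdomain, HTTPS only) and lists connectors whose keys are overdue for rotation.

```python
from datetime import date, timedelta
from urllib.parse import urlsplit

# Hypothetical approved destinations; subdomains of these hosts are also allowed.
EGRESS_ALLOW_LIST = {"llm.vendor-a.example", "analytics.vendor-b.example"}


def egress_allowed(url, allow_list=EGRESS_ALLOW_LIST):
    """Allow HTTPS requests whose host is on the list or is a subdomain of a listed host."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allow_list)


def overdue_keys(inventory, today: date, max_age_days=90):
    """List connectors whose keys were last rotated more than `max_age_days` ago."""
    limit = timedelta(days=max_age_days)
    return sorted(name for name, rotated in inventory.items() if today - rotated > limit)
```

Matching on the parsed hostname, rather than a substring of the URL, keeps look-alike hosts such as `llm.vendor-a.example.attacker.net` off the allow list.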

Trend 9. Evidence driven trust becomes a product feature

Trust is not only an internal metric. Customers and partners want to see how you protect their data. Teams are building lightweight trust dashboards that show coverage, response times, and how access and deletion requests are handled.

What to publish

- Coverage of discovery and masking across critical datasets

- Average time to respond to access and deletion requests

- Summaries of blocked prompts and schema violations, with mean time to respond to incidents

- Clear descriptions of model uses, data sources, and opt-out paths

Evidence replaces long explanations. It also speeds sales and partnership reviews.
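The first two metrics are straightforward to compute from inventory and ticketing data. The record layouts below are assumptions for illustration: each dataset lists its sensitive and masked columns, and each request carries received and completed timestamps.

```python
from datetime import datetime
from statistics import mean


def masking_coverage(datasets):
    """Share of critical datasets whose sensitive columns are all masked or tokenized."""
    critical = [d for d in datasets if d["critical"]]
    if not critical:
        return 1.0
    covered = sum(1 for d in critical if set(d["sensitive_columns"]) <= set(d["masked_columns"]))
    return covered / len(critical)


def days_between(received: datetime, completed: datetime) -> float:
    return (completed - received).total_seconds() / 86400


def avg_response_days(requests):
    """Average days from receipt to completion across closed access and deletion requests."""
    closed = [r for r in requests if r.get("completed")]
    if not closed:
        return None
    return mean(days_between(r["received"], r["completed"]) for r in closed)
```

Counting a dataset as covered only when every sensitive column is protected keeps the headline number conservative, which is usually what customers and auditors want to see.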

Trend 10. Real-time monitoring catches slow-burn leaks

Not all incidents are loud. Many privacy issues emerge as slow patterns. Enumeration of vector chunks or API responses, after-hours scraping, or odd sequences of prompts can indicate exfiltration.

What to monitor

- Vector search behavior for abnormal breadth or speed

- Prompt patterns that match jailbreak or injection families

- API usage for fields returned outside of normal paths

- Egress volume and destinations relative to baselines

Alerting should include context and safe actions such as throttle, mask, or block.
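As a sketch of baselining, the function below compares how many distinct vector chunks a session retrieved against a baseline of normal sessions and maps the z-score to an action. The metric and the throttle and block thresholds are assumptions to tune against your own traffic; production detectors usually track several signals at once.

```python
from statistics import mean, stdev


def assess_session(distinct_chunks, baseline, z_throttle=3.0, z_block=6.0):
    """Score a session's distinct-chunk count against normal sessions and pick a safe action."""
    mu, sigma = mean(baseline), stdev(baseline)  # stdev needs at least two baseline points
    if sigma > 0:
        z = (distinct_chunks - mu) / sigma
    else:
        z = float("inf") if distinct_chunks > mu else 0.0
    if z >= z_block:
        action = "block"
    elif z >= z_throttle:
        action = "throttle"
    else:
        action = "allow"
    # Return context with the action so the alert is actionable on its own.
    return {"distinct_chunks": distinct_chunks, "baseline_mean": mu, "z_score": z, "action": action}
```

Throttling first and blocking only at a much higher score limits disruption from false positives while still slowing an enumeration attempt.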

Sector snapshots

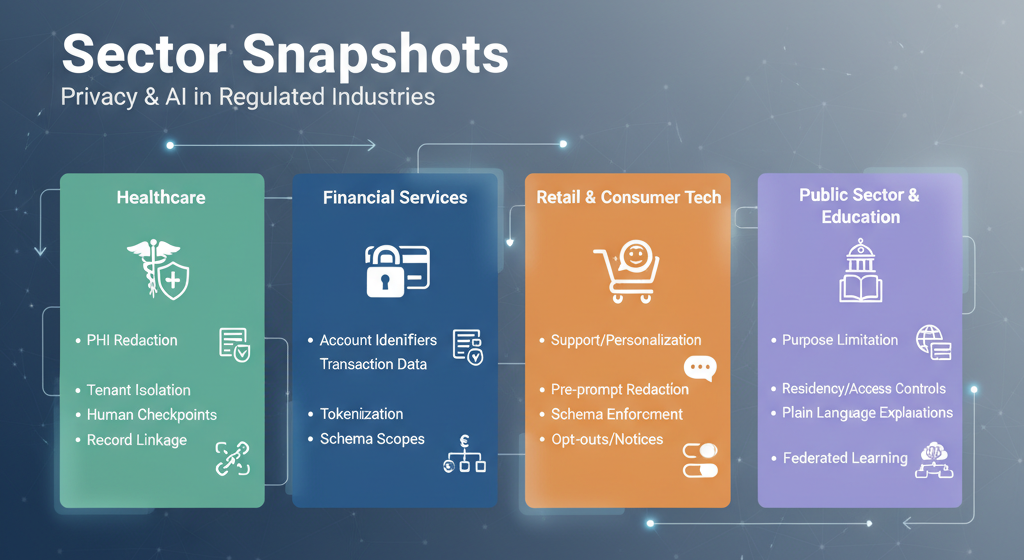

Healthcare

High sensitivity and strict rules make healthcare a bellwether for privacy. Useful patterns include PHI redaction before indexing, tenant isolation for assistants, and documented human checkpoints for patient-impacting decisions. Rights handling benefits from strong record linkage and lineage.

Financial services

Controls target account identifiers, transaction data, and audit evidence. Tokenization preserves joins for analytics. Response schemas and scopes prevent oversharing through support and statement APIs. Retrieval for explanations should exclude raw identifiers while keeping the rationale clear.

Retail and consumer tech

Workflows center on support, personalization, and order management. Pre-prompt redaction and schema enforcement prevent the most common leaks. Opt-outs and clear notices build trust and reduce churn when assistants are customer-facing.

Public sector and education

Purpose limitation and transparency take priority. Residency and access controls are critical, and explanations must be written in plain language. Federated learning and strict redaction help when centralization is constrained.

Future outlook beyond 2025

Several signals suggest where privacy practice is heading next.

- Multimodal redaction becomes a standard feature in data platforms and knowledge tools

- Real-time consent and purpose enforcement expand from consumer apps to enterprise systems

- Continuous assurance replaces point-in-time certifications with always-on evidence

- Privacy-preserving analytics scales, with differential privacy and federated analytics operating behind the scenes

- Agent safety focuses on tool use and data access plans, with preflight checks and postflight scans as default steps

These shifts keep pushing privacy into the fabric of everyday engineering and operations.

How Protecto helps

Protecto is a privacy control plane for AI. It places precise controls where risk begins, adapts policies to region and purpose at runtime, and produces the evidence that customers and regulators expect. For teams navigating AI data privacy trends in 2025, Protecto turns strategy into daily practice.

What Protecto does

- Automatic discovery and classification: Scan warehouses, lakes, logs, and vector stores to find PII, PHI, biometrics, and secrets. Tag data with purpose and residency so enforcement is automatic.

- Masking, tokenization, and redaction: Apply deterministic tokenization for structured identifiers and contextual redaction for free text at ingestion and before prompts. Preserve analytics and model quality while removing raw values. A secure vault supports narrow, audited re-identification when business processes require it.

- Prompt and API guardrails: Block risky inputs and jailbreak patterns at the LLM gateway, filter outputs for sensitive entities, and enforce response schemas and scopes for APIs. Add rate limits and egress allow lists to prevent quiet leaks.

- Jurisdiction-aware policy enforcement: Define purpose limits, allowed attributes, and regional rules once. Protecto applies the right policy per dataset and per call and logs each decision with policy version and context.

- Lineage and audit trails: Trace data from source to transformation to embeddings to model outputs. Answer who saw what and when, speed up investigations, and complete access and deletion requests on time.

- Anomaly detection for vectors, prompts, and APIs: Learn normal behavior and flag enumeration or exfiltration patterns. Throttle or block in real time to contain risk.

- Developer-friendly integration: SDKs, gateways, and CI checks make privacy part of the build. Pull requests fail on risky schema changes, prompts are redacted automatically, and dashboards report real coverage and response times.

The outcome is a system where privacy is continuous and measurable. Teams deliver features quickly, users feel safer, and audits become routine rather than disruptive.

FAQs

- What are the top AI data privacy trends for 2025?

Key AI data privacy trends include continuous compliance replacing annual checklists, privacy by design becoming standard, multimodal AI privacy challenges, and privacy-enhancing technologies moving mainstream.

- How is continuous compliance changing AI privacy?

Continuous compliance replaces periodic audits with automated discovery, real-time policy enforcement, runtime controls, and live dashboards that provide measurable privacy outcomes.

- What privacy challenges does multimodal AI create?

Multimodal AI creates new privacy risks by processing text, images, audio, and video that can contain hidden identifiers like faces, voices, screen captures with personal information, and embedded metadata.

- Which privacy-enhancing technologies are becoming mainstream?

Mainstream privacy technologies include deterministic tokenization, contextual redaction, differential privacy for aggregates, federated learning, and secure multi-party computation for joint analysis.

- How do RAG systems create privacy risks?

RAG systems create privacy risks by potentially surfacing unredacted PII, PHI, or secrets from indexed documents during normal conversations, requiring entity redaction before indexing and output filtering.