AI systems learn from vast amounts of data and then generalize. That power is useful and also risky. Sensitive data can slip into prompts. Proprietary datasets can be memorized by models. Attackers can steer models to reveal secrets or corrupt results. Meanwhile, your company is probably experimenting with multiple AI tools at once. That creates hidden data flows and inconsistent controls.

“Traditional” app security isn’t enough. AI introduces new attack surfaces: model behavior, training pipelines, third-party APIs, and the messy, human inputs that drive them. You need a program tailored to AI privacy and security that treats models, data, and prompts as first-class assets.

Throughout this guide, when we mention automated PII detection, redaction at the prompt level, or ongoing AI usage monitoring, note that Protecto can operationalize those controls in real time.

What Makes AI Risk Different

- Data gravity: AI concentrates sensitive data. Prompts, context windows, embeddings, and logs all accumulate PII and confidential content.

- Model behavior is probabilistic: You don’t get the same output every time, which complicates testing and incident reproduction.

- Opaque internals: Even with interpretable models, it’s hard to know if training data is memorized or if a prompt boundary will hold.

- Expansive supply chains: Models are built from datasets, frameworks, external APIs, plugins, model hubs, and orchestrators.

- Shadow AI: Teams adopt new AI tools without approvals, feeding them code, customer data, or contracts.

Because of these factors, a single “security gate” can’t protect you. You need layered controls around data ingestion, model access, and outputs.

The Top Risks You Must Address

Below are the core risks, why they matter, evidence to look for, and fast wins.

1) Sensitive Data Leakage via Prompts and Outputs

- What it is: Users or systems paste PII, PHI, or secrets into prompts. Models echo or transform that data. Logs, context caches, and vector databases retain it.

- Why it matters: Breach exposure, regulatory penalties, and customer trust damage.

- Signals: Long prompt histories, oversized context windows, unredacted logs, no PII scanning.

- Fast wins: Client-side and server-side redaction, output filters, data minimization.

2) Model Inversion and Membership Inference

- What it is: Attackers probe a model to infer whether a specific record was in the training set or reconstruct representative data.

- Why it matters: Exposure of training subjects, re-identification risk, and legal questions about consent.

- Signals: Highly overfit models, verbatim memorization in unit tests, lack of differential privacy.

- Fast wins: Regular memorization checks, differential privacy during training, rate limiting, anomaly detection.

3) Prompt Injection and Tool Abuse

- What it is: Malicious inputs override system prompts or jailbreak guardrails. With tool use, the model may exfiltrate data or run harmful actions.

- Why it matters: Data leakage, unauthorized actions, credential exposure.

- Signals: Sudden instruction overrides, unexpected tool calls, long chain-of-thought prompts in user inputs.

- Fast wins: Input allow/deny patterns, content sanitization, strict tool schemas, output validation.

4) Data Poisoning in Training or RAG Pipelines

- What it is: Attackers inject tainted data that biases predictions or triggers behaviors when certain prompts are used. In RAG, poisoning the knowledge base can be enough.

- Why it matters: Subtle integrity issues are hard to detect and may cause bad decisions at scale.

- Signals: Unexplained performance drift, specific queries returning odd content, unsigned data sources.

- Fast wins: Data provenance, signed datasets, content moderation on ingested docs, canary documents to detect manipulation.

5) Embeddings and Vector Database Risks

- What it is: Embeddings can encode sensitive text. Poorly secured vector stores expose searchable representations of your data.

- Why it matters: Attackers can re-identify content or harvest proprietary knowledge.

- Signals: Publicly accessible indexes, weak tenant isolation, no encryption at rest.

- Fast wins: Access controls, per-project keys, encryption, deletion policies, and PII scrubbing before indexing with Protecto.

6) Supply-Chain and Model Hub Exposure

- What it is: Pulling community models, datasets, or prompts that include malware, backdoors, or disallowed content.

- Why it matters: Hidden implants, license violations, and brand risk.

- Signals: Unverified model artifacts, unclear licenses, no SBOMs for AI assets.

- Fast wins: Curate an approved registry, scan artifacts, enforce signatures, store hashes.

7) API Keys, Secrets, and Cost Abuse

- What it is: Exposed keys in prompts, logs, or repos. Attackers use them to run expensive jobs or access data.

- Why it matters: Financial loss and potential data exposure.

- Signals: Cost spikes, keys hardcoded in notebooks, keys visible in chat history.

- Fast wins: Vaulted secrets, short-lived tokens, budget limits, egress controls, and secret scrubbing with Protecto.

8) Weak Governance and Compliance Gaps

- What it is: No inventory of models, datasets, or third-party tools; unclear roles; no DPIAs; no retention rules.

- Why it matters: Legal exposure and inconsistent controls.

- Signals: Multiple AI pilots with separate logins, unknown data flows, duplicated datasets.

- Fast wins: Central registry, RACI matrix, standard DPIA template, policy-as-code.

9) Model Theft and Intellectual Property Leakage

- What it is: Weights stolen from storage, distillation by competitors, or outputs that leak proprietary logic.

- Why it matters: Loss of competitive advantage.

- Signals: Unprotected checkpoints, broad S3 access, no tamper evidence.

- Fast wins: Encrypted checkpoints, access logs, watermarking, secure enclaves.

10) Shadow AI and Unmanaged Tools

- What it is: Teams quietly use chatbots and plugins to speed up work, often with customer data.

- Why it matters: Data leaves your boundary, and you can’t prove compliance.

- Signals: Unknown SaaS spend, browser extensions, copied data into random chatbots.

- Fast wins: Clear acceptable-use policy, sanctioned tools with a gateway, usage monitoring via Protecto.

Risk Priority Matrix

| Risk | Likelihood (typical enterprise) | Impact | Priority |

| Sensitive data leakage | High | High | Critical |

| Prompt injection/tool abuse | Medium-High | High | Critical |

| Data poisoning | Medium | High | High |

| Embedding/vector store exposure | Medium | Medium-High | High |

| Supply-chain/model hub | Medium | Medium-High | High |

| Keys and cost abuse | Medium | Medium | Medium-High |

| Governance/compliance gaps | High | Medium-High | High |

| Model theft | Low-Medium | High | Medium-High |

| Shadow AI | High | Medium | Medium-High |

This matrix is a starting point. Re-score based on your data sensitivity, model exposure, and regulatory environment.

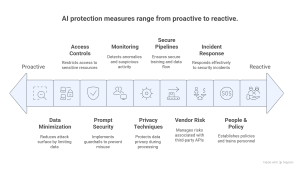

Core Protection Measures

Think in layers: protect data before it enters the model, constrain what the model can do, and monitor what comes out.

1) Data Minimization and Redaction

- Classify before you compute: Tag PII, PHI, PCI, and confidential documents where they live.

- Minimize prompts: Pass only the fields needed for each task; avoid raw dumps of tickets or contracts.

- Redact at the edge: Remove names, emails, IDs, and secrets from prompts client-side or at your gateway.

- Sanitize outputs: Filter responses for sensitive echoes.

- How tools help: Protecto detects and redacts PII token-by-token, so users don’t accidentally send secrets to third-party models.

2) Access Controls for Models, Data, and Tools

- Least privilege: Assign per-model RBAC/ABAC. Limit who can see training data, embeddings, and logs.

- Context windows with policy: Enforce document-level permissions when assembling RAG context.

- Network and tenant isolation: Use separate projects, VPC peering, and per-environment keys.

- Secrets management: Rotate keys, avoid embedding them in prompts or notebooks.

3) Prompt Security and Guardrails

- System prompts: Keep them short and locked; avoid revealing internal instructions in outputs.

- Allow/deny patterns: Block jailbreak phrases, data exfiltration patterns, and suspicious tool arguments.

- Structured outputs: Force JSON schemas and validate before taking action.

- Safety feedback loops: If a prompt triggers a block, explain the policy and offer a safer path.

4) Monitoring and Observability

- Full-fidelity logs: Capture prompts, context docs, tool calls, outputs, and latency with privacy scrubbing.

- Anomaly detection: Watch for prompt types that correlate with leakage or cost spikes.

- Canary prompts: Run known trigger phrases on a schedule to test guardrails.

- Audit trails: Tie AI events to user identities and data sources. Protecto can centralize usage visibility across approved tools.

5) Privacy-Preserving Techniques

- Differential privacy: Adds noise during training to limit memorization of specific records.

- Federated learning: Train locally on devices or data silos, send gradients, not raw data.

- Secure enclaves/TEE: Run sensitive workloads in hardware-isolated environments with attestation.

- Homomorphic encryption: Compute over encrypted data for narrow tasks; note performance trade-offs.

- Synthetic data: Replace high-risk fields or build shareable datasets for development and testing. Protecto supports privacy filtering and synthesis workflows.

6) Secure Training and RAG Pipelines

- Data contracts: Define schemas, allowed sources, lineage, and retention.

- Signed artifacts: Use checksums and signatures for datasets, checkpoints, and embeddings.

- Content moderation on ingest: Scan documents before they reach your index.

- Version everything: Datasets, prompts, system messages, and tool definitions.

- Isolation for fine-tuning: Separate networks and storage from production inference.

7) Vendor and API Risk Management

- Inventory: Track all AI vendors, plugins, and model endpoints.

- Data-handling terms: Clarify training rights, retention, sub-processors, and breach duties.

- Regional processing: Align with data residency and cross-border rules.

- Runtime controls: Route vendor calls through a policy gateway that enforces redaction and logging. With Protecto, you can apply consistent rules across providers.

8) Incident Response for AI

- Playbooks: Define procedures for prompt leaks, poisoned indexes, or exposed embeddings.

- Containment: Revoke keys, block routes, roll back to safe checkpoints or index snapshots.

- Forensics: Preserve logs and context documents; reproduce with saved seeds and prompts.

- Communication: Plain-language templates for customers and regulators.

9) People, Policy, and Training

- Acceptable use: What data can and cannot be placed into AI tools.

- Red-team exercises: Regularly test your guardrails with internal adversarial prompts.

- Human-in-the-loop: Require review for actions with legal, financial, or safety impact.

- Developer enablement: Reusable secure prompt libraries and vetted toolchains.

Practical Architecture: A Secure AI Reference Pattern

- Client layer:

- Enterprise SSO, user device posture checks.

- Local redaction SDK strips PII before prompts leave the browser or IDE. Protecto fits here with developer-friendly libraries.

- AI gateway:

- Central entry for all model calls.

- Enforces policy: redaction, allow/deny lists, schema validation, rate limits, cost budgets.

- Observability: logs prompts/outputs post-sanitization, tags datasets and teams.

- Inference layer:

- Isolated per-app model endpoints.

- Secrets loaded at runtime from a vault.

- Dedicated vector stores per tenant with encryption and row-level access.

- Knowledge and data layer:

- Curated, signed document sets with lineage.

- Pre-processing: classification, PII removal, chunking, watermarking.

- Continuous scanning for sensitive content with Protecto connectors.

- Training and fine-tuning layer:

- Dedicated secure enclaves, artifact registry with signatures, DP options.

- Approval workflow for new data sources and updated checkpoints.

- Governance layer:

- Model and dataset registry; DPIA templates; evidence collection for audits.

- Usage dashboards, cost and risk scoring, alerting.

A 30-60-90 Day Playbook

Days 1–30: Stabilize

- Inventory models, datasets, vector stores, and vendors.

- Put a gateway in front of model calls; enable basic logging.

- Turn on client-side and gateway redaction for PII with Protecto.

- Freeze risky data flows: no raw tickets or contracts in prompts.

- Ship an acceptable-use policy and short training.

Days 31–60: Harden

- Implement RBAC/ABAC for models and indexes.

- Add prompt allow/deny patterns and output validation.

- Sign and version datasets; set up a clean RAG ingest pipeline.

- Configure anomaly detection on prompts, outputs, and spend.

- Run your first AI red-team exercise and fix findings.

Days 61–90: Prove and Scale

- Differential privacy or enclaves for sensitive training jobs.

- Expand usage dashboards and risk KPIs to executive reports.

- Automate DPIAs and evidence capture for audits.

- Establish an approved vendor registry and runtime checks.

- Bake Protecto rules into CI/CD so new apps inherit controls.

Key Metrics and KPIs

Measure what matters or it won’t improve.

- Privacy metrics:

- Percentage of prompts sanitized before egress

- Sensitive output rate per application

- Time to redact or delete data on request

- Security metrics:

- Number of blocked injection attempts

- Poisoned document detection rate in RAG ingest

- Incidents to containment time

- Governance metrics:

- Coverage of models/datasets in registry

- Completion rate of AI training per team

- Vendor endpoints behind the gateway

- Cost and reliability:

- Cost per successful task

- Failed tool call rate

- Latency within SLO

Protecto can feed several of these metrics by centralizing usage, redactions, and policy events across your AI stack.

Techniques Cheat Sheet

| Technique | Protects Against | How It Works | Limitations |

| Data minimization | Leakage, compliance | Send only needed fields | Requires good data mapping |

| Redaction | Leakage, logging risk | Remove PII/secrets at token level | Can reduce output quality if over-aggressive |

| Differential privacy | Memorization, inference | Adds noise during training | May reduce model accuracy |

| Federated learning | Data residency | Train locally; share gradients | Complex to orchestrate |

| Secure enclaves | Insider risk, theft | Hardware isolation, attestation | Cost and platform limits |

| Homomorphic encryption | Processing on encrypted data | Compute without decrypting | Performance overhead, narrow tasks |

| Signed artifacts | Supply chain, poisoning | Hashes and signatures for data/models | Needs disciplined CI/CD |

| Guardrail prompts | Injection, jailbreaks | System messages, deny patterns | Not foolproof; must be monitored |

| Output validation | Tool abuse | Structure and schema checks | Requires well-defined contracts |

| Monitoring & canaries | Drift, emergent risks | Observe usage, probe models | Needs tuning and review |

Compliance Considerations Without the Drama

- Lawful basis and consent: Know why you’re processing personal data and be able to prove it.

- Data subject rights: Delete or export individual data even if it’s in embeddings or logs.

- Data residency: Keep data in approved regions and document cross-border flows.

- Retention and minimization: Don’t keep prompts or outputs forever.

- Vendor terms: Make sure providers commit to not training on your data unless you say so.

- Documentation: DPIAs, model cards, and usage policies aren’t paperwork for fun. They shield you in audits.

Automating these with your AI gateway and Protecto’s scanning and policy enforcement reduces manual overhead.

Common Myths vs. Reality

| Myth | Reality |

| “If we don’t train models, we have no risk.” | Prompts, outputs, logs, and vector stores still create exposure. |

| “A bigger model is safer.” | Larger context windows can leak more data and are harder to monitor. |

| “We blocked copy-paste; problem solved.” | Users will find workarounds. Fix the workflow, not just the keyboard. |

| “Open-source models are always riskier.” | Risk depends on controls, not the license. Many enterprises run open models securely. |

| “PII redaction ruins results.” | Smart token-level redaction preserves utility and removes only sensitive parts. Tools like Protecto do this automatically. |

Implementation Checklist

Before Go-Live

- Data classification in primary repositories

- Redaction on prompts and outputs

- RBAC/ABAC for models, indexes, and logs

- Signed datasets and versioned prompts

- Gateway with allow/deny rules and schema validation

- Monitoring: logs, canaries, anomaly alerts

- Incident playbooks and on-call rotation

- DPIA and documented data flows

- Vendor terms, data residency verified

After Go-Live

- Weekly review of blocked events

- Monthly red-team prompts and fixes

- Quarterly retraining or index refresh with provenance checks

- Regular key rotation and access audits

- KPI dashboards to leadership

- Continuous training for users

How Protecto Helps

Protecto is built for practical AI privacy and security in real organizations:

- Real-time PII and secret redaction: Token-level detection and removal in prompts and outputs, client-side or at the gateway, so sensitive data never leaves your boundary.

- Unified AI gateway: Route calls to any model or vendor with consistent policies, schemas, allow/deny patterns, and rate limits.

- Data discovery and connectors: Scan storage, tickets, chat tools, and code to identify sensitive content before it enters your AI stack.

- RAG and embeddings protection: Scrub documents prior to indexing, enforce access controls, and monitor queries for potential re-identification.

- Monitoring and compliance evidence: Centralize usage logs, redactions, and policy events; generate dashboards and audit artifacts.

- Developer-friendly: SDKs for web, server, and IDEs; CI/CD hooks for signed artifacts and policy checks.