2025 is the year privacy becomes the competitive layer of AI. If you’re rolling out GenAI, privacy is no longer a compliance chore; it’s a trust-building strategy that accelerates adoption, partnerships, and revenue.

This report distills the most important AI privacy issues, statistics, and trends shaping 2025: what they mean, and how to respond with practical guardrails that protect people and performance.

The State of AI Data Privacy in 2025

AI is embedded in core workflows across industries, but privacy risks have scaled just as fast. The headline numbers many teams are tracking:

2025 AI Privacy Issues Statistics at a Glance

| Metric (2024–2025) | What It Signals | Operational Implication |
| --- | --- | --- |
| ~40% of organizations report an AI-related privacy incident | AI is touching sensitive data sooner than controls are applied | Shift from point tools to pipeline-level guardrails |
| ~15% of employees pasted sensitive info into public LLMs | Human behavior is a major risk amplifier | Pre-prompt redaction + enterprise LLM tenants + training |
| ~70% of adults don’t trust companies with AI | Trust deficit threatens adoption and retention | Transparency + user controls + explainability |
| 26+ U.S. state AI/privacy initiatives in motion | Fragmentation is the norm | Jurisdiction-aware policies and logs for audits |
| 60%+ planning to deploy PETs by end-2025 | Privacy engineering is mainstreaming | Masking, differential privacy, federated learning at scale |
| ~$212B forecast global spend on security/risk | Budgets prioritize AI monitoring & compliance | Automate classification, masking, lineage, and alerts |

Where it fits: Platforms like Protecto discover PII/PHI automatically, apply masking/tokenization at ingestion, redact prompts on the edge, and generate audit-ready lineage—so teams can scale AI with confidence.

Innovation Meets Escalating Risk

AI is no longer a lab project. More than 70% of enterprises run it in production, and with real business impact comes real exposure:

- Chatbot memory & logs: A support bot “helpfully” surfaces too much history; logs capture names, account numbers, even diagnoses.

- RAG without redaction: Retrieval systems index PDFs with PII/PHI; a single query can dredge it up.

- Shadow tools: Teams pilot new agents without procurement or DPO review; retention settings are unknown.

- Prompt injection: Attackers trick assistants into revealing internal notes or tool outputs.

Move from reaction to strategy. Instead of post-incident cleanup, leaders embed privacy into build and run:

- Privacy-first pipelines (mask/tokenize before data moves downstream)

- Converged governance (model oversight + data protection on one dashboard)

- Automated compliance (policy-as-code, continuous monitoring, real-time enforcement)

Protecto tie-in: Use Protecto’s policy engine to enforce masking/tokenization at ETL, pre-prompt redaction at the LLM gateway, and API schema guards—so the system prevents oversharing by default.

Rising Trends in AI Data Privacy Risks

1) Frequency and Nature of Breaches

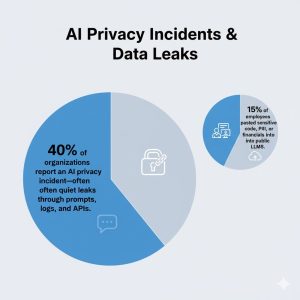

- ~40% of organizations report an AI privacy incident—often quiet leaks through prompts, logs, and APIs.

- ~15% of employees have pasted sensitive code, PII, or financials into public LLMs.

- Common patterns: chatbot data leaks, biased training data, reverse-identification through outputs, and over-permissive APIs.

Playbook

- Before prompts: pre-prompt redaction, secret scanning, enterprise LLM tenants (no-retention).

- Before embeddings: redact PHI/PII in documents; tokenize identifiers deterministically.

- At APIs: validate response schemas; restrict scopes; rate-limit and monitor for exfil patterns.
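
The "before prompts" step can be sketched in a few lines. This is a minimal illustration, not Protecto's implementation: the three regex patterns and the `redact_prompt` name are assumptions made up for this example, and a production detector would need far broader coverage (names, addresses, context-aware entity recognition).

```python
import re

# Hypothetical patterns for illustration only; real detectors cover many more types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


safe_prompt, findings = redact_prompt(
    "Email jane.doe@example.com, SSN 123-45-6789, key sk-abcdefghijklmnop1234"
)
print(safe_prompt)
print(findings)
```

Typed placeholders such as `[EMAIL]` keep the prompt readable for the model, and the per-type counts give you something to log as evidence that redaction ran.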

Protecto’s LLM/API gateway blocks risky inputs, enforces response schemas, and throttles suspicious egress—with audit logs to prove enforcement.

2) Consumer Trust & Adoption

- ~70% of adults say they don’t trust companies to use AI responsibly; ~81% expect misuse.

- Trust impacts usage: one mishap can sink adoption curves—even if features are beloved.

Playbook

- Explainability: clear, role-appropriate disclosures; model cards; “why this answer” tooltips.

- Choice: opt-outs for data use; easy data access and deletion.

- Proof: publish controls and outcomes (privacy incidents, data subject access request (DSAR) response times).

Use Protecto’s lineage and policy logs to populate a trust dashboard: what data is protected, where it flows, and how requests are handled.

3) Safeguards vs. Reality

Leaders worry about accuracy, compliance, and cybersecurity—yet many lack practical controls at scale. The blockers: talent gaps, budget trade-offs, and moving targets.

Playbook

- Automate the basics: auto-discover PII/PHI; mask at ingestion; redaction at the edge.

- Policy-as-code: enforce purposes, residency, and attribute bans in CI/CD.

- Drills: DSAR/erasure tabletop exercises; incident playbooks.
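
To make the policy-as-code item concrete, here is a rough sketch of a check a CI job could run against a dataset descriptor. The `Policy` fields, the descriptor keys (`columns`, `region`, `purpose`), and the sample values are all assumptions invented for this example rather than any product's real format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    banned_attributes: frozenset[str]
    allowed_regions: frozenset[str]
    allowed_purposes: frozenset[str]


def check_dataset(policy: Policy, dataset: dict) -> list[str]:
    """Return human-readable violations; an empty list means the check passes."""
    violations = []
    for column in dataset["columns"]:
        if column in policy.banned_attributes:
            violations.append(f"banned attribute: {column}")
    if dataset["region"] not in policy.allowed_regions:
        violations.append(f"region not allowed: {dataset['region']}")
    if dataset["purpose"] not in policy.allowed_purposes:
        violations.append(f"purpose not allowed: {dataset['purpose']}")
    return violations


# Hypothetical policy and dataset descriptor.
policy = Policy(
    banned_attributes=frozenset({"ssn", "race"}),
    allowed_regions=frozenset({"eu-west-1"}),
    allowed_purposes=frozenset({"support"}),
)
proposed = {"columns": ["user_token", "ssn"], "region": "us-east-1", "purpose": "marketing"}
for problem in check_dataset(policy, proposed):
    print(problem)
```

A CI step would exit non-zero whenever the returned list is non-empty, so a risky schema change fails the pull request instead of reaching production.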

CI integrations flag risky schema changes; runtime policies apply consistently across data stores, LLMs, and APIs.

Regulatory & Compliance Landscape in 2025

Global Policy Shifts

- EU AI Act: risk-based obligations, explainability, dataset quality, and human oversight.

- United States: 26+ state initiatives with varying rules on profiling, children’s data, and biometric limits.

- Other regions: evolving rules on cross-border transfer and localization.

Implication: Compliance is continuous and jurisdiction-aware. You’ll need to show what data was used, where it flowed, and why it was lawful.

Protecto tie-in: Define jurisdiction rules once (purpose, residency); Protecto enforces them at runtime and records policy versions applied per request—gold for audits.

Enforcement Trends

Regulators are shifting from after-the-fact penalties to proactive spot checks—asking for evidence of controls, not just policy docs. Reputational damage now outstrips fine amounts for many brands.

Expect focus on:

- Biometric data handling (face, voice, gait)

- Automated decisions that impact rights (credit, employment, healthcare)

- Provenance and consent documentation

PET Adoption Goes Mainstream

More than 60% of enterprises plan to deploy one or more privacy-enhancing technologies (PETs) by the end of 2025:

- Masking/tokenization (preserve joins and analytics while hiding raw values)

- Differential privacy (limit re-identification)

- Federated learning (train without centralizing sensitive data)

- Secure multi-party computation (compute across parties without revealing inputs)
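
Of the PETs listed above, differential privacy is the easiest to show in miniature. The sketch below applies the standard Laplace mechanism to a counting query (sensitivity 1), sampling the noise as the difference of two exponential draws. The function names are made up for this example, and plain floating-point sampling like this is known to have subtle weaknesses, so treat it as a teaching aid rather than production code.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random()
# Smaller epsilon means stronger privacy and a noisier answer.
print(private_count(100, epsilon=1.0, rng=rng))
print(private_count(100, epsilon=0.1, rng=rng))
```

The noise scale is `1 / epsilon`, which is the utility trade-off in one line: tightening the privacy budget directly widens the error on every released number.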

Protecto operationalizes PETs—deterministic tokenization for structured data, contextual redaction for free text, and vaulted re-identification for narrow, authorized workflows.

Market Trends & Economic Impact

Security & Risk Spend

Global security and risk management spend is projected to be around $212B in 2025, with a growing share for:

- AI monitoring stacks: detect drift, injection, shadow deployments

- Privacy platforms: classification, masking/redaction, lineage, audit

- Governance dashboards: unify data protection and model oversight

The Real Cost of AI Breaches

Direct expenses (investigations, penalties, legal) are the tip of the iceberg. Hidden costs dominate:

- Churn & Conversion: users abandon products perceived as risky

- Sales Cycle Slowdown: longer security reviews and vendor questionnaires

- Innovation Drag: bans and manual workarounds replace scalable guardrails

Corporate Strategies & Gaps

Organizations respond with restrictions:

- 63% limit data employees can paste into AI tools

- 61% restrict which tools are allowed

- 27% ban AI for sensitive workflows

Protecto tie-in: Protecto’s pre-prompt filters and API schema enforcement deliver precision controls—so you can enable use-cases without opening floodgates.

Technology & Governance Convergence

Data Governance Meets AI Governance

Traditional data governance (privacy, accuracy, retention) is merging with AI governance (explainability, bias audits, model drift). The result: live oversight for both data and decisions.

Drivers

- Rising data subject requests (access, correction, deletion)

- Regulator spot checks on actual systems

- User expectations for clear, respectful data use

Automation & Scalable Compliance

Ironically, AI helps manage AI risk:

- Automated discovery of PII/PHI in warehouses, lakes, vector DBs

- Policy-as-code to enforce purposes, residency, and attribute bans

- Real-time monitoring of prompts, vector queries, and API responses

Protecto connects to your data estate, applies policy automatically, and streams high-signal alerts to your SIEM/SOAR with context and remediation options.

Future-State: Privacy-First AI

A mature model includes:

- Proactive risk assessments (AI impact assessments, or AIIAs) before launch and on material changes

- Continuous oversight of inputs/outputs and model drift

- Adaptive controls that respond to jurisdiction and threat changes

Privacy becomes the bridge between innovation and trust.

Practical Benchmarks: Metrics That Matter in 2025

To move beyond slogans, anchor your program to measurable goals. Here’s a metric set aligned to the most-cited AI privacy statistics:

| Area | Metric | 2025 Benchmark Goal |
| --- | --- | --- |
| Discovery | % of critical datasets classified (PII/PHI/biometrics) | >95% coverage |
| Prevention | % of sensitive fields masked/tokenized at ingestion | >90% |
| Edge Safety | % of risky prompts blocked/redacted | >98% |
| API Guardrails | Response schema violations per 10k calls | <1 |
| Monitoring | Mean time to detect (MTTD) privacy events | <15 min |
| Response | Mean time to respond (MTTR) high-severity | <4 hrs |
| Trust | DSAR/erasure fulfillment time | <7 days |
| Governance | % of models with documented lineage & AIIA | 100% |

All eight metrics can be instrumented or evidenced via Protecto’s discovery, masking, LLM/API gateways, lineage, and alerting.
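
As one way to instrument the Monitoring and Response rows, the sketch below computes MTTD and MTTR from incident timestamps and compares them with the benchmark goals. The incident records are made up, and measuring MTTR from detection to resolution is an assumption; your program may define the clock differently.

```python
from datetime import datetime, timedelta


def mean_gap(pairs: list[tuple[datetime, datetime]]) -> timedelta:
    """Average the elapsed time between each (start, end) pair."""
    if not pairs:
        raise ValueError("need at least one incident")
    total = sum((end - start for start, end in pairs), timedelta())
    return total / len(pairs)


# Made-up incidents for illustration: (occurred, detected, resolved).
incidents = [
    (datetime(2025, 3, 1, 9, 0), datetime(2025, 3, 1, 9, 10), datetime(2025, 3, 1, 11, 10)),
    (datetime(2025, 3, 8, 14, 0), datetime(2025, 3, 8, 14, 14), datetime(2025, 3, 8, 17, 14)),
]

mttd = mean_gap([(occurred, detected) for occurred, detected, _ in incidents])
mttr = mean_gap([(detected, resolved) for _, detected, resolved in incidents])

# Compare against the benchmark goals in the table above.
meets_mttd = mttd < timedelta(minutes=15)
meets_mttr = mttr < timedelta(hours=4)
print(mttd, mttr, meets_mttd, meets_mttr)
```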

PETs in Practice: Choosing the Right Control

| PET | Best For | Strength | Watch-Outs |
| --- | --- | --- | --- |
| Deterministic tokenization | IDs, emails, phones | Preserves joins & analytics | Manage token vault access tightly |
| Contextual redaction | Free text, notes, tickets | Removes entities pre-prompt | Tune for false positives/negatives |
| Differential privacy | Aggregate analytics | Limits re-identification risk | Utility loss at small privacy budgets (low ε, strong privacy) |
| Federated learning | Cross-org training | Keeps source data local | Orchestration complexity |
| K-anonymity/l-diversity | Data releases | Simple, intuitive | Weak under linkage attacks |
| Secure MPC | Joint insights across parties | Strong cryptographic guarantees | Higher compute overhead |

Protecto standardizes tokenization and redaction at ingestion and pre-prompt, with vaulted re-identification for narrow, audited workflows—balancing safety and utility.
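
To see why deterministic tokenization preserves joins, consider this small sketch built on a keyed hash (HMAC-SHA256). It is an illustration under stated assumptions, not a description of how Protecto generates tokens: the `tokenize` helper, the `tok` prefix, and the demo key are invented here, and a keyed hash is one-way, so vaulted re-identification would need a separate, access-controlled lookup that is not shown.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes, prefix: str = "tok") -> str:
    """Map a value to a stable keyed token; equal inputs always yield equal tokens."""
    normalized = value.strip().lower()
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; keep more of the digest to lower collision risk.
    return f"{prefix}_{digest[:16]}"


key = b"demo-key-not-for-production"  # in practice, load from a secrets manager

orders = [{"email": "Jane@Example.com", "total": 42}]
tickets = [{"email": "jane@example.com ", "subject": "Refund"}]

# Tokenize the join key in both tables, then join on the token.
orders_tok = [{"user": tokenize(o["email"], key), "total": o["total"]} for o in orders]
tickets_tok = [{"user": tokenize(t["email"], key), "subject": t["subject"]} for t in tickets]
joined = [(o, t) for o in orders_tok for t in tickets_tok if o["user"] == t["user"]]
print(joined)
```

Because the same email always maps to the same token under the same key, analytics and joins keep working while the raw value never leaves ingestion. Rotating the key changes every token, so key management matters as much as vault access.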

30-60-90 Day Plan to Operationalize Privacy

Days 0–30: Visibility & Quick Wins

- Connect discovery to warehouses/lakes/logs; classify PII/PHI/biometrics.

- Tokenize top 10 sensitive fields (emails, phone, account IDs) at ingestion.

- Pre-prompt redaction for all public LLM calls; secrets scanning on paste.

- API schema enforcement for customer/billing endpoints; restrict scopes.

- Shadow AI scan to identify unapproved tools and retention risks.
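
The API schema enforcement item can start as small as an allowlist applied to every response before it leaves the service. The sketch below assumes a hypothetical billing endpoint; `BILLING_SCHEMA`, the field names, and `enforce_schema` are invented for illustration.

```python
# Hypothetical allowlist for a billing endpoint: field name -> expected type.
BILLING_SCHEMA = {"invoice_id": str, "amount_cents": int, "status": str}


def enforce_schema(response: dict, schema: dict) -> tuple[dict, list[str]]:
    """Keep only allowlisted, correctly typed fields; report everything dropped."""
    clean, dropped = {}, []
    for name, value in response.items():
        expected = schema.get(name)
        if expected is not None and isinstance(value, expected):
            clean[name] = value
        else:
            dropped.append(name)
    missing = [name for name in schema if name not in clean]
    if missing:
        raise ValueError(f"response missing required fields: {missing}")
    return clean, dropped


raw = {
    "invoice_id": "inv_1",
    "amount_cents": 4200,
    "status": "paid",
    "card_number": "4111111111111111",  # should never reach the caller
    "internal_note": "VIP",
}
clean, dropped = enforce_schema(raw, BILLING_SCHEMA)
print(clean)
print(dropped)
```

This version silently drops unexpected fields and records their names; a stricter gateway could reject the whole response instead. Either way, the list of dropped fields is worth logging, since a sudden spike often signals an upstream schema change.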

Days 31–60: Governance That Scales

- Define policy-as-code (purposes, residency, attribute bans) and enforce in CI/CD.

- Move to enterprise LLM tenants (no-retention) with tool-call whitelists.

- Add lineage across ETL → vector store → model outputs; export to SIEM.

- Instrument anomaly detection for vector queries and API egress.

- Run a DSAR & erasure drill; document gaps and fixes.

Days 61–90: Prove & Expand

- Gate releases with AIIAs; re-run on model/data changes.

- Add bias checks for high-impact decisions (credit, hiring, health).

- Extend controls to multimodal inputs (audio, image, video).

- Publish an internal trust dashboard (coverage, violations, MTTR).

- Harden vendor contracts (no-retention, sub-processor limits, audit rights).

Protecto tie-in: Protecto accelerates each phase—discovery, masking, LLM/API guardrails, lineage, anomaly detection, and SIEM export—so privacy becomes part of the build, not a bolt-on.

The Strategic Imperative: Privacy as Competitive Advantage

Why does privacy differentiate in 2025?

- Sales velocity: Faster security reviews, fewer redlines.

- Adoption: Users say yes when they believe their data is safe.

- Resilience: Incidents are contained quickly, with credible evidence for stakeholders.

- Speed: Guardrails enable safe experimentation—restrictions only where risk is real.

Think of privacy like brakes on a race car: you don’t win by avoiding brakes; you win by having great brakes so you can move faster with control.

Immediate Next Steps (Do These This Month)

- Map one end-to-end workflow (e.g., support chatbot) and mark every point where sensitive data enters, moves, or leaves.

- Turn on pre-prompt redaction and API schema validation for that workflow.

- Tokenize identifiers in your most-queried analytics tables; keep referential integrity.

- Add lineage so you can answer “did person X’s data train model Y?” without a war room.

- Train teams with a 30-minute “Do/Don’t” session on AI tools to curb the risky pasting seen among roughly 15% of employees.
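
The lineage question in the list above ("did person X’s data train model Y?") reduces to reachability in a graph of data flows. Here is a minimal sketch, assuming lineage is stored as a mapping from each node to its downstream nodes; the node names are hypothetical.

```python
from collections import deque


def reaches(edges: dict[str, list[str]], source: str, target: str) -> bool:
    """Breadth-first search over lineage edges (upstream -> downstream)."""
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for downstream in edges.get(node, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return False


# Hypothetical lineage graph: source record -> ETL output -> training set -> model.
lineage = {
    "crm.customers[person_x]": ["etl.tokenized_customers"],
    "etl.tokenized_customers": ["training_set.support_v3"],
    "training_set.support_v3": ["model.support_bot_v2"],
    "hr.records[person_z]": ["model.hr_ranker_v1"],
}
print(reaches(lineage, "crm.customers[person_x]", "model.support_bot_v2"))
print(reaches(lineage, "crm.customers[person_x]", "model.hr_ranker_v1"))
```

The hard part in practice is capturing the edges reliably at every hop; once they exist, the query itself is cheap.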

How Protecto Helps

Protecto is a privacy control plane for AI. It prevents leaks before they happen, enforces jurisdiction-aware policies where data actually flows, and produces the audit evidence regulators and customers expect—without slowing teams down.

- Automatic Discovery & Classification

Crawl warehouses, lakes, logs, and vector stores to find PII/PHI, biometrics, and secrets. Tag records with purpose and residency so enforcement is automatic.

- Masking, Tokenization & Redaction

Apply deterministic tokenization for structured identifiers and contextual redaction for free text at ingestion and pre-prompt. Preserve joins and model utility while removing raw values.

Result: fewer false alarms, safer data everywhere it travels.

- Prompt & API Guardrails at the Edge

Block risky inputs (PII, secrets) and jailbreak patterns; enforce response schemas and scopes; throttle or block suspicious egress.

Result: prevent the quiet overshares behind many incidents.

- Jurisdiction-Aware Policy Enforcement

Define once (purpose limits, allowed attributes, residency); enforce per region at runtime. Every decision is logged with a policy version and context for audits.

- Lineage & Audit Trails

Trace data from source → transformations → embeddings → model outputs. Answer DSARs and erasure requests fast; shorten investigations from weeks to hours.

- Anomaly Detection for Vectors, Prompts & APIs

Baseline normal behavior; flag exfil patterns, enumeration, and after-hours spikes with step-up controls (mask/deny/throttle).

Result: detect and contain before damage spreads.

- Developer-Friendly Integration

SDKs, gateways, and CI plugins make privacy part of the build: fail risky PRs, suggest tokenized alternatives, and apply guardrails transparently.

Bottom line: With Protecto, you can adopt AI boldly while keeping sensitive data safe and proving compliance in real time—turning privacy into the engine of speed, trust, and resilience.

Conclusion

The AI privacy statistics for 2025 point to a simple truth: privacy is now the foundation of trustworthy AI at scale. Incidents will keep rising where guardrails are weak; trust will keep falling where transparency is thin. The winners are already reframing privacy, not as a brake, but as the braking system that lets them drive faster.

Build privacy into the pipeline (masking, tokenization, redaction). Enforce policy where data flows (prompts, APIs, embeddings). Keep receipts with lineage and audits. Do those three things consistently, and you’ll convert risk into momentum—shipping AI products users welcome, regulators respect, and competitors struggle to match.

FAQs

- What are the key AI privacy statistics for 2025?

Key AI privacy statistics show 40% of organizations report AI-related privacy incidents, 15% of employees paste sensitive info into public LLMs, and 70% of adults don’t trust companies with AI.

- How many companies have experienced AI privacy breaches?

Approximately 40% of organizations report experiencing an AI-related privacy incident, with common patterns including chatbot data leaks and over-permissive APIs.

- What percentage of employees misuse AI tools with sensitive data?

Around 15% of employees have pasted sensitive information like code, PII, or financial data into public LLMs, creating major security risks.

- How much do consumers trust AI with their data?

About 70% of adults say they don’t trust companies to use AI responsibly, with 81% expecting misuse of their personal information.

- What is the projected spending on AI security and privacy in 2025?

Global security and risk management spend is projected around $212B in 2025, with growing investment in AI monitoring, privacy platforms, and governance dashboards.