What happens when the AI feature you shipped last quarter is compliant in one region—but illegal today in another? That’s the new normal. In 2025, the EU AI Act, new U.S. state privacy laws, China’s PIPL, and APAC rules are reshaping how organizations collect, process, store, and share data for AI. Privacy isn’t a back-office task anymore; it’s a front-line guardrail for product, security, and data teams.

AI systems need data to stay accurate and useful, but privacy laws require that personal data be minimized, purpose-bound, explainable, and erasable. Meanwhile, most AI pipelines ingest unstructured inputs (emails, tickets, call transcripts, logs, documents) where sensitive information hides in plain sight. Traditional tools designed for tidy, structured databases don’t catch these risks early enough.

This article examines the challenges of ensuring data privacy in AI systems, the regulatory shifts driving this urgency, and the practical strategies that safeguard innovation. You’ll also see exactly where Protecto slots in to help, from automatic discovery and masking to prompt guardrails and auditable lineage.

The Evolving Regulatory Landscape for AI Data Privacy

Regulators have moved on from “annual checklist” compliance. Today’s standard is continuous assurance: show—at any moment—what data your AI used, where it came from, how it was protected, and why the model acted as it did. Fines and enforcement actions are climbing, and high-risk use cases (healthcare, finance, employment, credit) face the strictest oversight.

Compliance is now a running task, not a quarterly event.

Global Privacy Frameworks You Must Reconcile (2025)

| Framework | What It Emphasizes | Common Friction with AI | What to Do Now |
| --- | --- | --- | --- |
| GDPR (EU) | Consent, purpose limitation, data minimization, right to erasure | Training data embedded in models; complex DSAR/erasure | Minimize at ingestion; log lineage to prove where data flows. |
| EU AI Act | Risk-based obligations, dataset quality, human oversight, and transparency | Auditability at model + data layers | Add explainability and dataset documentation to release criteria. |
| U.S. State Laws (2025 cohort) | Profiling opt-outs, children’s privacy, and sensitive data controls | Jurisdiction-by-jurisdiction differences | Build jurisdiction-aware policies into data and API layers. |
| China’s PIPL | Cross-border transfer limits, localization | Global model training across borders | Keep sensitive data local; tokenize before export. |
| APAC (India DPDP, Singapore, Japan) | Notice/consent, purpose limits, accountability | Fragmented definitions, varying notice rules | Centralize policy and adapt via region-aware enforcement. |

Where this gets easier: Privacy platforms like Protecto let you define policy once (purpose limits, redaction rules, residency) and enforce it across warehouses, pipelines, prompts, and APIs with audit trails you can hand to regulators.

The EU AI Act: Accuracy and Auditability

The Act bans some uses outright (e.g., social scoring) and imposes strong obligations on high-risk systems. You’ll need to show dataset quality, human oversight, and traceability. In practice, that means you can’t separate “model performance” from “data governance.” The data that trained the model—and the decisions the model made—must be explainable and logged.

Practical move: add a “privacy & explainability review” to your go-live checklist. If data lineage and disclosure aren’t ready, the model doesn’t ship.

The Challenges in Ensuring Data Privacy in AI Systems

AI privacy isn’t a copy-paste of legacy compliance. The hardest problems live where AI’s appetite for data collides with rules designed to restrict it.

1) Patchwork Regulation & Jurisdictional Complexity

A single product can be subject to dozens of overlapping rules. One state requires opt-in for profiling; another offers opt-out. PIPL restricts cross-border movement; other regions allow it with safeguards. The result: one data flow, many obligations.

What good looks like

- A central policy layer (purpose tags, allowed uses, residency rules).

- Jurisdiction-aware enforcement: policies adapt per user region, dataset, and endpoint.

- Automated evidence: reports that show which policy version applied to which run.

How Protecto helps: Define policies once and apply them everywhere: tokenize/redact at ingestion, block risky prompts at the LLM gateway, and log which jurisdictional rules were enforced for each dataset and call.
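To make the central policy layer concrete, here is a minimal sketch of jurisdiction-aware resolution. It is not Protecto’s API; the `Policy` class, the region keys (`state-a`, `state-b`, `cn`), and every value in the table are hypothetical placeholders that would need legal review before use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    version: str
    profiling_consent: str      # "opt_in" or "opt_out"
    cross_border_allowed: bool


# Illustrative values only (assumption); real rules need legal review.
STRICT_DEFAULT = Policy("2025.1", "opt_in", False)
POLICIES = {
    "state-a": Policy("2025.1", "opt_in", True),
    "state-b": Policy("2025.1", "opt_out", True),
    "cn": Policy("2025.1", "opt_in", False),
}


def resolve_policy(region: str) -> Policy:
    """Unknown regions fall back to the strictest policy."""
    return POLICIES.get(region, STRICT_DEFAULT)


def may_profile(region: str, consented: bool, opted_out: bool) -> bool:
    """Apply the region's consent model to a profiling request."""
    policy = resolve_policy(region)
    if policy.profiling_consent == "opt_in":
        return consented
    return not opted_out


def evidence(run_id: str, region: str) -> dict:
    """Record which policy version applied to which run."""
    return {"run_id": run_id, "region": region,
            "policy_version": resolve_policy(region).version}
```

Falling back to the strictest policy for unrecognized regions is a design choice: a missing mapping then fails closed instead of silently allowing processing.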

2) Purpose Limitation & Data Minimization

AI invites secondary use: once a model surfaces new patterns after launch, it is tempting to reuse the underlying data for something else. Regulators restrict exactly that. If you collected data for support, you can’t silently repurpose it for marketing or model training.

What good looks like

- Purpose tagging on ingest (“support,” “fraud,” “training”).

- Minimization by default: only the fields the model truly needs.

- Masking/tokenization that keeps analytics intact but protects identifiers.

How Protecto helps: Auto-discover PII/PHI, tag data by purpose, and apply deterministic masking so models keep their signal without exposing raw values.
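One common way to get deterministic masking is a keyed hash (HMAC), sketched below together with field-level minimization. This is an illustration under stated assumptions, not a description of how Protecto implements masking: `SECRET_KEY`, `tokenize`, and `minimize` are hypothetical names, and a real key would come from a secrets manager.

```python
import hashlib
import hmac

# Assumption: demo key only; load from a secrets manager in practice.
SECRET_KEY = b"demo-key-rotate-me"


def tokenize(value: str, field: str) -> str:
    """Same field and value always produce the same token, so joins survive."""
    message = f"{field}:{value}".encode()
    digest = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return f"tok_{field}_{digest[:16]}"


def minimize(record: dict, allowed: set, identifiers: set) -> dict:
    """Keep only allowed fields; replace identifiers with tokens."""
    out = {}
    for key, value in record.items():
        if key not in allowed:
            continue  # minimization by default: drop what the model doesn't need
        out[key] = tokenize(str(value), key) if key in identifiers else value
    return out
```

Because the token depends only on the key, field, and value, two tables masked separately can still be joined on the tokenized column. This sketch is one-way; authorized re-identification would need a separate vault mapping, which is out of scope here.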

3) Right to Erasure & Data Residency

If personal data trains a model, how do you delete it later? Full retrains are costly. Meanwhile, residency rules require data to stay in-region.

What good looks like

- Selective training sets: keep sensitive data tokenized or excluded.

- RAG over governed indexes to reduce embedding personal data into model weights.

- Residency-aware routing so prompts and storage stay local.

How Protecto helps: Redact before embedding, tokenize sensitive identifiers, and maintain lineage so you can prove whether a person’s data ever entered a training set—and where to remove or reindex it.
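The lineage idea can be sketched as a small index that answers “where did this person’s data go?” The `LineageIndex` class, the artifact kinds, and the names used below are hypothetical; a production system would persist this in a durable store.

```python
from collections import defaultdict


class LineageIndex:
    """Maps a subject token to the artifacts its data has entered."""

    def __init__(self) -> None:
        self._artifacts = defaultdict(set)

    def record(self, subject_token: str, kind: str, name: str) -> None:
        # In this sketch, kind is "vector_index" or "training_set".
        self._artifacts[subject_token].add((kind, name))

    def erasure_plan(self, subject_token: str) -> dict:
        """List indexes to rebuild and training sets to review for a subject."""
        plan = {"reindex": [], "review_training_sets": []}
        for kind, name in sorted(self._artifacts.get(subject_token, ())):
            key = "reindex" if kind == "vector_index" else "review_training_sets"
            plan[key].append(name)
        return plan
```

An empty plan is itself useful evidence: it shows the subject’s data never entered a tracked index or training set, provided every write path calls `record`.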

4) Transparency, Explainability & Right to Explanation

“Because the model said so” won’t satisfy auditors or customers. People want to know what influenced a decision and how to contest it.

What good looks like

- Decision logs with input summaries, policy context, and human checkpoints.

- Model cards/data sheets that explain data sources, limits, and risks.

- Tiered disclosures: one version for users, another for auditors.

How Protecto helps: Produce audit-ready trails showing data sources, transformations, masking actions, and policy versions for each decision or model call.
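A decision log entry along these lines might look like the sketch below. The field names and the `decision_record` helper are assumptions for illustration, and the inputs are assumed to be masked already, since a hash of a raw identifier can be guessable.

```python
import hashlib
import json
from datetime import datetime, timezone


def decision_record(model_version, policy_version, inputs, decision, reviewer=None):
    """Build a log entry that stores field names and a hash, not raw values."""
    canonical = json.dumps(inputs, sort_keys=True, default=str).encode()
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "policy_version": policy_version,
        "input_fields": sorted(inputs),          # summary: names only
        "input_hash": hashlib.sha256(canonical).hexdigest(),
        "decision": decision,
        "human_reviewer": reviewer,              # None: no human checkpoint hit
    }
```

Sorting keys before hashing makes the hash independent of field order, so the same inputs always map to the same `input_hash` when an auditor replays a decision.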

5) Algorithmic Bias, Fairness & Equity

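Fairness is now part of compliance. Some jurisdictions require bias audits for automated decisions; New York City’s Local Law 144, for example, mandates them for automated employment decision tools. Hiring, lending, healthcare, and education draw the most scrutiny.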

What good looks like

- Bias monitoring in the pipeline (pre-, in-, and post-deployment).

- Clear governance on which attributes are allowed, proxy risks, and mitigation playbooks.

- Data documentation for auditability.

How Protecto helps: Provide dataset documentation and lineage to support bias audits, plus policy guardrails that prevent disallowed attributes from entering the training or inference path.
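A guardrail on allowed attributes can start as a simple check on feature names before training or inference. The lists below are hypothetical examples, not legal guidance, and name matching alone will not catch every proxy.

```python
# Assumption: illustrative lists; your governance policy defines the real ones.
DISALLOWED = {"race", "religion", "gender"}
KNOWN_PROXIES = {"zip_code": "race", "first_name": "gender"}


def check_features(columns):
    """Return (column, reason) pairs for features that need review."""
    violations = []
    for col in columns:
        name = col.lower()
        if name in DISALLOWED:
            violations.append((col, "disallowed attribute"))
        elif name in KNOWN_PROXIES:
            violations.append((col, f"possible proxy for {KNOWN_PROXIES[name]}"))
    return violations
```

Tying a check like this to the release pipeline means a flagged column blocks the build until someone records a mitigation or removes the feature.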

Operational & Technical Barriers You’ll Meet

Privacy isn’t just a policy—it’s an operational discipline that spans your entire AI lifecycle.

The AI Data Lifecycle (Where Risks Emerge)

- Ingestion: raw logs, transcripts, tickets—PII/PHI everywhere.

- Preprocessing: tagging, cleaning—mask too late and you’ve already leaked.

- Training/Tuning: embeddings and model weights can capture personal info.

- Deployment: prompts, tools, and APIs expose new surfaces.

- Retraining: schema drift reintroduces sensitive fields without notice.

Failure modes to watch

- Unredacted logs entering vector stores.

- Prompt injection revealing internal context.

- API responses oversharing fields after a harmless refactor.

- Shadow AI tools with no retention guarantees.

How Protecto helps: Redact at ingestion, block risky prompts, enforce API schemas, and surface shadow use via anomaly detection and lineage.
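To illustrate “redact at ingestion,” here is a deliberately crude regex sketch that scrubs text before it reaches a vector store. The patterns are assumptions that cover only a few formats and will produce false positives; production discovery typically relies on trained entity recognizers. The list standing in for the vector store is also hypothetical.

```python
import re

# Order matters: the SSN-style pattern runs before the broader phone pattern.
PATTERNS = [
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each matched entity with a typed placeholder."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


def ingest(documents, index: list) -> None:
    """Redact every document before it reaches the (stand-in) vector store."""
    for doc in documents:
        index.append(redact(doc))
```

For example, `redact("Reach jane.doe@example.com or +1 (415) 555-0100 about ticket 42")` returns `"Reach [EMAIL] or [PHONE] about ticket 42"`. Typed placeholders keep enough context for retrieval while removing the raw values.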

Vendor & Third-Party Ecosystem Risk

Your compliance is only as strong as your weakest vendor. LLM APIs, analytics tools, and SaaS connectors can create cross-border leaks or retention surprises.

What good looks like

- Vendor inventory with purpose, data types, residency, and deletion SLAs.

- Contractual guardrails: no-retention modes, approved sub-processors, audit rights.

- Runtime enforcement: deny egress to unapproved endpoints.

How Protecto helps: Egress controls and gateways ensure only approved vendors receive governed, minimized data—backed by logs that show who sent what, where, and why.
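Runtime egress enforcement can be sketched as an allow-list check that runs before any outbound call. The vendor hostname, approved fields, and `check_egress` function are hypothetical; in practice this logic would sit in a gateway or proxy.

```python
from urllib.parse import urlparse

# Assumption: hypothetical vendor inventory keyed by hostname.
APPROVED_VENDORS = {
    "api.llm-vendor.example": {
        "purpose": "support",
        "fields": {"ticket_text", "language"},
    },
}


def check_egress(url: str, payload: dict, purpose: str):
    """Return (allowed, reason) for an outbound request."""
    host = urlparse(url).hostname
    vendor = APPROVED_VENDORS.get(host)
    if vendor is None:
        return False, f"host {host} is not on the allow-list"
    if purpose != vendor["purpose"]:
        return False, f"purpose {purpose} not approved for {host}"
    extra = set(payload) - vendor["fields"]
    if extra:
        return False, f"fields not approved: {sorted(extra)}"
    return True, "ok"
```

Returning a reason alongside the verdict gives you the “who sent what, where, and why” log line for free on every denied request.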

Compliance at Scale (Why Manual Fails)

Manual checklists crack under multimodal inputs and fast release cycles. Schema changes and new agents appear daily.

What good looks like

- Policy-as-code in CI/CD.

- Automated masking/tokenization at ETL and pre-prompt.

- Continuous monitoring and alerting for drift and violations.

How Protecto helps: SDKs, proxies, and CI checks that block risky changes before merge, plus dashboards that track coverage, incidents, and time-to-remediation.
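As an example of policy-as-code in CI, the sketch below fails a build when an API response schema gains a field that was never approved. The schema format (endpoint mapped to a list of field names) and the function names are assumptions; a real check would load both schemas from version-controlled files.

```python
def schema_drift(approved: dict, current: dict) -> list:
    """List fields present in the current schema but missing from the approved one."""
    problems = []
    for endpoint, fields in sorted(current.items()):
        extra = set(fields) - set(approved.get(endpoint, []))
        for field in sorted(extra):
            problems.append(f"{endpoint}: unapproved field '{field}'")
    return problems


def ci_gate(approved: dict, current: dict) -> int:
    """Return a process exit code: 0 passes the build, 1 blocks the merge."""
    problems = schema_drift(approved, current)
    for problem in problems:
        print("POLICY VIOLATION:", problem)
    return 1 if problems else 0
```

A CI job could call `sys.exit(ci_gate(approved, current))` so that a refactor which quietly adds `email` to a response is caught before merge rather than in production.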

Workforce, Culture & Awareness

Most engineers aren’t lawyers; most lawyers don’t ship pipelines. The gap causes avoidable mistakes.

What good looks like

- Role-based training with real prompts and examples.

- “Guardrails first” culture: privacy checks are as routine as unit tests.

- Lightweight runbooks for DSARs, erasure, and breach response.

How Protecto helps: Just-in-time prompts that warn or block when someone pastes sensitive content, with safe alternatives that keep work moving.

Strategies for Navigating the Compliance Minefield

Build a Unified Governance & Privacy Engineering Framework

- Central policy model: purposes, retention, residency, and allowed attributes.

- Enforce early: mask/tokenize/redact at the edge of data entry and pre-prompt.

- Prove it: lineage that traces fields from source to model to output.

Protecto operationalizes this with discovery, masking, policy enforcement at LLM/API gateways, and end-to-end audit trails.
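Retention is the part of the central policy model that is easiest to automate. A minimal sketch, assuming hypothetical purpose tags and retention windows (the durations are placeholders, not recommendations):

```python
from datetime import datetime, timedelta, timezone

# Assumption: illustrative retention windows per purpose tag.
RETENTION = {
    "support": timedelta(days=365),
    "fraud": timedelta(days=730),
}


def is_expired(purpose: str, collected_at: datetime, now: datetime = None) -> bool:
    """True when a record has outlived the retention window for its purpose."""
    now = now or datetime.now(timezone.utc)
    limit = RETENTION.get(purpose)
    if limit is None:
        return True  # unknown or missing purpose tag: treat as not retainable
    return now - collected_at > limit
```

Treating untagged data as expired pushes teams to tag purposes at ingestion, because anything without a declared purpose is scheduled for deletion.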

Risk Assessments & Continuous Monitoring

- AIIAs (AI Impact Assessments) before launch and after major changes.

- Real-time dashboards: violations, drift, incident SLAs, and model/data change logs.

- Anomaly detection to catch exfil patterns, jailbreak prompts, and oversharing APIs.

Protecto flags unusual vector queries, prompts, and API responses, throttles risky behavior, and routes rich alerts to your SIEM/SOAR.
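A behavioral baseline can be as simple as a z-score over recent activity, sketched below. This is a generic statistical check, not Protecto’s detection logic; the threshold of 3 standard deviations and the minimum history of 5 samples are assumptions to tune.

```python
from statistics import mean, pstdev


def is_anomalous(history, current, threshold=3.0, min_samples=5):
    """Flag a value that deviates sharply from a client's own baseline."""
    if len(history) < min_samples:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return current != mu  # flat baseline: any change stands out
    return abs(current - mu) / sigma > threshold
```

With a history of `[10, 12, 11, 9, 10, 11, 10]` vector queries per minute, a reading of 12 stays within the baseline while a reading of 80 is flagged, which is the kind of jump that might indicate bulk extraction.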

Build Trust Through Transparency

- User-facing disclosures in clear language; no legalese.

- Tiered explanations: end users vs. auditors vs. regulators.

- Accessible redress: make appeals and corrections simple.

Protecto captures the evidence—policies applied, data transformed, and decisions logged—so you can disclose with confidence.

Invest in Compliance Automation

Manual privacy reviews don’t scale across products, teams, and regions.

- Policy-as-code for repeatability.

- Automated PII/PHI discovery and continuous redaction.

- No-retention enterprise LLM tenants and pre-prompt filters.

Protecto drops into CI/CD and runtime, making privacy checks automatic, not optional.

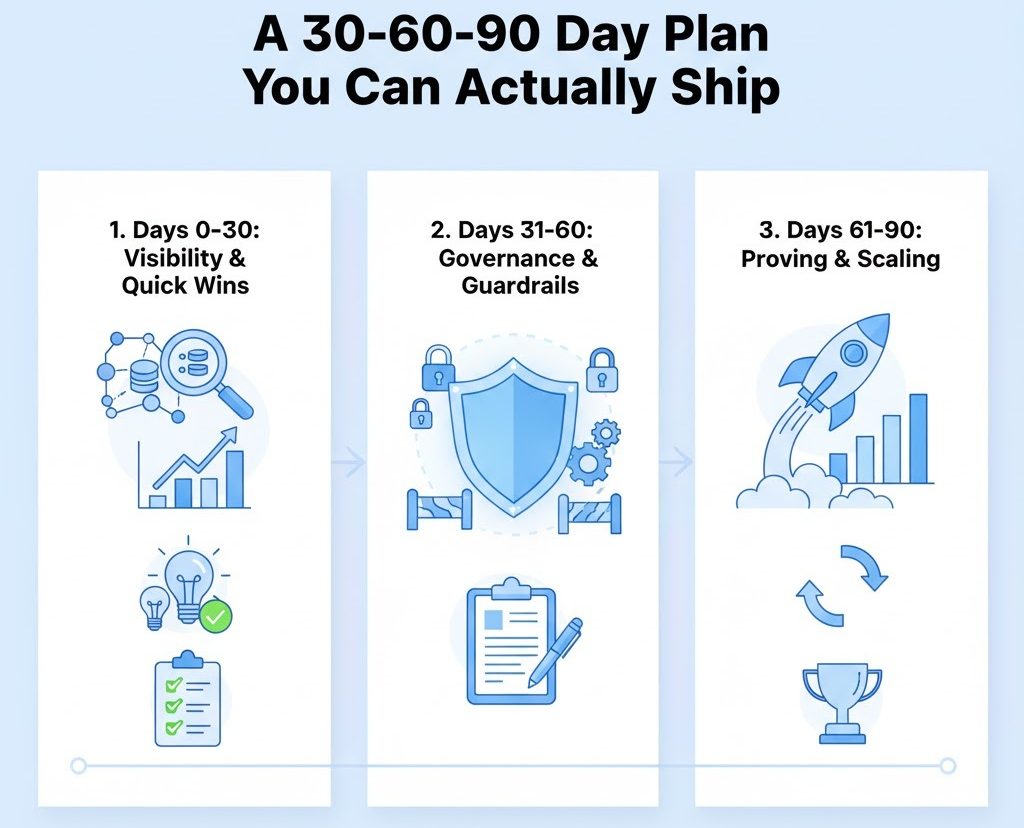

A 30-60-90 Day Plan You Can Actually Ship

Days 0–30: Visibility & Quick Wins

- Inventory data flows and AI endpoints; connect warehouses, lakes, logs.

- Turn on PII/PHI discovery; tag high-risk datasets and owners.

- Enable pre-prompt redaction and prompt-risk scoring for external LLMs.

- Mask/tokenize top 10 sensitive fields at ingestion (emails, phone, MRN, member ID).

- Add CI checks for API schemas and dbt models.

Days 31–60: Governance & Guardrails

- Stand up a cross-functional AI governance council.

- Enforce RBAC/ABAC on feature stores and model endpoints.

- Deploy API gateway policies: scopes, response schemas, egress allow-lists.

- Add lineage across ETL → embeddings → LLM calls; export to SIEM.

- Run your first AIIA and bake “privacy/explainability” into release criteria.

Days 61–90: Proving & Scaling

- Launch a unified dashboard for privacy events, drift, and SLA tracking.

- Simulate a DSAR and a targeted erasure; measure MTTR.

- Contract hardening: no-retention modes, deletion SLAs, sub-processor limits.

- Train teams with role-specific labs (engineers, analysts, PMs).

- Expand protections to multimodal inputs (audio, images).

At each step, Protecto accelerates adoption with SDKs, proxies, and prebuilt policies—so teams keep shipping while privacy becomes part of the build.

Control-to-Outcome Map (with Tooling Examples)

| Control | What It Prevents | How to Implement | Where Protecto Helps |
| --- | --- | --- | --- |
| Masking/Tokenization | Raw PII/PHI leaking into analytics, features, prompts | Deterministic tokens at ETL; preserve joins | Automated masking at ingestion; vaulted re-ID for authorized roles |
| Pre-Prompt Redaction | Employees pasting secrets; prompt injections exfiltrating data | LLM gateway that scans inputs/outputs | Entity-level redaction; jailbreak/risk scoring; safe refusals |
| Jurisdiction-Aware Policies | Illegal processing across borders | Policy engine tied to user/data region | Residency routing; policy versioning; audit exports |
| API Schema/Scope Enforcement | Oversharing fields and mass exfiltration | Response schema validation; rate limits | API gateway with allow-lists; anomaly detection; egress control |
| Lineage & Audit Trails | Inability to prove compliance | End-to-end trace of data → model → decision | Immutable logs; DSAR/erasure evidence; SIEM integration |
| Continuous Monitoring | Quiet, slow-burn leaks | Behavioral baselines; alerts; runbooks | Vector/prompt/API anomaly detection; auto-throttle |

The Road Ahead: Future-Proofing Privacy Compliance

Expect stricter enforcement around biometrics, children’s data, and high-risk decisions. Harmonization efforts will help, but fragmentation will persist, especially across U.S. states and APAC. The safest path is to design for coherence—one policy model, many regional adapters—while assuming rules will keep changing.

The organizations that win won’t just keep up with the law—they’ll compete on trust. Transparent consent, human-readable decisions, and clear redress build the confidence customers need to say yes to AI.

How Protecto Solves These Challenges

Protecto is a privacy control plane for AI. It prevents leaks before they happen, enforces policy where data actually flows, and proves compliance with audit-ready evidence.

- Regulatory Patchwork & Jurisdictional Rules

Define policies once—purpose tags, allowed attributes, residency—and let Protecto enforce them per region at runtime. Every decision is logged with the policy version and jurisdiction context.

- Purpose Limitation & Data Minimization

Auto-discover PII/PHI, tag datasets by purpose, and apply deterministic masking/tokenization that keeps analytics and model performance intact while removing raw identifiers. If a request violates declared purpose, Protecto blocks or routes to a safe path.

- Right to Erasure & Data Residency

Prevent personal data from entering model weights by redacting before embedding and favoring RAG with governed indexes. Lineage shows if a subject’s data touched training; residency-aware routing keeps prompts and storage in-region.

- Transparency, Explainability & Auditability

Generate end-to-end trails: source datasets, transformations, policies applied, and outputs produced. Produce user-friendly summaries and auditor-grade evidence without manual stitching.

- Bias & Fairness Support

Provide dataset documentation and lineage for bias audits, prevent disallowed or proxy attributes from entering pipelines, and tie policy checks to releases so fairness gates aren’t bypassed.

- Vendor & Third-Party Risk

Control egress with allow-lists, schema enforcement, and no-retention modes. See which vendor received which fields, when, and under which policy. Shut down shadow integrations with anomaly detection.

- Compliance at Scale (Automation)

Use SDKs, gateways, and CI plugins to embed privacy into the developer workflow: fail risky PRs, redact prompts automatically, and monitor vector/API behavior for anomalies—without blocking velocity.

- Workforce Enablement

Just-in-time guardrails warn or block when someone pastes sensitive content. Safe-completion messages suggest approved tools or redacted versions so work continues without risk.

With Protecto, you turn privacy from a doc set into a living system—one that discovers sensitive data, prevents leaks, adapts to jurisdictional rules, and proves compliance when it matters most.

AI data privacy compliance is no longer a speed bump; it’s the steering wheel. Treat privacy as an engineering discipline: minimize and mask sensitive data at ingestion, enforce policy at the edges (prompts, APIs), and keep receipts with lineage and audits. When you automate these steps, compliance stops slowing you down and starts clearing the road. Protecto gives you the privacy control plane to discover sensitive data, redact what shouldn’t travel, enforce policies where it matters, and prove compliance on demand.

FAQs

- What is AI data privacy compliance?

AI data privacy compliance involves ensuring AI systems meet regulatory requirements like GDPR, EU AI Act, and other privacy laws for data collection, processing, and storage.

- What are the main challenges in AI privacy compliance?

Key challenges include regulatory patchwork across jurisdictions, purpose limitation requirements, data minimization needs, right to erasure obligations, and transparency demands.

- How does GDPR apply to AI systems?

GDPR requires AI systems to ensure consent, purpose limitation, data minimization, and right to erasure. Training data embedded in models creates complex compliance requirements.

- What is the EU AI Act, and how does it affect compliance?

The EU AI Act imposes risk-based obligations, requires dataset quality documentation, human oversight, and transparency for high-risk AI systems with strict auditability requirements.

- How can companies automate AI privacy compliance?

Companies can use policy-as-code, automated PII/PHI discovery, pre-prompt redaction, continuous monitoring, and privacy platforms like Protecto for compliance automation.