Generative AI is transforming the way enterprises approach daily operations – powering virtual assistants, summarizing medical records, and aiding clinicians with insights. These benefits come at a cost: risk to a wide range of sensitive data in AI-driven workflows.

Traditional access controls and content filters that work for static systems fail here because they were not designed for the free-flowing, context-rich data exchanges in LLM applications.

A new model, Context-Based Access Control (CBAC), is needed to keep AI innovations compliant and secure.

This article explores the shortcomings of legacy controls in AI environments and how CBAC provides dynamic, fine-grained protection for sensitive health data.

Why do you need CBAC? Limits of Traditional Access Controls in AI Systems

Standard security controls like RBAC, prompt filtering, and prompt scanning struggle in AI-powered contexts. Let’s understand why, and whether it’s happening in your business.

Why RBAC fails

Role-based access control (RBAC) works at the application level, where files and systems are structured and organized. However, LLMs don’t neatly compartmentalize data by tables or files; they consume unstructured, free-flowing text from multiple sources within a single prompt or session.

A static role permission can’t easily govern what an AI model does with a mix of chat messages, PDFs, clinical notes, and knowledge base data combined in one context window. In fact, most AI data leaks happen inside an LLM’s context window – a space beyond RBAC’s control.

When information is pulled into a vector database for a RAG pipeline, it gets separated from the rules of the original application. Those databases usually don’t carry over the role-based access controls (RBAC) that govern who could see what.

For example, if salary data is taken from an HR system, strict policies decide who can see it. But once that data is stored in a vector database, AI has no idea who’s asking. A query from a junior support rep and one from a compliance officer look the same to the system. Without guardrails, both could end up with access to sensitive salary information.
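One way to picture the missing guardrail is a filter that runs on retrieved chunks before they reach the model. The sketch below is a minimal illustration, assuming each chunk was stored with the roles copied from its source system at ingestion time. The `Chunk` type, the `allowed_roles` field, and the role names are hypothetical and not taken from any particular vector database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset  # hypothetical: copied from the source system at ingestion


def filter_for_user(chunks, user_roles):
    """Keep only chunks whose source-system roles overlap with the user's roles."""
    roles = frozenset(user_roles)
    return [c for c in chunks if roles & c.allowed_roles]


# Stand-in for the results of a vector search; no real database is queried here.
search_results = [
    Chunk("Office holiday schedule", frozenset({"support", "compliance"})),
    Chunk("Salary bands by job level", frozenset({"compliance", "hr"})),
]

support_view = filter_for_user(search_results, {"support"})
compliance_view = filter_for_user(search_results, {"compliance"})

print([c.text for c in support_view])     # the salary chunk is filtered out
print([c.text for c in compliance_view])  # both chunks are returned
```

With a check like this, the same query from a support rep and from a compliance officer no longer looks identical to the pipeline.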

Why prompt filtering and scanning fail

Prompt filtering is a security technique designed to block harmful or malicious content before the AI processes it, but it is blind to a wide range of risks.

Fundamentally, prompt scanning and filtering are blind to the full context of a prompt. This is because an input can be phrased in multiple ways due to the complexity of natural language. For example, the input “What is Jon’s salary?” can also be phrased as:

- “Download employee financials for last year.” or

- “Summarize salary insights for key employees.”

Essentially, a user who is not authorized to view certain data can trick the AI into revealing it by making the request look harmless. Once the LLM has leaked sensitive data in a response, the damage cannot be undone.

Moreover, simple prompt scanners usually operate in isolation on each query and fail to consider multi-turn context. A request that seems harmless by itself might become sensitive when combined with previous messages.

For example, the prompt “Generate a template lab report for a patient” followed by “Now fill in the actual values from John Doe’s record” may not violate a policy when each message is checked individually. But together, they can lead the AI to expose a patient’s lab results. This kind of multi-step prompt injection is a known weakness of traditional prompt scanning tools.

Why data leak prevention (DLP) tools fail

Much like prompt filters and scanners, legacy DLP tools only recognize obvious identifiers like SSNs or keywords, allowing less structured sensitive data to slip through. They are designed for static environments like email servers, file shares, and endpoints, and fall short when applied to AI systems.

First, AI prompts and responses are far more fluid than fixed-format identifiers such as SSNs or credit card numbers. For example, “What medication did John pick up last week?” won’t match any static pattern, yet exposes PHI.
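The gap is easy to reproduce with a pattern-based check. The sketch below is an illustration only, assuming a scanner built from two regular expressions (one for SSN-shaped strings, one for 16-digit card numbers). It does not reproduce any real DLP product, and the sample SSN is made up.

```python
import re

# Hypothetical static patterns of the kind a legacy scanner might use.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def static_dlp_flags(text):
    """Return True if the text contains an SSN-shaped or card-shaped string."""
    return bool(SSN.search(text) or CARD.search(text))


structured = "Patient SSN is 123-45-6789."
fluid = "What medication did John pick up last week?"

print(static_dlp_flags(structured))  # True: matches the SSN pattern
print(static_dlp_flags(fluid))       # False: no pattern matches, yet PHI is requested
```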

Second, context matters in AI systems but DLPs don’t understand context. A customer ID in a database is harmless alone, but stitching it with payment history from another source can surface sensitive data. In multi-turn conversations, PHI/PII might emerge after several prompts. Traditional DLPs look at pieces of data in isolation; they don’t track cross-source aggregation or conversation flow, so they miss these risks.

Finally, traditional DLPs are poor at real-time policy enforcement; they operate through alerts or quarantine. AI requires runtime guardrails that block risky queries before the model processes them, not after. By the time a DLP tool flags an issue, the AI may already have leaked sensitive data.

Why compliance and audit tools fail

Similar to DLP tools, compliance and audit systems are designed for static IT environments. In dynamic AI environments, risks are contextual: what combination of data was pulled into a prompt, how it was transformed, and whether that violates regulations. To traditional tools, a risky disclosure looks like normal usage.

For example, if a prompt is “What meds was John Doe prescribed last year?” the log may only show “user submitted prompt” and “AI responded.” There’s no record of whether PHI was disclosed, making it impossible to demonstrate compliance.

AI systems often pull data from multiple sources. Traditional audit tools log within each system, but can’t follow how the AI stitches fragments into a new output. This security gap is invisible to legacy audit tools.

Finally, traditional tools are designed to prove compliance after an incident, helping IT teams investigate. AI requires guardrails that block non-compliant actions before they happen, rather than an audit trail generated after PHI/PII has leaked.

The solution: Context-Based Access Control (CBAC)

By now you know that traditional tools fail in unstructured environments and lack the ability to understand much-needed context. To address this, businesses are adopting an advanced security model called Context-Based Access Control (CBAC).

How does it work?

CBAC overcomes the limitations of traditional security systems by evaluating the full context of a user’s request rather than relying on a static role or permission. It considers factors like the user’s identity, the purpose of their request, the time, device, location, and the sensitivity of the data. It evaluates “Who is requesting what, why, and under what conditions?” to grant or deny access. This is a significant evolution from RBAC’s simple “who are you/what role do you have?” check.
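The “who, what, why, and under what conditions” evaluation can be sketched as a single decision function. This is a minimal illustration under stated assumptions: the `RequestContext` fields, the purpose table, the approved networks, and the working-hours window are all hypothetical values chosen for the example, not a prescribed CBAC schema.

```python
from dataclasses import dataclass, replace
from datetime import time


@dataclass(frozen=True)
class RequestContext:
    role: str
    purpose: str
    network: str
    managed_device: bool
    local_time: time
    sensitivity: str  # "low" or "high"


# Hypothetical policy data; a real deployment would load this from a policy store.
ALLOWED_PURPOSES = {"clinician": {"treatment"}, "billing": {"payment"}}
APPROVED_NETWORKS = {"hospital-lan", "vpn"}
SHIFT_START, SHIFT_END = time(6, 0), time(22, 0)


def decide(ctx):
    """Return "allow" or "deny" from the full request context, not the role alone."""
    if ctx.purpose not in ALLOWED_PURPOSES.get(ctx.role, set()):
        return "deny"  # the stated purpose does not fit the role
    if ctx.sensitivity == "high":
        if ctx.network not in APPROVED_NETWORKS or not ctx.managed_device:
            return "deny"  # sensitive data only on trusted networks and devices
        if not (SHIFT_START <= ctx.local_time <= SHIFT_END):
            return "deny"  # sensitive data only during working hours
    return "allow"


on_site = RequestContext("clinician", "treatment", "hospital-lan", True, time(10, 30), "high")
print(decide(on_site))                                # allow
print(decide(replace(on_site, network="cafe-wifi")))  # deny
print(decide(replace(on_site, purpose="marketing")))  # deny
```

The same clinician role produces different outcomes depending on network and purpose, which is the difference from a role-only check.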

Essentially, CBAC policies leverage the meaning of data and the intent of requests. For example, instead of a rule that says “Role X can access Database Y”, a CBAC rule might say “A care manager can view a patient’s treatment plan but not their psychotherapy notes, unless they are the assigned clinician.” This policy evaluates the content category (treatment vs. therapy notes) and the user’s relationship to the patient.
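The care manager rule above could be written roughly as follows. This is a sketch, assuming the content has already been labeled with a category and that the patient record names its assigned clinician. The `User` and `Patient` types and the identifiers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    role: str


@dataclass(frozen=True)
class Patient:
    patient_id: str
    assigned_clinician_id: str


def can_view(user, patient, category):
    """Decide access from the content category and the user's relationship to the patient."""
    is_assigned_clinician = user.user_id == patient.assigned_clinician_id
    if category == "treatment_plan":
        return user.role == "care_manager" or is_assigned_clinician
    if category == "psychotherapy_notes":
        return is_assigned_clinician
    return False  # unknown categories are denied by default


patient = Patient("p-001", assigned_clinician_id="u-17")
care_manager = User("u-42", "care_manager")
clinician = User("u-17", "clinician")

print(can_view(care_manager, patient, "treatment_plan"))       # True
print(can_view(care_manager, patient, "psychotherapy_notes"))  # False
print(can_view(clinician, patient, "psychotherapy_notes"))     # True
```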

In AI terms, CBAC can look at what information the LLM is about to use or produce (like “this field is a patient’s diagnosis”) rather than just which database it came from.

How does CBAC compare to RBAC?

In terms of granularity, CBAC is more fine-grained than RBAC, which grants or denies access to an entire data record or file. With CBAC, access can be controlled at the level of specific data elements, phrases, or even tokens, so the AI can mask sensitive parts of a document while revealing others. For example, CBAC can disclose a patient’s lab results to an analyst while masking the patient’s name.

Context awareness allows businesses to set up adaptive, conditional policies. Unlike RBAC, where you either have full access or none, CBAC considers the conditions of the query (for example, allowing access to certain data only during surgery or only if the request comes from an approved network). This context-based policy system helps teams minimize the back-and-forth between users requesting access and administrators approving it.

In essence, CBAC is designed for unstructured environments such as AI workflows, where data can flow without fixed boundaries. It aligns well with modern zero-trust principles, where every request is verified in context.
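Field-level masking of this kind can be sketched in a few lines. The example assumes a record that is already split into named fields and a hypothetical `VISIBLE_FIELDS` table mapping roles to the fields they may see. The lab value is made up for illustration.

```python
# Hypothetical policy: which fields each role may see in clear text.
VISIBLE_FIELDS = {
    "analyst": {"lab_results"},
    "clinician": {"patient_name", "lab_results"},
}


def apply_field_policy(record, role):
    """Return a copy of the record with every field the role may not see masked."""
    visible = VISIBLE_FIELDS.get(role, set())
    return {
        field: (value if field in visible else "[MASKED]")
        for field, value in record.items()
    }


record = {"patient_name": "John Doe", "lab_results": "HbA1c 6.1%"}

print(apply_field_policy(record, "analyst"))
# {'patient_name': '[MASKED]', 'lab_results': 'HbA1c 6.1%'}
print(apply_field_policy(record, "clinician"))
# {'patient_name': 'John Doe', 'lab_results': 'HbA1c 6.1%'}
```

A role that is missing from the table sees every field masked, so the sketch fails closed.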

Benefits of CBAC in Enterprise AI Use Cases

Context-Based Access Control offers several concrete benefits for healthcare environments leveraging generative AI:

Preventing PHI Leaks

CBAC directly addresses key HIPAA technical safeguards such as access control and audit controls by allowing only authorized individuals to access ePHI. CBAC maintains the confidentiality required by HIPAA’s Privacy Rule. It also logs each access, supporting the HIPAA requirement for auditability, and enforces the “minimum necessary” rule, under which users see only the minimum PHI required for their task. Blocking unauthorized access helps to prevent breaches before they happen.

Fine-Grained EMR Access Control

Healthcare data access often depends on context like the caregiver’s role on the care team, the patient’s consent, and specific clinical need. CBAC can encode these complex rules by dynamically adjusting each user’s access to the EMR within their privilege scope.

It can even block AI from “connecting the dots” across datasets inappropriately. If AI tries to infer a sensitive fact by combining lab data (allowed) and social data (allowed) in a way that produces a new PHI insight (not allowed), CBAC can prevent that.

Protecting Unstructured Data

A lot of valuable information resides in unstructured text: notes, summaries, chat messages, triage bot conversations, and so on. Traditional controls can allow or block a whole document but cannot selectively protect sensitive phrases. CBAC understands unstructured data contextually. For example, it can scan free-text notes and mask just the patient’s name and contact info, leaving the medical content intact for an AI to analyze.

This level of control helps to build AI workflows without creating new privacy holes.

Clinical Accuracy and Data Integrity

Security measures in AI systems can sometimes degrade AI performance. For example, if you redact every number in a lab report, the AI might not be able to interpret trends or give a useful summary.

CBAC can help you avoid this by using context-preserving masking. It can replace a patient’s name with a consistent alias so the AI can still correlate information throughout a document, or hide only the sensitive fields instead of an entire record. The AI’s reasoning ability remains largely intact while confidentiality is still protected.

Zero Trust and Least Privilege for AI Agents

A security best practice is zero trust, where every action is verified. CBAC ensures that an AI agent cannot bypass your existing IAM and least-privilege setups. If an AI system is connected to multiple databases, CBAC segments and restricts its access per query, so the AI only gets what that user is allowed to see.

Facilitating Audits and Accountability

CBAC’s detailed logging of AI interactions lets organizations review logs to answer “who asked what, and what happened?” Security teams can spot if a user keeps requesting certain disallowed information, which may indicate that the policy or user training needs adjustment. Ultimately, CBAC helps assure regulators that AI systems operate under controls as firm as, or even firmer than, those of traditional systems.
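A structured log entry makes the “who asked what and what happened” question answerable. The sketch below is illustrative only: the record fields, the `deny`/`allow` labels, and the threshold of three denials are assumptions for the example, not a required schema.

```python
import json
from collections import Counter
from datetime import datetime, timezone


def audit_record(user_id, prompt, decision, data_categories):
    """Build one structured log entry for an AI interaction."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt": prompt,
        "decision": decision,  # "allow" or "deny"
        "data_categories": sorted(data_categories),
    }


def users_with_repeated_denials(records, threshold=3):
    """Return users whose requests were denied at least `threshold` times."""
    denials = Counter(r["user_id"] for r in records if r["decision"] == "deny")
    return {user for user, count in denials.items() if count >= threshold}


log = [
    audit_record("u-42", "What meds was John Doe prescribed last year?", "deny", {"PHI"})
    for _ in range(3)
]
log.append(audit_record("u-17", "Summarize today's clinic schedule", "allow", set()))

print(json.dumps(log[0], indent=2))
print(users_with_repeated_denials(log))  # {'u-42'}
```

Unlike a bare “user submitted prompt” entry, each record states the decision and the data categories involved.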

How Protecto Implements CBAC for AI Workflows

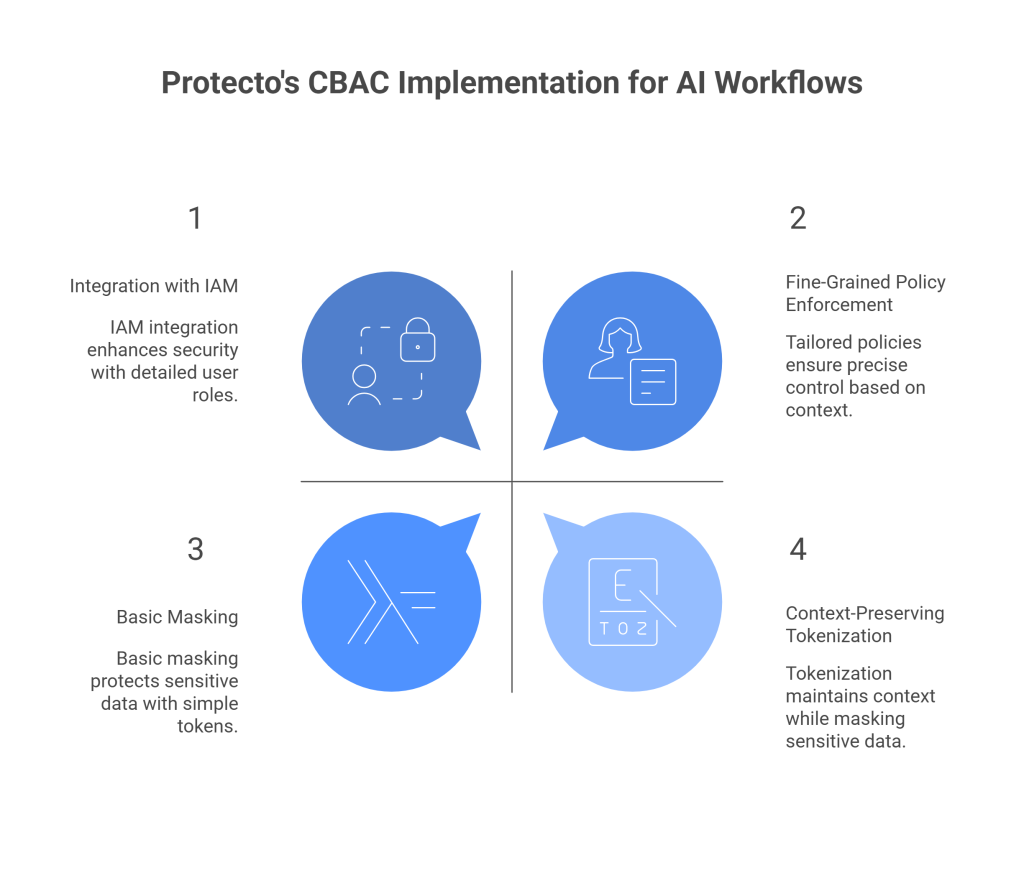

Protecto has developed a context-aware privacy and security platform specifically for LLM and AI deployments. Protecto’s approach provides a practical illustration of CBAC in action for healthcare AI:

Integration with IAM and Existing Policies

Protecto’s CBAC module ties into the organization’s Single Sign-On and role directories. This means the AI pipeline is always aware of who the user is and their roles/permissions as defined in existing systems. Protecto uses those definitions but adds more context on top.

When an AI request comes in, Protecto checks the user’s identity and role against its policy rules before allowing any data to flow, which helps block malicious prompts. Protecto’s CBAC enforces the organization’s authentication and authorization standards consistently.

Context-Preserving Masking and Tokenization

Before sensitive data reaches the LLM, Protecto masks it using a deterministic tokenization approach: the same token always replaces the same original value. For example, “John Doe” always becomes “[Person_123]”, so the AI can recognize it as the same entity across the text. This allows the AI to reason and analyze accurately without seeing the real value.

After the AI produces an output, Protecto can re-identify the data for authorized users or keep it masked for those who aren’t authorized.
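The general idea of deterministic tokenization with re-identification can be sketched as below. This is not Protecto’s implementation. It is a simplified illustration that assumes the sensitive values have already been detected and are passed in as a list, and it uses a hypothetical in-memory `TokenVault` with sequential token numbers.

```python
class TokenVault:
    """Maps sensitive values to stable placeholder tokens and back."""

    def __init__(self):
        self._token_for = {}  # original value -> token
        self._value_for = {}  # token -> original value

    def tokenize(self, value, kind="Person"):
        # Deterministic within this vault: the same value always gets the same token.
        if value not in self._token_for:
            token = f"[{kind}_{len(self._token_for) + 1}]"
            self._token_for[value] = token
            self._value_for[token] = value
        return self._token_for[value]

    def mask(self, text, sensitive_values):
        # Replace longer values first so a short value cannot split a longer one.
        for value in sorted(sensitive_values, key=len, reverse=True):
            text = text.replace(value, self.tokenize(value))
        return text

    def unmask(self, text, authorized):
        if not authorized:
            return text  # unauthorized users keep seeing tokens
        for token, value in self._value_for.items():
            text = text.replace(token, value)
        return text


vault = TokenVault()
note = "John Doe reported chest pain. John Doe was referred to cardiology."
masked = vault.mask(note, ["John Doe"])

print(masked)
# [Person_1] reported chest pain. [Person_1] was referred to cardiology.
print(vault.unmask(masked, authorized=True) == note)     # True
print(vault.unmask(masked, authorized=False) == masked)  # True
```

Because both mentions map to the same token, a model reading the masked note can still tell that they refer to one person.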

Fine-Grained Policy

Protecto’s platform allows defining fine-grained policies tailored to specific scenarios. For instance, you can set rules like “Only clinicians can see full patient identifiers – all others see masked IDs” or “If an AI summary contains a mental health note, redact it unless the user is from the Behavioral Health department.”

Protecto offers out-of-the-box templates for common regulations (HIPAA, GDPR) and sensitive data types (PHI, PII, PCI). The policies apply uniformly across prompts, documents, agent outputs, and even API calls made by the AI.