Compare DPDP and GDPR compliance requirements. Learn how they differ, why they conflict, and what Indian enterprises should prioritize – especially with AI deployment.

The Regulatory Sprawl is Real

Imagine you’re the Chief Information Security Officer of a mid-sized Indian fintech company. You have customers across India, some operations in Europe, and you’re about to launch an AI-powered underwriting system. Someone asks you, “Are we DPDP compliant?”

You pause, because the honest answer is: “Compliant with what, exactly? DPDP, yes. But also GDPR if Europeans are in our dataset. And the RBI guidelines if we’re handling financial data. And potentially HIPAA if we partner with US healthcare providers.”

Welcome to the modern enterprise compliance paradox.

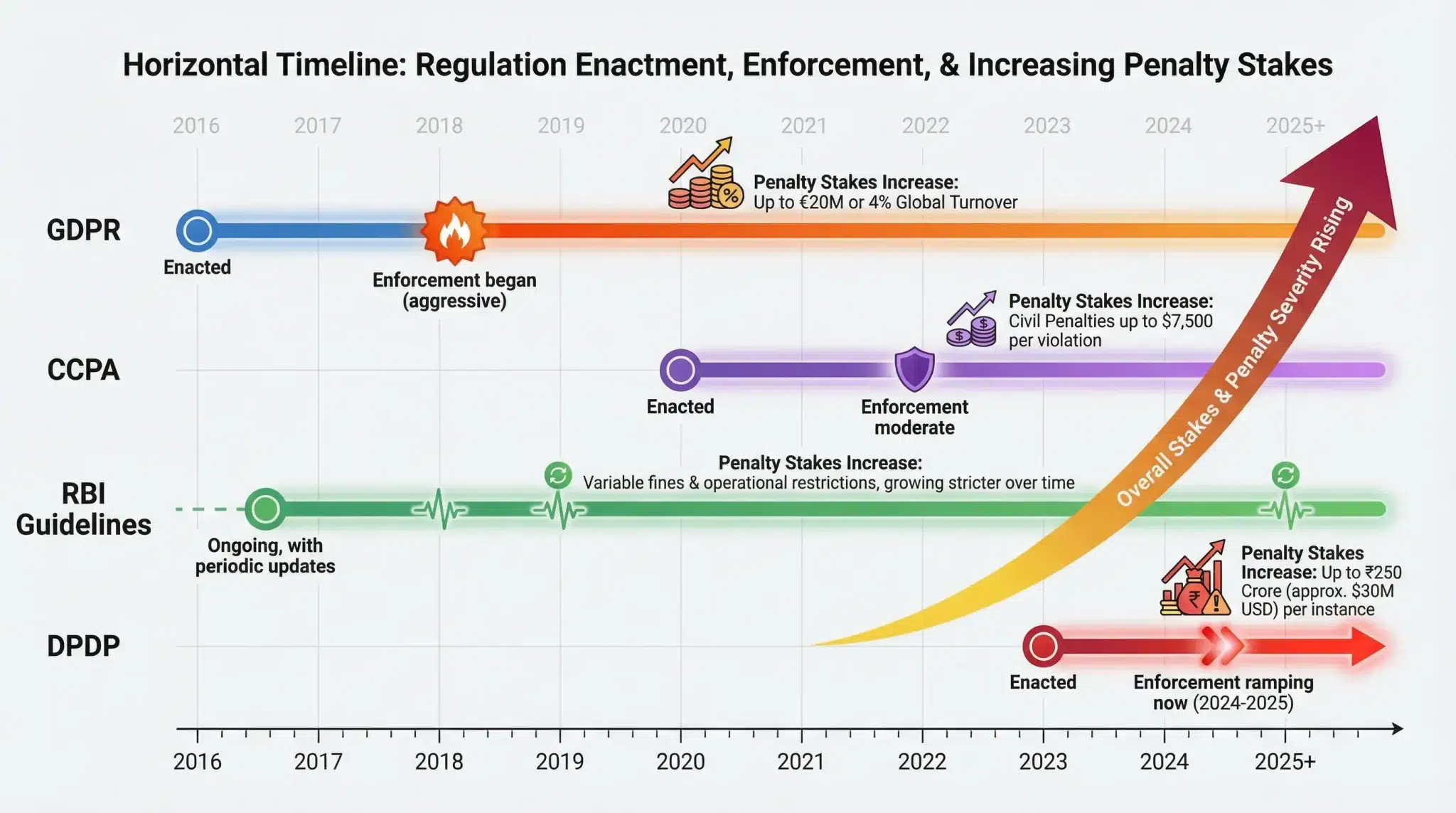

Most Indian enterprises aren’t ignoring compliance; they’re drowning in it. The landscape has evolved rapidly. Ten years ago, India had little data protection law beyond the IT Act and its 2011 security rules. Today there’s DPDP (the Digital Personal Data Protection Act, 2023), and if your company has any international footprint, you’re also managing GDPR, possibly CCPA, and industry-specific regulations layered on top.

The problem isn’t that each regulation is unreasonable. The problem is that they’re not aligned. They have different definitions of “personal data.” Different retention periods. Different consent models. Different penalties. And most troublingly, they were built in different eras, under different regulatory philosophies.

GDPR was adopted in 2016 (and enforced from May 2018) to protect Europeans from data exploitation. DPDP, enacted in 2023, was built to give Indians control over their personal data while enabling faster innovation. CCPA prioritizes transparency and consumer rights. Each one makes sense in its context. Together, they create a compliance puzzle.

Let’s Break Down the Key Differences, and Why They Matter

I’m focusing on DPDP, GDPR, and India-specific regulations because they’re most relevant for Indian enterprises. I’ll touch on others where relevant.

1. Scope & Definition of “Personal Data”

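GDPR defines personal data very broadly: “any information relating to an identified or identifiable natural person.” In practice, almost anything can be personal data if there’s a reasonable way to link it to someone. On top of that, GDPR sets apart “special categories” (Article 9), including health, biometric, and genetic data, which need an additional legal condition before you can process them.

DPDP’s definition is similarly broad: any data about an individual who is identifiable by or in relation to that data. Its scope is narrower in a different way, because it covers only digital personal data (including data collected offline and digitized later). Unlike the 2019 draft bill, the final Act does not create a separate tier of “sensitive personal data.” That concept comes from the IT Act’s 2011 SPDI Rules, which single out categories such as financial information, health data, and biometric data for security safeguards. Under DPDP itself, the main category with heightened protection is children’s data.

What this means in practice: the same health or biometric record triggers GDPR’s special-category regime, while under DPDP it is ordinary personal data (possibly with SPDI Rules security obligations on top). The compliance burden is different.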

2. Consent & Purpose Limitation

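Both GDPR and DPDP lean heavily on consent, though neither relies on it alone: GDPR offers six lawful bases (consent, contract, legal obligation, vital interests, public task, and legitimate interests), and DPDP pairs consent with a short list of “certain legitimate uses.” They also treat purpose limitation differently.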

Under GDPR, consent must be given for a specific purpose. If you collect customer data for transaction processing, you generally can’t reuse it for marketing without new consent or another valid legal basis. The assumption is that people need to know exactly what they’re consenting to.

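DPDP is similar, but it allows some processing without consent. Consent must be tied to the purpose stated in your notice, but the Act also lists legitimate uses that don’t need it, such as data a person voluntarily provides for a specified purpose without objecting to its use, or certain employment-related processing. This is a nod to practical business needs: your compliance team doesn’t need fresh consent for every such case.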

Why this matters: if you’re an Indian fintech processing customer data, DPDP gives you slightly more breathing room than GDPR would. But if some of those customers are in the EU, GDPR applies to their data, and you’re back to stricter purpose limitation.
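One way to enforce purpose limitation in code is a consent ledger checked before every new use of data. The sketch below assumes a hypothetical `ConsentRecord` that stores which purposes each customer agreed to; it checks consent only and deliberately ignores the other legal bases (GDPR’s other lawful bases, DPDP’s legitimate uses), which a real system would also need to model.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical record of the purposes one customer has consented to."""
    customer_id: str
    purposes: set[str] = field(default_factory=set)
    withdrawn: bool = False


def may_process(record: ConsentRecord, purpose: str) -> bool:
    """True only if consent is still active and explicitly covers this purpose."""
    if record.withdrawn:
        return False  # withdrawal stops consent-based processing for every purpose
    return purpose in record.purposes


consent = ConsentRecord("cust-001", purposes={"transaction_processing"})
print(may_process(consent, "transaction_processing"))  # True
print(may_process(consent, "marketing"))               # False: not covered by recorded consent
```

The check is intentionally strict: a purpose is allowed only if it was recorded, so any “related” reuse has to be justified and added explicitly rather than inferred.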

3. Data Retention & Storage Limitation

GDPR requires that personal data be kept “no longer than necessary” for the stated purpose. Many organizations settle on a few years for common use cases, but GDPR doesn’t specify exact periods, and each regulator interprets the principle differently.

DPDP’s core rule is similar: personal data must be erased once consent is withdrawn or once it is reasonable to assume the specified purpose is no longer being served. Where DPDP is more prescriptive is that it lets the Central Government prescribe time periods by rule; if a person hasn’t engaged with you within that period, the purpose is deemed to have ended. Those rules are still being phased in.

In reality, both frameworks want you to delete data when it’s no longer needed. But GDPR enforcement is more mature and regulators have clearer expectations, while DPDP is still being interpreted.
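Whichever framework applies, the operational core is a scheduled retention sweep. Here is a minimal sketch, assuming your organization documents a retention period per purpose. The periods in `RETENTION_DAYS` are placeholders for your own policy, not figures from GDPR or DPDP, and records with no documented purpose are flagged rather than kept silently.

```python
from datetime import date, timedelta

# Placeholder retention periods per purpose, in days. Replace with your own
# documented policy; neither GDPR nor DPDP fixes these numbers.
RETENTION_DAYS = {
    "transaction_processing": 5 * 365,
    "marketing": 2 * 365,
}


def overdue_for_deletion(records, today: date) -> list[str]:
    """Return IDs of records held longer than their purpose's retention period."""
    overdue = []
    for record_id, purpose, collected_on in records:
        limit = RETENTION_DAYS.get(purpose)
        if limit is None:
            overdue.append(record_id)  # no documented purpose: flag it for review
        elif today - collected_on > timedelta(days=limit):
            overdue.append(record_id)
    return overdue


records = [
    ("r1", "marketing", date(2021, 1, 10)),
    ("r2", "transaction_processing", date(2021, 1, 10)),
    ("r3", "unknown", date(2024, 6, 1)),
]
print(overdue_for_deletion(records, today=date(2025, 1, 1)))  # ['r1', 'r3']
```

Running a sweep like this on a schedule, and logging what it removed, also gives you evidence to show a regulator.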

4. Data Subject Rights

Both give individuals the right to access their data, correct it, and delete it (the “right to be forgotten”).

GDPR adds complexity here. You have to respond to requests, including deletion requests, within one month (extendable by two further months for complex cases). When you erase data, you must also notify the recipients you’ve shared it with, unless that proves impossible or involves disproportionate effort.

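DPDP is simpler on paper. Response timelines are left to rules rather than fixed in the Act, and while you must make your own data processors erase the data, there’s no GDPR-style duty to notify every recipient. DPDP also gives individuals a right of grievance redressal: they raise a complaint with you first and can then escalate to the Data Protection Board if they feel their rights were violated.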

In practice, GDPR puts the burden on you to track downstream data transfers and notify recipients, while DPDP channels disputes through a grievance process that starts with you and can escalate to the regulator. Either way, this shapes your operational complexity.
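On the GDPR side, Article 12(3) expresses the deadline as one month from receipt, extendable by two further months, rather than as a fixed day count, so a request tracker should do calendar-month arithmetic. A minimal sketch follows, assuming that a start date with no counterpart in a shorter month rolls back to that month’s last day (a cautious reading). DPDP deadlines aren’t modeled here, and the function names are hypothetical.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of a shorter month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def gdpr_response_deadline(received: date, extended: bool = False) -> date:
    """One month from receipt, or three months in total if the extension is used."""
    return add_months(received, 3 if extended else 1)


print(gdpr_response_deadline(date(2025, 1, 31)))                 # 2025-02-28
print(gdpr_response_deadline(date(2025, 1, 31), extended=True))  # 2025-04-30
```

Counting calendar months instead of a flat 30 days matters most at month ends, where the two approaches can differ by a few days.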

5. Penalties & Enforcement

Here’s where it gets serious.

GDPR penalties are brutal: up to €20 million or 4% of worldwide annual turnover, whichever is higher, for serious violations. European regulators actively enforce this, including the Irish DPC, which leads oversight of many large tech companies with EU headquarters in Ireland. We’ve seen major fines, such as Meta’s €1.2 billion penalty in 2023 and Amazon’s €746 million penalty in 2021.

DPDP penalties are lower in the immediate term: up to ₹50 crore (about $6 million) for most violations, and up to ₹250 crore (about $30 million) for the most serious breaches, such as failing to take reasonable security safeguards. Unlike GDPR’s, these caps are fixed amounts rather than a share of revenue. But here’s the critical point: DPDP enforcement is just starting. The Data Protection Board is still being staffed. Expect enforcement to ramp up significantly over the next 2-3 years as the regulatory machinery matures.

In short: GDPR enforcement is immediate and painful, while DPDP enforcement is imminent but still ramping up. Both should be taken seriously, but your risk profile changes depending on which regulator is looking at you.
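The structural difference shows up clearly in simple arithmetic: GDPR’s upper cap scales with worldwide annual turnover of the preceding financial year, while the DPDP figures quoted above are fixed rupee amounts. A small sketch using those figures; the function and dictionary names are illustrative.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Upper GDPR tier: the higher of EUR 20 million or 4% of worldwide annual turnover."""
    return max(20_000_000, worldwide_annual_turnover_eur * 4 // 100)


def crore_to_inr(crore: int) -> int:
    """1 crore = 10 million rupees."""
    return crore * 10_000_000


# DPDP caps as quoted in this article: fixed amounts that do not scale with revenue.
DPDP_CAPS_CRORE = {"most_violations": 50, "most_serious_breaches": 250}

for turnover in (300_000_000, 2_000_000_000):
    print(turnover, gdpr_max_fine_eur(turnover))
# 300000000 20000000    (4% would be only EUR 12 million, so the EUR 20 million floor applies)
# 2000000000 80000000

print(crore_to_inr(DPDP_CAPS_CRORE["most_serious_breaches"]))  # 2500000000
```

For a large multinational, GDPR exposure grows with revenue, while DPDP exposure stays capped regardless of company size.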

| Dimension | GDPR | DPDP (India) | Other India-specific rules (IT Act etc.) |
|---|---|---|---|
| Scope | Very broad. Any data that can identify a person, directly or indirectly. Special categories (health, biometric, etc.) get extra protection | Broad, but limited to digital personal data. No separate sensitive-data tier; extra rules for children’s data | Narrow coverage. Focused on security practices, including for sensitive personal data (SPDI Rules). Minimal consumer rights |
| Consent & Purpose Limitation | Strict. Consent is one of six lawful bases; a new purpose needs new consent or another valid basis | Consent-centric, plus a short list of legitimate uses that need no consent | Basic consent requirements. Weak enforcement |
| Data Retention | Strict data minimization. Keep data only as long as necessary. Strong enforcement norms | Evolving rules. Government rules may prescribe periods after which data must be erased | Minimal guidance. Mostly security focused |
| Data Subject Rights | Extensive rights. Access, correction, deletion, portability. Recipients must be notified of erasure | Core rights. Access, correction, erasure, grievance redressal. Processors must erase; no GDPR-style recipient notice | Limited. Primarily breach-related rights |
| Penalties & Enforcement | Very high penalties. Active enforcement. Up to €20 million or 4% of worldwide annual turnover | High but emerging. Fixed caps up to ₹250 crore. Enforcement ramping up | Lower penalties. Focused on security lapses |

But Here’s Where It Gets Complicated: Generative AI Changed Everything

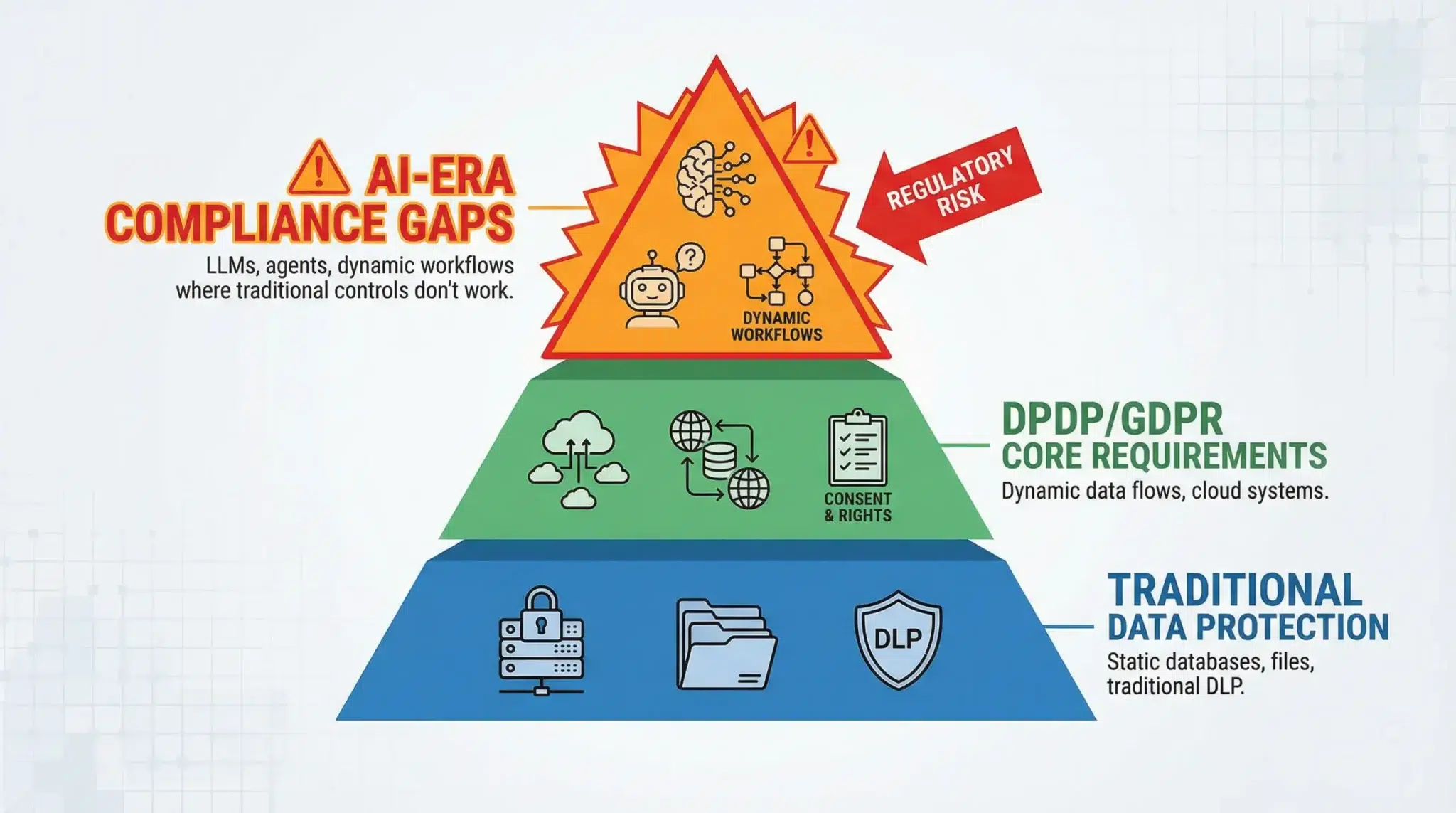

None of these regulations were written with modern AI in mind. GDPR predates widespread LLM deployment, and DPDP, though enacted in 2023, says nothing specific about it. That has created a new class of compliance challenge.

When you feed customer data into an LLM (whether it’s ChatGPT, Gemini, or an internal model), something complex happens.

The data is legally “processed,” which means DPDP and GDPR obligations kick in. But it flows through systems that behave differently from traditional databases: prompts carry dynamic context, models interpret meaning rather than matching fields, and outputs might contain traces of training data. Traditional compliance frameworks aren’t equipped to assess risk in this environment.

GDPR regulators have started issuing guidance on this. For example, some European data protection authorities have indicated that feeding personal data into third-party LLMs, especially US-based ones, is risky because of cross-border transfer concerns and a lack of contractual guarantees. Indian regulators haven’t issued detailed DPDP guidance yet, but the principle is similar: you can’t just send customer data to external AI systems without protective measures.

This is where many Indian enterprises are stuck. They understand DPDP compliance at a traditional level (data storage, access controls, retention). But they don’t have frameworks for AI-era compliance. They don’t know how to evaluate whether their ChatGPT integration is legal under DPDP. They don’t have technical controls to detect when an AI system is about to output sensitive customer data.
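To make “protective measures” concrete, here is a minimal sketch of masking identifiers before a prompt leaves your systems. It is deliberately the pattern-matching baseline this article argues is not enough on its own, and it is not how any particular product works. The regexes for email, PAN, Aadhaar-style numbers, and Indian mobile numbers are illustrative assumptions, and `mask`/`unmask` are hypothetical helpers.

```python
import re

# Illustrative patterns (assumptions, not an exhaustive or validated list).
# Dict order sets masking order: 12-digit IDs are masked before 10-digit phones.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PAN": re.compile(r"\b[A-Z]{5}[0-9]{4}[A-Z]\b"),                     # e.g. ABCDE1234F
    "AADHAAR": re.compile(r"(?<!\d)\d{4}[ -]?\d{4}[ -]?\d{4}(?!\d)"),  # 12 digits
    "PHONE": re.compile(r"(?<!\d)(?:\+91[ -]?)?[6-9]\d{9}(?!\d)"),     # Indian mobile
}


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Swap each detected value for a stable placeholder such as <PAN_1>.

    Returns the masked text (sent to the model) and a vault mapping
    placeholders back to real values (kept inside your own systems).
    """
    vault: dict[str, str] = {}
    token_for: dict[str, str] = {}  # real value -> placeholder, so repeats reuse one token
    counts: dict[str, int] = {}

    for label, pattern in PATTERNS.items():
        def replace(match: re.Match) -> str:
            value = match.group(0)
            if value not in token_for:
                counts[label] = counts.get(label, 0) + 1
                token = f"<{label}_{counts[label]}>"
                token_for[value] = token
                vault[token] = value
            return token_for[value]

        text = pattern.sub(replace, text)
    return text, vault


def unmask(text: str, vault: dict[str, str]) -> str:
    """Restore real values in the model's reply, inside your own infrastructure."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


prompt = ("Customer ABCDE1234F (priya@example.com, +91 9876543210) disputes a charge. "
          "Reply to priya@example.com.")
masked, vault = mask(prompt)
print(masked)
# Customer <PAN_1> (<EMAIL_1>, <PHONE_1>) disputes a charge. Reply to <EMAIL_1>.
```

Stable placeholders let the model see that two mentions refer to the same customer without seeing who that customer is. The limitation is equally visible: names, addresses, and account details written in free text slip past fixed patterns, which is why context-aware detection is needed on top.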

So What Should You Prioritize?

If you’re an Indian enterprise with only Indian customers and no AI yet, DPDP is your primary concern. Make sure you’re compliant, and you’re largely safe.

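If you have EU customers, GDPR compliance becomes non-negotiable. And here’s the hard truth: if you’re compliant with GDPR, you’re probably most of the way to DPDP compliance, because GDPR is stricter on most dimensions. Not every dimension, though: DPDP treats everyone under 18 as a child, and it has no equivalent of GDPR’s “legitimate interests” basis. The reverse is even less reliable.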

If you’re deploying AI (LLMs, chatbots, agents), your compliance approach needs to change entirely. You can’t assume your traditional controls will work in an AI-driven data environment. You need:

- Real-time detection of sensitive data before it reaches your AI systems.

- Semantic understanding, not just pattern matching, of what constitutes personal data in your specific context.

- Access controls that work in dynamic workflows, not just static databases.

- Audit trails that show what data the AI touched and what it output.

This is the gap most enterprises are missing. And it’s also where the regulatory risk is highest right now, because auditors and regulators are just starting to scrutinize AI and data privacy.
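Of the four requirements above, the audit trail is the easiest to sketch with standard tools. Below is a minimal, hypothetical design: each entry logs who called which model, which entity types were masked, and SHA-256 hashes of the masked prompt and the response, and it chains each entry to the previous one so after-the-fact edits are detectable. Names like `AuditLog` and `example-llm` are illustrative; a production system would write to append-only storage rather than a Python list.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # stand-in "previous hash" for the first entry


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class AuditLog:
    """Hash-chained record of AI calls (hypothetical; kept in memory for the sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, model: str, masked_prompt: str,
               response: str, masked_types: list[str]) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            # Hashes let you later confirm exactly what was sent and returned
            # without the log keeping a second copy of the text.
            "prompt_sha256": sha256_hex(masked_prompt),
            "response_sha256": sha256_hex(response),
            "masked_entity_types": sorted(set(masked_types)),
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS,
        }
        # Each entry's hash covers its own content plus the previous entry's hash.
        entry["entry_hash"] = sha256_hex(json.dumps(entry, sort_keys=True))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; editing any entry, or removing one mid-log, fails the check."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev:
                return False
            if sha256_hex(json.dumps(body, sort_keys=True)) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True


log = AuditLog()
log.record("analyst-7", "example-llm", "Summarize the complaint from <PAN_1>.",
           "The customer disputes a card charge.", ["PAN"])
print(log.verify())  # True
```

Hash chaining is a lightweight tamper-evidence technique, not a full integrity guarantee: truncating the newest entries isn’t detected unless the latest hash is also stored somewhere separate.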

Here’s Where Protecto Comes In

Understanding regulatory requirements is one thing. Actually building compliant systems is another. Most enterprises realize too late that traditional data protection tools were built for a different era. They can’t detect sensitive data in real-time AI conversations. They can’t make context-aware access decisions. They don’t understand that a customer’s account number buried in a casual chat prompt is still sensitive personal data that needs protection.

That’s the trap many Indian enterprises fall into. They’ve read the DPDP requirements. They’ve mapped their traditional data flows. But when they deploy an LLM or chatbot, they suddenly realize their old DLP tools are useless. The data moves too fast, the context is too dynamic, and the patterns are too unpredictable for rule-based blocking.

Protecto was built for this moment. Our platform detects sensitive data in real time, across dynamic AI workflows, using semantic understanding, not just pattern matching. This means when your team asks ChatGPT to analyze customer complaints, we identify the personal data before it ever leaves your infrastructure. We mask it on the fly. We ensure your LLM gets enough context to work effectively, but never gets access to raw sensitive data. All without slowing down your business.

More importantly, we help you prove compliance. When a regulator asks “How do you ensure personal data doesn’t leak through your AI systems,” you have an answer backed by audit trails, detection logs, and real-time controls. For DPDP, GDPR, or any other framework. That’s what separates enterprises that are truly compliant from those just hoping they are.

If you’re building AI systems in India, you need both regulatory clarity (which this article provides) and the right technical controls (which Protecto provides). They work together.

The Bottom Line

DPDP, GDPR, and other regulations aren’t going away. They’re converging in some ways (stricter data protection globally) and diverging in others (each jurisdiction adding its own twist). The enterprises that will thrive are those that stop thinking of compliance as a checkbox and start treating it as a capability.

Your compliance strategy should acknowledge regulatory differences, prioritize based on your customer geography, and then add a layer specifically for AI. Because in 2025, if you’re deploying AI without a clear answer to “how do we ensure our LLMs don’t violate DPDP,” you have a problem.

The question isn’t “Are we compliant?” It’s “Are we compliant and ready for AI?”