What’s the fastest way to lose trust? Expose private data. With AI moving from pilots to core workflows in support, finance, HR, and healthcare, one careless prompt or leaky integration can turn into headlines, fines, and weeks of incident response.

The most useful way to understand the risks is to study AI privacy issues examples from the real world. These incidents reveal a pattern: organizations move fast, guardrails lag behind, and predictable weaknesses—consent, oversharing, and poor governance—turn into public crises.

Understanding AI Privacy Issues Through Real-World Incidents

Across industries, the same root causes keep resurfacing:

- Unauthorized access to sensitive data

- Consent gaps and opaque secondary use

- Repurposing data beyond original intent

- Weak human oversight and brittle governance

If privacy isn’t designed into the system, it’s only a matter of time before the data goes where it shouldn’t.

Major Incidents That Redefined AI Privacy Risks

The incidents below aren’t just cautionary tales; they’re playbooks for what not to repeat.

Case Snapshot Table

| Year | Incident | What Happened | Privacy Failure | Lesson |

| 2016 | Facebook & Cambridge Analytica | App harvested tens of millions of profiles for political profiling | Consent & purpose limitation | “Free” data is never free—make consent explicit and specific. |

| 2018 | Strava Heatmap | Aggregated GPS activity revealed military bases & homes | Re-identification via metadata | “Anonymous” traces can unmask people at scale. |

| 2016–2017 | DeepMind & NHS | Patient records used for AI app without clear patient notice | Healthcare transparency & lawful basis | Health data requires strict consent and auditability. |

| 2019 | Apple Siri Audio Review | Contractors heard private recordings | Human-in-the-loop governance | Limit data access, require opt-in, and minimize samples. |

| 2023 | OpenAI ChatGPT Bug | Brief exposure of chat titles and some billing data | Access isolation & logging | Design for secure multitenancy and safe defaults. |

| 2023 | Samsung/Amazon Internal Leaks | Staff pasted code and confidential docs into public LLMs | Insider oversharing | Set prompt guardrails, enterprise LLM tenants, and training. |

| 2020–2024 | Facial Recognition Misidentifications | Wrongful arrests disproportionately affecting minorities | Biased training & weak oversight | Test for bias; restrict high-risk biometric use. |

| 2024 | Slack Prompt Injection (research) | Malicious prompts exfiltrated private channel data | Agent/tool-call hardening | Enforce instruction hierarchies and output filters. |

| Various | Voice Assistants & Smart Devices | Always-on mics captured sensitive context | Ambient data & consent | Default to least capture and clear, revocable consent. |

| Various | Third-Party Analytics Leaks | Chatbots or apps sent PII/PHI to analytics tools | Vendor governance | Control egress; contract for no-retention; monitor continuously. |

Common Privacy Risks Illustrated by These Cases

1) Unauthorized or Inadvertent Data Sharing

Example: A scheduling chatbot passes unmasked PHI to a third-party analytics vendor.

Why it happens: Broad SDK defaults, permissive event capture, or lack of pre-prompt redaction.

Fix: Data minimization, tokenization, and vendor egress controls; use an LLM/API gateway to enforce schemas and strip sensitive fields.

Protecto note: Pre-prompt redaction and API schema enforcement stop oversharing before it leaves your network.

2) Repurposing Data Without Consent

Example: Resumes collected for hiring later used to train a screening model; social images used to improve face recognition.

Why it happens: “We already have the data” thinking; unclear purpose tags.

Fix: Purpose tagging at ingestion; block secondary use unless consented; maintain policy-as-code checks in CI.

Protecto note: Purpose-aware policies block non-compliant use at ingestion and log violations.

3) Biometric & Metadata Exposure

Example: Strava’s heatmap revealed base locations; facial recognition misidentifies individuals; voice samples expose sensitive context.

Why it happens: Belief that aggregation = anonymity; underestimation of re-ID risk.

Fix: Strong privacy budgets, aggregation thresholds, k-anonymity/l-diversity, and alerts for linkability.

Protecto note: Discovery classifies biometrics/telemetry; masking and aggregation rules enforce safer releases.

4) Systemic Vulnerabilities & Exploits

Example: Bugs leading to cross-tenant data exposure; prompt injection tricks assistants into leaking private context.

Why it happens: Complex stacks, new attack surfaces, and inadequate instruction hierarchies.

Fix: Safe completion policies, tool-call whitelists, prompt risk scoring, and anomaly detection on vector and API usage.

Protecto note: Prompt-risk scoring flags jailbreak patterns; gateways deny risky tool calls and redact outputs.

5) Insider Misuse & Accidental Leaks

Example: Employees paste keys, code, or strategy docs into public LLMs.

Why it happens: Pressure for speed; unclear rules; lack of enterprise LLM tenants.

Fix: Clear usage policies, UI nudges, enterprise LLMs with no-retention modes, and automated secret scanning.

Protecto note: Just-in-time warnings block sensitive prompts and suggest approved tooling automatically.

Sector-Specific Patterns You Should Expect

Healthcare: PHI at High Stakes

- Risks: PHI in notes, images, transcripts; vendor partnerships; RAG over unredacted charts.

- Guardrails: Tokenize identifiers, redact notes pre-embedding, isolate tenants, log lineage for HIPAA/GDPR.

- Protecto role: Entity-level PHI redaction before RAG; lineage to prove data handling and respond to erasure.

Consumer Tech: Voice, Social, and Location

- Risks: Always-on microphones, location trails, “anonymous” analytics that re-identify people.

- Guardrails: Opt-in review programs, strict aggregation thresholds, on-device processing where feasible.

- Protecto role: Discovery of telemetry fields; aggregation + masking policies enforced at the edge.

Enterprise & Employment

- Risks: Source code and contracts in prompts; resume data reused without notice; vector stores with unmasked PII.

- Guardrails: Enterprise LLM tenants, pre-prompt filters, secrets DLP, scoped embeddings.

- Protecto role: Prompt guardrails block secrets; API schemas prevent oversharing; dashboards evidence compliance.

Law Enforcement & Surveillance

- Risks: Biometric misidentification; disproportionate harm to minorities; lack of oversight.

- Guardrails: Bias audits, restricted deployments, strong human oversight and appeal mechanisms.

- Protecto role: Data documentation, lineage, and attribute controls to keep disallowed fields out of training.

Lessons Learned: Guardrails for the Future

Transparency & Governance First

- Clear consent flows with real choices

- Audit trails for who accessed which data and why

- Explainability so automated decisions can be understood and challenged

Protecto note: Generates immutable logs and policy histories to answer auditor and customer questions quickly.

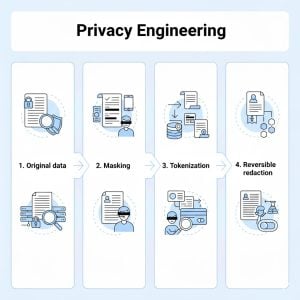

Privacy Engineering as Defense

- Masking: hide identifiers but keep structure

- Tokenization: deterministic replacements that preserve joins

- Reversible redaction: allow controlled re-identification via secure vaults

Protecto note: Applies these controls at ingestion and pre-prompt, preserving accuracy while protecting privacy.

Policies & Regulations Are Catching Up

- Expect risk assessments, biometric limits, and jurisdiction-aware obligations.

- Don’t wait for laws; self-govern with standards that exceed the minimum.

Build AI You Can Trust

- Minimize data by default

- Encrypt in transit and at rest

- Monitor outputs for exposures in real time

- Test for prompt injection like you test for SQL injection

Control Patterns: What to Deploy and Why

| Control | What It Prevents | How to Implement | Where It Lives |

| Pre-Prompt Redaction | Employees pasting secrets/PHI | LLM gateway scans inputs/outputs; safe refusals | App/LLM edge |

| Masking & Tokenization | PII in analytics, features, embeddings | Deterministic tokens; referential integrity | ETL/ELT & feature store |

| Jurisdiction-Aware Policy | Illegal cross-border processing | Policy-as-code tied to user/data region | Policy engine |

| API Schema Enforcement | Oversharing fields, exfiltration | Response contracts, scopes, rate limits | API gateway |

| Data Lineage & Audit | “Who saw what, when?” | End-to-end tracing datasets → models → outputs | Governance layer |

| Anomaly Detection | Slow-burn leaks & exfil | Behavioral baselines over prompts, vectors, APIs | Monitoring layer |

Protecto can deliver each control via SDKs, proxies, and a policy engine that works across warehouses, LLMs, APIs, and vector stores—so safeguards are consistent end-to-end.

Practical Playbook: 30-60-90 Days

Days 0–30: See & Stop the Obvious

- Discovery: Connect warehouses, lakes, and logs; inventory PII/PHI and biometrics.

- Quick masks: Tokenize emails, phone numbers, IDs in top 10 tables.

- Prompt guardrails: Turn on pre-prompt redaction and jailbreak detection for all public LLM calls.

- API contracts: Enforce response schemas for customer and billing endpoints.

- Shadow AI scan: Identify unapproved LLM use and SaaS connectors.

Days 31–60: Govern & Prove

- Policy-as-code: Purpose tags, residency rules, allowed attributes.

- Enterprise LLM tenants: No-retention mode; tool-call whitelists.

- Lineage: Trace data from source to embeddings to model outputs.

- Monitoring: Baselines and alerts for vector/API anomalies.

- Vendor egress controls: Allow-list endpoints; contract no-retention.

Days 61–90: Scale & Assure

- AIIA/PIA gating: Risk assessments before release; rerun on material changes.

- DSAR/Erasure drills: Prove you can find and remove a subject’s data.

- Bias checks: Add fairness metrics and sign-off for high-impact models.

- Multimodal expansion: Extend masking/redaction to audio, image, and video.

- Board reporting: Privacy KPIs (coverage, violations, MTTR, audit readiness).

The Road Ahead: Emerging Privacy Problems

Multimodal AI = Multilayered Risk

When text, images, voice, and video combine, the result is a rich behavioral profile. Even “harmless” timestamps or device IDs can triangulate identity. Controls must apply across modalities, not just text.

Synthetic Data: Useful, Not Magic

Synthetic datasets reduce risk but can still leak patterns or propagate bias. Treat them like sensitive data—govern origins, test for leakage, and document provenance.

Metadata as the New PII

Cursor trails, login times, movement patterns—metadata reveals more than names. It deserves masking, aggregation, and strict purpose limits.

Regulatory Wave

Expect stronger rules on biometrics, children’s data, and automated decisions with meaningful impact. Continuous monitoring and evidence generation will be mandatory, not “nice to have.”

Conclusion

AI is now embedded in how we diagnose, insure, hire, sell, and serve. The AI privacy issues examples we’ve covered show the cost of moving fast without guardrails—and the playbook for doing better. Privacy-by-design is not a drag on innovation; it’s the operating system for sustainable, trusted AI.

Build with minimization and masking from the first pipeline. Enforce policy where data actually flows—prompts, APIs, embeddings—not just in docs. Keep receipts with lineage and audits. If you can do those three things reliably, you’ll avoid most gotchas and earn the trust that fuels adoption.

Key Takeaways (Recap)

- Real-world AI privacy issues examples repeat the same failures: consent gaps, oversharing, weak oversight, and vendor leaks.

- “Anonymous” isn’t anonymous at scale—metadata and biometrics can re-identify.

- Privacy engineering (masking, tokenization, redaction) preserves accuracy while protecting people.

- Continuous governance beats annual checklists; monitor prompts, APIs, vendors, and outputs.

- Platforms like Protecto operationalize discovery, redaction, policy enforcement, and lineage so you can prove compliance on demand.

Next Steps for Your Team

- Map one end-to-end workflow (e.g., support chatbot): where does sensitive data enter, move, and leave?

- Turn on pre-prompt redaction and API schema enforcement for that workflow.

- Tokenize identifiers in top-risk datasets; preserve analytics with deterministic tokens.

- Add lineage to trace data → embeddings → outputs; test a DSAR/erasure path.

- Instrument monitoring for vector/API anomalies and prompt-risk patterns.

How Protecto Helps

Protecto is a privacy control plane for AI. It prevents leaks before they happen, enforces policies where data flows, and proves compliance with audit-ready evidence—without slowing teams down.

- Automatic Discovery & Classification

Find PII/PHI, biometrics, and secrets across warehouses, lakes, logs, and vector stores. Tag data with purpose and residency so rules apply automatically. - Masking, Tokenization & Redaction (Accuracy-Preserving)

Apply deterministic tokens at ETL and reversible redaction for text so models keep signal while raw identifiers stay protected. Pre-prompt filters strip sensitive entities before queries hit LLMs or RAG. - Prompt & API Guardrails at the Edge

Block secrets and regulated data at input, score prompts for jailbreak/injection, enforce API response schemas and scopes, and throttle suspicious egress. - Jurisdiction-Aware Policy Enforcement

Define purpose and residency once; enforce per region and per dataset at runtime. Every decision is logged with policy version and context for audit. - Lineage & Audit Trails

Trace data from source to embeddings to outputs. Answer DSARs and erasure requests with confidence and shorten incident investigations from weeks to hours. - Anomaly Detection for Vectors, Prompts & APIs

Learn normal behavior and flag outliers—enumerations, mass export attempts, or odd-time queries—with automatic containment options. - Developer-Friendly Integration

SDKs, gateways, and CI checks make privacy part of the build: fail risky PRs, suggest tokenized alternatives, and keep teams moving fast.

FAQs

- What are common AI privacy issues?

Common AI privacy issues include unauthorized data sharing, consent gaps, repurposing data beyond original intent, biometric exposure, and insider misuse like employees pasting sensitive data into public LLMs. - What happened in the Cambridge Analytica AI privacy case?

Cambridge Analytica harvested tens of millions of Facebook profiles for political profiling without proper consent, demonstrating how “free” data can be misused for unauthorized secondary purposes. - How do AI systems create privacy risks?

AI systems create privacy risks through unauthorized access to sensitive data, weak human oversight, repurposing data beyond original intent, and systemic vulnerabilities like prompt injection attacks. - What AI privacy issues affect healthcare?

Healthcare AI privacy issues include PHI exposure in notes and transcripts, vendor partnerships without proper safeguards, and RAG systems accessing unredacted patient charts. - How can companies prevent AI privacy issues?

Companies can prevent AI privacy issues by implementing masking and tokenization, pre-prompt redaction, API schema enforcement, data lineage tracking, and continuous monitoring for anomalies.

Ready to adopt AI without the risks?