A major publicly traded CPG company wanted to adopt LLMs to improve performance marketing, analytics, and customer experience. However, the IT team blocked AI usage and uploads to external AI tools, because interacting with public AI models could expose sensitive brand, consumer, and financial data.

This isn’t an isolated problem. It’s a pattern across enterprises: business agility collides with security requirements. Protecto bridges this gap by enabling safe, compliant LLM adoption at enterprise scale.

The Enterprise LLM Adoption Challenge: Why Standard AI Solutions Fall Short

When we spoke with leaders at this CPG organization, they articulated the core challenge clearly:

“We want to start using ChatGPT and Gemini… but privacy is not up to the standard today.”

The concerns were specific:

- “IT does not believe people will use AI responsibly… we’re a publicly traded company.” Regulatory and fiduciary responsibilities make data exposure unacceptable.

- “How can we make sure the model does not know this is our financial or consumer data?” Depending on the provider and plan, prompts sent to external LLMs may be logged, retained, and in some cases used to train future models.

- “I don’t want the model to know which company, which brand, which product.” Competitive intelligence leakage is a real risk.

- “Both frontline and backend use cases are blocked for data concerns.” The organization needed solutions for two distinct workflows.

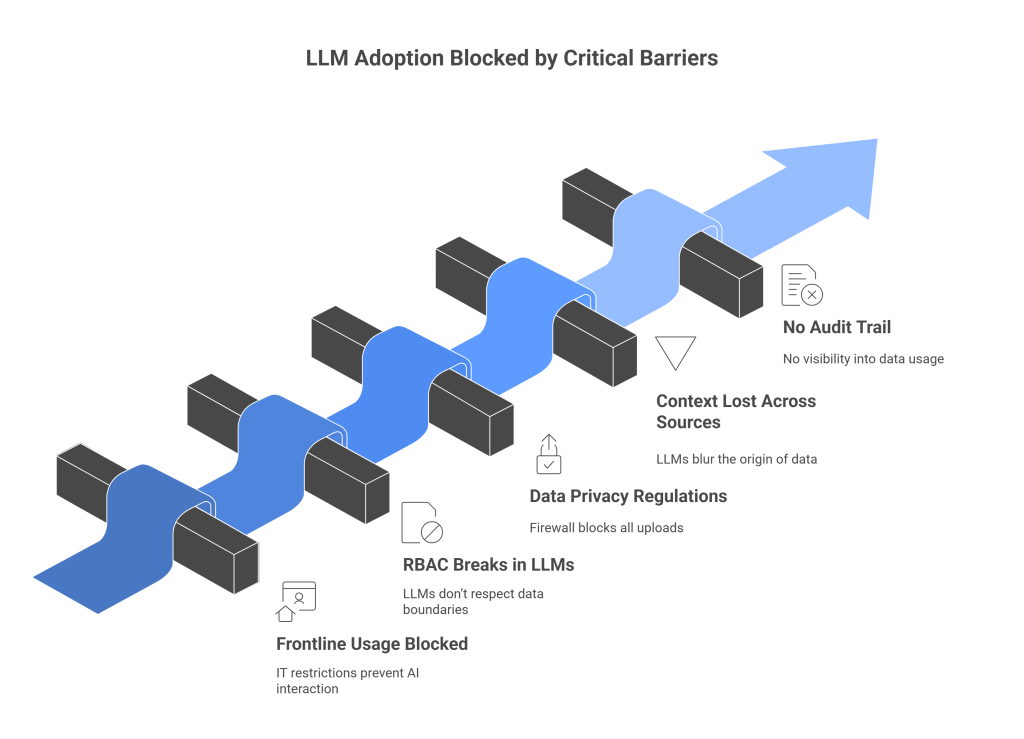

Critical Barriers to LLM Adoption in Enterprise CPG Companies

1. Frontline Usage is Completely Blocked

Employees across marketing, content, and operations need a safe way to use ChatGPT and Gemini for daily workflows such as content creation, research, and copywriting, without exposing proprietary corporate data.

Today, IT restrictions block AI interaction entirely, because without privacy guardrails GenAI tools can inadvertently leak data. Manually reviewing every prompt is impossible at this scale, and sending raw consumer data to external models can violate data protection regulations such as DPDP or HIPAA.

2. RBAC Breaks in LLMs

Traditional role-based access control (RBAC) assumes clear boundaries: users only access data they’re allowed to. LLMs don’t respect those boundaries. Once data is fed into a model, whether through fine-tuning, a shared retrieval index, or a shared conversation context, it is abstracted and generalized. That means if one user inputs sensitive information, the model might surface it later in response to another user’s prompt, even if that user had no rights to the original data. There’s no concept of “read-only” or role enforcement inside the model’s memory, which makes RBAC effectively useless once the data is inside.

3. Data Privacy Regulations Prevent Adoption

Organizations must comply with financial disclosure requirements, consumer privacy regulations, and data protection standards. Financial data, consumer inputs, brand names, and product identifiers cannot be exposed to external LLM providers. The current firewall blocks all uploads, a necessary but productivity-killing safeguard.

4. Context Is Lost Across Sources

LLMs blur the origin of data. A single prompt can pull from multiple systems, such as PHI from CRM records, PII from a support ticket, and confidential product logs, all without honoring their separate access policies.

Since LLMs don’t track the origin of each data point, enforcing policies only when data is stored, or reviewing outputs after the fact, isn’t enough. If you don’t govern access at runtime, the model might expose restricted data simply because it saw it earlier.
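One way to picture “governing access at runtime” is to tag every piece of retrieved context with its source and the roles allowed to read it, then filter at the moment a prompt is assembled. The minimal Python sketch below illustrates that general idea only; the `Snippet` record, role names, and sources are hypothetical and do not describe Protecto’s implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Snippet:
    """A piece of retrieved context tagged with its origin and the roles allowed to read it."""
    source: str                    # originating system, e.g. "crm" or "support" (hypothetical)
    text: str
    allowed_roles: frozenset[str]  # roles permitted to see this snippet (hypothetical)

def build_context(snippets: list[Snippet], user_roles: set[str]) -> str:
    """Keep only snippets the requesting user may see, checked when the prompt is assembled."""
    visible = [s for s in snippets if s.allowed_roles & user_roles]
    return "\n".join(f"[{s.source}] {s.text}" for s in visible)

snippets = [
    Snippet("crm", "Customer email: jane@example.com", frozenset({"privacy_officer"})),
    Snippet("support", "Ticket 4521: login fails on mobile", frozenset({"support", "privacy_officer"})),
    Snippet("product_logs", "Build 7.2 crashes on startup", frozenset({"engineering"})),
]

print(build_context(snippets, {"support"}))
# [support] Ticket 4521: login fails on mobile
```

Because the check runs on every request, one shared index can serve users with different rights. What matters is that filtering happens before text reaches the model, not after.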

5. No Audit Trail, No Accountability

Once data enters an LLM, there’s no visibility into how it’s used. If a prompt included PHI or a credit card number and wasn’t logged or tagged at ingestion, there’s no way to audit exposure or prove compliance. In regulated industries, this is a dealbreaker. Without tracking or classification, you can’t investigate data misuse, prove data minimization, or demonstrate regulatory compliance.

How Protecto Enables Safe LLM Adoption: Privacy-by-Design AI Gateway

Protecto solves this by introducing an AI privacy gateway that sits between your enterprise and external LLMs. Instead of uploading raw data directly to OpenAI, Gemini, or other providers, sensitive information is intelligently masked, de-identified, and pseudonymized before the model ever sees it.

1. Intelligent Masking Before Data Leaves Your Environment

Protecto intercepts data at the entry point, masks sensitive identifiers, sends only masked content to the LLM, and unmasks the results on return. This adds only milliseconds, so users get near-instant responses without noticing that a privacy transformation occurred.

Protected data types include:

- Names, emails, phone numbers, and personal identifiers (PII)

- Enterprise identifiers and internal reference codes

- Brand names and product references

- Financial data and transaction details

- Consumer behavioral data and preferences

- Any custom sensitive fields your organization defines
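The mask, send, and unmask round trip described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Protecto’s API: the two regular expressions, the `CUSTOM_TERMS` list, and the `fake_llm` stand-in are all hypothetical, and a production gateway would rely on much richer entity detection.

```python
import re

# Hypothetical detection rules; real systems use far more robust entity recognition.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}
CUSTOM_TERMS = {"BRAND": ["Acme Cola"]}  # organization-defined sensitive terms (assumed)

def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with placeholder tokens; return masked text and token map."""
    mapping: dict[str, str] = {}   # token -> original value
    reverse: dict[str, str] = {}   # original value -> token (reuses tokens for repeats)
    counts: dict[str, int] = {}

    def token_for(label: str, value: str) -> str:
        if value not in reverse:
            counts[label] = counts.get(label, 0) + 1
            token = f"<{label}_{counts[label]}>"
            reverse[value] = token
            mapping[token] = value
        return reverse[value]

    for label, terms in CUSTOM_TERMS.items():
        for term in terms:
            if term in text:
                text = text.replace(term, token_for(label, term))
    for label, pattern in PATTERNS.items():
        text = pattern.sub(lambda m, label=label: token_for(label, m.group(0)), text)
    return text, mapping

def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in the model's response, inside your own environment."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text

def fake_llm(prompt: str) -> str:
    """Stand-in for an external LLM call; it only ever sees masked text."""
    return "Summary: " + prompt

prompt = "Write a reply to jane.doe@example.com about the Acme Cola promo; call +1 415 555 0100 if needed."
masked, mapping = mask(prompt)
print(masked)
# Write a reply to <EMAIL_1> about the <BRAND_1> promo; call <PHONE_1> if needed.
print(unmask(fake_llm(masked), mapping))
```

The token map never leaves your environment, so the external model sees placeholders while the user sees the restored values.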

2. Policy-Driven Masking Rules Across Teams

Different teams have different privacy requirements. Analytics teams analyzing consumer reviews need different rules than finance teams processing quarterly data. Protecto enables policy-driven controls: each team defines its own masking rules based on organizational governance.

- Analytics teams: Mask consumer PII and product identifiers

- Marketing teams: Mask brand references and competitive data

- Finance teams: Mask account numbers, revenue figures, and customer identifiers

- CX teams: Mask customer names and contact information
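Per-team rules like the ones listed above can be expressed as a simple policy table that maps each team to the entity labels it must mask. The sketch below is hypothetical: the labels, team names, and `apply_policy` helper are illustrative, and it assumes entity detection has already run upstream.

```python
# Hypothetical per-team policies: which entity labels each team must mask.
POLICIES: dict[str, set[str]] = {
    "analytics": {"PERSON", "EMAIL", "PRODUCT"},
    "marketing": {"BRAND", "COMPETITOR"},
    "finance": {"ACCOUNT", "REVENUE", "CUSTOMER_ID"},
    "cx": {"PERSON", "EMAIL", "PHONE"},
}

def apply_policy(text: str, entities: list[tuple[str, str]], team: str) -> str:
    """Mask only the entity labels listed in the team's policy; leave the rest intact.

    `entities` is a list of (label, value) pairs assumed to come from an upstream detector.
    Unknown teams raise KeyError instead of silently sending unmasked text.
    """
    rules = POLICIES[team]
    for label, value in entities:
        if label in rules:
            text = text.replace(value, f"<{label}>")
    return text

text = "Jane Doe praised Acme Cola in her review."
entities = [("PERSON", "Jane Doe"), ("BRAND", "Acme Cola")]

print(apply_policy(text, entities, "analytics"))  # <PERSON> praised Acme Cola in her review.
print(apply_policy(text, entities, "marketing"))  # Jane Doe praised <BRAND> in her review.
```

Failing closed on an unknown team is a deliberate choice here: a missing policy should block the request, not default to sending raw data.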

3. Enterprise and Brand De-identification

The model never learns which company generated the request or which brands/products are involved. External LLMs see data structure and content patterns, but not organizational identity. This protects competitive intelligence while still enabling advanced analytics.

4. Built for Enterprise-Scale Analytics

For organizations processing millions of data points, Protecto provides:

- Async APIs optimized for high-volume workflows (1M+ records weekly)

- High-performance token entropy systems that maintain data utility while maximizing privacy

- Consistent pseudonymization that links masked records across documents and workflows

- Configurable key vault for centralized privacy governance and audit trails

- Multi-tenant policies for large organizations with multiple teams and departments
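Consistent pseudonymization means the same real value always maps to the same token, so masked records from different documents can still be joined. A common way to achieve this is a keyed hash (HMAC). The sketch below is a generic illustration, not Protecto’s scheme; the key, the `pseudonymize` helper, and the token format are assumptions.

```python
import hashlib
import hmac

# Assumption: in a real deployment this key lives in a managed key vault, not in source code.
SECRET_KEY = b"replace-with-a-key-from-your-vault"

def pseudonymize(value: str, label: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable token: the same value, label, and key always yield the same token."""
    message = f"{label}:{value}".encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).hexdigest()
    # Truncated to 12 hex chars (48 bits) for readability; keep more of the digest at scale.
    return f"{label}_{digest[:12]}"

# The same customer email yields the same token in a review file and in a CRM export,
# so masked records can still be linked without exposing the address.
review_token = pseudonymize("jane@example.com", "EMAIL")
crm_token = pseudonymize("jane@example.com", "EMAIL")
print(review_token == crm_token)  # True
```

Using HMAC rather than a plain hash matters: without the secret key, an attacker cannot precompute tokens for guessed emails or names. Reversing a token requires a separate, access-controlled token-to-value table, which is why centralized key management and audit trails go together.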

5. Enterprise-Grade Deployment and Auditability

Protecto runs as a microservice in your cloud environment, so no data leaves your infrastructure unnecessarily. Full audit logging tracks every data transformation, every API call, and every policy application. IT teams get complete visibility and control.
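An audit trail is most useful when each event records what was masked, under which policy, and where the request went, without storing the sensitive values themselves. The sketch below shows one possible shape for such a record; every field name and the `audit_event` helper are hypothetical, not Protecto’s log format.

```python
import json
import uuid
from collections import Counter
from datetime import datetime, timezone

def audit_event(user: str, team: str, policy: str, masked_labels: list[str], destination: str) -> str:
    """Build one JSON audit record describing a masking step, without any raw values."""
    record = {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "team": team,
        "policy": policy,
        "destination": destination,                         # which external LLM received the request
        "masked_entities": dict(Counter(masked_labels)),    # counts per entity label only
    }
    return json.dumps(record, sort_keys=True)

line = audit_event("a.kumar", "analytics", "analytics-v3", ["EMAIL", "PERSON", "EMAIL"], "openai")
print(line)
```

Logging counts per entity type rather than the values lets compliance teams show that data was minimized before it left the environment, without turning the audit log into another copy of sensitive data.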

Real-World Workflows: From Blocked to Enabled

Frontline Usage: Chat-Based Content Creation

Before: Employee tries to draft marketing copy using ChatGPT. IT blocks the upload. Productivity stalls.

With Protecto: Employee uploads documents to a thin Protecto chat interface → data is masked → sent to OpenAI → results are returned and unmasked instantly → employee has AI assistance without exposing brand details or internal references.

Backend Analytics: Processing Millions of Consumer Reviews

Before: Analytics team has 1M+ weekly product reviews to analyze. Manual sentiment analysis is impossible. External LLM access is blocked due to privacy concerns.

With Protecto: Reviews are ingested weekly → Protecto masks consumer PII, financial identifiers, and brand names → masked reviews flow through analytics workflows → LLMs perform aspect-based sentiment analysis, topic extraction, and insight generation → all analysis happens on masked data with complete privacy and audit trails.
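At this volume, the mask-then-analyze pipeline is typically run concurrently with a cap on in-flight requests. The asyncio sketch below illustrates that pattern under loud assumptions: `mask_review` only redacts emails, and `analyze` is a toy keyword check standing in for a real LLM sentiment call. Neither reflects Protecto’s async API.

```python
import asyncio
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def mask_review(text: str) -> str:
    """Hypothetical stand-in for the masking step: here it only redacts email addresses."""
    return EMAIL.sub("<EMAIL>", text)

async def analyze(masked: str) -> dict:
    """Toy stand-in for an LLM sentiment call; it only ever receives masked text."""
    await asyncio.sleep(0)  # placeholder for network I/O to the model provider
    sentiment = "negative" if "broken" in masked.lower() else "positive"
    return {"text": masked, "sentiment": sentiment}

async def process_reviews(reviews: list[str], max_concurrency: int = 8) -> list[dict]:
    """Mask and analyze reviews concurrently; results come back in input order."""
    sem = asyncio.Semaphore(max_concurrency)

    async def one(review: str) -> dict:
        async with sem:  # cap in-flight requests to respect provider rate limits
            return await analyze(mask_review(review))

    return await asyncio.gather(*(one(r) for r in reviews))

reviews = ["Love it! Contact me at sam@example.com", "Cap arrived broken."]
results = asyncio.run(process_reviews(reviews))
for r in results:
    print(r)
```

Masking happens inside each task before the model call, so even a failed or retried request never sends unmasked text.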

Financial Workflows: Secure Data Sheet Analysis

Before: Finance team needs LLM help analyzing complex spreadsheets. Attribution data and company identifiers must be removed manually before any external analysis.

With Protecto: Sheets are uploaded → Protecto removes attribution and sensitive identifiers → LLMs analyze structure, patterns, and numbers without knowing the company or specific accounts → results are returned → finance team has insights without exposure.

The Path Forward: From Blocked to Empowered

The enterprise AI paradox (business wants adoption, security requires protection) is solvable. Organizations don’t have to choose between innovation and security. With a privacy-by-design AI gateway like Protecto, teams can:

✓ Unlock LLM adoption safely for both frontline employees and analytics teams

✓ Process massive datasets (1M+ records) with full privacy protection

✓ Maintain competitive confidentiality and regulatory compliance

✓ Integrate with existing workflows in days, not months

✓ Future-proof against LLM model changes and new providers

Ready to Enable Safe LLM Adoption at Your Organization?

If your enterprise faces the same LLM adoption barriers (business demand colliding with security requirements), Protecto can help. Learn how other publicly traded companies are safely adopting LLMs while protecting their most sensitive data.