Why Privacy Is the Make-or-Break for Generative AI

The promise of large language models is simple: turn messy text and data into instant answers, drafts, and decisions. The catch is simple: those models are hungry, and the most valuable data you own is also the most sensitive. If that escapes, you have legal, brand, and trust problems.

This is where the story shifts. How LLM Privacy Tech Is Transforming AI is about making real deployments possible. With modern privacy controls, you can unlock your data’s value while reducing exposure, proving compliance, and avoiding the classic trap of “shadow AI.”

What “LLM Privacy Tech” Actually Means

Privacy tech for LLMs is a stack of capabilities designed to protect sensitive information across the full lifecycle:

- Data discovery and classification to find PII, PHI, PCI, secrets, and regulated content before anything touches a model.

- Redaction, masking, and tokenization so models and prompts never see raw identifiers.

- Policy engines and DLP that block or rewrite risky inputs and outputs in real time.

- Secure training and fine-tuning with de-identified or minimally necessary data, plus opt-outs.

- RAG controls to sanitize documents on ingestion and filter snippets at retrieval time.

- Access control and audit: SSO, RBAC, approval flows, and detailed logs for every input and response.

- Storage governance: short retention, encryption, residency, and proof-of-deletion workflows.

- Evaluation and monitoring for leakage, bias, and prompt-injection resilience.

The Core Principles: Privacy That Scales With Your Models

- Minimize by default: Collect and process only what’s necessary. Store features or embeddings instead of raw documents when possible. Mask direct identifiers early in the pipeline.

- Separate identifiers from content: Keep mapping tables under strict access. Models should operate on tokens or de-identified text, not live names, emails, or account numbers.

- Shift left: Run discovery and redaction at ingestion and before prompts, not as a last-minute output filter. It’s cheaper and safer.

- Make policy executable: Turn your privacy policy into code: deny rules, allow lists, retention timers, and just-in-time checks enforced automatically.

- Prove it: Auditable logs, consent records, deletion receipts, and lineage graphs convert “trust us” into measurable evidence.

Key Techniques Powering the Transformation

1) Automated Discovery and Classification

Modern systems parse text, tables, PDFs, images, and even code to flag risky elements. They recognize emails, SSNs, ICD-10 codes, access keys, and custom patterns. Discovery powers everything else: if you can’t see it, you can’t protect it.

Where it lands: triaging legacy knowledge bases, vetting uploads, pre-flight checks before RAG ingestion.

Protecto discovers sensitive elements across lakes, wikis, ticketing systems, and prompts, then triggers masking policies.

2) Redaction, Masking, and Tokenization

Replace sensitive values with placeholders or deterministic tokens. Maintain a secure vault that can detokenize for authorized workflows, never for models.

Benefit: the model learns structure without learning identities.

3) Differential Privacy and Privacy-Preserving Training

Inject noise or constrain contributions from any single record during training and analytics, so no output reveals an individual’s data.

Use it for: aggregate analytics, model fine-tuning with user data at scale.

4) Retrieval Privacy for RAG

Most LLM apps now use retrieval-augmented generation. Privacy-first RAG means:

- redact at ingestion

- enforce access filters at retrieval

- Run prompt-level DLP before the final answer

- Log what sources were touched

5) Inference Gateways and DLP

A centralized gateway screens prompts and outputs for secrets, PII, and policy violations. It can rewrite or block requests, select safe model routes, and attach context like user role, consent scope, and data residency.

Platforms like Protecto slot in here, enforcing policies across all model providers.

6) Agent Safety and Prompt-Injection Defenses

Agents and tools expand power and risk. Guardrails validate tool calls, strip hostile instructions from untrusted content, and constrain what an agent can exfiltrate. Combine allow lists, sandboxing, and pattern detectors.

7) Secure Storage, Residency, and Retention

Encrypt data at rest, restrict cross-region transfers, and apply short retention. Tie deletion requests to embeddings, caches, and backups with proof.

8) Observability and Audit

Collect structured logs for prompts, retrieved docs, policy hits, redactions, model versions, and latency. Create reports for compliance and incident response.

How LLM Privacy Tech Is Transforming AI Outcomes

A) From Pilots to Production

Privacy used to be a late-stage review that blocked launches. Now it’s part of the architecture. Teams that adopt privacy tech go live faster because audits pass the first time, and user trust is higher.

B) Unlocking Sensitive Use Cases

- Healthcare: draft notes from de-identified text, route PHI through a masked pipeline, obtain BAAs only where needed.

- Financial services: summarize statements with masked account numbers; allow wealth advisors to query a client’s profile without exposing identifiers.

- Legal: analyze case law with client names tokenized, route citations through a secure RAG index.

- Support and sales: use transcripts to train intent models while removing personal details.

C) Higher-Quality Personalization Without the Creep Factor

Personalization thrives when users trust you. Clear notices, opt-outs, and masked processing deliver relevance with control. You keep signal, lose risk.

D) Consistent Governance Across Vendors

Most stacks combine several model providers plus open source. A neutral privacy layer enforces one set of policies everywhere. That’s cheaper to maintain and easier to audit. Protecto excels at this cross-vendor enforcement.

E) Faster Incident Response

When something goes wrong, you need to know what moved where. Centralized logs and lineage shorten the time from detection to fix, reducing exposure and fines.

Technique Comparison: Picking the Right Tools

| Technique | Best For | Strengths | Trade-offs |

| Discovery & Classification | Broad estates with unknown risks | Fast risk reduction, powers policy | Needs tuning for domain-specific entities |

| Redaction/Tokenization | RAG, prompts, fine-tuning | Keeps utility, hides identities | Vault management, mapping maintenance |

| Differential Privacy | Analytics, large-scale training | Strong theoretical guarantees | Accuracy costs, complex tuning |

| Inference Gateway DLP | Day-one protection | Model-agnostic, real-time controls | Must avoid false positives |

| Secure Enclaves/VPC | Highly regulated data | Strong isolation, residency | Cost, ops complexity |

| Agent Guardrails | Tool-heavy apps | Limits exfiltration, safer autonomy | More engineering, eval maintenance |

Case Snapshots

1) Global Bank: Private RAG for Relationship Managers

- Problem: Advisors needed instant answers from policies, product sheets, and client docs without exposing PII.

- Approach: Discovery and tokenization at ingestion, client-level ACLs, masked RAG, output DLP.

- Result: Minutes-to-seconds answers, no PII in prompts, clean audit trails. Protecto enforced masking and policy hits across indices and prompts.

2) Health System: Clinical Summaries Without PHI Leakage

- Problem: Physicians wanted note drafts; compliance required strict PHI controls.

- Approach: On-device capture, server-side de-identification, fine-tuning on de-identified corpora, PHI-blocking inference gateway.

- Result: 25–30% documentation time saved, zero PHI in LLM logs, rapid audit approvals. Protecto handled PHI detection and reversible tokens for authorized re-linking.

3) SaaS Support: Ticket Triage and Auto-Reply

- Problem: Auto-replies risked echoing customer PII.

- Approach: RAG on sanitized knowledge base, inbound DLP on prompts, content filters on outputs, retention set to 30 days.

- Result: Higher first-contact resolution and no privacy incidents. Protecto provided dashboards for policy hits and deletions.

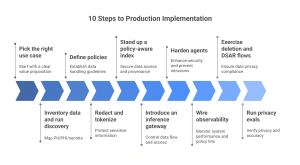

Implementation Playbook: 10 Steps to Production

- Pick the right use case with clear value and moderate sensitivity.

- Inventory data and run discovery to map PII/PHI/secrets.

- Define policies: what to block, mask, retain, and route, per data class and role.

- Redact and tokenize at ingestion before embeddings or fine-tuning.

- Stand up a policy-aware index with ACLs and provenance.

- Introduce an inference gateway for DLP, role-aware routing, and output filtering.

- Harden agents with tool allow lists, sandboxes, and injection scrubbing.

- Wire observability for prompts, retrievals, policy hits, and deletions.

- Exercise deletion and DSAR flows end to end, including embeddings and caches.

- Run privacy evals alongside accuracy tests; ship with pass/fail thresholds.

Short on engineering cycles? Protecto accelerates steps 2–6 and 8–9 so you can focus on application logic.

Metrics That Matter

- Sensitive prompt rate: share of prompts containing PII/PHI/secrets blocked or masked

- Policy hit rate: frequency of DLP blocks and rewrites

- Retrieval leakage rate: times a retrieved chunk violated user or document ACLs

- Time-to-delete: how fast data, embeddings, and caches are purged on request

- Redaction coverage: percent of high-risk sources masked pre-ingestion

- Incident MTTR: detection-to-remediation time for privacy issues

Turn these into weekly dashboards. Privacy becomes a performance discipline, not a quarterly panic.

Common Pitfalls and How to Dodge Them

- Output-only filters. If you only scrub responses, you’re too late. Mask at ingestion and again at inference.

- One-size-fits-all policies. Finance is not supported. Tailor rules by domain, data class, and role.

- Unscoped agents. Tools with broad file access will eventually spill something. Constrain, monitor, and log.

- Forgetting embeddings. Deleting the source file isn’t enough. Purge vectors and caches too.

- Shadow AI. If the official path is slow or restrictive, teams will route around it. Make the safe way the easy way.

The Future: Privacy as a Feature Users Can See

Expect three shifts that will keep redefining How LLM Privacy Tech Is Transforming AI:

- Privacy UX in the product. “Why this answer?” links, consent toggles per use, model change logs, and redaction previews.

- Policy portability. Bring your privacy rules to any model host or cloud, the way you bring IaC to any infra.

- Continuous privacy evals. Just like load tests and unit tests, privacy tests will run in CI/CD and block releases when they fail.

FAQs

Can we fine-tune models without exposing customer identities?

Yes. Use tokenization and de-identification before training. Keep a secure mapping outside training data for authorized re-linking.

Does retrieval make privacy harder?

It makes it manageable if you sanitize on ingestion, enforce ACLs at query time, and apply output filters. RAG is often safer than dumping raw data into training.

What about agents with tool access?

Treat them like interns with superpowers: strict scopes, allow lists, and detailed logs. Block exfiltration patterns and strip hostile instructions from untrusted inputs.

Is differential privacy mandatory?

Not always. It’s strongest at scale for analytics and certain training regimes. For many apps, early wins come from masking, tokenization, and RAG controls.

How do we prove compliance?

Maintain policy-as-code, audit logs, consent records, and deletion receipts. Use dashboards to track policy hits and retention.

Protecto: Your Privacy Control Plane for LLMs

If you’re serious about operationalizing privacy, Protecto adds a unified layer across your AI stack:

- Automated data discovery and classification across wikis, tickets, data lakes, and file stores

- Real-time masking and tokenization before embeddings, prompts, and fine-tuning

- Policy engine and DLP that blocks or rewrites risky inputs and outputs, with role-aware routing

- RAG governance to sanitize ingestion, enforce ACLs, and filter retrieved chunks

- Observability and audit with dashboards for policy hits, lineage, deletions, and consent proofs

- Developer-friendly integration via SDKs and gateways to slot into existing pipelines and agents