“Is ChatGPT safe” is the headline question that nearly every team asks the moment AI enters the room. The better version is: safe for what, and under which controls? Safety is not a single switch. It combines technical security, data privacy, content safeguards, governance, and how your people use the tool.

This guide breaks down how ChatGPT handles data, where privacy risks actually come from, and the practical steps to operate safely at home and at work. We’ll keep the language plain, the advice actionable, and the hype out.

What “Safe” Means in Practice

When people ask “Is ChatGPT safe,” they typically mean one or more of the following:

- Data security: Are my inputs protected in transit and at rest? Who can access them?

- Privacy: Will my data be retained, used to improve models, or shared? Can I control this?

- Content safety: How does the system avoid harmful or biased outputs?

- Regulatory compliance: Can I meet GDPR, CCPA, HIPAA, PCI DSS, or industry rules?

- Operational risk: Could prompts leak confidential information or be manipulated by malicious content?

A sensible answer covers all five, not just encryption and a trust badge.

A Quick Primer: How ChatGPT Works

At a high level, ChatGPT predicts likely next words based on a massive training corpus. It uses your prompt to guide generation. Safety layers and policies monitor inputs and outputs to reduce harmful responses.

Two critical privacy points:

- Inference vs training: Your prompt is used to generate a response (inference). In many setups, prompts may also be retained for service improvement unless you opt out or use an edition that disables training on your data.

- Context windows: The model only “remembers” what you put into the current session or conversation context. It does not inherently recall private chats across sessions unless the product stores them.

For organizations, edition and configuration matter. Enterprise offerings typically provide stronger privacy guarantees, admin controls, and data-use restrictions compared to consumer versions.

What Data Might Be Collected

When you use ChatGPT or similar systems, the following categories may be processed:

- Prompt content: Everything you paste or type, plus files you upload.

- Generated outputs: The responses sent back to you.

- Metadata: Timestamps, usage metrics, language, device, and account identifiers.

- Telemetry and logs: Error logs, performance data, and security events.

Retention and usage vary by edition, region, and your admin settings. Enterprise-grade plans commonly offer controls to opt out of data being used to improve models, to set retention windows, and to restrict data access by vendor employees except for required support. Always check the current product documentation and your contract terms.

Good practice: Treat prompts like emails. If you wouldn’t email unencrypted bank records or source code to an external address without controls, don’t paste them into an AI chat. Use approved channels, redaction, and DLP first.

Privacy Measures Typically in Place

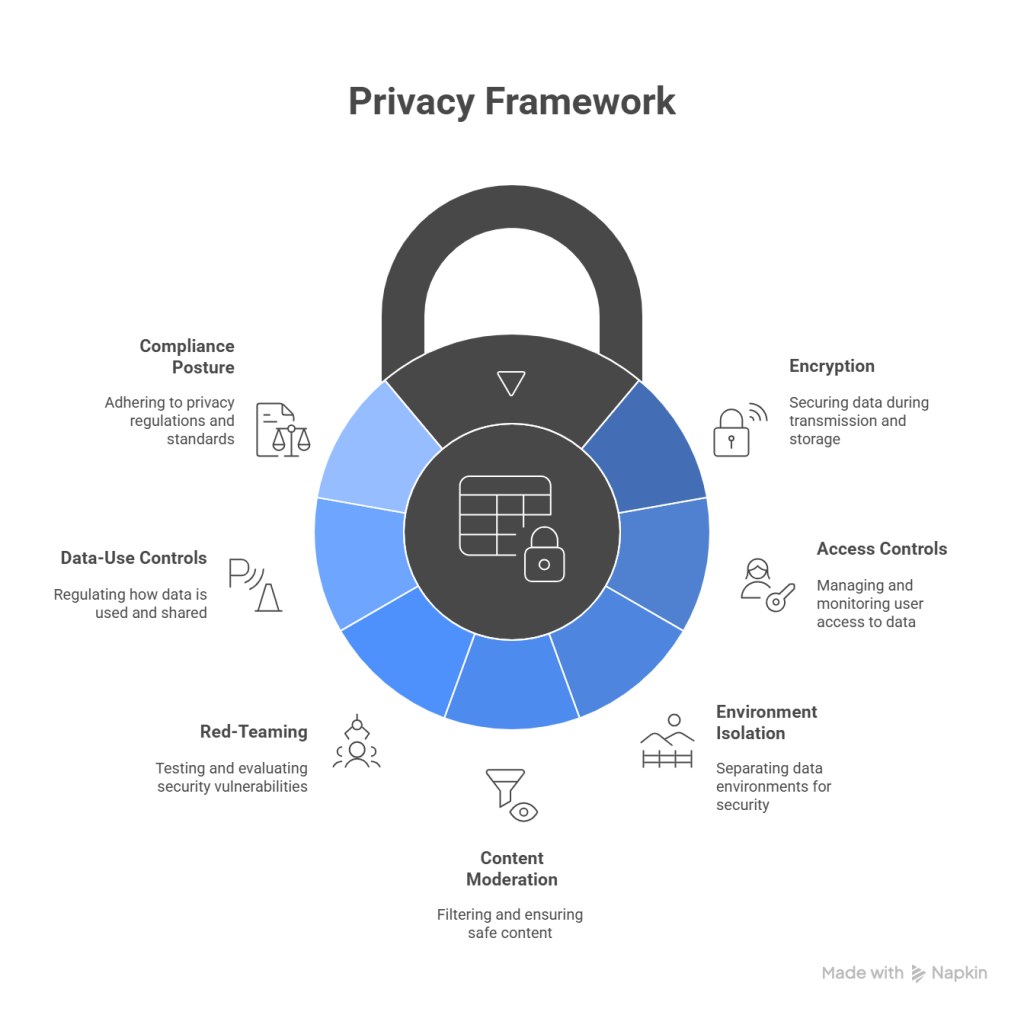

While specific implementations vary by provider and plan, modern AI services generally incorporate:

- Encryption in transit and at rest: TLS for data in motion; strong encryption for stored data.

- Access controls and auditing: Role-based access control (RBAC), SSO/SAML, SCIM, and audit logs.

- Environment isolation: Logical isolation between tenants, sometimes including dedicated environments for enterprise customers.

- Content moderation and safety filters: Guardrails to reduce disallowed content.

- Red-teaming and model evaluations: Continuous testing for prompt injection, bias, and unsafe behavior.

- Data-use controls: Settings to limit training on your data, retention periods, and deletion mechanisms.

- Compliance posture: Independent assessments like SOC 2 Type II, ISO 27001, and regional controls to support GDPR/CCPA obligations.

These are strong foundations. They do not absolve you from minimizing sensitive inputs and building governance around usage.

Where Risks Actually Come From

As with cloud adoption a decade ago, most AI incidents trace back to human and process gaps, not exotic model exploits. Watch for:

- Data overexposure: Users paste PII, PHI, card data, source code, or contracts without approval or masking.

- Prompt injection: Malicious content embedded in websites, PDFs, or emails that tells an AI agent to ignore rules and exfiltrate data.

- Output leakage: Generated text inadvertently reveals internal context (e.g., copying agent chain-of-thought notes or system prompts into an email).

- Weak identity and access: Shared logins, missing MFA, or poor session controls create audit blind spots and abuse risk.

- Lack of data governance: No inventory of prompts, no classification, no retention policy, and no deletion pipeline.

- Hallucinations and bias: Incorrect outputs can harm users or lead to bad decisions if not verified.

A Practical Checklist

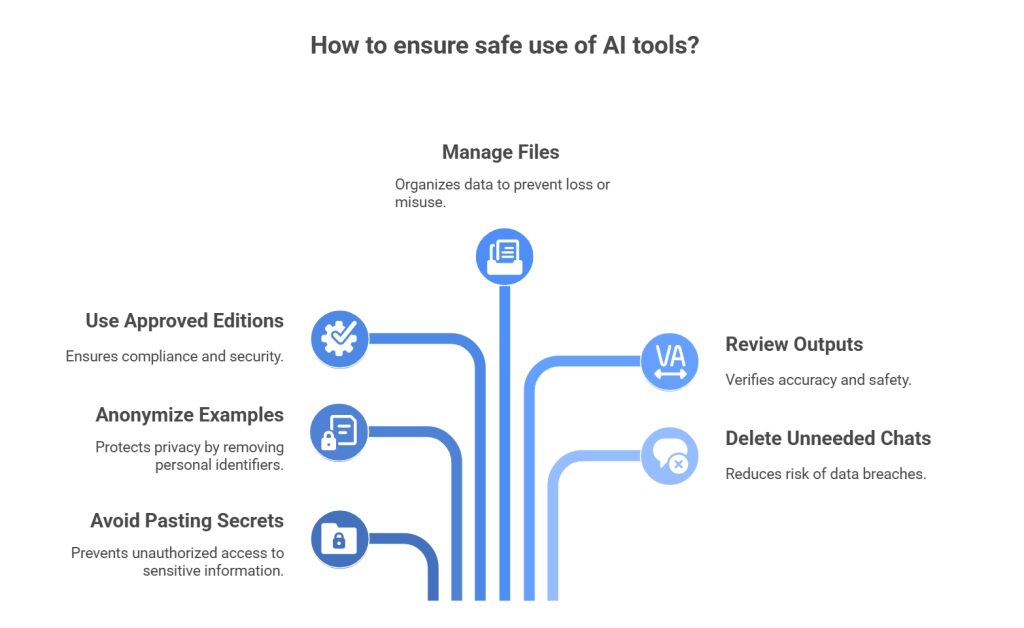

- Never paste secrets: API keys, passwords, card numbers, and medical notes don’t belong in a consumer chat.

- Anonymize examples: Replace names and identifiers with placeholders when seeking help with real cases.

- Use approved editions: If your employer offers an enterprise workspace, use that instead of a personal account.

- Manage files: Remove hidden metadata from documents and images before upload.

- Review outputs: Treat responses as drafts to verify, especially for regulated or high-stakes topics.

- Delete chats you don’t need: Reduce exposure by cleaning up.

Safe Use for Teams and Enterprises

Implement a layered approach:

- Governance

- Establish an AI acceptable-use policy that defines what data types are allowed.

- Classify data and map systems where AI is used.

- Require privacy impact assessments for new AI workflows.

- Identity and Access

- Enforce SSO with MFA and granular RBAC.

- Provision and deprovision via SCIM, not manual account sharing.

- Set session timeouts and monitor high-risk events.

- Data Controls

- Discovery: Find where sensitive data sits and how it flows into prompts.

- Minimization: Redact or tokenize PII, PHI, PCI, and secrets before model calls.

- Retention: Limit storage, define deletion SLAs, and honor data subject rights.

- DLP: Inspect prompts and attachments for sensitive patterns.

- Observability: Log prompts, outputs, and policy decisions for audits.

- Technical Guardrails

- Use allowlists/denylists for tools and connectors.

- Add content filters on both input and output.

- Implement prompt injection defenses and sanitization for untrusted content.

- Sandbox and rate limit agents with tool access.

- Vendor and Edition Selection

- Prefer enterprise editions with contractual data-use restrictions.

- Validate SOC 2/ISO certifications and region residency options.

- Confirm support for data deletion, audit export, and incident response.

Where this gets easier: Protecto centralizes data discovery, masking, and policy enforcement across AI systems so admins don’t chase every team’s custom prompt flow.

Regulatory Considerations: GDPR, CCPA, HIPAA, PCI

- GDPR/CCPA: Define roles (controller vs processor), set lawful bases for processing, offer notices, and support data subject rights. Verify vendor data processing addenda and cross-border transfer mechanisms.

- HIPAA: Only use AI with a Business Associate Agreement and ensure protected health information is handled in compliant environments. Mask or de-identify wherever possible.

- PCI DSS: Don’t put PANs or CVVs in prompts. Tokenize or block patterns at the edge with DLP.

- Records and retention: Build deletion and DSAR workflows that include AI logs and prompts.

Practical tip: Run a privacy impact assessment for AI use cases. Tools like Protecto can map data flows, flag risky prompts, and enforce masking policies to reduce regulatory exposure.

Threat Scenarios and How to Defend

- Prompt Injection via External Content

- Scenario: A research agent reads a webpage that contains hidden instructions to leak internal notes.

- Defend: Sanitize inputs, strip HTML/script artifacts, restrict tool execution, and log agent reasoning. Use pattern-based detectors to spot injection attempts.

- Sensitive Data Paste by Users

- Scenario: A developer pastes a private API key while asking for code help.

- Defend: Client-side DLP and real-time masking. Prevent the prompt from leaving your network. Protecto can block or tokenize secrets before dispatch.

- Output Overflow

- Scenario: The model returns a long response that includes system prompts or file paths.

- Defend: Output filters that redact known sensitive strings and a final reviewer step for high-risk tasks.

- Overbroad Access

- Scenario: Interns have access to the same AI workspace as finance leaders.

- Defend: Separate workspaces, least-privileged roles, and conditional access policies.

- Unlogged Shadow AI

- Scenario: Teams quietly adopt browser chat tools to speed up work.

- Defend: Official AI services with logging, education, and a safe alternative that performs better than shadow tools.

What About On-Prem or Private LLMs?

Running models privately or via a virtual private cloud can reduce data movement and increase control. This helps answer “Is ChatGPT safe” in environments with strict regulations. Tradeoffs include:

- Cost and complexity: GPUs, MLOps pipelines, and continuous patching aren’t trivial.

- Safety parity: You still need guardrails, redaction, and DLP. Private models can leak sensitive info if you feed them risky prompts.

- Latency vs governance: Private deployments can be tuned for your needs, but governance and monitoring become your responsibility.

For many, a hybrid model works best: enterprise SaaS for general productivity, private endpoints for high-sensitivity workloads, and Protecto providing consistent data policies across both.

Handling Files, Images, and Structured Data

Modern AI tools accept uploads: PDFs, spreadsheets, presentations, and images. Treat these as potentially sensitive:

- Strip metadata from files (authors, geolocation, version history).

- Chunk and sanitize large documents; exclude sections with PII or secrets from retrieval pipelines.

- Watermark or tag outputs to prevent accidental downstream reuse.

- Use staging buckets with time-limited access and automatic deletion.

- Policy-based processing: With Protecto, define rules such as “mask emails and phone numbers in any uploaded document before indexing.”

Accuracy, Hallucinations, and Safety

Privacy and security protect data; accuracy protects decisions. Even safe systems that hallucinate can cause real harm. Build in:

- Source grounding: Use retrieval-augmented generation with curated sources for business tasks.

- Confidence cues: Provide citations and uncertainty flags in UI.

- Human-in-the-loop: Require verification for customer-facing or regulated outputs.

- Feedback loops: Capture user ratings and corrections to improve prompts and guardrails.

Accuracy practices won’t answer “Is ChatGPT safe” alone, but they stop safe systems from confidently producing the wrong thing.

Common Myths, Debunked

- “The model remembers everything I type forever.”

The model’s context window only includes the current conversation. Storage beyond that is product-specific and governed by retention settings. - “If it’s encrypted, it’s automatically compliant.”

Encryption is necessary, not sufficient. Compliance needs lawful basis, data minimization, rights handling, and audit evidence. - “Private or open-source models don’t need guardrails.”

They need the same or stronger controls because your team is responsible for everything, including redaction and logging. - “We can’t use AI until every risk is solved.”

You can start safely with a narrow scope, strong guardrails, and continuous improvement.

FAQs

Is ChatGPT safe for kids or students?

For schoolwork, yes, with supervision, content filters, and awareness of accuracy limits. Don’t share personal details or school records.

Can I use ChatGPT with client data?

Only under an enterprise setup with clear contracts, data-use restrictions, and DLP controls. Mask client identifiers.

Does a VPN make my prompts private?

It protects network transit from your device to the VPN. It does not replace account-level controls, retention policies, or vendor-side security.

Can I ask about medical or legal issues?

You can ask for general information, but do not paste personal medical records or privileged legal documents. Always verify with professionals.

Will the model train on my data?

Depends on the edition and settings. Enterprise plans typically restrict model training on your data by default. Verify your configuration and contract.

So, Is ChatGPT Safe?

For everyday personal use with non-sensitive topics, yes, ChatGPT is safe when you avoid sharing private data and review outputs. For businesses, “Is ChatGPT safe” becomes “Have we configured the right edition, applied guardrails, and trained our people?” With a clear policy, enterprise controls, redaction, and monitoring, you can meet tough privacy expectations and still move fast.

If you want an operational shortcut, add Protecto to automate data discovery, masking, and enforcement across your AI workflows.

Protecto: Strengthening AI Privacy and Compliance

If you’re serious about privacy-first AI, Protecto provides a control plane for data flowing into and out of ChatGPT and other models:

- Automated data discovery: Find PII, PHI, PCI, secrets, and sensitive patterns across prompts, files, and knowledge bases.

- Real-time masking and tokenization: Redact or tokenize sensitive elements before the model sees them, with reversible tokens for authorized users.

- Policy engine and DLP: Enforce allowlists/denylists, block risky prompts, and tailor rules by team or data classification.

- Observability and audit trails: Log prompts, outputs, policy decisions, and retention events for compliance audits.

- Developer-friendly: SDKs and gateways integrate with your existing AI stack, RAG pipelines, and agents.

- Cross-vendor coverage: Apply the same privacy controls whether you use ChatGPT, private LLMs, or a hybrid setup.