Healthcare has always been one of the toughest environments for maintaining privacy. Now add AI assistants, retrieval-augmented generation, and multimodal inputs like clinical images and voice notes. Sensitive information travels farther and faster than ever before, and a single leak can cause clinical, legal, and reputational damage. The question for 2025 is simple: how do we harness the advantages of AI without compromising private health data?

This guide covers the most common privacy risks of AI in healthcare, then translates current regulations into working controls. You will find a checklist for pipelines, prompts, and APIs; a short overview of the major 2025 regulatory updates on both sides of the Atlantic; and ways to show auditors and partners real evidence that your guardrails work.

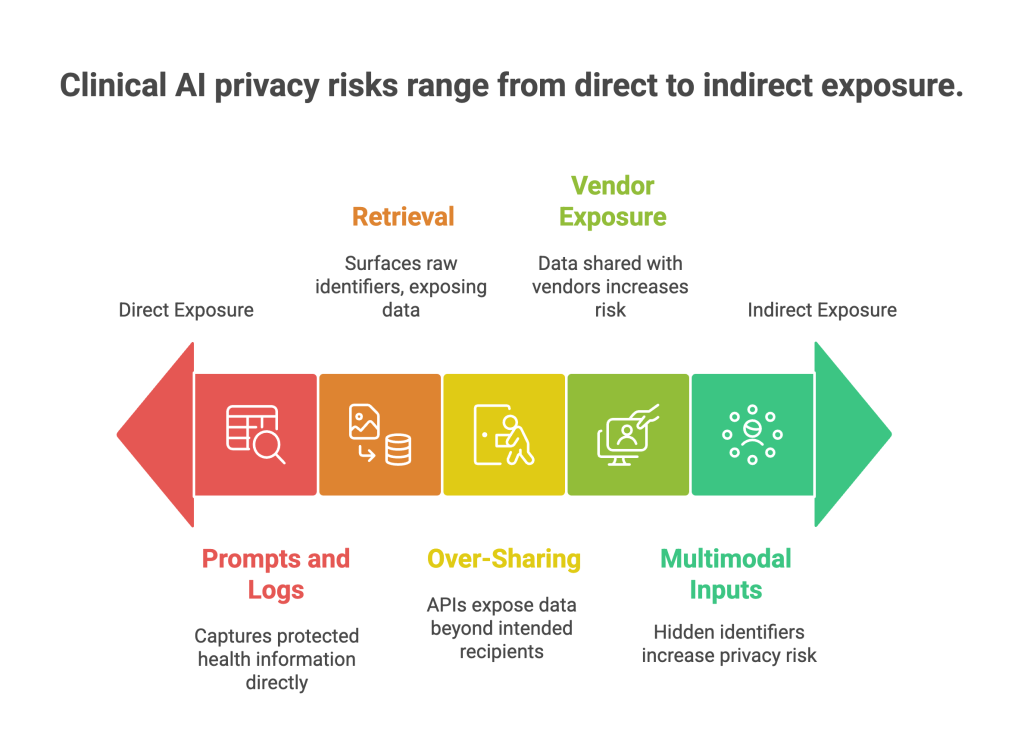

Where privacy risk really shows up in clinical AI

Healthcare organizations rarely face exotic attacks. Most issues come from ordinary work that lacks guardrails.

Prompts and logs that capture PHI

Clinicians and support staff paste encounter notes, addresses, or claim IDs into an assistant. If prompts and logs aren’t filtered, protected health information can end up in model traces or third-party telemetry.
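As a minimal sketch of filtering logs before they leave the application, the example below attaches a redacting filter to Python's standard `logging` module. The three regex patterns and the `PhiRedactingFilter` name are illustrative assumptions, not a complete PHI detector; clinical free text needs much broader, context-aware detection.

```python
import logging
import re

# Illustrative patterns only (assumed formats); real PHI detection needs
# far broader coverage than three regular expressions.
PHI_PATTERNS = [
    (re.compile(r"\bMRN[:\s#]*\d{6,10}\b", re.IGNORECASE), "[MRN]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
]


def scrub(text: str) -> str:
    """Replace every pattern match with a neutral placeholder label."""
    for pattern, label in PHI_PATTERNS:
        text = pattern.sub(label, text)
    return text


class PhiRedactingFilter(logging.Filter):
    """Hypothetical filter that scrubs each record before it is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the final message first so interpolated args are scrubbed too.
        record.msg = scrub(record.getMessage())
        record.args = None
        return True  # keep the record, now redacted
```

Attaching the filter to a handler with `handler.addFilter(PhiRedactingFilter())` applies it to every record that handler emits, which is usually the right place when several loggers share one sink.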

Retrieval that surfaces raw identifiers

Retrieval-augmented generation is great for clinical guidance and policy answers. But if the document index contains unredacted patient names, MRNs, or claim numbers, normal questions can expose raw identifiers.

Over-sharing through APIs

Support or analytics endpoints can return more fields than necessary, including identifiers or location data. Without response schemas and scopes, third-party tools may ingest PHI they don’t need.
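One way to picture a response schema tied to scopes is an allow list of fields per scope, applied just before the response is serialized. The scope names and field names below are hypothetical, and a production service would more likely enforce this in its API framework or gateway.

```python
from typing import Any

# Hypothetical scopes and field names, chosen for illustration.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "support:read": frozenset({"ticket_id", "status", "last_updated"}),
    "billing:read": frozenset({"ticket_id", "status", "claim_token", "amount_due"}),
}


def shape_response(record: dict[str, Any], scope: str) -> dict[str, Any]:
    """Return only the fields the caller's scope permits; deny unknown scopes."""
    try:
        allowed = ALLOWED_FIELDS[scope]
    except KeyError:
        raise PermissionError(f"unknown scope: {scope}") from None
    # Anything not explicitly allowed is dropped, so new fields stay private
    # until someone adds them to a scope on purpose.
    return {key: value for key, value in record.items() if key in allowed}
```

The default-deny shape matters more than the data structure: a field added to the underlying record later does not reach third-party tools unless a scope names it.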

Vendor and cross-border exposure

Cloud services, app SDKs, and model hosts may store data in the wrong region or retain more than your contract allows. Health apps that fall outside HIPAA can still trigger federal breach notification duties in the United States. (Federal Trade Commission)

Multimodal inputs, hidden identifiers

Images, audio, and video can expose faces, voices, on-screen names, and date stamps that text-only filters miss. Without image and audio redaction, sensitive features can slip into vector stores and retrieval pipelines.

The fix is not one giant tool. It is a small set of precise controls placed at high-risk points in the lifecycle.

2025 regulatory insight: what changed and why it matters

Regulators did not invent new privacy principles this year; they raised expectations for proof and scope. Here are the updates that matter most to healthcare teams.

EU AI Act: staged obligations begin

The EU AI Act entered into force in 2024 and applies its obligations in stages, with most taking effect by 2026 and some high-risk requirements extending into 2027. High-risk systems will face documentation, data governance, and oversight requirements, while providers of general-purpose models have new duties beginning in 2025. Planning now for dataset registers, transparency, and post-market monitoring will save rework later. (Digital Strategy)

European Health Data Space (EHDS): in force, long runway

The EHDS was published in March 2025 and is now in force. It aims to standardize primary access and enable secure secondary use of health data across the EU, with key application milestones extending toward 2029. For hospitals, payers, and life sciences, this means stronger rights for patients to access and share records, plus clearer pathways for research using anonymized or pseudonymized data under strict conditions. (Public Health)

United States: HIPAA security update and FTC reach beyond HIPAA

HHS proposed the first major HIPAA Security Rule update in decades to better address modern cyber threats. Expect more specific expectations for risk management, contingency planning, and technical safeguards for ePHI. At the same time, the FTC finalized Health Breach Notification Rule amendments that explicitly cover many digital health apps and connected devices outside HIPAA, expanding notice obligations after breaches of identifiable health data. (HHS.gov)

FDA and ONC: transparency and lifecycle discipline

For AI-enabled medical devices, the FDA continues to steer toward lifecycle management with draft guidance and international Good Machine Learning Practice principles published in 2025. ONC’s HTI-1 final rule expands transparency for decision support features, which influences how vendors explain AI logic and source data inside certified health IT. Together, these push AI toward clear provenance and predictable updates. (U.S. Food and Drug Administration)

China and India: cross-border clarity with stricter expectations

China further clarified cross-border data transfer rules in 2024–2025, easing some transfer pathways while signaling national standards that set technical safeguards for overseas processing. India’s DPDP Act continues to roll out implementing rules affecting consent, rights, and transfers. Multinational providers and medtech vendors must keep residency and transfer logic current in code, not only in contracts. (China Law Translate)

The headline is simple: show your work. Evidence of minimization, purpose limits, residency routing, and working safeguards matters as much as policy.

Translate rules to everyday controls

The best programs don’t start from a legal memo. They start from the places where data enters, moves, and leaves your systems.

| Step | What to implement | Why it matters |
| --- | --- | --- |
| Ingestion | Classify PHI and identifiers; tokenize MRNs, account and claim IDs; block files with secrets | Minimization at the edge keeps raw identifiers out of downstream stores |
| Preprocessing | Redact names, addresses, and keys in notes before indexing or embedding | Retrieval won’t surface raw PHI if the index never stored it |
| LLM gateway | Pre-prompt scanning; output filters for PHI; tool allow lists | Stops leaks in everyday prompts and prevents tool misuse |
| APIs | Enforce response schemas and scopes; rate limits and anomaly detection | Prevents over-sharing and catches slow exfiltration |
| Logging | Redact logs; shorten retention; isolate secure telemetry | Traces often contain PHI; treat them as sensitive by default |
| Lineage and audits | Keep dataset registers, policy versions, and user context for each action | Turns audits into exports and speeds breach investigations |

These are the controls regulators and customers expect to see working, not just written down.

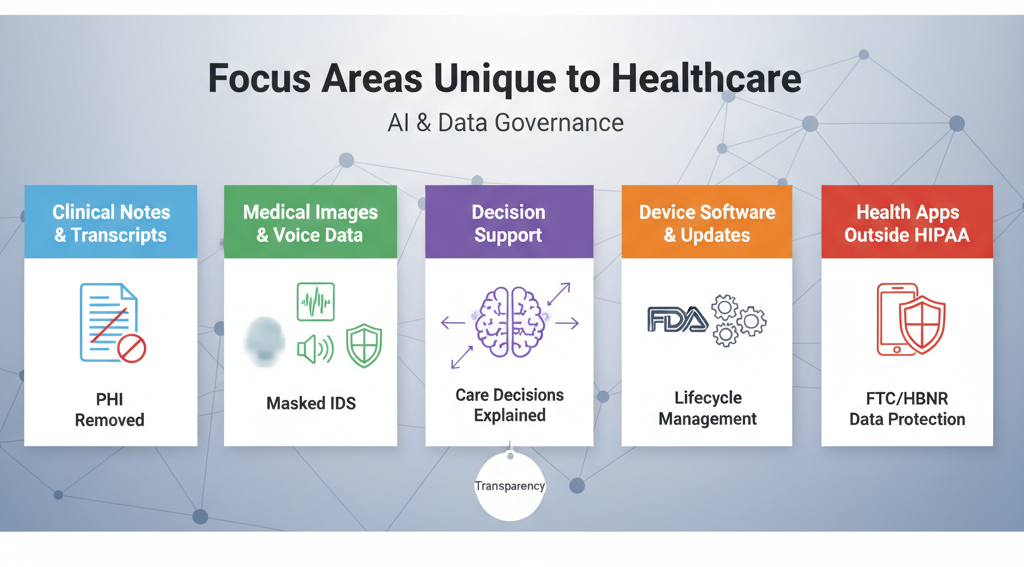

Focus areas unique to healthcare

Healthcare workflows carry special risks. Address these directly.

- Clinical notes, tickets, and transcripts: Free text hides names, dates, and record numbers. Use entity redaction tuned for PHI before indexing or prompting.

- Medical images, waveforms, and voice: Apply face blurring, on-screen identifier masking, and voice de-identification before storage or retrieval.

- Decision support and explanation: When AI affects care, provide plain-language rationales and cite source documents. ONC’s transparency rules push vendors in this direction. (HealthIT.gov)

- Device software and updates: If you build AI-enabled device functions, follow FDA’s lifecycle guidance and GMLP principles to manage updates safely and predictably. (U.S. Food and Drug Administration)

- Health apps outside HIPAA: If you touch personal health information and you are not a HIPAA covered entity or business associate, the FTC’s HBNR may still require breach notices. Confirm coverage and update runbooks. (Federal Trade Commission)

Practical playbook: privacy by design for clinical AI

Start with a small set of moves that prevent most incidents without slowing care teams.

- Tokenize identifiers at ingestion: Deterministic tokenization preserves joins and analytics while removing raw MRNs, claim IDs, and account numbers.

- Redact before indexing and prompting: Run contextual PHI redaction on notes, PDFs, and transcripts before embeddings or LLM calls so retrieval and answers cannot echo raw PHI.

- Enforce schemas and scopes: Lock down API responses to a minimal field set per role and purpose. Rejection and masking should be automatic, not left to manual review.

- Add a policy-aware LLM and API gateway: Filter inputs and outputs for PHI, limit tools to allow-listed actions, and apply residency routing for cross-border rules.

- Keep lineage and policy logs: For every model call and retrieval, record what data was touched, which policy version applied, and why a decision was allowed or denied.

- Monitor vectors, prompts, APIs, and egress: Baseline behavior and alert on enumeration, scraping, or after-hours spikes. Throttle first, then investigate.
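To make the first move in this playbook concrete, here is a minimal sketch of deterministic tokenization. It uses keyed hashing (HMAC-SHA256) as a stand-in for a tokenization service, which is an assumption of this example: unlike a vault-based tokenizer, the mapping cannot be reversed. The `tokenize` function, the `kind` prefixes, and the 24-character truncation are all illustrative choices.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes, kind: str) -> str:
    """Map an identifier to a stable token with keyed HMAC-SHA256.

    The same (key, kind, value) always yields the same token, so joins
    across tables keep working without storing the raw identifier.
    """
    normalized = value.strip().upper()
    # Including the kind keeps an MRN and a claim ID with the same digits apart.
    message = f"{kind}:{normalized}".encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).hexdigest()
    # Truncation is a readability choice; shorter tokens raise collision odds.
    return f"{kind}_{digest[:24]}"
```

For example, `tokenize("00123456", key, "mrn")` returns the same `mrn_...` token on every call with the same key, so two datasets tokenized with that key can still be joined on the token. The key should come from a secrets manager, and rotating it changes every token, so rotation needs a re-tokenization plan.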

Evidence that passes audits and speeds deals

Regulators and enterprise buyers want a short set of proof points.

- Coverage: Percent of critical datasets classified for PHI and masked or tokenized at ingestion.

- Edge safety: Percent of risky prompts blocked or redacted; number of PHI hits removed from outputs.

- Guardrails: Response schema violations per ten thousand API calls; mean time to detect anomalies.

- Rights and incidents: Average time to fulfill access and deletion requests; mean time to respond to privacy incidents.

Publish an internal trust dashboard and keep exportable reports for the EU AI Act, EHDS secondary-use access, HIPAA reviews, and FTC inquiries.
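The arithmetic behind two of these proof points is simple enough to sketch. The counter names and the `PrivacyCounters` type below are hypothetical; the numbers in the usage note are made-up inputs, not results from any deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyCounters:
    """Hypothetical raw counts collected over one reporting period."""

    critical_datasets: int    # datasets in scope for PHI classification
    datasets_protected: int   # of those, classified and masked or tokenized
    api_calls: int            # total API calls observed
    schema_violations: int    # responses that broke the declared schema


def coverage_percent(c: PrivacyCounters) -> float:
    """Percent of critical datasets protected at ingestion."""
    if c.critical_datasets == 0:
        return 0.0
    return 100.0 * c.datasets_protected / c.critical_datasets


def violations_per_10k(c: PrivacyCounters) -> float:
    """Response schema violations per ten thousand API calls."""
    if c.api_calls == 0:
        return 0.0
    return 10_000 * c.schema_violations / c.api_calls
```

With 38 of 40 critical datasets protected and 5 violations across 250,000 calls, these functions give 95 percent coverage and 0.2 violations per ten thousand calls.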

Vendor governance and cross-border reality

Healthcare stacks depend on vendors: cloud, EHR extensions, model hosts, analytics SDKs. Contracts are not enough; verify practice.

- Maintain egress allow lists by destination and region

- Require no-retention modes and documented sub-processors

- Route data based on residency and purpose in code, not just policy

- Rotate keys, review scopes, and test deletion paths quarterly

These steps keep you ahead of evolving state, federal, and international expectations.
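Routing "in code, not just policy" can be as small as a lookup that refuses to guess. The sketch below pairs residency routing with an egress allow list; the region codes, hostnames, and function names are placeholders invented for this example.

```python
from urllib.parse import urlsplit

# Hypothetical endpoints and hosts, for illustration only.
REGION_ENDPOINTS = {
    "eu": "https://llm.eu.example.internal/v1",
    "us": "https://llm.us.example.internal/v1",
}
EGRESS_ALLOW_LIST = {"llm.eu.example.internal", "llm.us.example.internal"}


def egress_allowed(url: str) -> bool:
    """True only when the URL's host is on the egress allow list."""
    return urlsplit(url).hostname in EGRESS_ALLOW_LIST


def route_for(residency: str) -> str:
    """Pick the endpoint for a data residency tag, failing closed."""
    endpoint = REGION_ENDPOINTS.get(residency.lower())
    if endpoint is None:
        # No fallback region: an unknown tag is an error, not a default.
        raise ValueError(f"no approved endpoint for residency {residency!r}")
    if not egress_allowed(endpoint):
        raise PermissionError(f"endpoint not on egress allow list: {endpoint}")
    return endpoint
```

Failing closed is the design choice that matters here: a dataset tagged with a region that has no approved endpoint raises an error instead of quietly falling back to a default host.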

Short case example

A regional health system rolled out a care-coordination assistant. Early pilots revealed PHI leaking into logs and raw identifiers indexed in a knowledge base. The team added tokenization at ingestion for MRNs and claim IDs, PHI redaction before embeddings, and a prompt filter at the LLM gateway. In six weeks, PHI in logs dropped by more than 95 percent, API oversharing violations fell to near zero, and audit prep time for a payer integration shrank from weeks to days. The assistant stayed useful because joins and retrieval still worked on tokenized values.

How Protecto helps

Protecto is a privacy control plane for AI and analytics. It places precise controls where risk begins, adapts enforcement to jurisdiction and purpose in real time, and produces the evidence that healthcare auditors and partners expect.

What Protecto does for healthcare teams

- Automatic discovery and classification: Scan warehouses, lakes, logs, and vector stores to find PHI, PII, biometrics, and secrets. Tag data with purpose and residency so enforcement is automatic.

- Masking, tokenization, and redaction: Apply deterministic tokenization for MRNs, claim and account identifiers, and contextual PHI redaction for clinical notes and transcripts at ingestion and before prompts. Keep analytics and retrieval accurate while removing raw values.

- Prompt and API guardrails: Block risky inputs at the LLM gateway, filter outputs for PHI, and enforce response schemas and scopes for support and billing APIs. Add rate limits and egress allow lists to prevent quiet leaks.

- Jurisdiction-aware policy enforcement: Define purpose limits, allowed attributes, and regional rules once. Protecto applies the right policy per dataset and per call and logs each decision with policy version and context, supporting EU AI Act evidence, EHDS reuse requests, and HIPAA reviews.

- Lineage and audit trails: Trace data from source to transformation to embeddings to model outputs. Answer who saw what and when, speed investigations, and complete access and deletion requests on time.

- Anomaly detection for vectors, prompts, APIs, and egress: Baseline normal behavior and flag enumeration or exfil patterns. Throttle or block in real time to contain risk.

- Developer-friendly integration: SDKs, gateways, and CI checks make privacy part of the build. Pull requests fail on risky schema changes, prompts are redacted automatically, and dashboards report real coverage and response times.