In a bid to quickly join the AI race, enterprises are steadily pouring time and money into adopting it. But when developers and product managers design a new AI tool, security and compliance are often an afterthought.

For industries that don’t handle sensitive data, AI adoption does not necessitate embedding strong privacy controls. However, highly regulated sectors like healthcare, finance, or government defence contractors can’t afford to launch without adhering to regulations.

But first, why should you care?

Data regulation is complicated, with layers of technicalities that range from knowing where to focus to actually implementing controls. Before getting into those layers, it is critical to understand how regulation impacts your business.

Data sensitivity and trust

Healthcare and finance organizations process personal data that people consider highly private. If unauthorised users gain access to such data, it can harm individuals and erode public trust. A privacy-first design helps maintain customer confidence and your organization’s reputation.

Legal compliance and penalties

Regulations like the EU’s GDPR, industry-specific laws like the U.S. HIPAA, and industry standards like PCI-DSS impose strict requirements on how personal data is collected, stored, and used. Non-compliance can lead to hefty fines and legal action. For example, HIPAA violations can incur fines of up to $1.5 million per year for each violation category (a cap that is adjusted for inflation), and GDPR allows fines of up to €20 million or 4% of global annual turnover, whichever is higher.

Preventing breaches and AI risks

Highly regulated sectors are prime targets for cyberattacks and also face unique AI risks. If an AI model is trained on sensitive data without proper safeguards, it could inadvertently memorize and leak that data. Incidents of AI systems exposing personal information because of weak controls continue to be reported.

Core Principles of a Privacy-First AI Stack

Before diving into the stack itself, let’s outline some fundamental privacy-by-design principles that should guide every layer of your AI architecture:

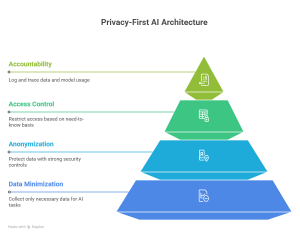

Data Minimization

Collect and use only the data that is necessary for the AI task at hand. This is in keeping with GDPR’s data minimization principle, which discourages data collectors from collecting data “just in case.” The idea is to shrink the attack surface: data you never collect cannot be exposed in a breach.

Anonymization and Pseudonymization

Protect data at rest and in transit with strong controls such as anonymization or tokenization, which render data unreadable to unauthorised users. Data stores or pipelines handling sensitive info should assume hostile conditions and safeguard accordingly.

Access Control (Least Privilege)

Restrict access to data and AI systems on a need-to-know basis. Role-based access control (RBAC) on its own is often too coarse for AI tools, so consider more advanced techniques like context-based access control (CBAC) at every interface, such as APIs, databases, or model endpoints.
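The access-control principle can be sketched as a deny-by-default check that looks at the purpose of a request as well as the caller's role. This is a minimal illustration, not a standard CBAC implementation: the `ALLOWED` policy table, the role and purpose names, and the `AccessRequest` fields are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy table: (role, purpose) -> data classes that may be read.
ALLOWED = {
    ("care_team", "treatment"): {"PHI", "PII"},
    ("data_scientist", "model_training"): {"DEIDENTIFIED"},
    ("support_agent", "billing_query"): {"PII"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str                    # who is asking
    purpose: str                 # why they are asking (the context)
    data_class: str              # sensitivity tag of the requested resource
    via_approved_endpoint: bool  # e.g. the request came through the API gateway


def is_allowed(req: AccessRequest) -> bool:
    """Deny by default; allow only when role, purpose, and channel all match."""
    if not req.via_approved_endpoint:
        return False
    return req.data_class in ALLOWED.get((req.role, req.purpose), set())
```

The same check can sit in front of a database view, an API route, or a model endpoint, so that a data scientist with a valid role still cannot read raw PHI for a training purpose.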

Accountability & Auditability

Log and trace data and model usage throughout the AI lifecycle: who accessed what data, when models were trained or updated, and who is querying the models. This helps to verify that privacy controls are working as intended and to identify lapses.

With these principles in mind, let’s look at each component of an AI stack and discuss how to implement privacy by design at every layer.

Data Ingestion and Collection

Sensitive data has to be protected at every stage of its lifecycle. At the ingestion stage, the goal is to bring data in securely and in a minimized, compliant way. Here’s what that entails:

Secure transfer and ingestion: Bring incoming data into your systems over HTTPS or other encrypted channels for data import/export. For internal data usage, like pulling data from a hospital database, do this over secure networks or VPNs, not the open internet.

Minimize and filter at the source: Filter certain fields immediately at the point of ingestion. For instance, if you’re building an AI model to predict hospital readmissions, you can hash or remove raw identifiers using techniques like tokenization during ingestion because the model doesn’t need them.
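As a rough sketch of filtering at the source, the snippet below drops fields the model does not need and replaces a join key with a keyed hash. The field names, the `PSEUDONYM_KEY` value, and the helper names are assumptions made for illustration; a keyed hash is pseudonymization rather than anonymization, so the output should still be treated as personal data.

```python
import hashlib
import hmac

# Assumption: in practice the key is loaded from a secrets manager, never
# hard-coded. Anyone holding the key can recompute tokens for known IDs.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical field lists for a readmission-prediction pipeline.
DROP_FIELDS = {"name", "address", "phone"}
TOKENIZE_FIELDS = {"patient_id"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash: stable across records so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict) -> dict:
    """Drop fields the model does not need and tokenize join keys."""
    cleaned = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue
        if field in TOKENIZE_FIELDS:
            cleaned[field] = pseudonymize(str(value))
        else:
            cleaned[field] = value
    return cleaned
```

Calling `minimize_record` on each row as it arrives means raw names and identifiers never reach downstream storage.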

Classification and tagging: Implement a system to tag or classify data by sensitivity as it’s ingested. For example, labeling columns or data sources as “PHI”, “PII”, “financial confidential”, etc. helps to enforce the right controls downstream.
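One simple way to picture tagging is a catalog that maps each column to a sensitivity label, with unknown columns held back until someone reviews them. The catalog contents and function names below are hypothetical; real deployments usually combine a catalog like this with automated detection.

```python
# Hypothetical column catalog; labels follow the examples used in the text.
COLUMN_TAGS = {
    "zip_code": "PII",
    "diagnosis_code": "PHI",
    "account_balance": "financial confidential",
    "visit_count": "public",
}

# Columns missing from the catalog get this tag and are excluded by default.
UNCLASSIFIED = "unclassified"


def tag_columns(columns):
    """Attach a sensitivity tag to every incoming column."""
    return {col: COLUMN_TAGS.get(col, UNCLASSIFIED) for col in columns}


def select_columns(tags, allowed_tags):
    """Return only the columns whose tag is cleared for this consumer."""
    return [col for col, tag in tags.items() if tag in allowed_tags]
```

A training job cleared only for `{"public", "PII"}` would then receive `zip_code` and `visit_count`, while `diagnosis_code` and any unreviewed column stay behind.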

Third-party data and agreements: If your AI stack ingests data from third parties or vendors, make sure those sources align contractually and technically with your privacy requirements. Data coming from external APIs should not contain personal info unless you have a clear basis for receiving it. Where possible, use on-premises or in-network data sources, or techniques like federated learning.

Privacy in Model Training and Development

Model training is the heart of the AI stack, but it’s also a stage where there’s potential for privacy leakage if not handled carefully. Here, you’re taking raw data (which may include sensitive personal information) and using it to train machine learning models. A privacy-first approach to training encompasses protecting the data during training, limiting what’s learned or exposed, and governing the whole process.

Key practices for privacy-safe model training:

De-identify or tokenize training data: Before using any sensitive dataset for training, pseudonymize or anonymize wherever possible. Replace user IDs with tokens, strip out names/addresses, and generalize or bin sensitive attributes.

HIPAA recognizes two ways to de-identify PHI: the Safe Harbor method and Expert Determination. If you can train your models on de-identified data and still achieve business objectives, do it. However, note that even de-identified data can sometimes be re-identified with advanced attacks, so additional measures might be needed for high-stakes data.
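The generalization step can be illustrated with a few Safe Harbor style transformations: ages of 90 and above collapse into one bucket, ZIP codes are cut to three digits, and dates are reduced to the year. This is a partial sketch only. Safe Harbor covers 18 identifier categories, the record fields are hypothetical, and `SPARSE_ZIP3` is a placeholder for the list of low-population three-digit ZIP areas that must be replaced with `000`.

```python
from datetime import date

# Placeholder: the real list of three-digit ZIP areas with 20,000 or fewer
# residents has to come from current census data.
SPARSE_ZIP3 = {"999"}


def generalize_age(age: int) -> str:
    """Bin ages by decade; 90 and above share a single bucket."""
    if age >= 90:
        return "90+"
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def generalize_zip(zip_code: str, sparse_zip3=SPARSE_ZIP3) -> str:
    """Keep the first three digits, or 000 for sparsely populated areas."""
    zip3 = zip_code[:3]
    return "000" if zip3 in sparse_zip3 else zip3


def deidentify(record: dict) -> dict:
    """Keep only generalized fields; direct identifiers are not copied over."""
    return {
        "age_band": generalize_age(record["age"]),
        "zip3": generalize_zip(record["zip_code"]),
        "admit_year": record["admit_date"].year,
        "diagnosis_code": record["diagnosis_code"],
    }
```

Because `deidentify` builds a new record from an explicit list of fields, a new identifier column added upstream is excluded by default rather than leaked by default.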

Use privacy-enhancing training techniques: There are advanced techniques and frameworks to ensure the training process itself is privacy-preserving. Two notable ones:

- Federated Learning (FL): Instead of gathering all data in one place, you send the model to the data. For instance, multiple hospitals can collaboratively train an AI model without sharing raw patient data with each other. Data stays on-premises to satisfy confidentiality constraints.

- Differential Privacy (DP): This mathematical framework adds noise to the training process or outputs to limit what an attacker could learn about any individual data point. A common approach, DP-SGD (differentially private stochastic gradient descent), clips each example’s gradient and adds noise during model training to prevent model parameters from encoding too-specific information. The result is a model that is usually somewhat less accurate but much safer to release.
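The clipping and noising at the core of DP-SGD can be sketched in a few lines of plain Python. This shows a single update step under stated assumptions: gradients arrive as one list per example, and the function and parameter names are illustrative. It does not track the privacy budget, so a vetted library such as Opacus or TensorFlow Privacy is the safer choice for real training.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One update: clip each example's gradient, sum, add noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    batch_size = len(clipped)
    # Gaussian noise is scaled to the clipping bound, which caps how much
    # any single example can move the summed gradient.
    sigma = noise_multiplier * max_norm
    noisy_mean = [
        (sum(g[i] for g in clipped) + rng.gauss(0.0, sigma)) / batch_size
        for i in range(len(weights))
    ]
    return [w - lr * g for w, g in zip(weights, noisy_mean)]
```

Passing a seeded `random.Random` instance as `rng` keeps experiments reproducible; a larger `noise_multiplier` gives stronger privacy at the cost of accuracy.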

Secure compute environment: Perform training in a secure, isolated environment to prevent leaks or unauthorized access. For cloud infrastructure, ensure the region and service are compliant. For ultra-sensitive scenarios, use Trusted Execution Environments (TEEs) or enclaves (Intel SGX, AMD SEV, etc.) to train inside a hardware-protected memory region.

Avoid inadvertent data exposure: When developing models, intermediate outputs, debug logs, or visualizations shouldn’t contain raw sensitive data. For example, avoid printing training data samples for debugging, or print sanitized samples instead. Use synthetic or dummy data in tutorials and when sharing examples internally.

Limit human access to training data: Often, model development teams don’t need access to full raw datasets once pipelines are set up. Use tooling to curate datasets and then limit direct human access. Run feature engineering and preprocessing on the server side, and give data scientists a processed dataset that contains no direct identifiers.

Monitor and test for privacy leaks: Conduct pen tests to check for privacy gaps. After training, run tests for memorization. Check whether the model can reproduce sensitive records or whether certain inputs reveal training data, using techniques like membership inference and model inversion tests. If the model leaks information, you may need to adjust training.
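Two of these checks are easy to sketch. The first looks for known sensitive strings (or planted canaries) appearing verbatim in model outputs. The second is a basic loss-threshold membership inference test: if an attacker can tell training records from unseen records just by looking at the model's loss, the model is leaking. Both functions and their inputs are illustrative assumptions, and stronger attacks exist.

```python
def leaked_canaries(model_outputs, canaries):
    """Return the canary strings that appear verbatim in any output."""
    return [c for c in canaries if any(c in out for out in model_outputs)]


def membership_attack_accuracy(member_losses, nonmember_losses):
    """Best accuracy of a 'loss at or below threshold means member' attack.

    With equally sized groups, a value near 0.5 means the attacker does no
    better than guessing; values close to 1.0 suggest memorization.
    """
    labeled = [(loss, True) for loss in member_losses]
    labeled += [(loss, False) for loss in nonmember_losses]
    # Candidate thresholds: every observed loss, plus "nothing is a member".
    thresholds = [float("-inf")] + sorted({loss for loss, _ in labeled})
    best = 0.0
    for threshold in thresholds:
        correct = sum(
            1 for loss, is_member in labeled
            if (loss <= threshold) == is_member
        )
        best = max(best, correct / len(labeled))
    return best
```

Here `member_losses` would be per-record losses on a sample of training data and `nonmember_losses` the same on held-out data from the same distribution.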

Documentation and approval: Maintain documentation of the datasets used, how they were obtained, any anonymization steps, and the privacy measures applied during training (privacy impact assessments (PIAs), differential privacy parameters, etc.). This level of transparency is valuable for internal governance and external regulators.

Privacy in AI Deployment and Inference

In regulated industries, deployment must be handled with AI-specific considerations:

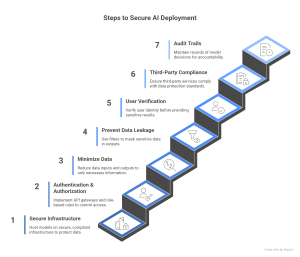

- Secure infrastructure: Irrespective of where your model is deployed, host it on secure, compliant infrastructure. For cloud deployment, use a vetted environment. Many enterprises opt for private cloud or on-premises deployment to avoid data leaving their controlled environment.

- Authentication, authorization, and throttling: If your model is exposed as a service, put it behind an API gateway or authentication layer. Implement role-based rules for who can call the model or get certain details. Use rate limiting and throttling to keep the service stable and to make bulk data extraction harder.

- Minimize data: Send the model only those inputs it truly needs. For example, to predict credit risk, send the zip code and income instead of full name or account number. On the output side, design the response to contain only the information needed for the task. Avoid including sensitive data in logs.

- Prevent data leakage: Generative AI models may regurgitate training data if prompted a certain way. To mitigate this, implement output filters that scan outputs for PII like names, ID numbers, etc., and mask it. For instance, when summarizing documents containing PHI, add a post-processing step to detect and redact it.

- User verification and context: If the AI generates sensitive results, the end user should be authorized to see them. For example, a banking AI assistant discussing account details should verify the customer’s identity before divulging account-specific info. This touches on the wider application design: the AI may correctly analyze data, but you still need access controls on how the output is delivered.

- Third-party and API usage: If your deployed model calls external APIs or services for processing, scrutinize those calls. For instance, under HIPAA, if your deployment gives a third party access to PHI, that party must sign a business associate agreement (BAA) and apply HIPAA safeguards.

- Audit trails for model decisions: In finance and healthcare, it’s a good practice to retain a record of model decisions and the data that went in. Log each prediction along with an anonymized identifier and timestamp to serve as an audit trail.
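The output-filter and audit-trail items above can be sketched together: redact obvious PII patterns from a model response, then write an audit record keyed by a pseudonymous subject ID and a timestamp. The regular expressions cover only a few formats and are purely illustrative (production PII/PHI detection needs much broader coverage), and `AUDIT_KEY` and the record fields are assumptions.

```python
import hashlib
import hmac
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Assumption: loaded from a secrets manager in a real deployment.
AUDIT_KEY = b"replace-with-a-secret-from-your-vault"


def redact(text: str) -> str:
    """Replace each matched pattern with a bracketed label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def audit_record(user_id: str, model_version: str, prediction, now=None) -> str:
    """Build a JSON audit line with a pseudonymous subject and UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    digest = hmac.new(AUDIT_KEY, user_id.encode("utf-8"), hashlib.sha256)
    return json.dumps(
        {
            "timestamp": now.isoformat(),
            "subject": digest.hexdigest()[:16],
            "model_version": model_version,
            "prediction": redact(str(prediction)),
        },
        sort_keys=True,
    )
```

Running `redact` on the prediction before it is logged keeps the audit trail itself from becoming a new store of raw identifiers.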

Monitoring, Logging, and Auditing

A privacy-first AI stack isn’t “set and forget.” Ongoing monitoring and auditing are crucial to maintain compliance, detect issues, and analyse the system’s behavior. Best practices include:

- Comprehensive logging: Keep access logs, training logs, and inference request logs, and treat them as sensitive data. Store logs securely with access control, and don’t log raw sensitive values unnecessarily. Aim for logs that provide accountability without becoming a new trove of PII.

- Real-time monitoring: Utilize security monitoring tools to detect abnormal patterns like unusual data access, strange model usage, or system anomalies. For models, monitor the distribution of inputs and outputs to detect drift or strange spikes that could indicate misuse.

- Periodic privacy reviews: Schedule regular audits of your AI pipeline to verify that datasets due for deletion have been deleted and that access control lists are up to date, and test the system as an adversary would. Also, revisit your PIA or risk assessment if something changes.

- Audit trails and reporting: Generate reports that demonstrate compliance to auditors or regulators. If using cloud services, leverage any built-in compliance reports or logging. In finance, maintaining evidence of controls can be crucial during audits. Some frameworks recommend creating “privacy reports” or adding the details of privacy measures to model documentation.

- Monitor model performance: Monitor whether the model’s performance is degrading or whether it is behaving unexpectedly, such as generating outputs that carry privacy risks. This lets you retrain or recalibrate models in a controlled, compliant manner instead of rushing after a failure. Also monitor fairness and bias metrics if applicable.

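- Incident response plan: Develop a clear incident response plan. For instance, GDPR requires notifying the supervisory authority within 72 hours of becoming aware of a personal data breach, unless the breach is unlikely to put individuals at risk, and HIPAA requires breaches affecting 500 or more individuals to be reported to the U.S. Department of Health and Human Services no later than 60 days after discovery. From a stack perspective, this means having the ability to quickly shut off or patch parts of the pipeline. PCI suggests disabling AI systems if required as a safety measure.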

- Continuous improvement: Use monitoring findings to improve the system. Frequent permission denials for a certain data store may suggest that unauthorized users are trying to access data, or that legitimate users lack permissions they need. Either tighten controls or adjust access if appropriate. A privacy-first stack should evolve as new tools and best practices emerge.
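As one small example of the monitoring described above, the sketch below counts permission denials per caller and data store in a sliding time window and signals when a threshold is reached. The class name, thresholds, and the idea of passing timestamps in as plain seconds are assumptions for illustration; a real deployment would usually express this as a rule in its security monitoring tool.

```python
from collections import defaultdict, deque


class DenialMonitor:
    """Flags a caller once denials within a sliding window reach a threshold."""

    def __init__(self, threshold=5, window_seconds=300):
        self.threshold = threshold
        self.window = window_seconds
        # One queue of denial timestamps per (principal, resource) pair.
        self._events = defaultdict(deque)

    def record_denial(self, principal, resource, timestamp):
        """Record one denied request; return True if an alert should fire.

        Assumes timestamps (in seconds) arrive in non-decreasing order.
        """
        events = self._events[(principal, resource)]
        events.append(timestamp)
        # Discard denials that have fallen out of the window.
        while events and timestamp - events[0] > self.window:
            events.popleft()
        return len(events) >= self.threshold
```

An alert here is only a prompt for review: the follow-up may be tightening controls or granting a missing permission, as the last bullet notes.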

Industry Spotlights: Healthcare and Finance Compliance

Let’s now look at how these general practices map to the specific requirements of healthcare and financial services, two of the most heavily regulated domains for data privacy.

Healthcare (e.g. HIPAA, Health Data Privacy)

Healthcare AI must comply with laws like the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. Key considerations include:

- HIPAA’s Safeguards: Under HIPAA, any AI system handling PHI must implement administrative, physical, and technical safeguards. The Privacy Rule limits uses and disclosures of PHI, and the Security Rule mandates controls for electronic PHI. Encrypt health data in transit and at rest, use access controls, log all accesses, and adhere to the “minimum necessary” standard.

- Business Associate Agreements (BAA): If your AI solution gives third parties access to PHI, have a BAA in place to obligate the vendor to follow HIPAA rules. For example, if you use a cloud ML service to train on PHI, the vendor should sign a BAA committing it to handle that data under HIPAA safeguards.

- De-identification and research use: HIPAA distinguishes between identified PHI and de-identified data. Patient data that has been properly de-identified is no longer regulated by HIPAA and can be used for research or AI development, although other laws or contracts may still apply. Use classic anonymization or newer techniques like smart tokenization that maintain statistical properties without real patient info.

- Audit and patient rights: HIPAA gives patients the right to access their records and to receive an accounting of disclosures. For clinical decisions, the data and logic have to be available for review. Internally, HIPAA-covered entities must maintain a log of who viewed or modified PHI.

Finance (e.g. PCI-DSS, GDPR, Financial Regulations)

Privacy and security are tightly linked in finance, and there are multiple frameworks to consider:

- PCI-DSS for payment data: If your AI processes payment card data, it must comply with PCI DSS. Concretely for our AI stack: Requirements 3 and 4 call for protecting stored card data and encrypting it in transit over open networks. Requirement 7 restricts access by business need to know (least privilege), and Requirement 10 requires audit logs, which our logging and monitoring cover. PCI DSS also prohibits storing sensitive authentication data after authorization. A practical tip is to use tokenization: replace actual card numbers with tokens.

- Need-to-know and segregation: Financial institutions are big on segregation of duties and data. This means the AI team might not be allowed to access raw production data directly; instead, they might get a sanitized export. Financial regulators also expect firms to control model risk – part of that is ensuring models don’t introduce new security risks. Showing that your AI training and deployment environments are isolated and secure will satisfy a lot of concerns in audits.

- GDPR and global privacy laws: GDPR requires data protection by design and by default (Article 25), which means building protection principles into the system from the start and into its default settings. Additionally, GDPR’s consent and lawful basis rules mean you need a legal justification to use personal data for AI. Techniques like smart tokenization help ensure that personal data is not seen by unauthorized users.

- Auditability and model governance: Financial regulators focus on auditability of model behavior. If an AI model makes decisions, you need a clear record of what data went in, which model version was used, and why that decision was reasonable. Also, implementing access controls means you can show regulators that only authorized, qualified personnel can deploy or change a model. For third-party AI services or tools, vendor security is important.
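The tokenization tip from the PCI-DSS item can be pictured with a toy token vault: card numbers are swapped for random tokens, and only the vault can map a token back. Everything here is an illustrative assumption, including the `TokenVault` class, the token format, and the choice to keep the last four digits. A real vault is a hardened, access-controlled service that stays inside PCI scope, not an in-memory dictionary.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a hardened token vault (illustration only)."""

    def __init__(self):
        self._token_to_pan = {}
        self._pan_to_token = {}

    def tokenize(self, pan: str) -> str:
        """Return a random token for a card number, reusing any existing one."""
        digits = "".join(ch for ch in pan if ch.isdigit())
        if digits in self._pan_to_token:
            return self._pan_to_token[digits]
        # The random part carries no information about the card number;
        # the last four digits are kept only for display and support use.
        token = f"tok_{secrets.token_hex(8)}_{digits[-4:]}"
        self._token_to_pan[token] = digits
        self._pan_to_token[digits] = token
        return token

    def detokenize(self, token: str) -> str:
        """Map a token back to the card number; restrict callers tightly."""
        return self._token_to_pan[token]
```

Feature pipelines and models then work with tokens only, which keeps raw card numbers out of training data, prompts, and logs.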

Enabling Innovation with Privacy by Design

Building a privacy-first AI stack might seem like a formidable task – layering safeguards and meeting strict rules. But it’s important to recognize that these practices are what allow highly regulated industries to leverage AI safely and confidently.

Rather than seeing privacy regulations as a barrier, think of them as a blueprint for responsible innovation.

Protecto helps organizations build a Privacy-First AI Stack for highly regulated industries like healthcare and finance by securing sensitive data across the AI lifecycle.

- It automatically detects and masks PII or PHI, applies context-aware access control, and prevents data leaks during model training or inference.

- Protecto ensures compliance with regulations such as HIPAA, GDPR, and PCI-DSS through real-time policy enforcement, tokenization, and detailed audit logs.

- Its privacy intelligence scans reveal risk exposures early, while integrations with AI pipelines (LLMs, APIs, cloud data) enable safe data use without sacrificing model performance or business agility.