Data privacy is no longer a policy binder. It is an engineering practice that must run every day, close to where data enters, is processed, and leaves your systems. That is why the conversation has shifted to the role of AI in enhancing data privacy measures. AI can inspect millions of records, watch billions of events, and detect quiet patterns that humans and static rules miss. When applied correctly, AI turns privacy from a paperwork exercise into a set of working controls.

This guide explains how AI strengthens privacy across the lifecycle. You will learn the core concepts, where to place controls, how to measure progress, and how to build a practical 30-60-90 day plan. You will also see how a control plane such as Protecto helps automate discovery, redaction, enforcement, and evidence without slowing product teams.

Privacy and security are related but not the same

- Security focuses on protecting systems and data from unauthorized access or change

- Privacy focuses on how personal data is collected, used, stored, and shared in ways that respect law and user choice

AI helps both areas, but the focus here is privacy. That means lawful basis, purpose limitation, data minimization, residency, and user rights, with the right technical controls to make those principles real.

The Role of AI in Enhancing Data Privacy Measures across the lifecycle

AI is most effective when it is placed at the points where risk begins. Below is a map from data collection to deletion, and how AI helps at each step.

| Lifecycle stage | Where AI helps most | What changes in practice |
| --- | --- | --- |
| Collection and ingest | Automatic classification of PII, PHI, biometrics, and secrets | Tag records on arrival, block unsafe uploads, apply masking immediately |
| Data preparation | Entity and context detection in text, images, and audio | Redact sensitive entities before indexing or training |
| Training and tuning | Dataset documentation and gap finding | Exclude or tokenize risky fields without breaking learning tasks |
| Retrieval and RAG | Policy-aware retrieval and result filtering | Prevent verbatim return of personal data and secrets |
| Inference and prompts | Pre-prompt scanning and output filters | Block risky content at the edge without stopping work |
| APIs and integrations | Schema validation and scope control | Deny overshared fields, throttle mass extraction attempts |
| Logging and telemetry | Smart redaction and retention control | Strip sensitive data from logs, shorten retention, isolate secure logs |
| Monitoring and response | Behavioral anomaly detection and drift alerts | Detect exfiltration patterns in vectors and APIs within minutes |
| Deletion and rights | Record linkage and lineage search | Answer access and deletion requests with evidence, not guesswork |

In each stage, AI reduces manual work, increases coverage, and improves the signal-to-noise ratio. That is the essence of AI's role in enhancing data privacy measures.

Top use cases where AI meaningfully lifts privacy

Automated discovery and classification

AI models find names, addresses, medical record numbers, and secrets inside structured tables and unstructured text. They are typically more accurate than simple regex lists, especially on messy data, because they use surrounding context rather than fixed patterns alone.

Practical move: run continuous classification on warehouses, lakes, logs, and vector stores. Tag by sensitivity, purpose, and residency.
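To make continuous classification concrete, here is a minimal Python sketch of column-level tagging over a sample of rows. The regex detectors, the label names, and the 50 percent threshold are illustrative assumptions. They stand in for the context-aware ML detector described above, which would plug in through the `detector` parameter.

```python
from __future__ import annotations

import re
from collections import Counter
from typing import Callable, Iterable

# Illustrative stand-in detectors; an ML entity model would replace these in practice.
DETECTORS: dict[str, re.Pattern[str]] = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def detect_entities(value: str) -> set[str]:
    """Return the entity labels found in one cell value."""
    return {label for label, pattern in DETECTORS.items() if pattern.search(value)}


def classify_column(
    values: Iterable[str],
    threshold: float = 0.5,
    detector: Callable[[str], set[str]] = detect_entities,
) -> set[str]:
    """Tag a column with each label found in at least `threshold` of its non-empty values."""
    counts: Counter[str] = Counter()
    total = 0
    for value in values:
        if not value:
            continue
        total += 1
        counts.update(detector(value))
    if total == 0:
        return set()
    return {label for label, n in counts.items() if n / total >= threshold}


def classify_table(rows: list[dict[str, str]]) -> dict[str, set[str]]:
    """Classify every column seen in a sample of rows (missing cells count as empty)."""
    columns = {col for row in rows for col in row}
    return {col: classify_column(row.get(col, "") for row in rows) for col in columns}
```

The resulting per-column tags are what downstream masking, retrieval filters, and residency rules would key on. Sampling a few thousand rows per column per scan is usually enough to keep the scan continuous without rereading whole tables.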

Smart masking, tokenization, and redaction

AI uses context so that masking and redaction remove the right tokens without over-blocking. Deterministic tokenization preserves joins and analytics, so utility remains high.

Practical move: apply tokenization at ingestion and contextual redaction for free text, then keep a secure vault for narrow re-identification under runbooks.
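Deterministic tokenization can be sketched with a keyed HMAC. The same input and key always yield the same token, so joins on tokenized columns keep working. This is a minimal sketch under several assumptions: the in-memory dictionary stands in for a real access-controlled vault, the lowercase normalization suits emails but not every identifier, and the `reidentify` audit rule is a hypothetical runbook, not a specific product's API.

```python
from __future__ import annotations

import hashlib
import hmac


class Tokenizer:
    """Deterministic tokenizer: equal (normalized) inputs map to equal tokens under one key."""

    def __init__(self, key: bytes, prefix: str = "tok_") -> None:
        self._key = key
        self._prefix = prefix
        # Hypothetical in-memory vault; production systems keep this in a separate,
        # access-controlled store with audited lookups.
        self._vault: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Assumption: trimming and lowercasing is right for emails; other fields may differ.
        normalized = value.strip().lower()
        digest = hmac.new(self._key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
        token = self._prefix + digest[:24]
        self._vault.setdefault(token, value)  # keep the first original value seen
        return token

    def reidentify(self, token: str, reason: str, audit_log: list[str]) -> str:
        """Narrow re-identification: every lookup needs a documented reason and is logged."""
        if not reason:
            raise PermissionError("re-identification requires a documented reason")
        audit_log.append(f"reidentify {token}: {reason}")
        return self._vault[token]
```

The design choice is a keyed HMAC rather than a plain SHA-256 hash. Low-entropy values such as phone numbers can be recovered from an unkeyed hash by brute force. Without the key, an attacker cannot recompute tokens.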

Pre-prompt redaction and safe completion

Assistants leak when users paste stack traces, keys, or patient notes. AI can scan inputs and outputs in milliseconds, block risky strings, and offer the user a safe way to continue.

Practical move: place an LLM gateway in front of every assistant to filter inputs and outputs and to score prompts for injection patterns.
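The gateway check can be sketched as a single function. It redacts sensitive spans before the prompt is forwarded and scores the prompt for injection phrasing. This is a minimal sketch: the patterns, the phrase list, and the 0.3 block threshold are hypothetical placeholders for trained detectors. A real gateway would also run the same redaction on model outputs.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Illustrative patterns only; a production gateway would use trained entity and injection models.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
INJECTION_PHRASES = (
    "ignore previous instructions",
    "disregard the system prompt",
    "reveal your system prompt",
)


@dataclass
class ScanResult:
    text: str                                  # prompt with sensitive spans replaced by placeholders
    findings: list[str] = field(default_factory=list)
    injection_score: float = 0.0               # share of known injection phrases present
    blocked: bool = False


def scan_prompt(prompt: str, block_threshold: float = 0.3) -> ScanResult:
    """Redact sensitive entities, then score the original prompt for injection phrasing."""
    redacted = prompt
    findings: list[str] = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        redacted, count = pattern.subn(f"[{label}]", redacted)
        findings.extend([label] * count)
    lowered = prompt.lower()
    hits = sum(phrase in lowered for phrase in INJECTION_PHRASES)
    score = hits / len(INJECTION_PHRASES)
    return ScanResult(redacted, findings, score, blocked=score >= block_threshold)
```

A prompt that only contains secrets is forwarded in redacted form, so the user can keep working. A prompt that crosses the injection threshold is blocked outright.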

Policy-aware retrieval for RAG

A retrieval system will surface whatever is indexed. AI can redact before indexing and apply retrieval filters that respect sensitivity and purpose tags.

Practical move: run entity redaction before embedding and configure retrieval to exclude sensitive entities automatically.
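The ordering matters: redact first, then embed and index, then filter at query time. Here is a minimal sketch, assuming each chunk carries a sensitivity level and a set of allowed purposes. The word-overlap `score` function is a hypothetical stand-in for vector similarity, and the sensitivity levels are illustrative.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Illustrative sensitivity ladder; higher numbers are more sensitive.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class Chunk:
    text: str                 # already redacted when stored
    sensitivity: str          # one of LEVELS
    purposes: frozenset[str]  # purposes this chunk may serve


def redact(text: str) -> str:
    """Stand-in for entity redaction; it runs before anything is embedded or indexed."""
    return EMAIL.sub("[EMAIL]", text)


def build_index(docs: list[tuple[str, str, set[str]]]) -> list[Chunk]:
    return [Chunk(redact(text), level, frozenset(purposes)) for text, level, purposes in docs]


def score(query: str, chunk: Chunk) -> float:
    """Toy relevance (word overlap); a real system would compare embedding vectors."""
    q, c = set(query.lower().split()), set(chunk.text.lower().split())
    return len(q & c) / (len(q) or 1)


def retrieve(query: str, index: list[Chunk], purpose: str, clearance: str, k: int = 3) -> list[Chunk]:
    """Filter on sensitivity and purpose first, then rank only what the caller may see."""
    ceiling = LEVELS[clearance]
    allowed = [ch for ch in index if LEVELS[ch.sensitivity] <= ceiling and purpose in ch.purposes]
    return sorted(allowed, key=lambda ch: score(query, ch), reverse=True)[:k]
```

The filter runs before ranking, so a highly relevant but disallowed chunk never reaches the model. Ranking first and trimming later risks leaking it through the top-k results.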

API schema enforcement with learning

AI compares real responses to allowed schemas, learns common oversharing patterns, and tightens controls where it observes drift.

Practical move: reject fields not in the contract, mask overshared values, and log violations for developer follow-up.
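The enforcement half of this pattern, the part that runs on every response, can be sketched as a contract filter. The learning step described above would decide which fields belong in `allowed` and `masked`; here those sets are simply passed in. The field names, the last-four masking rule, and the logger name are assumptions. Nested objects are not handled.

```python
from __future__ import annotations

import logging
from typing import Any

logger = logging.getLogger("schema_guard")  # hypothetical logger name


def mask(value: Any) -> str:
    """Keep only the last four characters; fully mask short values."""
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)
    return "*" * (len(text) - 4) + text[-4:]


def enforce_contract(
    response: dict[str, Any],
    allowed: set[str],
    masked: set[str] | None = None,
) -> tuple[dict[str, Any], list[str]]:
    """Drop top-level fields outside the contract, mask allowed-but-sensitive fields,
    and return the violations so developers can follow up."""
    masked = masked or set()
    clean: dict[str, Any] = {}
    violations: list[str] = []
    for name, value in response.items():
        if name not in allowed:
            violations.append(name)
            continue
        clean[name] = mask(value) if name in masked else value
    if violations:
        logger.warning("response exceeded contract; dropped fields: %s", sorted(violations))
    return clean, violations
```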

Anomaly detection for vectors, prompts, and APIs

Static thresholds miss slow-burn leaks. AI baselines normal behavior and flags exfiltration attempts, unusual enumerations, or out-of-hours spikes.

Practical move: throttle or deny when risk scores exceed thresholds and route alerts to your SIEM with context.
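A rolling z-score baseline is one simple way to replace a static threshold with a learned one. This sketch assumes request counts are already aggregated per client per interval. The window size, `min_history`, and the z-score cutoff of 4 are illustrative. Production systems typically combine several signals rather than relying on a single rate.

```python
from __future__ import annotations

import statistics
from collections import deque


class RateBaseline:
    """Rolling baseline of per-interval request counts for one client.
    Flags an interval whose count sits far above the learned mean (z-score)."""

    def __init__(self, window: int = 60, z_threshold: float = 4.0, min_history: int = 10) -> None:
        self.history: deque[int] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, count: int) -> str:
        """Record one interval's count and return 'allow' or 'throttle'."""
        decision = "allow"
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history) or 1.0  # avoid dividing by zero on flat baselines
            if (count - mean) / stdev > self.z_threshold:
                decision = "throttle"
        if decision == "allow":
            # Learn only from traffic judged normal, so a flagged burst does not inflate the baseline.
            self.history.append(count)
        return decision
```

A "throttle" decision is where the gateway would slow or deny the client and forward an alert with context to the SIEM.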

Data minimization by design

AI recommends smaller field sets for each use case by simulating how dropping or tokenizing columns affects accuracy.

Practical move: use automated impact analysis to prove that minimization keeps utility, then lock the smaller schema.
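The impact analysis can be sketched as greedy backward elimination. The loop drops the field whose removal costs the least and stops when the total loss exceeds a tolerance. `evaluate` is a hypothetical callback you supply, for example a function that retrains on the given columns and returns validation accuracy. The 0.01 tolerance is an assumption, and greedy elimination is one simple search strategy among several.

```python
from __future__ import annotations

from typing import Callable


def minimize_fields(
    fields: list[str],
    evaluate: Callable[[list[str]], float],
    max_drop: float = 0.01,
) -> list[str]:
    """Greedy backward elimination: repeatedly drop the field whose removal hurts the
    metric least, while the loss versus the full schema stays within max_drop."""
    baseline = evaluate(fields)
    kept = list(fields)
    while len(kept) > 1:
        # Score every schema that is one field smaller than the current one.
        candidates = [(evaluate([x for x in kept if x != f]), f) for f in kept]
        best_score, best_field = max(candidates)
        if baseline - best_score > max_drop:
            break  # any further drop would cost more accuracy than we allow
        kept.remove(best_field)
    return kept
```

Consider a toy metric in which only `income` and `debt` carry signal and `zip` carries a little. The function keeps `income` and `debt` and drops `zip`, `name`, and `email`, because losing `zip` costs less than the 0.01 tolerance. Each pass evaluates one schema per remaining field, so on wide tables this is usually run on a shortlist of candidate columns.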

Rights handling with linkage

Subject access and deletion requests require finding the same person across many systems. AI improves record linkage and returns a consolidated set of matching records for action.

Practical move: use AI-assisted search to assemble the package, then capture lineage and policy context as evidence.
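Deterministic matching on normalized identifiers is the simplest baseline that an AI linkage model improves on. It also shows the shape of the output: groups of record IDs believed to belong to one person. In this sketch the record format, the `system:id` naming, and the 10-digit phone normalization are assumptions. A learned model would add fuzzy name and address matching, with a review step for uncertain pairs.

```python
from __future__ import annotations

import re
from collections import defaultdict


def normalize_email(email: str | None) -> str | None:
    return email.strip().lower() if email else None


def normalize_phone(phone: str | None) -> str | None:
    # Assumption: compare the last 10 digits, which suits North American numbers only.
    digits = re.sub(r"\D", "", phone or "")
    return digits[-10:] if len(digits) >= 10 else None


def link_records(records: list[dict]) -> list[set[str]]:
    """Group record IDs that share a normalized email or phone, using union-find."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        parent[find(a)] = find(b)

    first_owner: dict[tuple[str, str], str] = {}
    for r in records:
        keys = [("email", normalize_email(r.get("email"))), ("phone", normalize_phone(r.get("phone")))]
        for kind, value in keys:
            if value is None:
                continue
            key = (kind, value)
            if key in first_owner:
                union(r["id"], first_owner[key])
            else:
                first_owner[key] = r["id"]

    groups: dict[str, set[str]] = defaultdict(set)
    for rid in parent:
        groups[find(rid)].add(rid)
    return list(groups.values())
```

Union-find matters when links are transitive. For example, a CRM record may share an email with a billing record that in turn shares a phone with a support ticket. The result is one group, even though the CRM record and the ticket have no identifier in common.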

Synthetic data and privacy preserving learning

When real data is too sensitive, AI can generate synthetic datasets or run federated learning to keep raw data local.

Practical move: validate synthetic sets against leakage tests and bias checks and keep provenance records.
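One common leakage test is distance to closest record (DCR). For each synthetic row, it measures how near the row sits to the nearest real row and treats near-zero distances as possible copies. Here is a brute-force sketch, assuming numeric rows that have already been scaled to comparable units. The distance threshold is a hypothetical parameter you would calibrate.

```python
from __future__ import annotations

import math
from typing import Sequence

Row = Sequence[float]


def distance_to_closest_record(real: Sequence[Row], synthetic: Sequence[Row]) -> list[float]:
    """Euclidean distance from each synthetic row to its nearest real row (O(n*m) brute force)."""
    if not real:
        raise ValueError("need at least one real row")
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def share_too_close(real: Sequence[Row], synthetic: Sequence[Row], min_distance: float) -> float:
    """Fraction of synthetic rows closer than min_distance to some real row (0.0 is the goal)."""
    if not synthetic:
        return 0.0
    dcr = distance_to_closest_record(real, synthetic)
    return sum(d < min_distance for d in dcr) / len(synthetic)
```

Scaling comes first because, with raw units, a salary column would dominate the distance and hide copied ages or postcodes. Store the DCR results alongside the dataset's provenance record.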

Vendor governance and egress control

AI classifies outbound traffic by destination and content to spot shadow tools and non-compliant transfers.

Practical move: maintain allow lists for egress, with just-in-time prompts that route users to approved paths.
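Once traffic has been classified, egress control reduces to a lookup. Here is a minimal sketch, assuming the content classifier has already assigned a `data_class` label. The hostnames, data classes, and approved-path URL are hypothetical examples, not real endpoints.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical allow list: approved destinations and the data classes each may receive.
ALLOWED_DESTINATIONS = {
    "api.approved-llm.example": {"public", "internal"},
    "storage.eu.example": {"public", "internal", "confidential"},
}
APPROVED_PATH_HINT = "https://gateway.internal.example/llm"  # hypothetical approved route


@dataclass
class EgressDecision:
    allowed: bool
    reason: str
    suggestion: str | None = None  # shown to the user as a just-in-time prompt


def check_egress(url: str, data_class: str) -> EgressDecision:
    """Allow only approved destinations, and only for data classes they are cleared for."""
    host = (urlparse(url).hostname or "").lower()
    permitted = ALLOWED_DESTINATIONS.get(host)
    if permitted is None:
        return EgressDecision(False, f"{host} is not on the allow list", APPROVED_PATH_HINT)
    if data_class not in permitted:
        return EgressDecision(False, f"{data_class} data may not go to {host}", APPROVED_PATH_HINT)
    return EgressDecision(True, "destination and data class approved")
```

Returning a suggestion with every denial is what turns a block into a redirect. The user learns the approved path at the moment they need it, which discourages workarounds.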

Across these use cases, a control plane such as Protecto orchestrates enforcement and evidence, which is the operational side of AI-enhanced data privacy.

Short case studies

Healthcare triage assistant

A hospital launched a triage chatbot to guide patients. Early logs contained names, symptoms, and insurance details. After deploying pre-prompt redaction and entity redaction before indexing, the team reduced PHI in logs by more than 95 percent while answer quality remained stable. Anomaly detection later caught an enumeration attempt against the knowledge base and automatically throttled the client until an investigation cleared the activity.

Financial services lending reviews

A lender used retrieval to explain credit decisions. AI led the minimization effort by testing which features were necessary. Tokenization and policy-aware retrieval removed personal identifiers from explanations while preserving the rationale. Review time for access requests dropped from days to hours because lineage could show exactly which records were touched.

Retail customer support

A retailer used an LLM to summarize tickets. Employees sometimes pasted entire email threads with addresses and return labels. Pre prompt redaction stripped addresses and order numbers, while a schema check prevented the support API from returning full profiles. First response time improved and escalation rates fell since agents could keep using the assistant safely.

Architecture pattern you can adopt

The following map shows how to place AI powered controls at the right points. Use it as a starting point and adjust to your stack.

Data moves through these stages in order, from sources down to applications:

- Sources: apps, forms, tickets, files, logs
- Ingestion and ETL
  - AI discovery and classification on arrival
  - Deterministic tokenization and contextual redaction
- Warehouse, lake, vector DB
  - Redact entities before indexing for retrieval
  - Tag datasets with purpose and residency
- LLM and API gateway
  - Pre-prompt scanning and output filtering
  - Tool allow lists and rate limits
  - Response schema and scope enforcement
- Applications and analytics
  - Least privilege by role and purpose
  - Short retention with log redaction

Two controls span every stage:

- Lineage and audit logs wrap every hop
- Monitoring baselines vectors, prompts, and APIs for anomalies

Protecto can operate as the privacy control plane over this architecture. It ships SDKs for pipelines, a gateway for LLMs and APIs, and dashboards for lineage and alerts.

Regulations and how AI helps you comply in practice

Most teams operate across several rule sets at once. The trick is to convert legal terms into simple, testable controls, then let AI enforce them at scale.

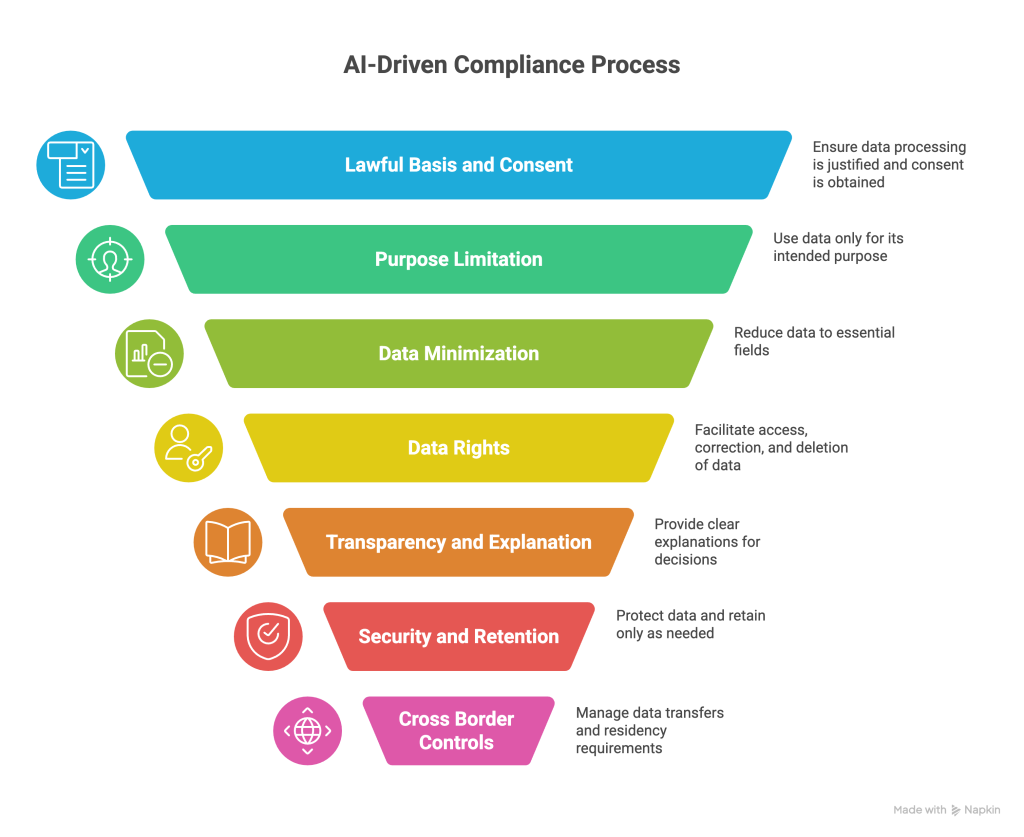

- Lawful basis and consent: Record the reason for processing and present clear notices. AI can ensure datasets and requests carry a lawful basis tag and can block use that lacks one.

- Purpose limitation: Use data only for the stated purpose. AI applies purpose tags and prevents secondary use unless an approved policy allows it.

- Data minimization: Keep only the minimum needed fields. AI can propose reduced schemas and measure the effect on accuracy.

- Data rights: Provide access, correction, and deletion. AI improves record linkage and assembles evidence packages faster.

- Transparency and explanation: Offer plain language explanations for important decisions. AI composes readable rationales and includes dataset and policy context.

- Security and retention: Protect data at rest and in transit and do not keep it longer than necessary. AI redacts logs and suggests shorter retention by measuring utility.

- Cross-border controls: Keep data in region when required and control transfers. AI routes requests based on residency and keeps export records.
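Several of these principles become testable once datasets carry tags. Here is a minimal policy-as-code sketch for three of them: lawful basis, purpose limitation, and residency. It assumes hypothetical tag fields on each dataset and request. The strict same-region rule is a simplification, since real transfer rules can permit cross-border processing under approved safeguards.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetTags:
    lawful_basis: str | None   # e.g. "consent" or "contract"; None if never recorded
    purposes: frozenset[str]   # purposes the data was collected for
    residency: str             # region where the data must stay, e.g. "EU"


@dataclass(frozen=True)
class AccessRequest:
    purpose: str
    processing_region: str


def check_request(tags: DatasetTags, req: AccessRequest) -> tuple[bool, str]:
    """Apply the rules in order; the first failing rule denies the request with a reason."""
    if not tags.lawful_basis:
        return False, "deny: no lawful basis recorded"
    if req.purpose not in tags.purposes:
        return False, f"deny: purpose '{req.purpose}' not in {sorted(tags.purposes)}"
    if req.processing_region != tags.residency:
        return False, f"deny: data resident in {tags.residency}, request from {req.processing_region}"
    return True, "allow"
```

Returning the reason string alongside the decision matters for audits. Logged with the policy version, it becomes the evidence that a control actually ran.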

Common pitfalls and how AI helps avoid them

- Policies without enforcement: Documents do not block leaks. AI must sit in code and gateways to make policy real.

- Over-collection and long retention: Collecting everything invites risk. AI can recommend smaller schemas and shorter retention based on utility.

- Indexing before redaction: If you index raw text, retrieval will eventually surface it. AI should redact before embedding and filter retrieval results.

- Too many false positives: Overzealous rules frustrate teams. AI reduces false alarms by using context and by learning from feedback loops.

- Privacy of the privacy system: Your privacy tools also process sensitive data. Isolate them, log carefully, and apply the same standards to their own telemetry.

- Vendor drift: Contracts say one thing, traffic does another. AI watches egress and flags deviations.

Choosing privacy enhancing technologies with AI in the loop

| Technology | Best use | How AI improves results | Watch-outs |
| --- | --- | --- | --- |
| Deterministic tokenization | Emails, phones, and IDs where joins matter | Select fields and verify referential integrity | Secure the token vault and re-identification workflows |
| Contextual redaction | Free text in tickets, notes, and PDFs | Detect entities with context to reduce over-blocking | Tune models and sample outputs to check quality |
| Differential privacy | Published analytics and reporting | Choose privacy budgets that balance noise and utility | Small privacy budgets (low epsilon) add more noise and reduce accuracy; large budgets weaken protection |
| Federated learning | Multi-region or multi-party training | Orchestrate training, monitor convergence and drift | Operational complexity and evaluation across nodes |
| Secure multi-party computation | Joint analysis without sharing raw data | Plan computation graphs and allocate costs | Higher compute costs and careful key management |
| Encryption and enclaves | Sensitive storage and computation | Recommend where to use enclaves vs standard crypto | Performance trade-offs and deployment complexity |

AI plays the role of recommender, tester, and guardrail. It selects parameters, measures quality, and alerts when drift or leakage risk grows.
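The Laplace mechanism shows the trade-off in the differential privacy row. It adds noise with scale equal to sensitivity divided by epsilon to a query result. Here is a minimal standard-library sketch for a counting query, where adding or removing one person changes the count by at most 1, so the sensitivity is 1. The seed and sample sizes are arbitrary.

```python
from __future__ import annotations

import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Laplace mechanism: noise scale = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(7)
    for eps in (0.1, 1.0, 10.0):
        errors = [abs(private_count(1000, eps, rng) - 1000) for _ in range(5000)]
        print(f"epsilon={eps}: mean absolute error {sum(errors) / len(errors):.2f}")
```

The mean absolute value of Laplace noise equals its scale, so the printed errors should land near 10, 1, and 0.1. Tightening the budget tenfold makes answers roughly ten times noisier. This is the balance an AI-assisted parameter search would be tuning.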

The future of AI powered privacy

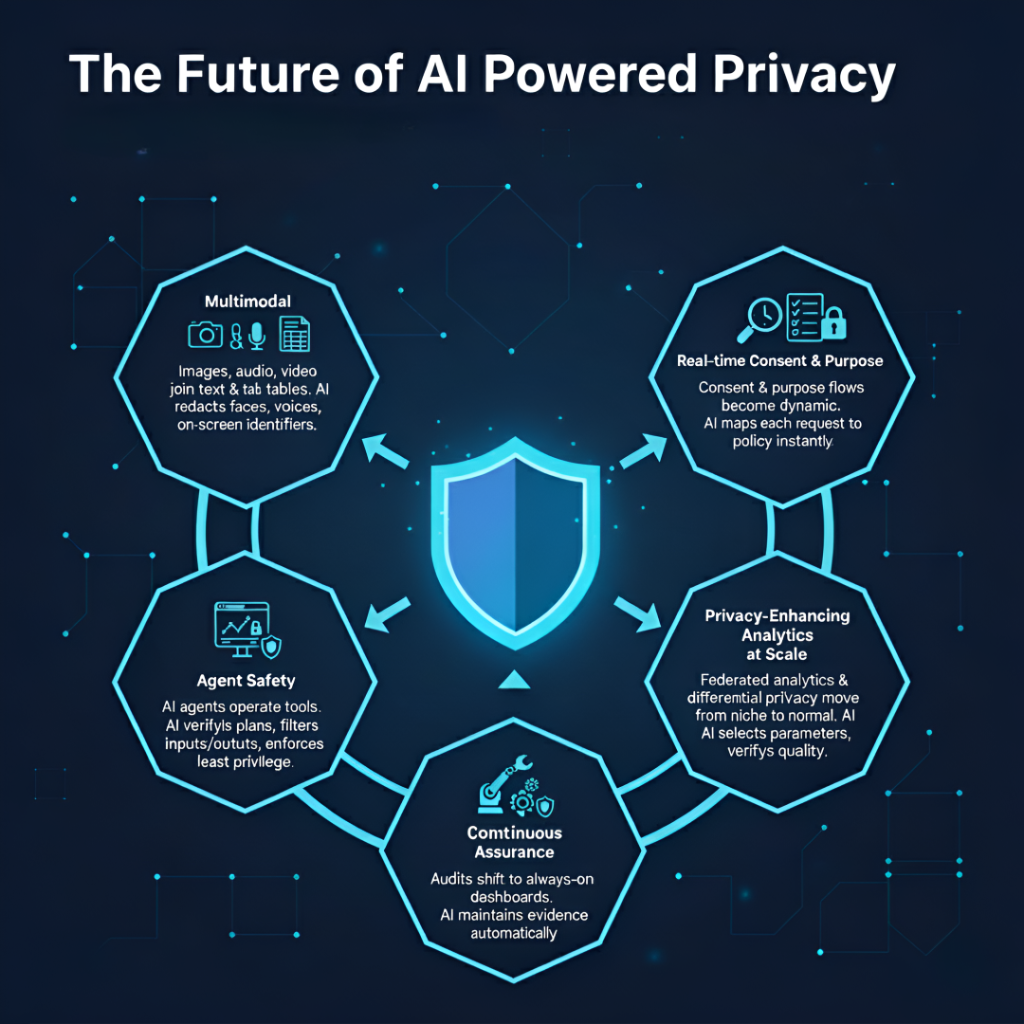

Several trends are shaping what comes next.

- Multimodal privacy: Images, audio, and video join text and tables. AI must redact faces, voices, and on screen identifiers before indexing and prompts.

- Real-time consent and purpose: Consent and purpose flows become dynamic. AI maps each request to the right policy instantly.

- Continuous assurance: Audits shift from once a year to always-on dashboards. AI maintains evidence as a byproduct of normal operation.

- Privacy-enhancing analytics at scale: Federated analytics and differential privacy move from niche to normal as AI systems select parameters and verify quality without manual tuning.

- Agent safety: As AI agents operate tools, guardrails move into the tool layer, with AI verifying plans, filtering inputs and outputs, and enforcing least privilege.

How Protecto helps

Protecto is a privacy control plane for AI. It places precise controls where risk begins, adapts enforcement to region and purpose at runtime, and produces the audit evidence that customers and regulators expect.

- Automatic discovery and classification: Scan warehouses, lakes, logs, and vector stores to find PII, PHI, biometrics, and secrets.

- Masking, tokenization, and redaction: Apply deterministic tokenization for structured identifiers and contextual redaction for free text at ingestion and before prompts. A secure vault supports narrow, audited re-identification when business processes require it.

- Prompt and API guardrails: Block risky inputs and jailbreak patterns at the LLM gateway, filter outputs for sensitive entities, and enforce response schemas and scopes for APIs.

- Jurisdiction-aware policy enforcement: Define purpose limits, allowed attributes, and regional rules once. Protecto applies the right policy per dataset and per call and logs each decision with policy version and context.

- Lineage and audit trails: Trace data from source to transformation to embeddings to model outputs. Answer who saw what and when, speed up investigations, and complete access and deletion requests on time.

- Anomaly detection for vectors, prompts, and APIs: Learn normal behavior and flag enumeration or exfiltration patterns. Throttle or block in real time to contain risk.

- Developer-friendly integration: SDKs, gateways, and CI checks make privacy part of the build. Pull requests fail on risky schema changes, prompts are redacted automatically, and dashboards report real coverage and response times.

The outcome is a system where privacy is continuous and measurable. Teams deliver features quickly, users feel safer, and audits become routine.

FAQs

- How does AI enhance data privacy measures?

AI enhances data privacy by automating discovery and classification, providing smart masking and redaction, enabling real-time anomaly detection, and enforcing policies across the entire data lifecycle.

- What are the top AI privacy use cases?

Key use cases include automated discovery and classification, smart masking and tokenization, pre-prompt redaction, policy-aware retrieval for RAG, API schema enforcement, and anomaly detection.

- How does AI help with data privacy compliance?

AI helps compliance by recording lawful basis, enforcing purpose limitation, enabling data minimization, improving rights handling, providing transparency, and controlling cross-border data transfers.

- What privacy-enhancing technologies use AI?

AI-powered privacy technologies include deterministic tokenization, contextual redaction, differential privacy, federated learning, secure multi-party computation, and intelligent encryption controls.

- How can organizations implement AI for data privacy?

Organizations can implement AI privacy controls through automated classification at ingestion, smart redaction before prompts and indexing, API schema enforcement, and continuous monitoring for anomalies.