Protecting sensitive information has never been more critical, especially in today’s AI-driven world. As businesses increasingly leverage AI and advanced analytics, safeguarding Personally Identifiable Information (PII) and Patient Health Information (PHI) is paramount. Data masking has become a cornerstone strategy, allowing organizations to securely manage and analyze data while significantly reducing the risks of exposure and misuse.

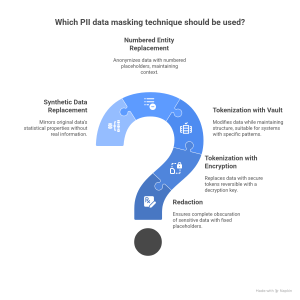

In this blog, we’ll explore the top 5 PII data masking techniques, delving into their benefits, limitations, and best use cases. These techniques are presented not in order of popularity.

Let’s discover how these methods can help you maintain data privacy without sacrificing usability.

Redaction

Description: Redaction involves removing or replacing sensitive data with a fixed placeholder, ensuring that the PII is completely obscured.

Example:

Original: “John Smith, SSN: 123-45-6789”

Masked: “John Smith, SSN: [REDACTED]”

Pros:

- No risk for PII leak

- Irreversible, making it highly secure for use cases where original data is not required

Cons:

- Removes data utility.

- Not suitable for scenarios that require realistic or analyzable data.

Best Use Case:

- Compliance reports or permanent removal of sensitive information from shared documents.

Tokenization Using Encryption

Description: Tokenization replaces sensitive data with non-sensitive tokens, which act as placeholders and can be mapped back to the original data through a secure token vault. Encryption using a cryptographic algorithm can be used to generate tokens for sensitive data, reversible only with a decryption key.

Example:

Original: “Jane Doe, CC: 4111-1111-1111-1111”

Masked: “xcalzmpqwrt234dkl9 wssjllw92opalc, CC: xyz12jksdjwqeuoo45” (encrypted)

Pros:

- High security; Tokenization protects sensitive data at rest and in transit. Reversible, allowing for safe retrieval of original data when needed.

Cons:

- Using encryption for masking requires key management, which can be complex.

Best Use Case:

- Payment processing, healthcare, or financial systems require reversible masking for transactions or audits.

- AI/ML model training and large-scale analytics without risking data privacy.

Tokenization Using Vault

Description: This technique modifies data while maintaining its structure and format, making it suitable for systems that rely on specific patterns.

Gives flexibility in generating tokens. Masked value can retain the original text’s format, length and type.

Example:

Original SSN: “123-45-6789”

Masked SSN: “987-65-4321”

Pros:

- Maintains data realism for testing or analysis.

- Works seamlessly with systems requiring structured data.

- Alternative to generating synthetic data, production data can be anonymized using

Cons:

- Potentially can be reverse-engineered the original PII if patterns are predictable for shorter-length tokens.

- Tokenization relies on a token vault, adding operational complexity.

Best Use Case:

- Testing environments, especially in applications requiring realistic but anonymized datasets.

- AI/ML model training and large-scale analytics without risking data privacy.

Numbered Entity Replacement

Description: Replaces sensitive data with numbered placeholders or generic labels, anonymizing the information while maintaining context.

Example:

Original: “Alice Johnson, Client ID: 987654”

Masked: “Client 1, Client ID: ID-001”

Pros:

- Simple for anonymizing repetitive entities.

- Preserves relational and contextual data within session or limited context

Cons:

- Context may be lost in complex datasets especially when dealing with data masking across multiple sessions.

- Limited utility for detailed analysis or machine learning models.

Best Use Case:

- Anonymizing customer datasets for presentations, reports, or shared logs.

Synthetic Data Replacement

Description: Replaces original PII with synthetic data that mirrors the statistical properties and format of the original data while containing no real information.

Example:

Original: “Alice Johnson, ZIP: 90210”

Masked: “Emma Brown, ZIP: 70011”

Pros:

- Allows data analysis and sharing without privacy risks.

- Preserves patterns and correlations useful for analytics and AI training.

- Useful in workflows which involves users since synthetic data is easy to understand e.g. human verification

Cons:

- Complex to generate realistic synthetic data for diverse use cases.

- May create misinformation if synthetic data (fake data) coincides with another actual person

- Can be reverse engineering through some brute force efforts

Best Use Case:

- Workflows where human validators and testers are involved. Synthetic data is simple to understand for human readers.

Conclusion

Selecting the right data masking technique requires a balance between security, usability, and system compatibility.

Protecto specializes in advanced masking solutions that preserve data utility and security for enterprises.

Which technique do you rely on most? Let us know in the comments!