AI is now part of customer service, product design, operations, and decision making. That reach brings real benefits, and it also surfaces personal and sensitive data in new places. It raises the question: how do we ship useful AI while protecting people and complying with the law?

This guide helps you understand AI and data privacy as one practice through core principles, common pitfalls, practical controls, and a step-by-step plan to build privacy into your AI stack from the start.

What understanding AI and data privacy really means

Data privacy in AI is the set of rules, designs, and technical steps that protect people when AI systems process information, from collection to deletion. It applies to structured data, unstructured data, and also metadata, because small details such as timestamps and device identifiers can re-identify a person when combined.

At any moment, a responsible team can answer four questions with evidence:

- What data do we have?

- Why do we have it?

- Who can access it?

- How do we protect it today and delete it on request?

Key concepts and simple definitions

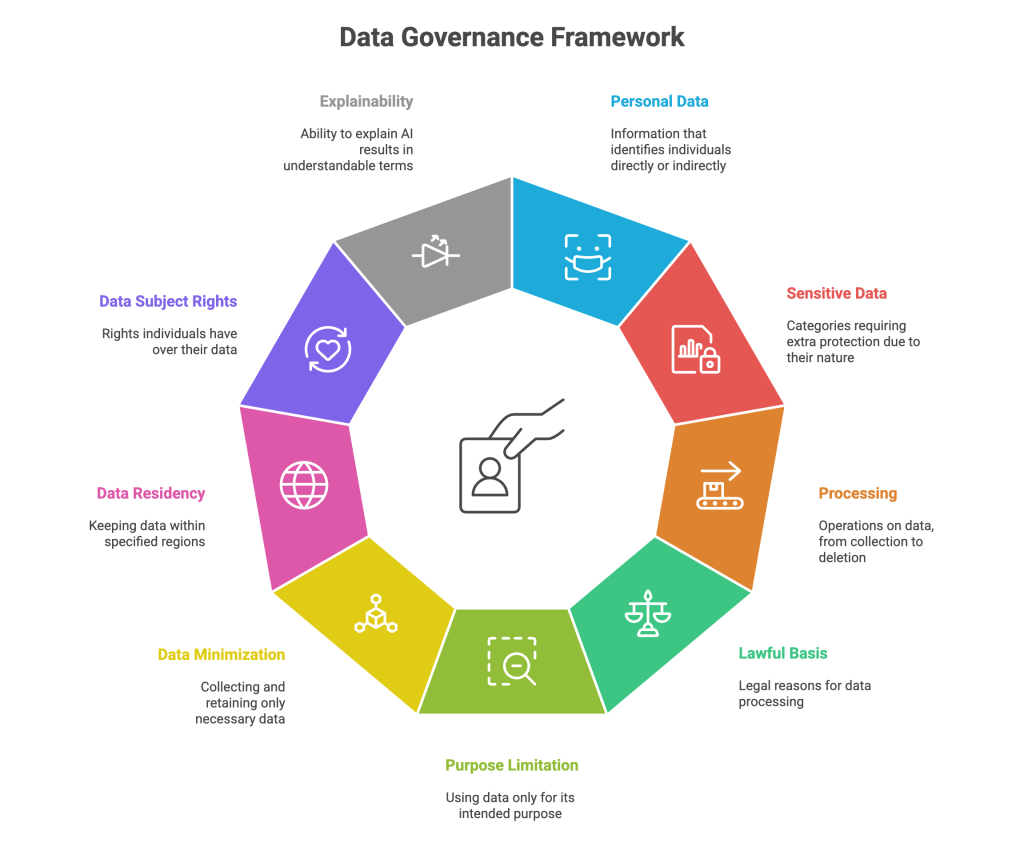

Use these short definitions across product, data, engineering, security, and legal so everyone speaks the same language.

- Personal data: Any information that can identify a person directly or when linked with other information

- Sensitive data: Categories that require extra protection, for example health data, biometrics, precise location, financial credentials, or information about children

- Processing: Any operation on data, including collection, storage, analysis, sharing, and deletion

- Lawful basis: The legal reason for processing, such as consent, contract, legal obligation, vital interest, public task, or legitimate interest, depending on the jurisdiction

- Purpose limitation: Use data only for the reason it was collected, or get approval for new uses

- Data minimization: Collect the smallest amount of data needed and keep it only as long as necessary

- Data residency: Keeping data in a defined region when law or contract requires it

- Data subject rights: Rights such as access, correction, deletion, and opt out that people can exercise over their data

- Explainability: The ability to explain how an AI system reached a result in language a person can understand

Types of data and why sensitivity matters

Not all data carries the same risk. Some categories are more sensitive because harm is greater if they leak or are misused.

| Data type | Examples | Why it is sensitive | Typical protection |
| --- | --- | --- | --- |
| Identifiers | Name, email, phone, device ID | Enables direct contact or linking | Tokenization, masking, least privilege access |
| Financial | Card numbers, account IDs, transactions | Enables fraud and theft | Strong encryption, tokenization, scope limits |
| Health and PHI | Diagnoses, prescriptions, lab results | Legal duties and risk of harm | Redaction, strict RBAC, audit logs |
| Biometrics | Face, voice, fingerprints, gait | Cannot be changed if leaked | Explicit consent, storage limits |
| Location | GPS trails, home address, check-ins | Reveals routines and sensitive places | Aggregation, anonymization, residency controls |
| Children’s data | Accounts and usage by minors | Extra protections by law | Parental consent, minimization |
| Metadata | Timestamps, IPs, referrers, device info | Can re-identify people when combined | Minimize capture, aggregate, limit retention |
| Secrets | Keys, tokens, passwords | Direct path to compromise | Secret managers, detection, redaction |

A short rule of thumb: if a data point can be tied back to a person or a small group, treat it as sensitive until proven otherwise.

The AI data lifecycle and where risk concentrates

Privacy risk clusters at a handful of steps in the lifecycle. Placing the right control at each step prevents most incidents while keeping work moving.

| Lifecycle stage | What happens | Common risks | Practical controls |
| --- | --- | --- | --- |
| Collection | Forms, logs, tickets, transcripts | Overcollection, hidden PII, unclear purpose | Minimize at capture, classify on ingest, mask sensitive fields |
| Preprocessing | Clean, label, split, embed | Leaking identifiers into features or vector stores | Tokenize identifiers, redact entities in text, keep a catalog |
| Training and tuning | Train base and fine-tune models | Weak legal basis, poor documentation | Dataset registers, lineage, exclusion of sensitive fields |
| Retrieval and RAG | Index files, query knowledge bases | Indexing unredacted PII, verbatim returns | Redact before indexing, retrieval filters that respect policy |
| Inference | Prompts and tool calls | Prompt injection, oversharing in outputs | Pre-prompt scanning, output filters, tool allow lists |
| APIs and integrations | Expose model results | Oversharing in responses, cross-border egress | Response schemas, scopes, region routing |
| Logging and telemetry | Traces and events | Secrets or PII in logs, long retention | Log redaction, short retention, separate secure stores |
| Monitoring and retraining | Drift checks and updates | Reintroducing sensitive data over time | Continuous classification, CI checks, reviews on change |

Four moves address most issues quickly.

- Tokenize or mask at ingestion

- Redact before prompts and before indexing for retrieval

- Enforce response schemas and scopes on APIs

- Keep a complete lineage that ties data, models, and outputs together
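
As an illustration of the first move, here is a minimal sketch of deterministic tokenization at ingestion using a keyed hash from the Python standard library. The key, the `tok_` prefix, and the function names are assumptions made for this example. It is also a vaultless, one-way variant: narrow, audited re-identification would need a separate protected mapping, which this sketch does not include.

```python
import hashlib
import hmac

# Assumption: in practice this key would be loaded from a secret manager.
TOKEN_KEY = b"example-key-do-not-use-in-production"


def tokenize(value: str, field: str, key: bytes = TOKEN_KEY) -> str:
    """Return a repeatable token for an identifier.

    The field name is mixed into the MAC input so the same raw value in two
    different fields does not produce the same token.
    """
    normalized = value.strip().lower()
    message = f"{field}:{normalized}".encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).hexdigest()
    return f"tok_{field}_{digest[:16]}"


def tokenize_record(record: dict, identifier_fields: set) -> dict:
    """Replace identifier fields with tokens and leave other fields untouched."""
    return {
        name: tokenize(str(value), name) if name in identifier_fields else value
        for name, value in record.items()
    }


if __name__ == "__main__":
    row = {"email": "Ana@Example.com", "plan": "pro"}
    print(tokenize_record(row, {"email"}))
```

Because the same input always yields the same token, joins and counts on the tokenized column still work while the raw identifier stays out of downstream systems.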

A privacy platform such as Protecto can automate these moves in one place. It discovers sensitive fields, applies tokenization and redaction in pipelines, filters prompts at the edge, enforces API schemas, and records audit trails.

Common pitfalls and straight fixes

Oversharing through prompts and logs

Teams paste stack traces, keys, client emails, or patient notes into chatbots. Logs then copy the same content into files and dashboards.

Fix with pre-prompt scanning that blocks secrets and sensitive entities, enterprise LLM tenants with no retention, and log redaction with short retention.
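
A rough sketch of pre-prompt scanning and log redaction might look like the following. The patterns are deliberately small and illustrative (an AWS access key ID shape, a PEM private key header, and email addresses), and the names `scan_prompt`, `BlockedPromptError`, and `RedactingFilter` are hypothetical. A real detector needs much broader coverage.

```python
import logging
import re

# Illustrative patterns only; real detectors need broader coverage and testing.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                    # AWS access key ID shape
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
]
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class BlockedPromptError(ValueError):
    """Raised when a prompt contains a value that should never be sent."""


def redact(text: str) -> str:
    """Replace email addresses with a placeholder."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def scan_prompt(prompt: str) -> str:
    """Block prompts that contain secrets, then redact sensitive entities."""
    for pattern in SECRET_PATTERNS:
        if pattern.search(prompt):
            raise BlockedPromptError("prompt contains a secret-like value")
    return redact(prompt)


class RedactingFilter(logging.Filter):
    """Redact the formatted log message before any handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # the message is already formatted
        return True


if __name__ == "__main__":
    logger = logging.getLogger("app")
    logger.addFilter(RedactingFilter())
    logging.basicConfig(level=logging.INFO)
    logger.info("reset requested by %s", "ana@example.com")
    print(scan_prompt("Summarize the ticket from ana@example.com"))
```

Sharing one `redact` function between the prompt path and the logging path keeps the two from drifting apart, which is the failure mode described above.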

Retrieval without redaction

Retrieval-augmented generation (RAG) is powerful and risky. If a document index contains raw personal data, a normal question can surface it later.

Fix with entity redaction before indexing, retrieval filters that exclude sensitive entities, and approvals for new sources.
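
One way to picture this fix is a two-step sketch: redact entities before a chunk is indexed, record which entity types were found, and filter retrieved chunks by approved source and blocked entity type. The `MRN-` format, the `IndexedChunk` type, and the function names are assumptions for illustration, not a real product API.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns; the MRN format here is hypothetical.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "MRN": re.compile(r"\bMRN-\d{6}\b"),
}


@dataclass
class IndexedChunk:
    text: str
    source: str
    entity_types: set = field(default_factory=set)  # entity types that were redacted


def redact_for_index(text: str, source: str) -> IndexedChunk:
    """Redact known entities and remember which types were present."""
    found = set()
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.add(label)
    return IndexedChunk(text=text, source=source, entity_types=found)


def filter_results(chunks, approved_sources, blocked_entity_types=frozenset()):
    """Keep chunks from approved sources that carry no blocked entity type."""
    return [
        chunk
        for chunk in chunks
        if chunk.source in approved_sources
        and not (chunk.entity_types & blocked_entity_types)
    ]


if __name__ == "__main__":
    chunk = redact_for_index("Patient MRN-123456, contact jo@clinic.example", "tickets")
    print(chunk.text, chunk.entity_types)
```

Even after redaction, keeping the entity types as metadata lets the retrieval layer exclude whole chunks for callers who should not see that kind of record at all.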

Extra fields in API responses

Endpoints return more than needed, for example, a full profile when a masked subset would be enough.

Fix with response schema enforcement, strict scopes by role and purpose, and rate limits with anomaly detection to catch scraping or exfiltration.
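
Response schema enforcement can be sketched as a per-scope allow list of fields applied just before a response leaves the service. The scope names, field names, and `shape_response` helper below are hypothetical.

```python
# Hypothetical scopes and field allow lists, for illustration only.
RESPONSE_SCHEMAS = {
    "support:read": {"customer_id", "plan", "email_masked"},
    "billing:read": {"customer_id", "plan", "last_invoice_total"},
}


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the domain."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def shape_response(profile: dict, scope: str) -> dict:
    """Return only the fields the scope allows; unknown scopes are rejected."""
    allowed = RESPONSE_SCHEMAS.get(scope)
    if allowed is None:
        raise PermissionError(f"unknown scope: {scope}")
    enriched = dict(profile)
    if "email" in profile:
        enriched["email_masked"] = mask_email(profile["email"])
    return {name: value for name, value in enriched.items() if name in allowed}


if __name__ == "__main__":
    profile = {
        "customer_id": "c_1",
        "plan": "pro",
        "email": "ana@example.com",
        "ssn": "000-00-0000",
        "last_invoice_total": 42,
    }
    print(shape_response(profile, "support:read"))
```

An allow list fails closed: a field added to the profile later is not returned until someone adds it to a schema on purpose.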

Secondary use without consent

Data collected for support shows up in a marketing dataset or a training corpus.

Fix with purpose tagging at ingestion, policy checks that block non-matching use, and recorded decisions for later audits.
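
A minimal sketch of a purpose check, assuming each dataset carries a set of allowed purposes assigned at ingestion. The `Dataset` type, the purpose labels, and `authorize_use` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    allowed_purposes: frozenset  # assigned when the data is ingested


def authorize_use(dataset: Dataset, purpose: str, decisions: list) -> bool:
    """Check a requested use against the dataset's purposes and record the decision."""
    allowed = purpose in dataset.allowed_purposes
    decisions.append({"dataset": dataset.name, "purpose": purpose, "allowed": allowed})
    return allowed


if __name__ == "__main__":
    decisions = []
    tickets = Dataset("support_tickets", frozenset({"support"}))
    for purpose in ("support", "marketing"):
        if authorize_use(tickets, purpose, decisions):
            print(f"{purpose}: allowed")
        else:
            print(f"{purpose}: blocked, needs approval for a new use")
    print(decisions)
```

Denied requests are recorded as well as allowed ones, so a later audit can show that the marketing use was attempted and blocked.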

Vendor exposure

An analytics SDK collects more than intended or stores data in the wrong region.

Fix with egress allow lists, contract terms for retention and residency, and runtime checks that verify what the contract promises.
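
An egress allow list can be as simple as a map from vendor host to the data regions a contract permits for it, checked before any outbound call. The hosts and regions below are made up for the example.

```python
from urllib.parse import urlsplit

# Hypothetical vendor hosts mapped to the data regions allowed to reach them.
EGRESS_ALLOW_LIST = {
    "api.llm-vendor.example": {"eu", "us"},
    "analytics.vendor.example": {"eu"},
}


def egress_allowed(url: str, data_region: str) -> bool:
    """Allow only HTTPS calls to listed hosts for data from a permitted region."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower()
    return data_region in EGRESS_ALLOW_LIST.get(host, set())


if __name__ == "__main__":
    print(egress_allowed("https://api.llm-vendor.example/v1/chat", "us"))
    print(egress_allowed("https://analytics.vendor.example/collect", "us"))
```

Matching on the exact hostname, instead of a substring, avoids lookalike hosts such as `api.llm-vendor.example.evil.test` passing the check.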

Across these cases, Protecto can enforce pre-prompt redaction, tokenization at ingestion, schema checks at APIs, and egress controls, then provide lineage that proves the right policy fired.

Principles that make privacy practical

These principles connect rules to daily work. They also map well to most regulations.

- Privacy by design: Plan for privacy from the first draft of a feature or workflow. If a field is unnecessary, do not collect it. If a prompt can avoid personal details, make that the default.

- Least privilege: Give each person and service the minimum access required, tied to purpose and time. Review access on a schedule.

- Data minimization and retention limits: Keep only what you need and only as long as you need it. Delete or archive the rest in a secure form.

- Purpose transparency: Explain in simple language what you collect, why, and what choices exist. Avoid dark patterns.

- Explainability: Give users and reviewers clear, human-readable reasons for important decisions, and define how to appeal or correct them.

- Defense in depth: Combine controls so a failure in one layer does not cause a leak. Tokenize at ingestion, redact before prompts, and enforce schemas at APIs.

- Evidence by default: Assume you will need to show proof. Record policy versions, transformations, access events, and decisions in audit logs.
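
To make least privilege and evidence by default concrete, here is a small sketch in which every access attempt is checked against a grant tied to purpose and expiry, and every decision produces an audit record with the policy version. The `Grant` type, the field names, and the `v3` version label are assumptions for this example.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    principal: str
    resource: str
    purpose: str
    expires_at: datetime


def check_access(grants, principal, resource, purpose, now, policy_version):
    """Return (allowed, evidence) for one access attempt."""
    allowed = any(
        g.principal == principal
        and g.resource == resource
        and g.purpose == purpose
        and now < g.expires_at
        for g in grants
    )
    evidence = json.dumps(
        {
            "at": now.isoformat(),
            "principal": principal,
            "resource": resource,
            "purpose": purpose,
            "policy_version": policy_version,
            "allowed": allowed,
        },
        sort_keys=True,
    )
    return allowed, evidence


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    grants = [Grant("svc-support-bot", "tickets", "support", now + timedelta(hours=1))]
    print(check_access(grants, "svc-support-bot", "tickets", "support", now, "v3"))
    print(check_access(grants, "svc-support-bot", "tickets", "marketing", now, "v3"))
```

The evidence string would normally go to an append-only audit store; here it is simply returned so the caller decides where it is written.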

Protecto supports these principles by attaching policy to data and by logging which rule was applied to each action. That evidence answers common questions from auditors and partners without a scramble.

Regulations explained: what to implement

You may operate across multiple legal frameworks. Translate their requirements into simple, testable controls.

| Framework theme | Practical requirement | What you implement |
| --- | --- | --- |
| Lawful basis and consent | A valid reason to process data, with clear notice | Record legal basis per dataset, present notices, log consent when needed |
| Purpose limitation | Use only for the stated purpose | Tag purpose at ingestion, block non-matching use, log policy decisions |
| Data rights | Access, correction, deletion | Build self-service where possible, keep DSAR workflows, measure time to close |
| Transparency and explanation | Explain decisions to users and auditors | Model cards, user-facing explanations, evidence of training data sources |
| Security and minimization | Reduce exposure to what is necessary | Tokenization, redaction, RBAC, encryption, short retention |
| Cross-border controls | Handle residency and transfers correctly | Region routing, local storage, tokenize or encrypt before transfer |
| High-risk oversight | Extra controls where impact is significant | Human checkpoints, impact assessments, bias tests, and enhanced logging |

A platform such as Protecto can simplify this work by enforcing purpose and residency rules at runtime and exporting audit-ready reports.
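
"Testable" can be taken literally. As one example, the "measure time to close" control for data subject access requests (DSARs) could be sketched as below. The 30-day target is an assumption for illustration; the actual deadline depends on the law that applies to you. The request shape and `dsar_report` are hypothetical.

```python
from datetime import date
from statistics import median

# Assumption: a 30-day internal target. The real deadline depends on jurisdiction.
TARGET_DAYS = 30


def dsar_report(requests, today, target_days=TARGET_DAYS):
    """Summarize closed requests and list open ones that passed the target."""
    closed_days = [
        (r["closed"] - r["opened"]).days for r in requests if r.get("closed")
    ]
    overdue_open = [
        r["id"]
        for r in requests
        if not r.get("closed") and (today - r["opened"]).days > target_days
    ]
    return {
        "closed_count": len(closed_days),
        "median_days_to_close": median(closed_days) if closed_days else None,
        "closed_late": sum(1 for days in closed_days if days > target_days),
        "overdue_open_ids": overdue_open,
    }


if __name__ == "__main__":
    sample = [
        {"id": "r1", "opened": date(2024, 1, 1), "closed": date(2024, 1, 11)},
        {"id": "r2", "opened": date(2024, 1, 5), "closed": date(2024, 2, 14)},
        {"id": "r3", "opened": date(2024, 1, 10), "closed": None},
    ]
    print(dsar_report(sample, today=date(2024, 3, 1)))
```

A report like this can run on a schedule, with a non-empty `overdue_open_ids` list treated as an alert.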

Privacy-enhancing technologies that preserve utility

Start with the simplest tool that gives real protection, then add advanced methods where needed.

- Deterministic tokenization: Replace identifiers with repeatable tokens so joins and analytics still work. Protect the token vault and restrict re-identification to narrow, audited workflows.

- Contextual redaction: Detect and remove sensitive entities in free text at ingestion and before prompts. Targets include names, addresses, medical record numbers, emails, and keys.

- Masking: Hide parts of values, such as the middle of a phone number, to reduce exposure while keeping format and partial utility.

- Differential privacy: Add controlled noise to aggregated results so individual records remain hidden. Use for published metrics and data sharing.

- Federated learning: Train across sites or partners without centralizing raw data. Useful when residency laws or partner confidentiality apply.

- Encryption and secure enclaves: Encrypt data at rest and in transit. Use trusted execution environments for computations that must not expose raw data.

- Role- and attribute-based access control: Tie access to job function and purpose, not just to team membership.
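
Two of the techniques above are small enough to sketch directly: masking the middle of a value, and adding Laplace noise to a count for differential privacy. The function names and defaults are assumptions, and the noise code is a teaching sketch only; production differential privacy needs a vetted library and careful handling of randomness and floating-point behavior.

```python
import random


def mask_middle(value: str, keep_start: int = 3, keep_end: int = 2) -> str:
    """Hide the middle digits of a value while keeping its punctuation and format."""
    digit_positions = [i for i, ch in enumerate(value) if ch.isdigit()]
    if len(digit_positions) <= keep_start + keep_end:
        hidden = set(digit_positions)  # too short to reveal anything safely
    else:
        hidden = set(digit_positions[keep_start:len(digit_positions) - keep_end])
    return "".join("*" if i in hidden else ch for i, ch in enumerate(value))


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query, which has sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # The difference of two independent exponentials with rate epsilon
    # follows a Laplace distribution with scale 1 / epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


if __name__ == "__main__":
    print(mask_middle("555-867-5309"))
    print(noisy_count(1200, epsilon=0.5, rng=random.Random()))
```

A smaller `epsilon` means more noise and stronger protection for individuals; a larger one keeps the published number closer to the true count.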

Protecto standardizes tokenization and redaction within pipelines and prompts, then backs each transformation with lineage and policy logs. That gives you both protection and proof.

A simple architecture for privacy by design

Most stacks can follow the same pattern with clear control points.

Sources: apps, forms, tickets, files, logs

|

v

Ingestion and ETL

– classify PII, PHI, biometrics, secrets

– tokenize or mask identifiers

|

v

Warehouse, lake, vector DB

– redact entities before indexing for retrieval

– tag datasets with purpose and residency

|

v

LLM and API gateway

– pre-prompt scanning and output filtering

– tool allow lists, rate limits

– API response schema enforcement

|

v

Applications and analytics

– least privilege access

– short log retention and log redaction

Lineage and audit logs wrap the entire flow

Monitoring watches vectors, prompts, and APIs for anomalies

Protecto can serve as the privacy control plane across these steps. It offers SDKs for pipelines, a gateway for LLMs and APIs, and dashboards for lineage and alerts.

Vendor and partner checklist

Use this list when assessing AI vendors, model hosts, analytics tools, and data partners.

- Data retention default off or short, with clear deletion paths

- Residency options by region, with documented subprocessors

- Purpose scope defined and contractually limited

- Encryption at rest and in transit, with key management you control

- PII and PHI redaction or masking before logs, training, or retrieval

- Enterprise LLM modes such as no retention and isolated tenants

- Exportable evidence for enforcement events and lineage

- Support for access, correction, and deletion within timelines

- Incident response times you can accept and test

If a partner cannot meet these points, consider placing an enforcement layer. Protecto can sit in front of vendors to filter prompts, mask responses, and prevent unapproved egress.

How Protecto helps

Protecto is a privacy control plane for AI. It reduces exposure by placing precise controls where risk begins, adapts enforcement to region and purpose at runtime, and produces the audit evidence that customers and regulators expect.

- Automatic discovery and classification: Scan warehouses, lakes, logs, and vector stores to find PII, PHI, biometrics, and secrets. Tag data with purpose and residency so enforcement is automatic.

- Masking, tokenization, and redaction: Apply deterministic tokenization for structured identifiers and contextual redaction for free text at ingestion and before prompts. Preserve analytics and model quality while removing raw values. A secure vault allows narrow, audited re-identification when business processes require it.

- Prompt and API guardrails: Block risky inputs and jailbreak patterns at the LLM gateway, filter outputs for sensitive entities, and enforce response schemas and scopes for APIs. Add rate limits and egress allow lists to prevent quiet leaks.

- Lineage and audit trails: Trace data from source to transformation to embeddings to model outputs. Answer who saw what and when, speed up investigations, and complete access and deletion requests on time.

- Anomaly detection for vectors, prompts, and APIs: Learn normal behavior and flag enumeration or exfiltration patterns. Throttle or block in real time to contain risk.

- Developer-friendly integration: SDKs, gateways, and CI checks make privacy part of the build. Pull requests fail on risky schema changes, prompts are redacted automatically, and dashboards report real coverage and response times.

FAQs

- What are the key principles of AI and data privacy?

The six key principles are lawfulness, fairness, and transparency; purpose limitation; data minimization; accuracy; storage limitation; and integrity, confidentiality, and security in AI systems.

- How does data minimization apply to AI systems?

Data minimization requires AI systems to collect only the data necessary for their functions, which reduces privacy risk and supports compliance with data privacy guidelines.

- What is purpose limitation in AI data usage?

Purpose limitation ensures personal data collected by AI systems is used strictly for specified, legitimate purposes and cannot be repurposed for unrelated activities without user consent.

- Why is accuracy important in AI data management?

Accuracy ensures AI systems function correctly and make reliable decisions. Inaccurate data can lead to faulty conclusions, poor decision-making, and potentially harmful outcomes.

- How do security principles apply to AI systems?

Security principles require AI systems to implement strong encryption, multi-factor authentication, regular audits, and data integrity tracking to prevent unauthorized access and breaches.
