Data privacy and security are critical concerns for businesses using Large Language Models (LLMs), especially when dealing with sensitive information like Personally Identifiable Information (PII) and Protected Health Information (PHI). Companies typically rely on data masking tools such as Microsoft’s Presidio to safeguard this data. However, these tools often struggle in scenarios involving LLMs/AI Agents.

This post will explore why traditional masking tools fall short in LLM use cases and introduce how Protecto overcomes these limitations.

The Limitations of Regular Masking Tools Like Presidio

Tools like Microsoft Presidio rely on deterministic masking: out of the box, they can unmask data only if the text is returned exactly as it was masked. This limitation creates a significant challenge in LLM interactions, where the AI-generated response often alters the text (e.g., by rephrasing or adding context). Even a minor deviation can prevent successful unmasking.

For instance, if a Social Security Number (SSN) is masked in the prompt and the LLM returns a slightly altered version of the number or sentence structure, Presidio will fail to unmask it.

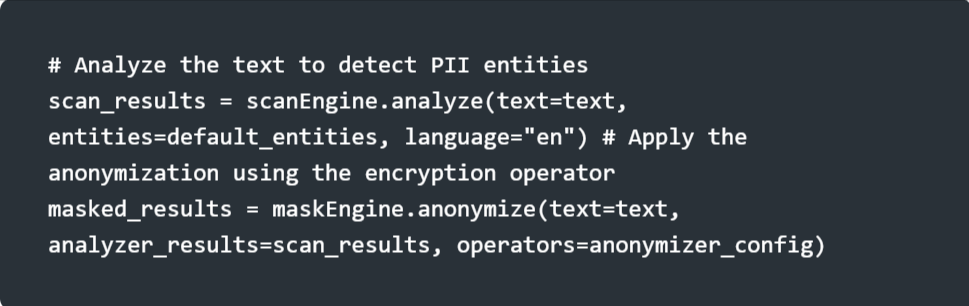

Example Code with Presidio:

To anonymize text:

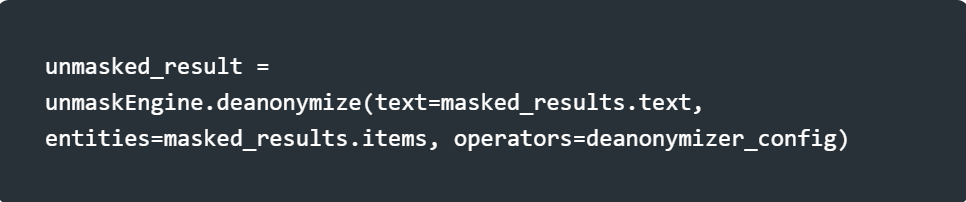

To de-anonymize (unmask) the text:

Note that the unmasking above works only if the masked text is returned exactly as it was produced. Any slight modification in the LLM’s output will cause Presidio’s out-of-the-box unmasking to fail.
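To make this failure mode concrete, here is a minimal Python sketch. It is a simplified stand-in, not Presidio’s actual API: the `MaskedSpan` class and the `mask` and `unmask_by_position` helpers are hypothetical names, and the sketch assumes that unmasking relies on the character offsets recorded when the text was masked.

```python
from dataclasses import dataclass


@dataclass
class MaskedSpan:
    start: int      # offset of the placeholder in the masked text
    end: int        # end offset (exclusive)
    original: str   # the value that was hidden


def mask(text: str, value: str, placeholder: str) -> tuple[str, list[MaskedSpan]]:
    """Replace the first occurrence of `value` and record where the placeholder sits."""
    start = text.index(value)
    masked = text[:start] + placeholder + text[start + len(value):]
    return masked, [MaskedSpan(start, start + len(placeholder), value)]


def unmask_by_position(text: str, spans: list[MaskedSpan]) -> str:
    """Restore values purely by character offset (right to left so offsets stay valid)."""
    for span in sorted(spans, key=lambda s: s.start, reverse=True):
        text = text[:span.start] + span.original + text[span.end:]
    return text


prompt = "My SSN is 078-05-1120."  # a well-known invalid SSN, used as dummy data
masked, spans = mask(prompt, "078-05-1120", "<US_SSN>")

# The round trip works when the masked text comes back untouched.
roundtrip = unmask_by_position(masked, spans)

# A rephrased reply moves the placeholder, so the stored offsets now point
# at unrelated characters and the placeholder is left in the text.
llm_reply = "Thanks! I have recorded <US_SSN> as your SSN."
broken = unmask_by_position(llm_reply, spans)
```

In this sketch, `roundtrip` equals the original prompt, while `broken` still contains the `<US_SSN>` placeholder and has the SSN spliced into the wrong place.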

Why Presidio-Like Tools Can’t Be Used for LLM Use Cases

Common Approach to Protecting PII/PHI in AI Interactions

Most businesses follow a standard workflow when handling sensitive data with LLMs. (An alternative approach is to mask the information before enriching the context database, which we will explore in a separate blog post.)

- User Input: The user provides a prompt that may contain sensitive information.

- Enriching the Prompt with Context Data: Before sending the prompt to the LLM, the system enriches it with contextual information using techniques such as Retrieval Augmented Generation (RAG). This additional information may contain PII/PHI.

- Masking PII/PHI: The AI system identifies and masks sensitive data (PII/PHI) before sending the prompt to the LLM.

- Send Masked Data to LLM: The masked prompt is processed by the LLM.

- Receive Response: The LLM returns a response containing the masked data.

- Conditional Unmasking: If the user has the required permissions, the sensitive information is unmasked before the final response is presented; otherwise, it remains masked.
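The steps above can be sketched in Python as follows. Everything here is illustrative: `retrieve_context`, `call_llm`, and the hard-coded entity list are hypothetical stand-ins for a real retriever, LLM, and PII detector, and the token-lookup `unmask` is just one simple option, not a description of any particular product.

```python
import re


def retrieve_context(prompt: str) -> str:
    """Hypothetical RAG lookup; a real system would query a context store."""
    return "Order 1001 ships to Jane Doe, 12 Main St, Springfield."


def call_llm(masked_prompt: str) -> str:
    """Hypothetical LLM stub: rephrases the input but keeps the placeholder tokens."""
    tokens = re.findall(r"<[A-Z]+_\d+>", masked_prompt)
    return f"The package for {tokens[0]} is going to {tokens[1]}."


def mask(text: str, entities: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Swap each sensitive value for a numbered token and keep a token-to-value vault."""
    vault: dict[str, str] = {}
    for i, (value, label) in enumerate(entities.items(), start=1):
        token = f"<{label}_{i}>"
        text = text.replace(value, token)
        vault[token] = value
    return text, vault


def unmask(text: str, vault: dict[str, str], allowed: bool) -> str:
    """Restore values only for users who are permitted to see them."""
    if not allowed:
        return text
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


def answer(user_prompt: str, user_can_see_pii: bool) -> str:
    # Steps 1-2: take the user input and enrich it with context.
    enriched = f"{retrieve_context(user_prompt)}\n\nQuestion: {user_prompt}"
    # Step 3: mask. The detector output is hard-coded here as an assumption.
    entities = {"Jane Doe": "PERSON", "12 Main St, Springfield": "ADDRESS"}
    masked, vault = mask(enriched, entities)
    # Steps 4-5: send the masked prompt and receive a masked response.
    response = call_llm(masked)
    # Step 6: unmask conditionally, based on the user's permissions.
    return unmask(response, vault, user_can_see_pii)
```

Calling `answer("Where is order 1001?", True)` returns the response with the name and address restored, while passing `False` returns the same sentence with the `<PERSON_1>` and `<ADDRESS_2>` tokens still in place.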

Example Workflow:

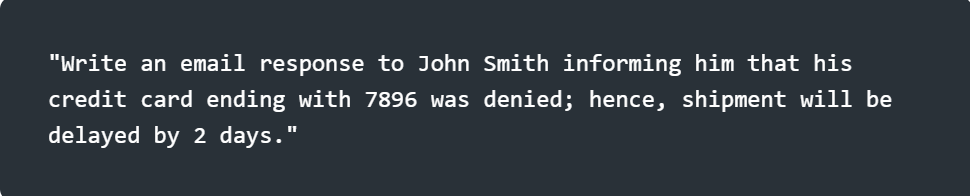

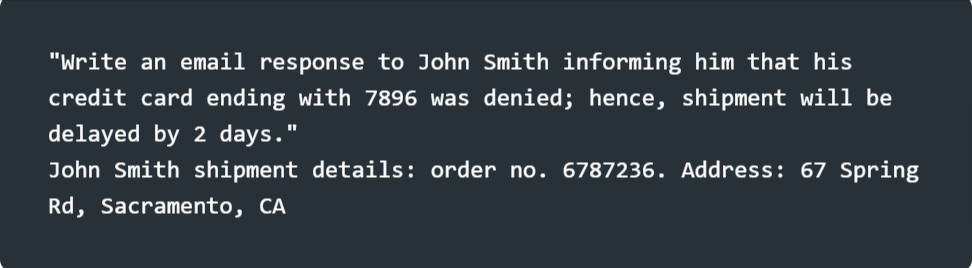

1) A customer support agent asks an AI agent a question (Original User Prompt):

2) Add Context: Add the shipment details and the address to the prompt.

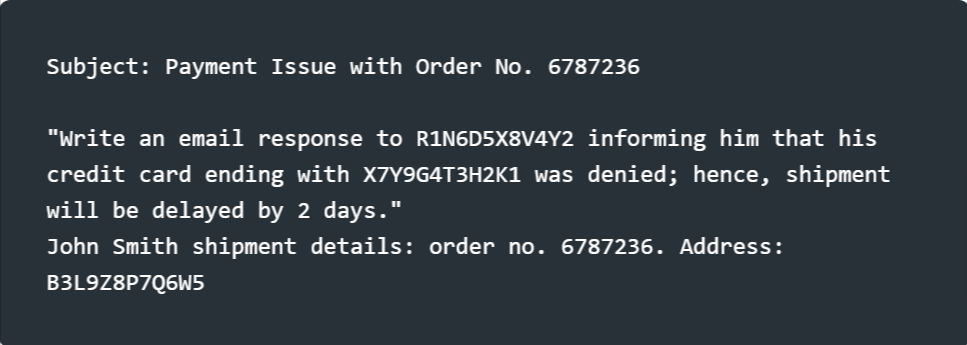

3) Mask PII/PCI in the Context + Prompt, Send to LLM:

4) Response from LLM (with masked values for all PII/PCI):

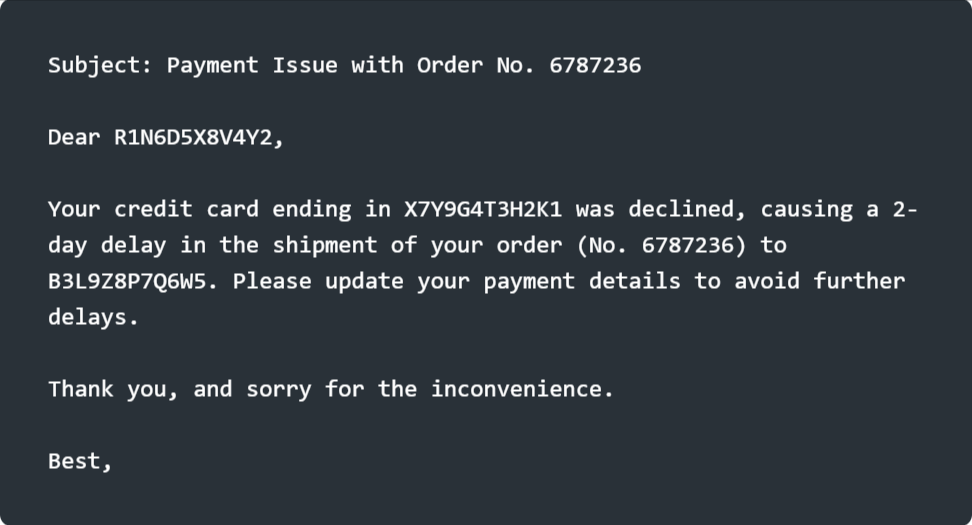

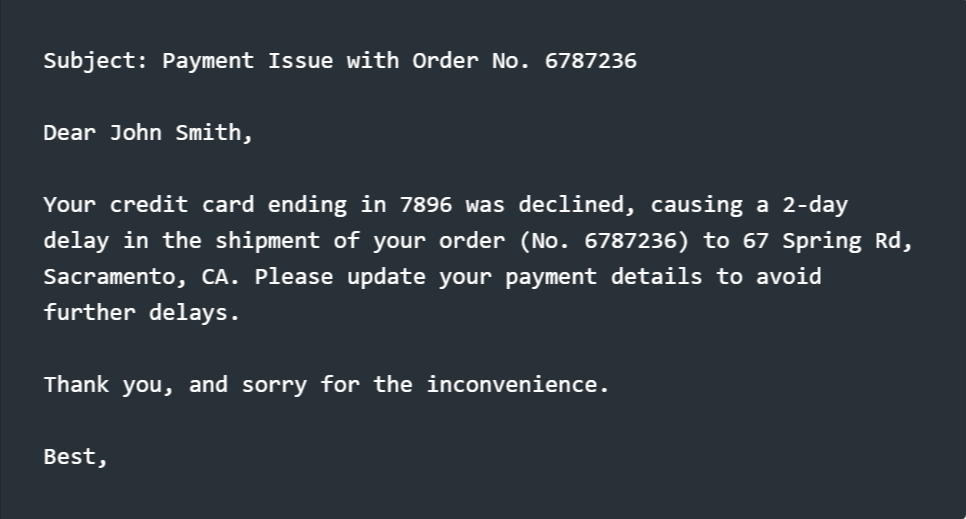

5) Final Response to User (with PII Unmasked):

Why Presidio-Like Tools Can’t Be Used for LLM Use Cases

In this scenario, Presidio could not unmask the response from Step 4 because even minor changes in sentence structure interfere with the precise matching needed for unmasking. Here, the response text from Step 4 no longer matches the text that was masked in Step 3.

This limitation becomes problematic in AI-driven interactions: you have to build and maintain your own masking and unmasking logic, which leads to inefficiencies and hinders scalability.

How Protecto Solves the Problem

Protecto offers a dynamic, flexible approach to masking and unmasking that is explicitly designed for LLM/AI Agent use cases. Unlike traditional tools, Protecto can handle variations in LLM-generated responses without compromising on the ability to securely mask and unmask sensitive data.

Protecto doesn’t require the exact same text to unmask entities. It intelligently handles variations in text structure and content, ensuring that sensitive data remains masked unless authorized for unmasking.

Benefits of Protecto over Traditional Masking Tools like Presidio:

- Superior Accuracy and Recall in PII Detection: Protecto outperforms Presidio in detecting PII with higher accuracy and recall. This means fewer missed entities and more precise identification of sensitive information, reducing the risk of exposing PII. An independent study indicates that Protecto outperforms both Presidio and AWS Comprehend.

- Maintains Data Integrity and LLM Accuracy: Protecto is designed to retain data integrity during the masking process, which ensures that the overall accuracy of the LLM is maintained. We track this using a proprietary metric called Response Accuracy Retention Index (RARI), and Protecto consistently scores the highest, ensuring reliable performance.

- Enterprise-Grade Scalability and Flexibility: Protecto offers enterprise-grade features to handle high-volume data processing.

- Real-time APIs for prompt and response masking in low-latency, high-traffic environments.

- Asynchronous and bulk APIs for large-scale data masking, providing efficient processing for massive datasets.

- Comprehensive Audit and Compliance Features to simplify management and ensure regulatory adherence, making Protecto a robust solution for enterprise environments.

While traditional masking tools like Microsoft Presidio serve a valuable purpose in many contexts, they often fall short when applied to LLM use cases due to their reliance on exact matches for unmasking. Protecto’s flexible and adaptable approach provides a streamlined solution that meets the unique demands of LLM interactions, ensuring that sensitive information is both protected and accessible when needed.

In the next post, we’ll cover how masking tools like Presidio impact your LLM and AI agent accuracy, while Protecto can retain accuracy even in scenarios involving complex masking and unmasking requirements.