Role-Based Access Control (RBAC) is a critical part of security architecture that keeps data from falling into the wrong hands. In conventional data environments, whether deployed in the cloud or on-premises, RBAC is effective at preventing unauthorized access, with a few exceptions such as successful hacking attempts or breaches.

However, this model breaks down once AI comes into the picture. Let’s look at why RBAC doesn’t work with AI agents, and what you can do about it.

Enterprises are building AI agents without anticipating the inevitable complications

As AI rapidly percolates into every layer of technology, more enterprises are building their own AI agents. Tools like AI copilots, chatbots, and retrieval-based agents draw data from multiple systems: CRMs, document repositories, support tools, contracts, and knowledge bases. They build real-time context using embeddings and vector databases.

While these agents significantly reduce manual, labor-intensive work and boost productivity, they also introduce security and privacy challenges that traditional RBAC cannot solve.

Once data leaves its original application and enters an AI pipeline, RBAC quietly breaks down and no longer applies. This is especially true for LLMs and agentic systems.

The shift from app-centric to data-centric access: where RBAC fails

Traditional RBAC works at the application level. It controls access within the UI of a CRM, ERP, or HR platform. But AI systems rarely interact with applications that way. They pull raw data from exported tables, APIs, or vector embeddings to generate responses or trigger workflows.

When data is extracted and stored in a vector database for use in retrieval-augmented generation (RAG) pipelines, it is stripped of its application context. These new storage layers typically have no awareness of the source system’s RBAC logic. They don’t know, for instance, that a particular user should see only partial information about a customer.

For example, if an AI agent draws salary data from an HRM system, that data should not be accessible to staff like support agents, sales teams, or engineers. However, the AI treats every prompt the same way: it cannot tell a query from an executive assistant apart from one from a compliance officer. Without guardrails, both can access the same underlying data.
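To make the gap concrete, here is a minimal Python sketch of a retrieval step over an in-memory store. It assumes each chunk keeps an `allowed_roles` label copied from its source system at ingestion time; the `Chunk` class, the role names, the sample records, and the word-overlap scoring (a stand-in for vector similarity) are all hypothetical. `naive_retrieve` behaves like a store that has lost the source system's RBAC logic, while `role_aware_retrieve` re-applies that logic before ranking.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Chunk:
    text: str
    source: str
    # Access label copied from the source system's RBAC when the chunk was ingested (assumption).
    allowed_roles: frozenset = field(default_factory=frozenset)


# Hypothetical sample data standing in for a vector store.
STORE = [
    Chunk("Support playbook: escalate P1 tickets within 1 hour.", "helpdesk",
          frozenset({"support", "sales", "engineer", "hr_manager"})),
    Chunk("Salary band for Senior Engineer is 140k to 165k.", "hrm",
          frozenset({"hr_manager"})),
]


def score(query: str, chunk: Chunk) -> int:
    # Toy relevance: number of shared lowercase words (stand-in for embedding similarity).
    return len(set(query.lower().split()) & set(chunk.text.lower().split()))


def naive_retrieve(query: str, k: int = 2) -> list[Chunk]:
    # Ranks by relevance only; the caller's role never enters the decision.
    ranked = sorted(STORE, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:k] if score(query, c) > 0]


def role_aware_retrieve(query: str, role: str, k: int = 2) -> list[Chunk]:
    # Drop chunks the role may not see *before* ranking, so they never reach the prompt.
    visible = [c for c in STORE if role in c.allowed_roles]
    ranked = sorted(visible, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:k] if score(query, c) > 0]


if __name__ == "__main__":
    question = "what is the salary band for senior engineer"
    print([c.source for c in naive_retrieve(question)])                     # ['hrm']
    print([c.source for c in role_aware_retrieve(question, "engineer")])    # []
    print([c.source for c in role_aware_retrieve(question, "hr_manager")])  # ['hrm']
```

Filtering before ranking, rather than after generation, means an unauthorized chunk never enters the model's context, so there is nothing for the model to leak.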

The privacy perspective: why you need RBAC in AI systems

Most enterprises store, transmit, or process a wide range of sensitive data, including:

- Personal employee details like salary, address, or health insurance information

- Client data like Personally Identifiable Information (PII), payment information such as credit card numbers, Protected Health Information (PHI), or customer behavior patterns

- Confidential business information like intellectual property (IP), product development code, and email or chat logs

Employees using AI agents often include this sensitive data in their prompts or upload it as files. Once entered, there is often no way to delete or edit it, and some AI tools ingest the data to learn and improve future outputs. Let’s break down why this is a security and compliance issue with an example:

Say employee A, who works at a healthcare organization, is authorized to view and edit a file containing PHI, and uploads it to an AI agent for analysis. If employee B, who is not authorized to see that data, later enters a prompt related to the same dataset, the AI tool may generate an output containing PHI, because the file’s contents already sit in the agent’s memory.

Unauthorized access can lead to security breaches even when the person accessing the data is an employee. A report by Cybersecurity Insiders found that about 83% of organizations reported at least one insider attack. This underscores the importance of strict access controls across every component of the cloud architecture.

Security breaches are not the only concern for enterprises. Regulatory frameworks like HIPAA, GDPR, and India’s DPDP Act require strict access controls to protect sensitive personal data.

Let’s look at some of these legal requirements.

HIPAA RBAC requirements

The HIPAA Security Rule requires administrative, physical, and technical safeguards to protect electronic PHI.

Its technical safeguards, set out in 45 CFR 164.312 (Subpart C), cover access control and unique user identification. They require covered entities (CEs) and business associates (BAs) to implement technical policies and procedures for systems that maintain ePHI (PHI in electronic form), so that only persons or software programs that have been granted access rights can reach sensitive health data.

GDPR RBAC requirements

Under Article 32, GDPR requires organizations to implement “appropriate technical and organizational measures” to ensure data security. That includes access control, specifically:

“…the ability to ensure the ongoing confidentiality, integrity, availability and resilience of processing systems… and to ensure that any natural person acting under the authority of the controller… does not process personal data except on instructions.”

DPDP RBAC requirements

Section 8(5)(a) of India’s Digital Personal Data Protection (DPDP) Act: data fiduciaries must “implement appropriate technical and organizational measures to ensure effective compliance.” In practice, that includes ensuring only authorized personnel can access personal data.

The solution: smart tokenization without breaking context

To make AI safe and compliant, enterprises need access controls that follow the data—not just the app.

That means:

- Enforcing access policies at the prompt level, based on user roles, tasks, and context.

- Masking sensitive data before it reaches the LLM, based on who’s asking.

- Auditing all accesses and responses, even in multi-agent or automated flows.
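The three requirements above can be combined into a single pre-processing step. The sketch below is a minimal illustration of that idea, not Protecto's implementation: the `POLICY` table, the regex detectors, and `prepare_prompt` are hypothetical. It masks every detected entity type that the caller's (role, task) pair is not cleared for, denies by default for unknown pairs, and appends an audit record for every prompt.

```python
import re
from datetime import datetime, timezone

# Hypothetical policy: entity types each (role, task) pair may send to the LLM unmasked.
POLICY = {
    ("hr_manager", "payroll_review"): {"SALARY", "EMAIL"},
    ("support_agent", "ticket_reply"): {"EMAIL"},
}

# Toy detectors (assumption); a real deployment would use a trained PII/PHI classifier.
DETECTORS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SALARY": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?"),
}

AUDIT_LOG: list[dict] = []


def prepare_prompt(prompt: str, user: str, role: str, task: str) -> str:
    """Mask every detected entity the (role, task) pair is not cleared for, then log the decision."""
    allowed = POLICY.get((role, task), set())  # unknown role/task pairs see nothing in clear text
    masked = []
    for entity, pattern in DETECTORS.items():
        if entity not in allowed and pattern.search(prompt):
            prompt = pattern.sub(f"<{entity}>", prompt)
            masked.append(entity)
    AUDIT_LOG.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "task": task, "masked": masked,
    })
    return prompt


if __name__ == "__main__":
    text = "Draft a note to jane@example.com about her raise to $95,000."
    print(prepare_prompt(text, "sam", "support_agent", "ticket_reply"))
    print(prepare_prompt(text, "eli", "engineer", "debugging"))
    print(AUDIT_LOG[-1])
```

Here a support agent's prompt keeps the customer's email but loses the salary figure, while an unrecognized role or task gets both masked. These placeholders are irreversible; selectively revealing values later requires reversible tokenization instead.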

Protecto’s Data Guardrails MCP does exactly this. It acts as a policy-aware proxy between your AI agents and your data, enforcing who can access what, regardless of where the data came from or how it’s stored.

Protecto enforces RBAC in GenAI tools by masking sensitive data before it reaches the model and, in real time, unmasking it only if the user’s role allows it.

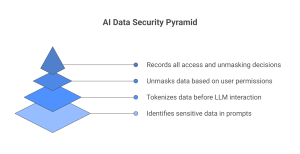

Here’s how it works:

- Auto-detects sensitive data (PII, PHI, secrets) in prompts and responses.

- Applies dynamic masking or tokenization before sending data to the LLM.

- Checks the user’s role and permissions before unmasking data in the response.

- Logs every access and unmasking decision for audit and compliance.
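The four steps above can be sketched in a few dozen lines of Python. This is a hedged illustration of the general pattern, not Protecto's API: `TokenVault`, `CLEARANCE`, the regex detectors, and the random-hex token format are assumptions, and the product's own tokens look different. Masking swaps each detected value for a reversible token; unmasking restores a token only if the caller's role is cleared for that entity type, and every decision is logged.

```python
import re
import secrets
from datetime import datetime, timezone

# Toy detectors (assumption): production systems use trained PII/PHI recognizers.
DETECTORS = {
    "CRD": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),  # 13-19 digit card-like numbers
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
}
TOKEN_RE = re.compile(r"<(CRD|EMAIL)>[0-9a-f]{12}</\1>")

# Hypothetical mapping: role -> entity types that role may see unmasked.
CLEARANCE = {
    "billing_admin": {"CRD", "EMAIL"},
    "support_agent": {"EMAIL"},
}


class TokenVault:
    """In-memory stand-in for a token vault; originals stay here, only tokens go to the LLM."""

    def __init__(self) -> None:
        self._by_token: dict[str, tuple[str, str]] = {}  # token -> (entity, original value)
        self._by_value: dict[tuple[str, str], str] = {}  # (entity, original value) -> token
        self.audit_log: list[dict] = []

    def _tokenize(self, entity: str, value: str) -> str:
        # Reuse the token for a repeated value so references stay consistent for the model.
        key = (entity, value)
        if key not in self._by_value:
            while True:
                token = f"<{entity}>{secrets.token_hex(6)}</{entity}>"
                if token not in self._by_token:  # keep tokens unique
                    break
            self._by_value[key] = token
            self._by_token[token] = key
        return self._by_value[key]

    def mask(self, text: str) -> str:
        """Detect sensitive values and replace them with tokens before calling the LLM."""
        for entity, pattern in DETECTORS.items():
            text = pattern.sub(lambda m, e=entity: self._tokenize(e, m.group()), text)
        return text

    def unmask(self, text: str, user: str, role: str) -> str:
        """Restore only the entity types the role is cleared for, logging each decision."""
        def restore(m: re.Match) -> str:
            token = m.group()
            entity, value = self._by_token.get(token, (m.group(1), None))
            allowed = value is not None and entity in CLEARANCE.get(role, set())
            self.audit_log.append({
                "ts": datetime.now(timezone.utc).isoformat(),
                "user": user, "role": role, "entity": entity, "unmasked": allowed,
            })
            return value if allowed else token
        return TOKEN_RE.sub(restore, text)


if __name__ == "__main__":
    vault = TokenVault()
    masked = vault.mask("Refund card 4111 1111 1111 1111 for jane@example.com.")
    print(masked)                                         # what the LLM sees
    print(vault.unmask(masked, "sam", "support_agent"))   # email restored, card stays tokenized
    print(vault.unmask(masked, "amy", "billing_admin"))   # both values restored
```

Because a repeated value always maps to the same token, the model can still reason about "the same card" or "the same customer" across a conversation without ever seeing the real value.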

It plugs into your AI pipeline, wraps around models such as those from OpenAI, and ensures only the right people see the real data.

For example, if your prompt contains:

“John Kurt Doe’s credit card number is 4567-63736-3456-83609”

the AI sees:

“<PER>hsbd eidhf sjfbr</PER>’s credit card number is <CRD>4984020658256988804</CRD>”

By replacing the name with a <PER> token and the card number with a <CRD> token, it prevents the model from ingesting the raw sensitive data.

This way, when unauthorized users enter prompts related to the dataset, the AI system does not disclose sensitive data in its output.
