Thanks to a wide range of use cases that automate manual activities, enterprises are rushing to integrate GenAI into their IT stack, only to realize they’ve hit a privacy wall. A concerning number of use cases involve the use of sensitive data like PII and PHI, risking data privacy and compliance.

Enterprises today are becoming increasingly aware of these multifaceted risks associated with unfiltered AI usage and turning to the common solution available in the market – AI privacy tools. Let’s explore how DeepSight by Protecto solves these challenges.

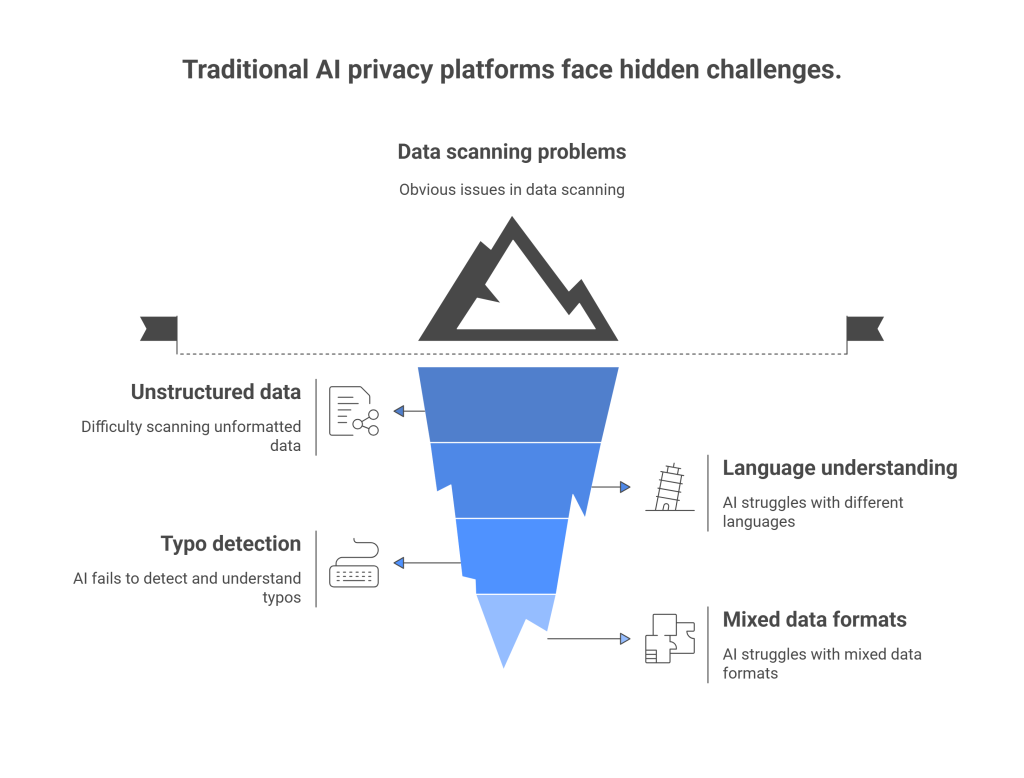

Challenges of traditional AI privacy platforms

The current privacy tools in the market do a fairly decent job of identifying and blocking AI from accessing sensitive information, but fall short when it comes to identifying data in complex edge cases.

Problem #1: Scanning unstructured or unformatted data

AI privacy tools are generally engineered to function in clean, well-structured environments. Essentially, structured data follows a defined set of fields, a predictable format, and a standardized flow. When data is formatted this way, AI tools can easily scan through it to detect PII (Personally Identifiable Information) or PHI (Protected Health Information).

However, the problem arises when the data in the prompt is in an unstructured format. Here, data does not follow a defined or predictable format.

Most enterprise use cases involve unstructured data such as emails, support tickets, or transcripts. LLMs work by inferring meaning, sentiment, and intent from context. Because unstructured data lacks the defined fields and predictable formats that scanners rely on, extracting PII, understanding the real message, and making accurate predictions all become harder.

Problem #2: Understanding languages

Many AI privacy tools are trained on datasets written predominantly in one language, usually English. If a prompt contains words or sentences in another language, the model’s accuracy and its ability to scan for PII can drop sharply.

For example, let’s say a prompt contains a mix of English, French, and Spanish like this: “Bonjour, I forgot to update my address. It’s 45 Rue Saint-Antoine, Paris. También, my SSN is 123-45-6789 and mi numéro de téléphone es +33 6 12 34 56 78. Can you help me fix this ASAP?” If the AI tool is trained only on English, it may fail to detect the PII in this sentence, resulting in sensitive data exposure.

Problem #3: Detecting and understanding typos

Traditional AI privacy tools often rely on rigid pattern matching, regular expressions, and static dictionaries. While this works when data is clean and neatly formatted, enterprise use cases often involve typos, spelling errors, character substitutions, or obfuscations that can cause these tools to produce erroneous outputs.

For example, if someone writes “jon.doe(at)gmail(dot)com” instead of “jon.doe@gmail.com,” a rule-based system might miss it. The same applies when a credit card number has a misplaced digit or is partially masked, like “1234-****-5678”: these tools are looking for exact matches.

In addition, these tools have low semantic understanding. If someone says “My SSN is one-two-three, four-five-six…” in text or a voice transcript, traditional systems won’t catch it unless it exactly matches “123-45-6789.”

This results in a higher number of false negatives, creating blind spots in compliance, security, and audit trails.
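The blind spot described above is easy to reproduce. The sketch below uses two deliberately strict regular expressions of the kind a rule-based scanner might rely on; the patterns and sample strings are illustrative assumptions, not taken from any specific product.

```python
import re

# Strict patterns typical of rule-based scanning (illustrative, not from any vendor).
STRICT_EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
STRICT_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

samples = [
    "Reach me at jon.doe@gmail.com",
    "Reach me at jon.doe(at)gmail(dot)com",                      # obfuscated email
    "My SSN is 123-45-6789",
    "My SSN is one-two-three, four-five, six-seven-eight-nine",  # spelled out
]


def strict_scan(text: str) -> list[str]:
    """Return every substring matched by the strict patterns."""
    return STRICT_EMAIL.findall(text) + STRICT_SSN.findall(text)


results = {sample: strict_scan(sample) for sample in samples}
for text, hits in results.items():
    print(f"{text!r} -> {hits}")
```

The clean email and SSN are caught, while the obfuscated and spelled-out variants return empty lists: each of those is a false negative.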

Problem #4: Scanning through mixed data formats

Traditional AI privacy tools stumble on mixed data formats because they’re built with a narrow lens usually tuned for structured or semi-structured inputs. Once they encounter hybrid formats like logs, documents with embedded tables, or nested JSONs, they either fail or require extensive configuration.

For instance, a log might contain a mix of timestamps, IP addresses, error codes, and a random line like: “User john.doe@example.com failed login attempt at 10:43 from IP 192.168.1.1”. A rule-based scanner may flag the email but completely ignore the IP address, missing the fact that it’s an access violation tied to a user ID.

Now consider JSONs. Sensitive information may be buried several layers deep:

    {
      "user": {
        "profile": {
          "email": "john@example.com",
          "ssn": "123-45-6789"
        },
        "activity": [{ "timestamp": "2024-10-01T10:00Z", "action": "login" }]
      }
    }

If the tool can’t recursively parse and understand nested fields, it either misses key PII (like the SSN), or treats the entire blob as a string and misfires with irrelevant flags.
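As a rough illustration of what recursive parsing means here, the following sketch walks every nested object and array and checks each string leaf. The regex patterns and the `find_pii` helper are assumptions made for this example; a production scanner would use far richer detection than two patterns.

```python
import json
import re

# Illustrative patterns only; real detection needs much more than regex.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(node, path="$"):
    """Yield (json_path, entity_type, value) for every matching string leaf."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_pii(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_pii(value, f"{path}[{index}]")
    elif isinstance(node, str):
        for entity_type, pattern in PATTERNS.items():
            for match in pattern.findall(node):
                yield path, entity_type, match


payload = json.loads("""
{
  "user": {
    "profile": {"email": "john@example.com", "ssn": "123-45-6789"},
    "activity": [{"timestamp": "2024-10-01T10:00Z", "action": "login"}]
  }
}
""")

findings = list(find_pii(payload))
for finding in findings:
    print(finding)
```

Reporting the path alongside the value keeps the context (which field held the SSN) that is lost when the whole blob is scanned as one string.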

Documents are even trickier. A single PDF might have scanned pages, embedded spreadsheets, headers in five languages, and free-text notes like “Jane’s PAN: ABCDE1234F.” Traditional tools can’t OCR scanned images, don’t grasp semantic relationships in free text, and completely miss subtle references.

The result? False negatives shoot up and context is lost. You might catch some email addresses, but miss financial IDs, personal notes, or PHI buried in attachments and logs.

Why should you care?

Incorrect data processing due to a lack of context results in false positives and negatives.

- False positives occur when the tool wrongly flags non-sensitive data as sensitive, resulting in unnecessary restrictions. This compromises output quality.

- False negatives occur when the tool fails to detect actual sensitive information, resulting in unintentional data exposure and non-compliance.

False positives kill productivity. False negatives kill trust and compliance. If your AI privacy tool can’t strike the right balance, it’s not protecting, but exposing you.
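One common way to put numbers on this balance is precision (how many flags were correct) and recall (how much sensitive data was caught). The sketch below computes both from a hand-labeled sample; the values in `truly_sensitive` and `flagged` are made up purely for illustration.

```python
def precision_recall(flagged: set[str], truly_sensitive: set[str]) -> tuple[float, float]:
    """Compute precision and recall for one scan against hand-labeled truth."""
    true_positives = len(flagged & truly_sensitive)
    false_positives = len(flagged - truly_sensitive)   # flagged, but harmless
    false_negatives = len(truly_sensitive - flagged)   # sensitive, but missed
    precision = true_positives / (true_positives + false_positives) if flagged else 1.0
    recall = true_positives / (true_positives + false_negatives) if truly_sensitive else 1.0
    return precision, recall


# Made-up sample: three sensitive values, of which the tool caught two,
# plus one harmless order number that it flagged by mistake.
truly_sensitive = {"john@example.com", "123-45-6789", "ABCDE1234F"}
flagged = {"john@example.com", "123-45-6789", "ORDER-2024-0001"}

precision, recall = precision_recall(flagged, truly_sensitive)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision corresponds to the productivity cost of false positives; low recall corresponds to the exposure risk of false negatives.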

DeepSight: The only tool that does not break AI accuracy

Built by Protecto, DeepSight is an AI-native sensitive data identification engine designed specifically for today’s unstructured, high-volume, context-rich environments. It plugs directly into your data pipeline, understands real world use cases, and identifies PII, PHI, and proprietary data without breaking workflows.

Here’s a detailed breakdown on the technology behind DeepSight:

Unstructured data scanning

As discussed above, most traditional AI privacy tools are not designed to scan unstructured data for PII.

To combat this, Protecto combines three machine learning modules to maintain a high percentage of accuracy for PII identification across structured and unstructured data.

These modules have been trained on large datasets designed to cover a broad range of patterns, contexts, and semantics. Because the modules are continuously retrained on inputs from real use cases, they offer higher-than-industry-average output accuracy.

DeepSight is trained to understand PII in unstructured data by going far beyond basic keyword matching. It uses transformer-based language models trained to understand meaning, context, and relationships between words.

For example, if the prompt is “Talk to Jane, our patient with DOB 05/11/92 at Mercy Clinic,” DeepSight doesn’t just look for the date. It recognizes that, in this context, the date likely refers to a person’s date of birth (i.e., sensitive data), because surrounding cues like “patient” and “Mercy Clinic” inform the judgment.

Scanning through language variations

Protecto detects PII in multilingual prompts by combining language-agnostic models with language-specific training and rules. First, it identifies the language(s) present in the input prompt. Next, it uses transformer models trained on large multilingual corpora to understand syntax and semantics across many languages.

Protecto fine-tunes its models on labeled datasets in different languages. For example, PII in Hindi or Spanish might have different contextual cues compared to English, and the models are trained to understand these.

In unstructured multilingual prompts, Protecto uses surrounding context to confirm whether a string is sensitive. For instance, the name “Ali” in Arabic is common, but if it’s followed by a bank account number, Protecto flags it with higher confidence.

Once detected, PII is tokenized in a way that preserves the structure and utility of the data while removing exposure. This ensures multilingual prompts don’t lose meaning during obfuscation or redaction.
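To make the idea of structure-preserving tokenization concrete, here is a minimal sketch that swaps detected values for typed placeholder tokens and keeps a mapping so the text can be restored. The token format, the `tokenize` and `detokenize` helpers, and the regex detectors are all assumptions for illustration; the article does not describe Protecto’s actual tokenization scheme.

```python
import re

# Illustrative detectors; a multilingual model would replace these in practice.
PATTERNS = {
    "PHONE": re.compile(r"\+\d{1,3}(?: \d{1,4}){2,6}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def tokenize(text: str) -> tuple[str, dict[str, str]]:
    """Replace each detected value with a typed token; return text and vault."""
    vault: dict[str, str] = {}  # token -> original value
    seen: dict[str, str] = {}   # original value -> token (repeats reuse a token)

    def replacer(entity_type: str):
        def replace(match: re.Match) -> str:
            value = match.group(0)
            if value not in seen:
                token = f"<{entity_type}_{len(seen) + 1}>"
                seen[value] = token
                vault[token] = value
            return seen[value]
        return replace

    for entity_type, pattern in PATTERNS.items():
        text = pattern.sub(replacer(entity_type), text)
    return text, vault


def detokenize(text: str, vault: dict[str, str]) -> str:
    """Restore the original values from the vault."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


original = "También, my SSN is 123-45-6789 and mi numéro de téléphone es +33 6 12 34 56 78."
tokenized, vault = tokenize(original)
print(tokenized)
```

Because the token names the entity type, a downstream model can still tell that a phone number or SSN was present in the sentence, in whatever language surrounds it, without seeing the value.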

Context-aware semantic scanning to understand typos

Unlike most AI privacy tools, which scan for fixed strings, regex matches, or rigid dictionary entries, DeepSight is trained to scan for meaning. Its transformer-based models look beyond keyword patterns and analyze sentence context to determine whether something is actually sensitive.

For example, if you type “rahul(dot)mehta(at)gamil(dot)com” instead of “rahul.mehta@gmail.com”, a string-matching tool misses it.

Protecto solves this by shifting from surface-level string detection to context-aware semantic understanding. DeepSight is trained on large, noisy, real-world datasets to look beyond exact matches and understand intent.

If a user writes “My SSN is 123-45-678” (missing a digit) or “Card: 9876-xxxx-1234” (partially masked), DeepSight knows what an SSN looks like, how credit card numbers behave, and what they usually appear near. Instead of rigid templates, it uses deep learning models that recognize these patterns even when they’re malformed.

It also applies fuzzy matching and error-tolerant parsing techniques to detect slight spelling errors, character swaps, or masking styles commonly used to bypass filters. This enables DeepSight to flag sensitive information even when it’s badly typed, partially hidden, or intentionally obfuscated. This way, it goes beyond legacy privacy detection.
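The general idea of normalization plus fuzzy matching can be sketched with the Python standard library alone. This is a simplified stand-in, not DeepSight’s implementation: the `KNOWN_DOMAINS` list, the 0.8 similarity cutoff, and the helper names are assumptions chosen for the example.

```python
import difflib
import re

KNOWN_DOMAINS = ["gmail.com", "yahoo.com", "outlook.com"]  # illustrative list
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def normalize(text: str) -> str:
    """Undo common '(at)' and '(dot)' obfuscations before scanning."""
    text = re.sub(r"\s*[\(\[]\s*at\s*[\)\]]\s*", "@", text, flags=re.IGNORECASE)
    text = re.sub(r"\s*[\(\[]\s*dot\s*[\)\]]\s*", ".", text, flags=re.IGNORECASE)
    return text


def detect_emails(text: str) -> list[dict]:
    """Find emails after normalization and guess the intended domain for typos."""
    findings = []
    for match in EMAIL.finditer(normalize(text)):
        local, domain = match.group(0).rsplit("@", 1)
        # Fuzzy-match the domain against known providers to tolerate swaps.
        close = difflib.get_close_matches(domain.lower(), KNOWN_DOMAINS, n=1, cutoff=0.8)
        findings.append({
            "value": match.group(0),
            "likely_intended": f"{local}@{close[0]}" if close else match.group(0),
        })
    return findings


findings = detect_emails("Mail me at rahul(dot)mehta(at)gamil(dot)com please")
print(findings)
```

Normalizing first lets the ordinary email pattern fire, and the fuzzy step recognizes “gamil.com” as a likely misspelling of “gmail.com” rather than an unknown domain.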

Scanning complicated information like mixed data formats

Most AI privacy tools are designed for clean, structured rows and columns. Once formats get nested, multiline, or semi-structured, those tools either miss sensitive data or throw false flags.

The DeepSight engine is format-agnostic and optimized for unstructured and semi-structured data. It can parse, normalize, and contextually scan JSON with deeply nested fields, a log file with garbled timestamps and mixed metadata, or a scanned PDF converted to text.

Instead of relying on shallow pattern matching, Protecto’s deep learning models understand how PII, PHI, or PCI data tends to appear within different formats. For example:

- In JSON, it knows to traverse key-value pairs and detect sensitive values even if the field name is non-standard.

- In logs, it understands timestamped noise, user agent strings, and malformed inputs while still spotting embedded email IDs or access tokens.

- In freeform documents or transcripts, it reads like a human would—line by line, paragraph by paragraph—understanding structure and extracting meaning.

It doesn’t care what the format looks like; it cares what the data means, allowing DeepSight to perform with high accuracy where traditional tools simply give up.

Faster custom entity training

Each business handles its own set of sensitive data. It could include a combination of spiral codes, social security numbers, VIP user flags, driver’s license numbers, and other sensitive identifiers unique to the business. Unless your AI privacy tool lets you define custom entities, these unique identifiers become a privacy risk.

DeepSight’s model fine-tuning pipeline allows users to define custom entities. You can build and deploy custom detection models in days by uploading examples, labeling them with tags, validating the output, and finally shipping them. This removes the need for a dedicated machine learning operations effort or months of manual data labeling.

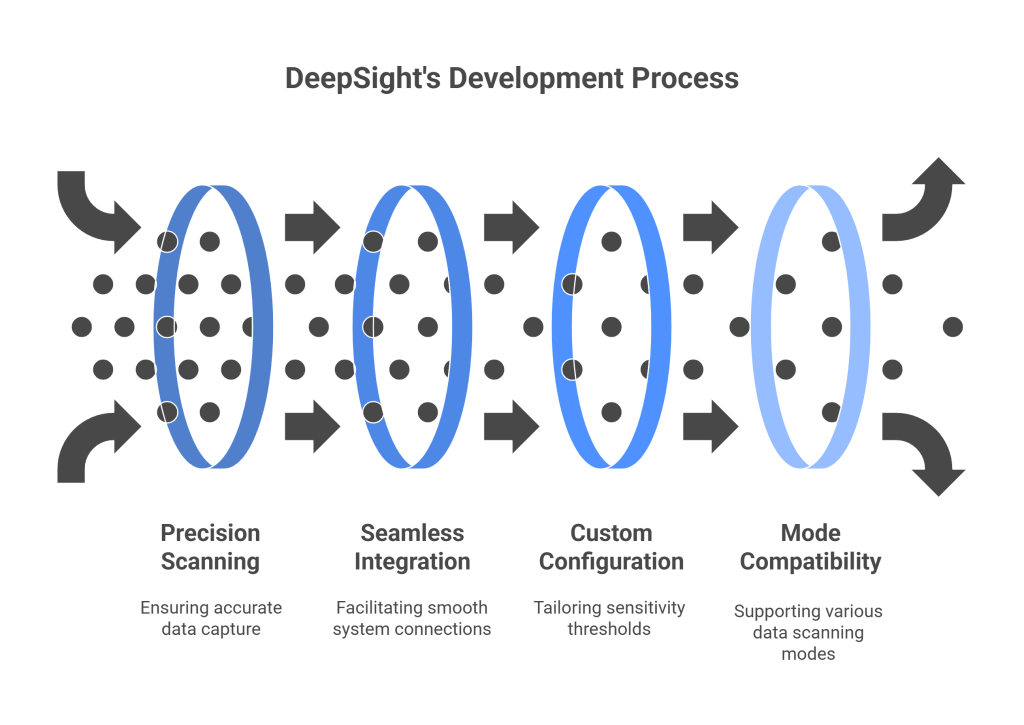

How DeepSight helps developers and businesses build smarter

DeepSight equips developers with the tools and technologies to seamlessly integrate robust privacy controls into their stack.

1. Precision scanning

Quickly scan large volumes of data with high accuracy, without spending hours on manual setup. It works with equal precision regardless of file type: PDF, JPG, Excel file, or plain text. DeepSight automatically adjusts to the type of data fed into it, so your team does not have to configure the settings.

2. Faster, seamless integration

DeepSight’s plug-and-play approach minimizes the coding required, enabling developers to integrate in hours instead of days. Users simply submit the input, and the system replies with the list and type of sensitive information found. It exposes a REST API to keep requests and responses simple:

    POST /scan
    {
      "text": "Hey, this is my PAN card: ABCDE1234F",
      "entities": ["PII", "PAN", "EMAIL"]
    }

The response includes entity type, confidence score, context window, and recommended redaction or token.
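For readers who want to see the request above assembled in code, here is a minimal sketch using only the Python standard library. The base URL, the `Authorization` header, and the `build_scan_request` helper are placeholders assumed for this example; the article specifies only the `POST /scan` path and the two body fields, so check the official API documentation for the real host, authentication scheme, and response schema.

```python
import json
import urllib.request

# Hypothetical base URL; substitute the endpoint issued for your account.
BASE_URL = "https://deepsight.example.invalid"


def build_scan_request(text: str, entities: list[str], api_key: str) -> urllib.request.Request:
    """Assemble (but do not send) a POST /scan request with a JSON body."""
    body = json.dumps({"text": text, "entities": entities}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/scan",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The bearer-token scheme is an assumption, not from the article.
            "Authorization": f"Bearer {api_key}",
        },
    )


request = build_scan_request(
    text="Hey, this is my PAN card: ABCDE1234F",
    entities=["PII", "PAN", "EMAIL"],
    api_key="YOUR_API_KEY",
)
print(request.get_method(), request.full_url)

# Sending is left commented out because the host above is a placeholder:
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```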

3. Configure custom sensitivity thresholds

DeepSight allows users to set a custom threshold for identifying sensitive data as per their business requirements and compliance obligations.

For example, if your business processes patients’ medical records, you can set the detection threshold to a higher level of caution. In contrast, if you are working on product insights, which generally do not include highly sensitive information, you can dial the threshold down to low caution to avoid false alarms.
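One way to picture a sensitivity threshold is as a minimum confidence score below which detections are ignored: higher caution means a lower cutoff, so more borderline items get flagged. The `Detection` class, the caution levels, and all scores below are hypothetical values chosen for illustration, not DeepSight’s actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    entity_type: str
    value: str
    confidence: float  # detector's score between 0 and 1


# Hypothetical mapping: higher caution flags lower-confidence detections too.
MIN_CONFIDENCE = {"high_caution": 0.30, "low_caution": 0.85}


def flag(detections: list[Detection], caution: str) -> list[Detection]:
    """Keep only detections at or above the cutoff for the chosen caution level."""
    cutoff = MIN_CONFIDENCE[caution]
    return [d for d in detections if d.confidence >= cutoff]


detections = [
    Detection("SSN", "123-45-6789", 0.97),
    Detection("DATE_OF_BIRTH", "05/11/92", 0.55),
    Detection("PERSON", "Mercy", 0.35),
]

medical_records = flag(detections, "high_caution")   # flags all three
product_insights = flag(detections, "low_caution")   # flags only the SSN
print(len(medical_records), [d.entity_type for d in product_insights])
```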

4. Data scanning mode compatibility

DeepSight works by protecting data in two ways:

- Batch mode: Scans large volumes of data or logs before they are used. For example, you can scan a folder containing customer emails for sensitive data.

- Streaming mode: Scans and protects data in real time as it flows into your system. Examples include sensitive information in a live chat, application traffic, or voice transcriptions.

No matter the mode, DeepSight logs every detection, letting your security team audit what’s been scanned, flagged, and redacted.
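The difference between the two modes, and the shared audit trail, can be sketched as follows. The single regex detector, the `audit_log` structure, and the function names are assumptions for this example rather than DeepSight’s interface.

```python
import re
from typing import Iterable, Iterator

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
audit_log: list[dict] = []  # one entry per detection, shared by both modes


def redact(text: str, source: str) -> str:
    """Redact emails and record each detection without storing the raw value."""
    def replace(match: re.Match) -> str:
        audit_log.append({"source": source, "entity": "EMAIL", "length": len(match.group(0))})
        return "<EMAIL>"
    return EMAIL.sub(replace, text)


def scan_batch(documents: dict[str, str]) -> dict[str, str]:
    """Batch mode: process a whole collection before it is used."""
    return {name: redact(text, source=name) for name, text in documents.items()}


def scan_stream(messages: Iterable[str]) -> Iterator[str]:
    """Streaming mode: redact each message lazily as it arrives."""
    for index, message in enumerate(messages):
        yield redact(message, source=f"stream[{index}]")


batch_result = scan_batch({
    "ticket-1.txt": "Contact john.doe@example.com",
    "ticket-2.txt": "No PII here",
})
stream_result = list(scan_stream(["hi", "my email is jane@example.com"]))
print(batch_result, stream_result, audit_log, sep="\n")
```

Using a generator for the streaming path means each message is redacted before the next one is read, which mirrors how a live chat or transcript would be handled.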

Architecture & Tech Stack Integration

- Language Support: Works across English and multiple international languages.

- Deployment Options: Cloud API (default), On-prem (for regulated industries), VPC deployment (for data residency)

- LLM-agnostic

- Security: Data never leaves your VPC (if you choose on-prem mode). Supports audit logging, role-based access, and end-to-end encryption.

Try DeepSight for free

Not sure if Protecto is the right tool for your business? No worries, you can get started with:

- Free scanning API access (limited tokens)

- Sample redaction/playground UI

- White-glove onboarding for enterprises

DeepSight sees what other tools can’t – that is the difference between an AI win and a compliance failure. Talk to our data privacy experts to discuss your needs.