Large language models like OpenAI’s GPT, Anthropic’s Claude, and Google’s Gemini have changed the way businesses process and transmit sensitive data. LLMs boosted productivity and enhanced customer experience like never before, triggering unprecedented adoption across enterprises.

Amid the rush and excitement, the downsides were overlooked and swept under the carpet until they became privacy and compliance problems. As privacy, ethical, and compliance issues began to surface, more enterprises started hitting the brakes on rapid AI adoption and rethinking their practices.

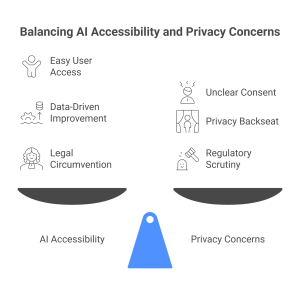

While the case for using LLMs is compelling, the risks are even more pressing. Here are three key enterprise LLM privacy concerns that explain why enterprises hesitate to share their data with LLMs:

1. Unclear or Insufficient Consent

Many AI tools are free, and users can access them without spending time or effort to set them up. This level of accessibility comes at a price: you pay with privacy.

While LLMs depend on data to function and improve performance over time, they often do so without the user’s consent. These tools allow users to interact immediately after signing in using just an email ID.

Terms and conditions are usually carefully crafted to avoid stringent regulatory scrutiny and potential lawsuits without attracting the attention of privacy-conscious netizens.

Let’s look at the registration page of Claude AI. Only a handful of users might click on the terms and conditions and actually read through them.

Moreover, LLM developers design their tools with the goal of improving their product over time. To meet this goal, the LLM should be able to collect data with minimal hindrance, which means privacy takes a backseat. This clash of interests has given rise to a number of debates around the safe use of AI in recent years.

Unfortunately, LLMs are winning the AI safety wars, as AI has permeated business workflows to a point where removing it would significantly hamper productivity.

Agentic AI tools are not built with a focus on privacy and transparency, but to collect as much data as possible within their legal scope. For example, if a customer gave consent for their data to be used “to improve our services,” it does not necessarily include sending their personal information to a third-party LLM.

This uncertainty forces enterprises to exercise caution, especially when data subject rights, such as those under GDPR or HIPAA, are involved. As a result, enterprises that use LLMs without a privacy guardrail risk exposing their internal, sensitive data.
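The consent example above can be sketched as a purpose check that runs before any customer data is shared. This is a minimal illustration, not a legal test: the `ConsentRecord` type, the purpose labels, and `may_send_to_llm` are all hypothetical names invented here, and a real consent model would likely be more granular.

```python
from dataclasses import dataclass, field

# Hypothetical purpose label; real consent taxonomies will differ.
THIRD_PARTY_LLM = "third_party_llm_processing"


@dataclass
class ConsentRecord:
    """Purposes a customer has explicitly agreed to (illustrative only)."""
    customer_id: str
    purposes: set[str] = field(default_factory=set)


def may_send_to_llm(record: ConsentRecord) -> bool:
    """Allow sharing only if the specific purpose was consented to.

    A broad purpose such as "improve_our_services" is deliberately
    not treated as covering third-party LLM processing.
    """
    return THIRD_PARTY_LLM in record.purposes


broad = ConsentRecord("c-1", {"improve_our_services"})
explicit = ConsentRecord("c-2", {"improve_our_services", THIRD_PARTY_LLM})

print(may_send_to_llm(broad))     # broad consent alone is not enough
print(may_send_to_llm(explicit))  # explicit purpose is present
```

Treating broad consent as insufficient is a conservative design choice; whether it matches a given regulation is a question for legal review.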

2. Data Residency and Cross Border Transfers

Concerned by the widespread misuse of personal data, many governments have developed data residency laws to protect the privacy of their citizens.

Data residency laws specify that certain types of data, such as personal information, must be stored and processed within the geographic boundaries of a specific country or region. They aim to ensure that sensitive information is handled in accordance with local legal requirements.

LLMs are often hosted on cloud infrastructure in the U.S. or other regions. For businesses subject to residency rules elsewhere, this is a blocker. In such cases, using internationally hosted LLMs can result in non-compliance with local regulations. Violations can lead to hefty penalties, possible loss of a business license, and damage to one’s reputation.

Even a single prompt that sends sensitive data to a non-compliant region could trigger a violation. Enterprises need to use LLMs with absolute certainty that data doesn’t leave legal perimeters. LLM APIs don’t always provide those guarantees.
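One way to picture a residency guardrail is a check that runs before every prompt leaves the enterprise. The sketch below is an assumption-laden illustration: the jurisdiction codes, region names, and the `ALLOWED_REGIONS` table are made up for the example, and actual residency rules depend on the applicable law and the LLM provider’s hosting guarantees.

```python
# Hypothetical mapping from a data subject's jurisdiction to the hosting
# regions where that data may be processed. The values are illustrative.
ALLOWED_REGIONS = {
    "EU": {"eu-west", "eu-central"},
    "IN": {"in-south"},
}


class ResidencyError(Exception):
    """Raised when a prompt would leave its permitted regions."""


def check_residency(jurisdiction: str, endpoint_region: str) -> None:
    """Fail closed: unknown jurisdictions have no permitted regions."""
    allowed = ALLOWED_REGIONS.get(jurisdiction, set())
    if endpoint_region not in allowed:
        raise ResidencyError(
            f"{jurisdiction} data may not be sent to region {endpoint_region!r}"
        )


check_residency("EU", "eu-west")  # permitted, returns silently

try:
    check_residency("EU", "us-east")
except ResidencyError as err:
    print(f"blocked: {err}")
```

The check fails closed on purpose, so a jurisdiction missing from the table blocks the request instead of letting it through.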

Enterprises that heavily depend on LLMs can comply with data residency laws without compromising on data availability or quality of output using tools like Protecto. Learn how.

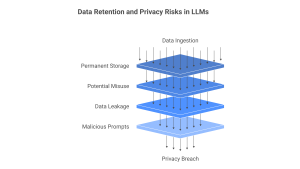

3. Data Retention and Model Training

LLMs essentially function like a black box for information: sending data to AI models is a one-way street. Once the system ingests your input, it may be retained indefinitely, and you typically cannot erase, edit, or remove it.

From a privacy and security perspective, this is a concern. LLMs may use the data for training, which infringes on privacy when that data includes sensitive personal information. Even if the vendor drafts a “no data will be used for training or marketing purposes” clause into their terms and conditions, a legal loophole could still allow the data to be used for training. LLMs can then unintentionally reveal this data in their outputs, exposing sensitive information to unauthorized users.

Moreover, malicious prompts can trick the model into disclosing or confirming sensitive information. There have been high-profile cases where data ended up in logs or was accidentally used for optimization.

How to address enterprise LLM privacy concerns: sharing data in a secure way

Enterprises want the power of LLMs but not at the cost of privacy, compliance, or user trust. Until AI systems can guarantee safe handling of enterprise data with clear controls and policies, companies will continue to block sensitive data from flowing into these models.

To address these challenges, enterprises can utilize a tool like Protecto to unlock the full potential of Large Language Models without compromising privacy or compliance. Its AI Privacy Guardrails ensure sensitive information is identified, masked, or tokenized with precision before it ever reaches an LLM, eliminating the exposure of private data at its source.
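To make the idea of masking or tokenizing before the LLM call concrete, here is a minimal sketch of the general technique. It is not Protecto’s API or implementation: the `tokenize` and `restore` functions, the token format, and the two regular expressions are assumptions chosen for illustration, and simple patterns like these would miss many kinds of sensitive data.

```python
import re

# Deliberately simple patterns (assumptions for this sketch only).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def tokenize(text: str) -> tuple[str, dict[str, str]]:
    """Replace matches with placeholder tokens and return the masked
    text plus a local vault mapping each token to its original value."""
    vault: dict[str, str] = {}

    def replacer(kind: str):
        def inner(match: re.Match) -> str:
            token = f"<{kind}_{len(vault) + 1}>"
            vault[token] = match.group(0)
            return token
        return inner

    masked = EMAIL.sub(replacer("EMAIL"), text)
    masked = SSN.sub(replacer("SSN"), masked)
    return masked, vault


def restore(text: str, vault: dict[str, str]) -> str:
    """Swap tokens in a model response back to the original values."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text


prompt = "Contact jane@example.com, SSN 123-45-6789."
masked, vault = tokenize(prompt)
print(masked)  # only this masked text would be sent to the LLM
print(restore(masked, vault) == prompt)
```

The vault stays inside the enterprise boundary, so the model only ever sees placeholder tokens while the original values can still be restored in the response.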

Protecto can be deployed across on-premises, cloud-based, or hybrid environments to restrict sensitive data, no matter where your LLM resides. This enables enterprises to leverage globally-hosted AI models without worrying about violating cross-border regulations.

Finally, Protecto ensures LLMs never ingest raw, unmasked sensitive data. This approach ensures data security, reduces exposure to malicious prompts, and safeguards against inadvertent leaks, allowing businesses to confidently maintain compliance with GDPR, HIPAA, and other privacy regulations.

With Protecto, enterprises don’t have to choose between powerful AI and robust privacy. They can embrace innovation without compromise.

Ready to safely harness the power of LLMs? Book your free trial now.