As enterprise data moves across applications, databases, and analytics pipelines, the uncontrolled proliferation of PII increases both compliance risk and the likelihood of a breach. IT leaders and product managers often struggle to find the best way to protect that data.

Protecto Vault helps organizations contain this risk by centralizing PII governance and offering two powerful architectural models to minimize data exposure: the Tokenization Model and the Centralized Profile Model. Let's look at how each model works.

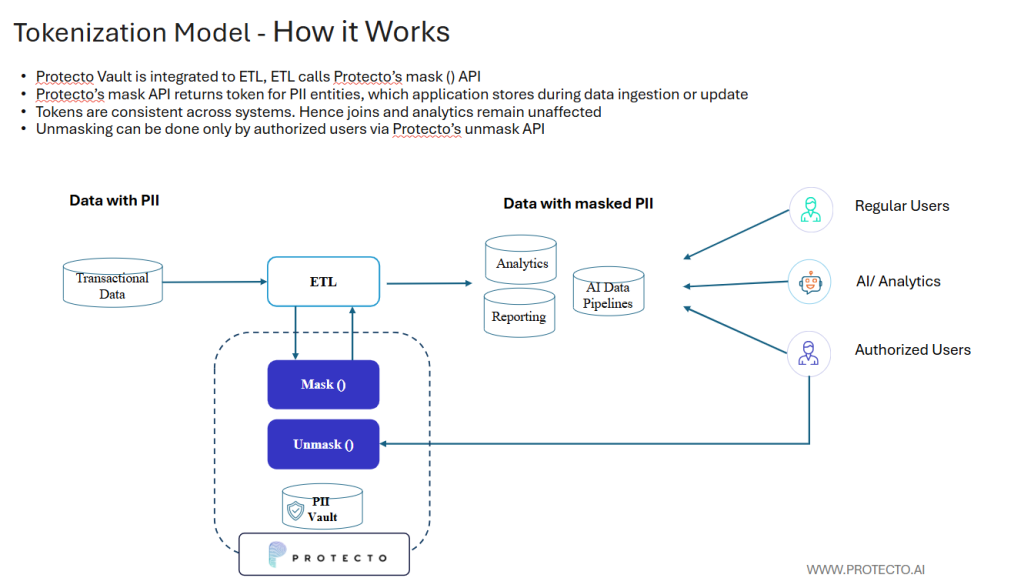

Approach 1: Tokenization Model (Data Masking)

The Tokenization Model replaces sensitive data with consistent, non-sensitive tokens while keeping the original database schema intact. Existing systems integrate without re-engineering, which makes this a low-effort, high-performance approach for large enterprises.

How It Works

Protecto Vault integrates with your ETL or data ingestion workflows through simple API calls:

- During data ingestion or updates, the Protecto mask() API replaces PII (like Email, Phone, or SSN) with unique tokens.

- Each token is stored in the Protecto vault, which holds the mapping from tokens back to the original PII.

- The tokens are consistent across systems, allowing joins and analytics to work exactly as before.

- Authorized users can retrieve original PII only via controlled unmask() API calls.
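The steps above can be sketched in a few lines of Python. This is a toy, in-memory illustration and not Protecto's SDK: the `ToyVault` class, its method signatures, the `tok_` prefix, and the `authorized` flag are all assumptions made for the example. Only the operation names mask() and unmask() come from the description above.

```python
import secrets


class ToyVault:
    """In-memory stand-in for a token vault (hypothetical, not Protecto's API)."""

    def __init__(self):
        self._token_by_value = {}  # original PII -> token
        self._value_by_token = {}  # token -> original PII

    def mask(self, value: str) -> str:
        """Return the token for a value, creating a random one on first use."""
        if value not in self._token_by_value:
            # Random token: it carries no information about the value itself.
            token = "tok_" + secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return self._token_by_value[value]

    def unmask(self, token: str, authorized: bool) -> str:
        """Return the original value, but only for authorized callers."""
        if not authorized:
            raise PermissionError("caller is not allowed to unmask tokens")
        return self._value_by_token[token]


vault = ToyVault()

# Two source systems tokenize the same email during ingestion.
crm_rows = [{"email": vault.mask("ana@example.com"), "plan": "pro"}]
billing_rows = [{"email": vault.mask("ana@example.com"), "amount": 49}]

# Because tokens are consistent, a join on the tokenized column still matches.
joined = [
    {**crm, **bill}
    for crm in crm_rows
    for bill in billing_rows
    if crm["email"] == bill["email"]
]
```

Here `joined` contains one combined row keyed by the token rather than the email address, and the address can only be recovered by calling `vault.unmask(token, authorized=True)`. A real deployment would replace the boolean flag with policy checks and persist the mapping in a hardened store.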

Key Benefits

- Schema Unchanged: No need to alter existing tables or relationships. Applications and reports run seamlessly on tokenized data.

- High Performance: Because tokens are consistent, they stand in for the original values in joins and aggregations, so analytics run on tokenized columns without a vault lookup for each row.

- Secure by Design: Tokens are generated using entropy-based true random numbers, so they carry no pattern and cannot be reversed to the original value without access to the vault mapping.

- Controlled Access: Only authorized users, governed by admin policies or Active Directory, can unmask tokens.

- Scalable Integration: Works across multiple systems and databases with minimal dependency.

In short, the Tokenization Model offers quick integration, strong security, and scalability, making it ideal for organizations that need immediate compliance protection with minimal disruption.

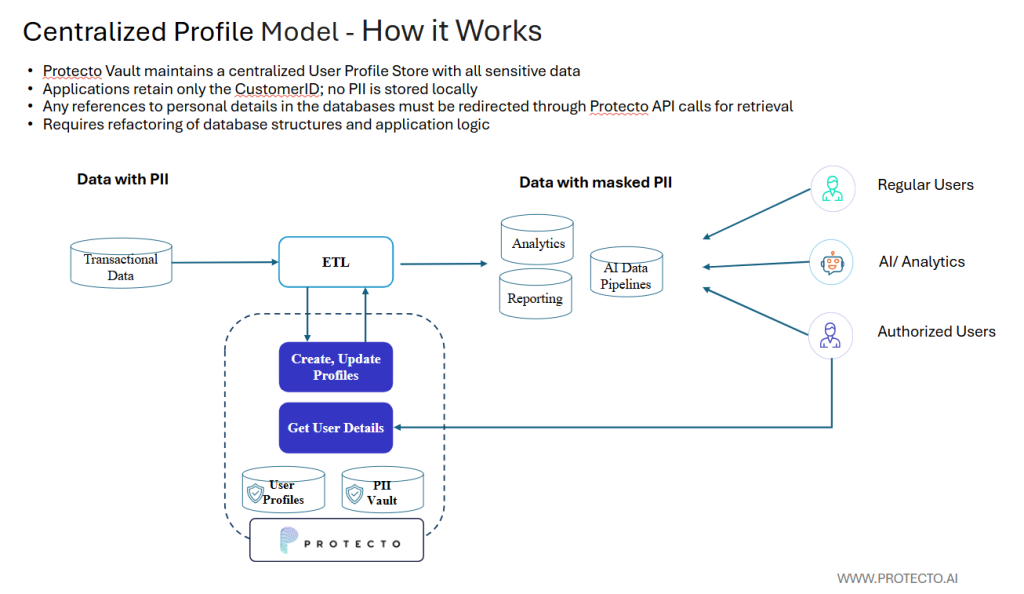

Approach 2: Centralized Profile Model (Data Isolation)

The Centralized Profile Model takes a more transformative approach. It extracts all personal data from operational databases and consolidates it in a secure User Profile Store within Protecto Vault. Operational tables retain only reference IDs (like CustomerID), and any request for personal data is served through Protecto’s API.

How It Works

- All PII is moved from individual tables into a centralized User Profile Store.

- Applications now hold only reference IDs, not actual PII.

- Any request for personal data must go through Protecto Vault APIs (getUserDetails()), ensuring a single point of access and auditability.
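As a rough illustration of this access pattern, the sketch below keeps profiles in a single store and records every lookup in an audit log. It is a toy model, not Protecto's API: `ToyProfileStore`, `put_profile`, the `requester` and `fields` parameters, and the audit record layout are assumptions, and `get_user_details` is only a Python-style stand-in for the getUserDetails() call named above.

```python
from datetime import datetime, timezone


class ToyProfileStore:
    """In-memory stand-in for a central profile store (hypothetical)."""

    def __init__(self):
        self._profiles = {}   # customer_id -> profile dict
        self.audit_log = []   # one record per access attempt

    def put_profile(self, customer_id: str, profile: dict) -> None:
        self._profiles[customer_id] = dict(profile)

    def get_user_details(self, customer_id: str, requester: str, fields: list) -> dict:
        # Record the access attempt before touching the data.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "requester": requester,
            "customer_id": customer_id,
            "fields": list(fields),
        })
        profile = self._profiles[customer_id]
        # Return only the fields the caller asked for.
        return {name: profile[name] for name in fields}


store = ToyProfileStore()
store.put_profile(
    "C-17",
    {"name": "Ana Silva", "email": "ana@example.com", "phone": "555-0100"},
)

# The operational table holds a reference ID and no personal data.
orders = [{"order_id": 1001, "customer_id": "C-17", "total": 120.0}]

details = store.get_user_details(
    orders[0]["customer_id"],
    requester="shipping-service",
    fields=["name", "email"],
)
```

The order row never carries the name or email; the shipping service receives them only through the store, and `store.audit_log` shows who asked for which fields and when.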

Key Benefits

- Single Source of Truth: All personal data resides in one secure repository, simplifying governance and audits.

- Reduced Exposure: Operational databases never hold clear-text PII.

- Compliance Strength: Simplifies privacy operations such as Data Subject Requests (DSRs), including deletion under the “Right to be Forgotten.”

- Strong Governance: Ensures every access or update to PII is traceable and policy-enforced.
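To see why centralization can simplify a deletion request, consider the minimal sketch below. It assumes, for illustration only, that profiles live in a single dictionary keyed by customer ID and that operational tables reference that ID; the `forget_customer` helper and the table contents are hypothetical.

```python
# Central store: the only place personal data lives in this toy model.
profiles = {
    "C-17": {"name": "Ana Silva", "email": "ana@example.com"},
    "C-42": {"name": "Raj Patel", "email": "raj@example.com"},
}

# Operational tables hold reference IDs only.
orders = [
    {"order_id": 1001, "customer_id": "C-17", "total": 120.0},
    {"order_id": 1002, "customer_id": "C-42", "total": 35.5},
]
support_tickets = [{"ticket_id": 9, "customer_id": "C-17", "status": "closed"}]


def forget_customer(profile_store: dict, customer_id: str) -> bool:
    """Delete a customer's profile; return True if a profile was removed."""
    return profile_store.pop(customer_id, None) is not None


removed = forget_customer(profiles, "C-17")

# Operational rows are untouched, but their IDs no longer resolve to a person.
unresolvable = [
    row["customer_id"]
    for row in orders + support_tickets
    if row["customer_id"] not in profiles
]
```

A single deletion in the store handles the request; the order and ticket rows stay in place for reporting, and `unresolvable` lists the reference IDs that can no longer be linked back to a profile.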

While this approach delivers maximum isolation and compliance, it requires significant database and application re-architecture, making it a high-effort model best suited for organizations prioritizing deep privacy controls.

Comparison: Choosing the Right Model

| Factor | Tokenization Model | Centralized Profile Model |
| --- | --- | --- |
| Implementation Effort | Low: schema unchanged, quick integration | High: major re-engineering required |
| Performance | High: analytics on tokens, no frequent lookups | Moderate: frequent API calls introduce latency |
| Compliance | Moderate: deletion requests must identify related tokens | Strong: centralized data simplifies DSRs |
| Scalability | Easy: minimal dependency, quick rollout | Hard: dependency on Profile Store and APIs |
| Best For | Quick integration, analytics-heavy environments | Privacy-first organizations prioritizing deletion and auditability |

Conclusion

Both approaches significantly reduce the risk of PII exposure and improve data governance.

For most enterprises, the Tokenization Model offers the best balance of speed, security, and scalability. It allows existing systems to operate without disruption while providing strong protection through true random tokenization.

Organizations with complex regulatory environments or frequent data deletion requests may find the Centralized Profile Model better suited for long-term compliance and data isolation.

In both cases, Protecto Vault enables enterprises to protect sensitive data while preserving the speed, accuracy, and flexibility their AI and analytics systems demand.