Tokenization replaces sensitive data with non-sensitive stand-ins called tokens. The mapping between the token and the original value sits in a secure service or vault. If attackers steal a database full of tokens, the stolen data has little value. This is why tokenization is popular for payment card industry (PCI) workloads, customer PII, and healthcare records.

However, like any control, tokenization has weak points and practical limits. This article explains the real challenges and limitations of data tokenization, then shows how to design around them. You will see concrete examples, patterns that scale, and checklists that you can use in your next sprint.

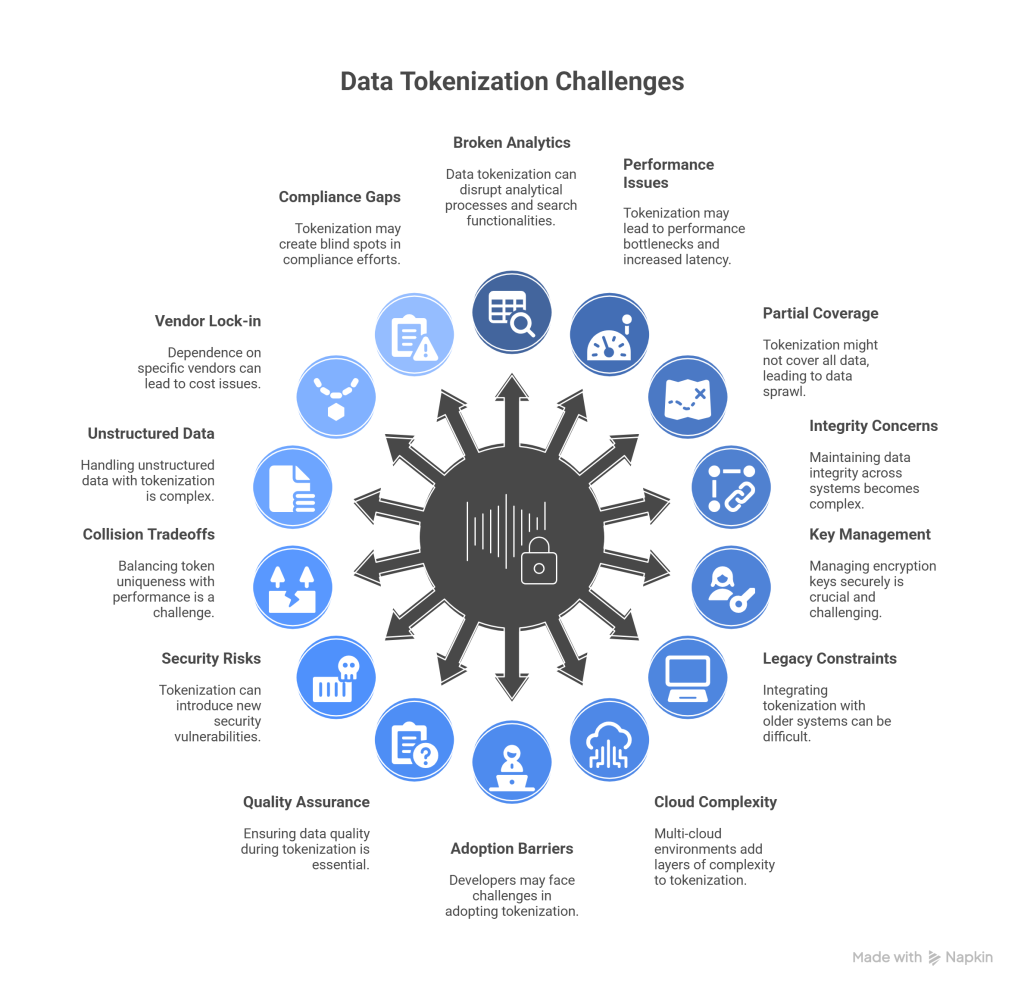

The challenges and limitations of data tokenization

1) Broken analytics and search

Tokens are not useful for text search, fuzzy match, or machine learning features. Deterministic tokenization can support exact match joins. It still blocks sorting by range, numeric operations, and free-text search. Teams discover this when their dashboards return empty results or when fraud models lose accuracy.

Mitigation. Keep raw data in a secure analytics environment. Use privacy-preserving transforms that support computation, such as format-preserving encryption for sortable fields or salted hashing for joins that do not need detokenization. Document which fields are tokenized deterministically vs randomly.

2) Performance and latency

Every tokenization or detokenization call adds network hops and I/O. A card vault that handles 200 requests per second in testing can face thousands per second in production during a campaign. If the token service stalls, the app stalls.

Mitigation. Use local caching for read-heavy detokenization, with strict TTLs and hardware security modules for key protection. Shard vault data by tenant or region. Batch operations when possible. Load-test the token path with realistic traffic and failure scenarios.

3) Partial coverage and data sprawl

Sensitive values hide in strange places. Think logs, screenshots, BI extracts, sandbox copies, or SaaS syncs. If your tokenization strategy covers only primary tables, the risk remains. Attackers aim for the weakest link.

Mitigation. Start with automated data discovery and classification across data stores, SaaS apps, and pipelines. Inventory where sensitive data lives, where it moves, and who can access it. Then define enforceable policies.

4) Cross-system referential integrity

Users expect one customer to be the same customer across CRM, billing, and support. Random tokenization breaks referential integrity. Deterministic tokenization can preserve joins, but it raises the risk that tokens leak linkage across datasets.

Mitigation. Use deterministic tokenization within a defined trust boundary. Introduce per-domain salts or keys to prevent linkage across domains that should not be correlated. Maintain a clear data contract that lists which fields must join and where.

5) Key and vault management

Tokenization reduces the blast radius of a breach. It also creates a single service that must not fail. The vault’s security model, HA design, and audit controls become central. Poor rotation, weak authentication, or missing tamper evidence can undo the benefits.

Mitigation. Treat the vault like a critical payment system. Enforce strong identity and MFA. Rotate keys and salts on a set schedule. Keep immutable logs. Practice break-glass procedures. Perform third-party pen tests. If you use a vendor, review their SOC 2 reports and shared responsibility model.

6) Legacy system constraints

Many legacy apps assume readable strings or numeric types. They may validate checksums or mask digits on the UI. A token that looks valid but fails checksum can break the flow. PDF templates and batch files can be even stricter.

Mitigation. Use format-preserving tokens that pass validation and meet length constraints. Where checksum is required, use format-preserving encryption with valid Luhn digits for card-like tokens. Test with the oldest batch jobs and printing flows, not just APIs.

7) Multi-cloud and SaaS complexity

Modern stacks span AWS, Azure, GCP, and dozens of SaaS tools. Each platform moves and transforms data differently. Centralized tokenization can become a bottleneck. Decentralized tokenization can get inconsistent.

Mitigation. Adopt a hub-and-spoke model. Use a central policy service and distributed tokenization gateways close to the data. Synchronize policy, not secrets. Provide SDKs and APIs that feel native in each platform. Protecto’s policy engine can act as the central source of truth while enforcing controls at the edge of each data zone.

8) Developer experience and adoption

If tokenization APIs complicate development, engineers will work around them. Shadow copies of data appear. That leads to inconsistent tokens and surprise detokenization needs.

Mitigation. Make secure use of the path of least resistance. Provide lightweight client libraries, declarative annotations for fields, and code samples by framework. Integrate policy checks into CI. Offer a test mode with fake tokens that behave like real ones.

9) Testing, QA, and data quality

Masked or tokenized test data can break test scripts. QA teams often copy production data to stage to preserve behavior. That creates risk and compliance issues.

Mitigation. Generate high-fidelity synthetic data that matches distribution, edge cases, and referential integrity. Use deterministic tokens in test environments when you need realistic joins. Block detokenization outside production. A platform like Protecto can automate realistic test data with consistent tokens across services.

10) Insider threats and access misuse

Tokenization does not stop an admin who can detokenize everything. Over-privileged service accounts present the same risk.

Mitigation. Enforce least privilege with attribute-based access control. Wrap detokenization with policy checks tied to user role, purpose, and context. Require approvals for bulk exports. Alert on anomalous detokenization patterns.

11) Token collisions and determinism tradeoffs

Random tokens avoid collisions but break joins. Deterministic tokens enable joins. They must handle collisions, skew, and predictability. If an attacker knows the algorithm and sees enough tokens, they can infer popular values.

Mitigation. Use per-field salts and tenant-specific secrets. Monitor token distribution and collision rates. For high-cardinality fields, prefer random tokens. For low-cardinality fields like country codes, consider hashing then encrypting to reduce inference risk.

12) Unstructured and semi-structured data

Documents, images, chat transcripts, and logs carry sensitive data in free text. Traditional tokenization focuses on columns. Manual redaction is error prone.

Mitigation. Use NLP-based detection to find PII and PHI in text, then apply contextual redaction or replacement. Keep a provenance record for audit. For generative AI use cases, consider prompt-time redaction with policy-based detokenization at retrieval.

13) Vendor lock-in and cost

Tokenization can be cheap at small scale and expensive at high TPS. Vault egress fees, per-call pricing, and migration costs can surprise teams. Moving from one token format to another is hard.

Mitigation. Choose open formats for tokens when possible. Keep a migration plan and export procedure. Isolate vendor-specific logic behind an internal interface. Track unit economics early. Run canary workloads with two providers to keep options open.

14) Compliance blind spots

It is easy to assume that tokenization alone makes you compliant. Regulators look at data life cycle, purpose limitation, and user rights. A process that cannot fulfill a subject access request or right to deletion can fail audits, even if everything is tokenized.

Mitigation. Map user identities to tokens. Document data flows end to end. Prove that you can retrieve, correct, and delete a subject’s data across systems. Protecto’s data maps and policy logs can help automate subject request workflows while keeping raw data protected.

Quick comparison: tokenization vs adjacent techniques

| Technique | What it does | Strengths | Weak spots | Good for |

| Tokenization | Replace value with mapped token | Strong breach reduction, format preservation, access audit | Analytics, cross-system joins, latency | PCI, PII storage, app fields |

| Format-Preserving Encryption | Encrypt while keeping length and charset | Supports validation and sorting in some modes | Key management, performance | Legacy schemas, fields with checksums |

| Hashing with Salt | One-way mapping | Exact-match joins without detokenization | No recovery, leakage on low-cardinality values | Linking across systems without clear text |

| Masking/Redaction | Remove or obfuscate portions | Simple, fast, safe for sharing | Loses utility | Log sharing, demos, support tickets |

| Differential Privacy | Adds statistical noise | Strong privacy for aggregates | Complex to tune, not for row-level use | Analytics, reporting at scale |

Many teams mix these. The trick is to match the technique to the use case. This is where policy-driven orchestration helps. A platform like Protecto can apply tokenization for storage, hashing for joins, and redaction for sharing, all from one policy.

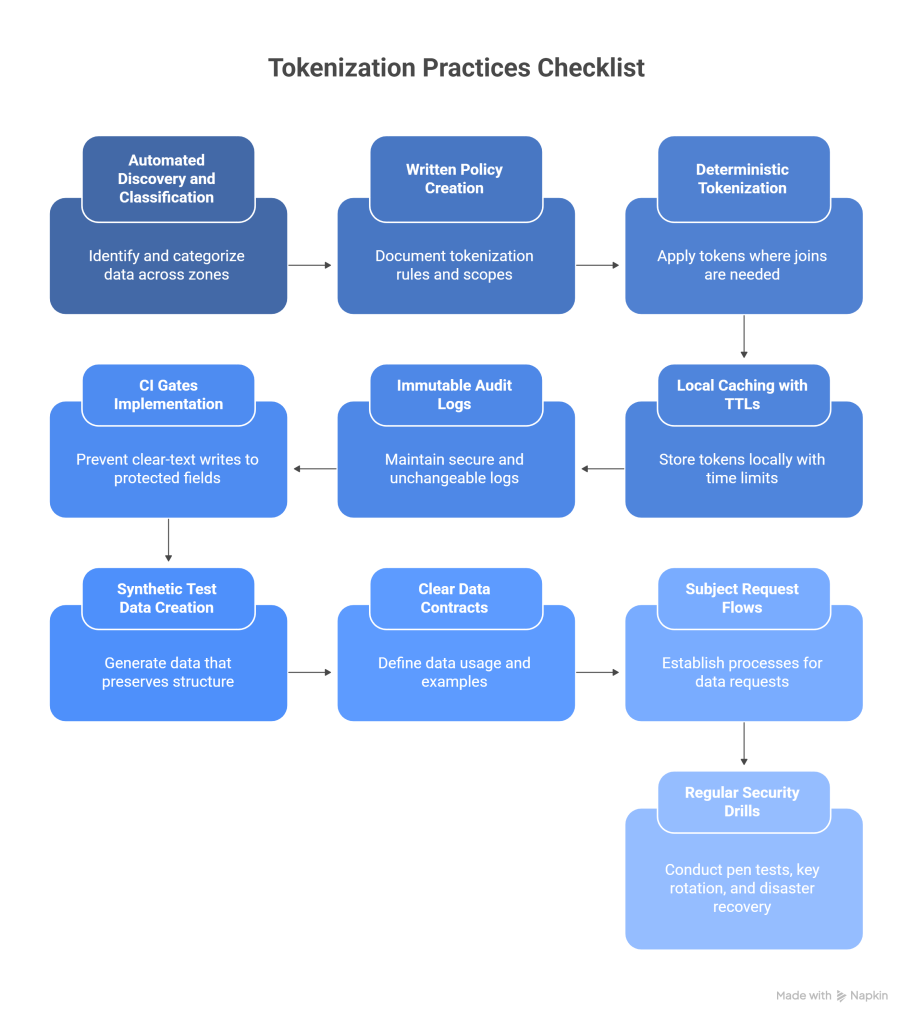

Best tokenization practices checklist

- Automated discovery and continuous classification across all data zones.

- Written policy that maps fields to transforms with scope and purpose.

- Deterministic tokens only where joins are required and scoped to a domain.

- Local caching with strict TTLs and HSM-backed keys.

- Immutable, centralized audit logs with anomaly alerts.

- CI gates that block clear-text writes to protected fields.

- Synthetic test data that preserves structure and edge cases.

- Clear data contracts in the catalog with examples.

- Proven subject request flows. retrieve, correct, delete.

- Regular pen tests, key rotation, and disaster recovery drills.

How Protecto helps

Protecto is a privacy and data security platform that makes tokenization practical at scale. It helps you avoid common pitfalls while getting value from data.

- Automated discovery. Scan databases, data lakes, SaaS apps, and logs to find PII, PHI, and payment data.

- Policy-driven protection. Map fields to tokenization, encryption, hashing, or redaction based on context.

- Domain-scoped tokens. Support deterministic tokens for joins with tenant or domain scoping to prevent unwanted linkage.

- AI-safe workflows. Redact sensitive text for LLM prompts. Re-identify only for authorized users with full audit.

- Developer experience. Lightweight SDKs, sidecar gateways, and CI checks that keep teams productive.

- Auditing and subject rights. Immutable logs and automated subject access and deletion workflows.