As artificial intelligence (AI) adoption grows, AI guardrails help ensure that these systems are used safely, accurately, and ethically. Guardrails are a set of protocols and best practices designed to mitigate risks associated with AI, such as bias, misinformation, and security threats. They play a vital role in shaping how AI systems, particularly generative AI, are developed and deployed.

Without these safeguards, AI systems can inadvertently produce harmful or misleading content or expose sensitive data. Establishing AI guardrails helps ensure that AI behaves predictably and stays aligned with user intentions and regulatory standards. Guardrails also help organizations maintain accountability while minimizing the reputational and financial risks associated with AI misuse.

By enforcing AI guardrails, businesses can harness AI’s potential in a controlled environment, safeguarding against unintended consequences while adhering to privacy, security, and ethical guidelines. This approach builds user trust and facilitates AI’s broader acceptance across various industries.

Understanding Guardrails in the Context of Generative AI

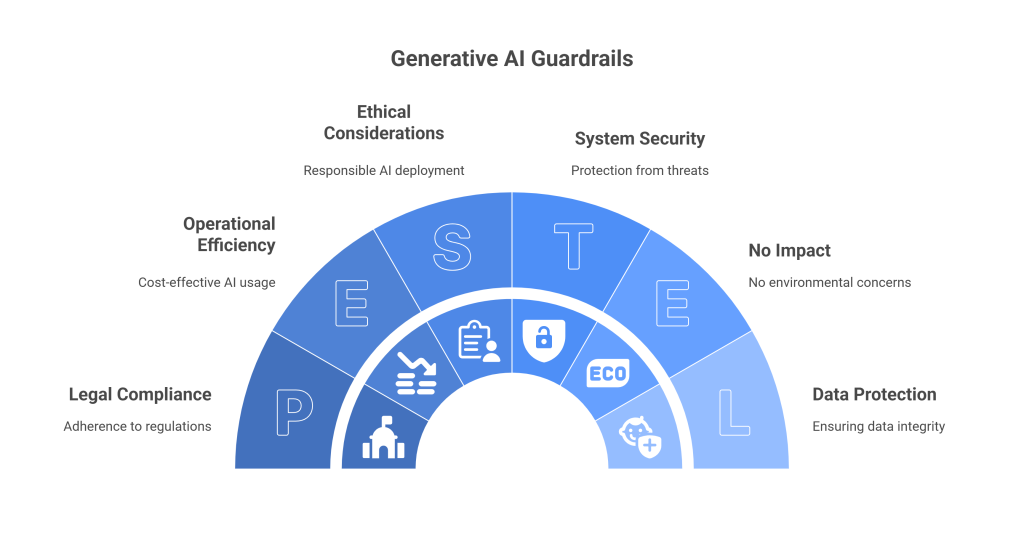

Generative AI (gen AI) guardrails are essential for guiding the safe and responsible use of generative AI. They provide a structured framework that keeps AI systems within acceptable ethical, legal, and operational boundaries. For generative models, this means reducing unintended outputs such as biased content or inaccurate information.

Organizations use several types of guardrails to regulate AI systems. These include:

- Operational guardrails – Focus on ensuring that AI systems function according to predefined rules and comply with legal and ethical standards.

- Safety guardrails – Prioritize the protection of users by preventing harmful or inappropriate outputs from AI systems.

- Security guardrails – Safeguard AI systems from external threats, ensuring data privacy and integrity are maintained.
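To make the three categories more concrete, here is a minimal Python sketch of how they might be layered around a single model call. It assumes a hypothetical `generate` callable standing in for the model; the blocked-phrase list, length limit, and email regex are illustrative placeholders rather than a real policy, and simple substring matching is far weaker than the classifiers production systems typically rely on.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Illustrative placeholder policy values (assumptions, not recommendations).
BLOCKED_PHRASES = {"build a bomb"}
MAX_PROMPT_CHARS = 2000
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reasons: list[str] = field(default_factory=list)


def operational_check(prompt: str) -> list[str]:
    """Operational guardrail: enforce a predefined rule (here, an input length limit)."""
    return ["prompt too long"] if len(prompt) > MAX_PROMPT_CHARS else []


def safety_check(text: str) -> list[str]:
    """Safety guardrail: flag text containing blocked phrases (naive, case-insensitive match)."""
    lowered = text.lower()
    return [f"blocked phrase: {p}" for p in sorted(BLOCKED_PHRASES) if p in lowered]


def security_redact(text: str) -> str:
    """Security guardrail: mask email addresses so they are neither sent to nor echoed by the model."""
    return EMAIL_RE.sub("[REDACTED EMAIL]", text)


def guarded_call(prompt: str, generate: Callable[[str], str]) -> GuardrailResult:
    # Check the input before it ever reaches the model.
    reasons = operational_check(prompt) + safety_check(prompt)
    if reasons:
        return GuardrailResult(False, "", reasons)
    output = generate(security_redact(prompt))
    # Check the output before it reaches the user.
    reasons = safety_check(output)
    if reasons:
        return GuardrailResult(False, "", reasons)
    return GuardrailResult(True, security_redact(output))


# Usage with a stand-in "model" that simply echoes its input:
result = guarded_call("Email me at alice@example.com", lambda p: f"Echo: {p}")
print(result.text)  # Echo: Email me at [REDACTED EMAIL]
```

Checking both the prompt and the response reflects the idea that inputs and outputs can each carry risk; the order of checks shown here is one reasonable choice, not a standard.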

By implementing these types of guardrails, companies can reduce the risks associated with AI deployment. These measures also help organizations adhere to global standards for AI safety and ethics, which are becoming increasingly important as AI systems are integrated into critical operations.

Properly defined gen AI guardrails protect organizations and enable them to scale AI adoption while mitigating potential threats. They help ensure that AI behaves in ways that align with human values and societal norms, fostering trust in these emerging technologies.

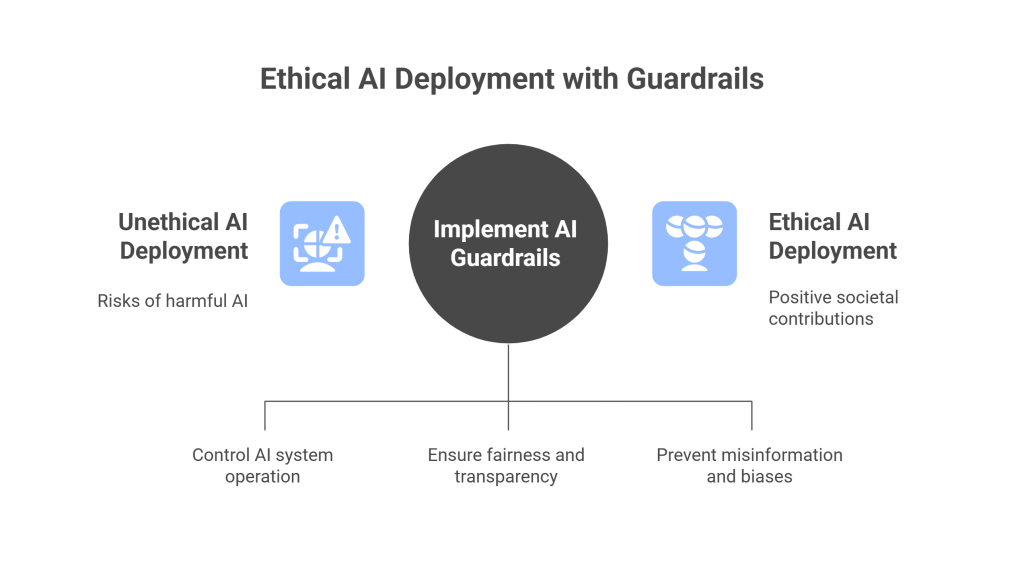

The Role of AI Guardrails in Ensuring Ethical AI Deployment

AI guardrails play a critical role in mitigating risks during AI deployment. By enforcing boundaries on how AI systems operate, they ensure that the technology aligns with ethical standards and societal norms. These guardrails help prevent harmful outcomes, such as biased decision-making, data privacy violations, or misuse of AI for malicious purposes.

Risks can arise from several factors in AI deployment, including unintended model outputs, bias in data, and security vulnerabilities. Guardrails provide the necessary controls to manage these risks by enforcing fairness, transparency, and accountability in AI processes. This creates an environment where AI can safely integrate into various sectors without compromising ethical standards.
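As one illustration of what enforcing fairness can look like in practice, the Python sketch below computes a demographic parity gap: the difference in positive-outcome rates between groups. This is only one of several fairness metrics, and the `(group, approved)` record format and the 0.1 tolerance are illustrative assumptions, not a regulatory threshold.

```python
from collections import defaultdict
from typing import Hashable, Iterable


def selection_rates(records: Iterable[tuple[Hashable, bool]]) -> dict[Hashable, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[Hashable, int] = defaultdict(int)
    positives: dict[Hashable, int] = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(records: Iterable[tuple[Hashable, bool]]) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


def passes_parity_check(records: Iterable[tuple[Hashable, bool]], tolerance: float = 0.1) -> bool:
    # Hypothetical tolerance: a gap above it flags the model for review.
    return demographic_parity_gap(records) <= tolerance


decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(round(demographic_parity_gap(decisions), 2))  # 0.3
print(passes_parity_check(decisions))  # False
```

A gap on its own does not prove unfairness; in a guardrail setting it is best treated as a signal that triggers human investigation.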

Guardrails for generative AI, specifically, help ensure that AI-generated content does not spread misinformation or harmful biases. They can include safeguards like content moderation filters, bias-detection algorithms, and output validation mechanisms. These measures protect organizations from reputational damage and legal risks while enhancing public trust in AI systems.
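Output validation is the most mechanical of these safeguards. The following Python sketch assumes an application that asks the model for a JSON object with an `answer` string and a `confidence` number between 0 and 1 (a hypothetical format chosen for illustration). Output that fails to parse or violates that shape is rejected, the model is retried a bounded number of times, and the application then falls back to a safe default.

```python
import json
from typing import Callable, Optional

# Hypothetical output contract: field name -> accepted Python type(s).
REQUIRED_FIELDS = {"answer": str, "confidence": (int, float)}
FALLBACK = {"answer": "Sorry, I could not produce a reliable answer.", "confidence": 0.0}


def validate_output(raw: str) -> Optional[dict]:
    """Return the parsed output if it matches the expected shape, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for name, expected_type in REQUIRED_FIELDS.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            return None
    if not 0.0 <= data["confidence"] <= 1.0:
        return None
    return data


def generate_validated(prompt: str, generate: Callable[[str], str], max_attempts: int = 3) -> dict:
    """Retry the model until its output validates, then fall back to a safe default."""
    for _ in range(max_attempts):
        result = validate_output(generate(prompt))
        if result is not None:
            return result
    return dict(FALLBACK)
```

The key design choice is returning a fixed fallback rather than the raw text: when validation fails, nothing unchecked reaches the user.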

By using AI guardrails, organizations can confidently deploy AI technologies, knowing they have the mechanisms to mitigate potential risks. These guardrails ensure that AI systems contribute positively to society while preventing unintended negative consequences.

Case Studies and Examples of AI Guardrails

Real-world implementations of AI guardrails provide clear insights into their effectiveness in addressing the challenges of AI systems. These case studies highlight how businesses and organizations have successfully integrated guardrails to ensure ethical and secure AI operations.

One prominent example is Microsoft’s Responsible AI framework, which includes guardrails for fairness, transparency, and accountability in AI applications. The framework requires extensive testing and monitoring during the development and deployment of AI models. It is intended to reduce bias and misuse in products such as Azure AI services and chatbot systems, keeping the technology within defined ethical parameters.

Another case comes from OpenAI, which applies guardrails to generative models such as GPT. OpenAI uses techniques such as reinforcement learning from human feedback (RLHF) to steer outputs toward safe, helpful behavior, alongside usage policies and content moderation. These guardrails reduce, though they cannot fully eliminate, harmful or biased content, protecting both users and developers.

Additionally, Credo AI provides an AI governance platform that helps businesses establish control over their AI deployments. The platform includes guardrails focused on regulatory compliance, risk management, and ethical AI practices, allowing organizations to deploy AI responsibly while meeting the relevant legal and ethical standards.

These examples demonstrate the versatility of guardrails in safeguarding AI, reducing risks, and fostering responsible innovation.

Frameworks and Strategies for Implementing AI Guardrails

Organizations need robust frameworks and strategic approaches to effectively implement AI guardrails that align with their specific AI goals. These frameworks are blueprints for maintaining ethical standards, regulatory compliance, and system integrity across AI deployments.

One widely used approach is an AI governance framework, which sets out the principles and rules AI systems must follow to ensure responsible use. Platforms like Credo AI offer a governance structure that allows companies to manage risks and meet compliance requirements while deploying AI. Such a framework provides checks and balances, building transparency and ethical practices into every step of AI development.

Aporia’s monitoring tools are another example. They provide real-time monitoring to detect issues such as bias, drift, or inaccurate predictions in machine learning models so that teams can correct them. These guardrails help maintain model accuracy and keep models operating within the ethical guidelines the organization has defined.
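Aporia’s internals are not described here, but drift monitoring in general often relies on simple statistics. The Python sketch below computes the Population Stability Index (PSI), a common drift measure that compares how a feature or model score is distributed in live traffic against a reference sample: the sum over bins of (actual share minus expected share) times the log of their ratio. The equal-width binning, the `eps` floor for empty bins, and the 0.1 and 0.25 alert bands are widely used rules of thumb, not values taken from any particular vendor.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to the reference sample `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant reference

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the reference range; out-of-range values go to the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score: float) -> str:
    # Common rule-of-thumb bands; tune them per model and feature.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


reference = [i / 100 for i in range(100)]
shifted = [0.9 + i / 1000 for i in range(100)]
print(drift_status(psi(reference, reference)))  # stable
print(drift_status(psi(reference, shifted)))  # significant drift
```

In a real-time setting, a check like this would typically run on a sliding window of recent traffic, with an alert raised when the status leaves the "stable" band.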

Strategically, many companies adopt human-in-the-loop (HITL) approaches as part of their AI guardrails. This strategy places human oversight in decision-making processes, so that people remain accountable for AI-driven outcomes and systems operate within ethical boundaries. Continuous auditing and testing of AI models further strengthens these guardrails, allowing businesses to respond quickly to identified risks or shortcomings.
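A human-in-the-loop step is often implemented as a routing rule. This Python sketch assumes each model decision carries a confidence score and a high-risk flag (both hypothetical fields). Anything high-risk or below a confidence threshold is queued for human review, and every routing decision is written to an audit log so it can be examined later. The 0.8 threshold is an arbitrary example.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    request_id: str
    output: str
    confidence: float
    high_risk: bool = False  # e.g. set by business rules for loans or medical advice


@dataclass
class HitlRouter:
    threshold: float = 0.8
    review_queue: list[Decision] = field(default_factory=list)
    audit_log: list[tuple[str, str, float]] = field(default_factory=list)

    def route(self, d: Decision) -> str:
        """Send risky or low-confidence decisions to a human; log every outcome."""
        needs_human = d.high_risk or d.confidence < self.threshold
        status = "pending_review" if needs_human else "auto_approved"
        if needs_human:
            self.review_queue.append(d)
        # The audit trail supports the continuous auditing described above.
        self.audit_log.append((d.request_id, status, d.confidence))
        return status


router = HitlRouter()
print(router.route(Decision("a", "ok", 0.95)))  # auto_approved
print(router.route(Decision("b", "loan denied", 0.99, high_risk=True)))  # pending_review
```

Routing on a high-risk flag as well as on confidence reflects the fact that a model can be confidently wrong; some decisions warrant review regardless of the score.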

By leveraging these frameworks and strategies, organizations can ensure that their AI systems are ethical, transparent, and secure.

Impact of Guardrails on Responsible AI and Gen AI Adoption

Implementing AI guardrails plays a pivotal role in fostering the growth of responsible AI. Guardrails ensure that AI systems align with ethical standards and legal regulations, mitigating risks like bias, unfair decision-making, and data misuse. By adhering to these guidelines, companies can build trust with users and regulators, promoting the responsible adoption of AI technology.

For gen AI adoption, these guardrails provide the structure needed to manage the complex challenges that arise during deployment. They help organizations keep their AI systems transparent and accountable, which is essential for broader adoption in sectors such as healthcare, finance, and marketing. Users and stakeholders feel more confident when clear safety measures are in place, which encourages them to adopt generative AI solutions.

Incorporating AI guardrails also streamlines the integration of AI into business operations by reducing risks such as data privacy violations, security breaches, and incorrect outputs. Companies that prioritize these guardrails can accelerate their use of generative AI while ensuring it meets ethical and operational standards.

As a result, guardrails make gen AI adoption safer and more appealing to organizations seeking innovation without compromising on ethics or compliance.

Future Directions and the Evolution of AI Guardrails

As AI technologies rapidly advance, AI guardrails must evolve to keep pace. The future of guardrails will involve more dynamic and adaptive systems capable of adjusting to new challenges as they arise. With the increasing complexity of AI systems, these guardrails will need to be more sophisticated, ensuring real-time monitoring and continuous validation of AI outputs.

Another key trend is the integration of machine learning algorithms within the guardrails. These intelligent guardrails can learn from past errors, identifying potential risks before they materialize. This proactive approach will be crucial as generative AI becomes more ingrained in critical industries such as healthcare, finance, and legal services.

Regulatory frameworks for responsible AI will also drive the need for stronger guardrails. Governments and organizations are likely to develop stricter standards requiring AI systems to follow ethical guidelines at every stage of development and deployment. As compliance shifts from optional to mandatory, guardrails will need to become more robust.

As AI continues to push boundaries, ongoing innovation in AI guardrails will be essential. These advancements will ensure that AI remains a force for good, balancing progress with responsibility, security, and transparency.

Conclusion

AI guardrails are essential for ensuring generative AI’s safe, ethical, and effective use. As these technologies grow more powerful, well-implemented guardrails will help prevent misuse, bias, and security risks, guiding AI systems toward responsible deployment. By fostering responsible AI practices, these guardrails contribute to safer innovation, aligning AI development with societal values.

The journey toward building robust guardrails is ongoing, with continuous improvements necessary to meet emerging challenges. As organizations and governments emphasize AI safety, the role of these protective measures will only grow. Staying informed and engaged with the latest developments in AI guardrails will ensure that innovation and responsibility advance hand in hand.