Building an in-house data masking tool often starts as a practical decision. The logic feels sound. Your team understands the data, knows the systems, and can tailor masking logic exactly to your needs. On the surface, it looks like a short engineering project that saves licensing costs and avoids external dependencies.

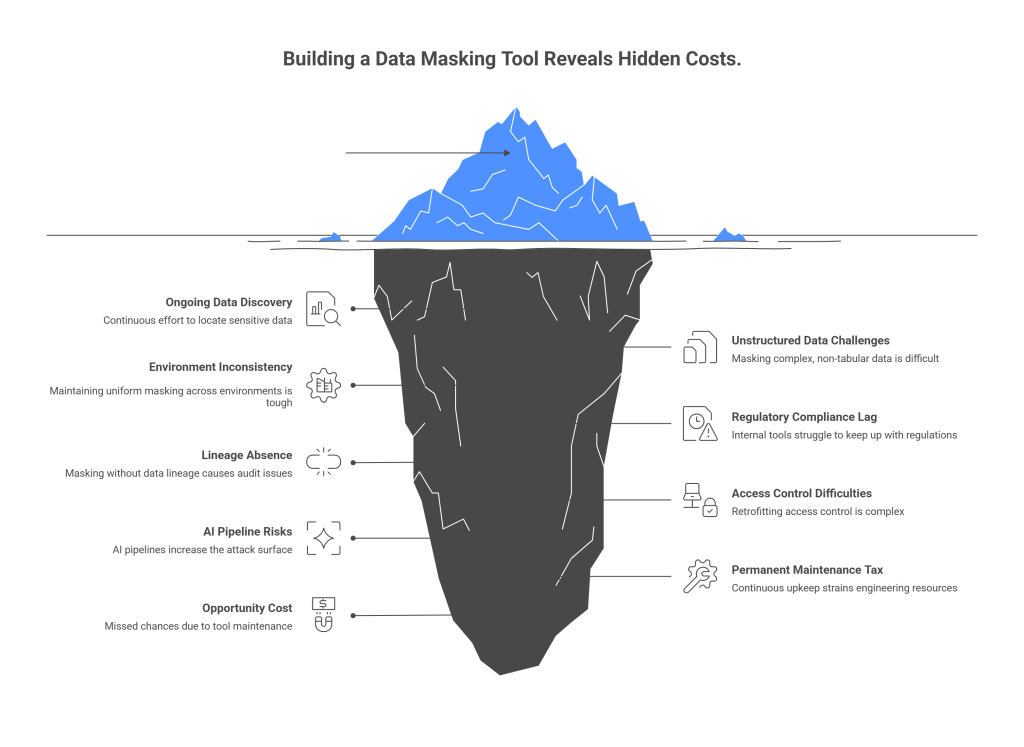

What we’ve learned, after observing many organizations take this path, is that the hidden costs of building your own data masking solution rarely appear during the initial build. They accumulate quietly over time, embedded in maintenance work, compliance exposure, architectural complexity, and lost focus. By the time these costs become visible, the tool is often too deeply embedded to unwind easily.

Why Teams Choose to Build Data Masking Internally

Internal data masking projects usually begin with good intentions. Teams want flexibility, tighter integration, and faster iteration. Sometimes off-the-shelf tools feel too broad, too slow to procure, or misaligned with a specific use case.

Early success reinforces this decision. A script masks a few database columns. A pipeline anonymizes test data. Logs are partially redacted. Everything appears manageable.

The challenge emerges when masking shifts from a one-time task into a permanent capability that must operate reliably across environments, data types, teams, and regulatory frameworks. At that point, complexity grows faster than most teams expect.

Hidden Cost #1: Data Discovery Is an Ongoing Problem, Not a Setup Task

Masking only works when you know where sensitive data lives. Most internal tools assume that discovery is already solved, or that data locations are relatively static.

In practice, personal data spreads continuously as systems evolve. New columns appear in databases, logs capture request payloads, free-text fields accumulate emails and phone numbers, and AI pipelines ingest unstructured content without fixed schemas. Discovery becomes a moving target rather than a checklist item.

Moreover, new tools are not trained on large datasets to capture edge cases or complex unstructured data. Lack of adequate training results in a high number of false positives and negatives, which can increase audit risk.

Finally, with in house built tools, teams end up maintaining scanners, rules, and manual reviews just to keep up. In the long term, this becomes a permanent operational responsibility.

Hidden Cost #2: Unstructured Data Breaks Simple Masking Logic

Structured fields are predictable. Unstructured text is not.

For example, support tickets, CRM notes, documents, and chat messages often contain personal data embedded in natural language. Detecting and masking this correctly requires understanding context, not just patterns. Regex-based approaches quickly fall short, and extending them results in false positives that undermine system trust.

At this stage, many internal tools quietly turn into natural language processing projects. That introduces new dependencies, model evaluation work, and ongoing tuning.

Hidden Cost #3: Consistency Across Environments Is Harder Than Expected

Masking logic rarely stays confined to one system. Production, staging, analytics, support tools, backups, and AI pipelines all need consistent behavior.

Teams discover that different environments require different masking rules. Developers want realism in test data, analysts need stable joins, support teams need partial visibility, and AI systems require deterministic outputs. Each exception adds conditional logic and increases the testing surface.

Over time, the masking tool becomes tightly coupled to business workflows, making changes risky and slow. What began as a utility starts behaving like core infrastructure.

Hidden Cost #4: Regulatory Change Outpaces Internal Tooling

Privacy regulations evolve continuously. New interpretations emerge around AI usage, cross-border data transfers, and unstructured content. Internal tools often lag behind because regulatory updates don’t map cleanly to engineering tasks.

When compliance logic lives outside normal development workflows, updates tend to happen reactively, often during audits or security reviews. That urgency introduces rushed fixes, rework, and context switching, all of which impact security and compliance posture.

Hidden Cost #5: Masking Without Lineage Creates Audit Friction

Masking data is only half the compliance story. The other half is proving what happened.

Auditors and customers increasingly ask detailed questions about data origin, transformation timing, access history, and downstream usage. Internal masking tools often transform data without recording full lineage, leaving teams to reconstruct events manually.

This reconstruction is time-consuming and error-prone, especially under fixed deadlines. The cost here is not just engineering time, but organizational stress and uncertainty.

Compared to this, mature AI privacy tools like Protecto generate clear, comprehensive, auditor-friendly trials of logs and important data it processes.

Hidden Cost #6: Access Control and Purpose Limitation Are Hard to Retrofit

Modern regulations expect controlled unmasking based on role and purpose. However, AI functions by combining data from multiple sources. This means if user A who is unauthorized to view file ‘X’ enters a prompt indirectly asking for data in those files, the tool can inadvertently scan and surface data from it.

Implementing access control correctly requires identity-aware checks, purpose binding, and detailed logging across APIs, jobs, dashboards, and exports. Many internal tools start as one-way masking systems and later attempt to add selective visibility.

Retrofitting access control into a tool that wasn’t designed for it often leads to duplicated logic and inconsistent enforcement. What looked like a masking utility becomes a partial authorization system, without the architectural foundations to support it cleanly.

AI privacy tools like Protecto solves this problem using CBAC (control based access control); a technique that evaluates the full context of a prompt rather than individual words or static roles. It grants or denies access based on conditions like who is requesting what and under which conditions.

Hidden Cost #7: AI Pipelines Multiply Risk Surface Area

AI changes the economics of data masking entirely.

Training datasets, embeddings, vector stores, prompt logs, and generated outputs all introduce new places where sensitive data can appear. Masking must happen before data enters these systems, because removing it afterward is often impractical.

Teams building internal tools discover that supporting AI safely requires deep integration with ingestion pipelines and careful handling of context. Errors propagate quickly, and fixing them retroactively is expensive and challenging.

Hidden Cost #8: Maintenance & Opportunity Cost

Internal data masking tools don’t stabilize. They require constant attention.

Schemas change, new data sources appear, detection rules drift, and audits demand documentation. This work often falls on senior engineers because it touches critical systems.

Over time, teams realize they are maintaining a parallel privacy platform that is not formally staffed or budgeted. Eventually, teams pay in terms of lost bandwidth that affects core business workflows.

Moreover, time spent maintaining masking logic is an hour not spent improving core product features, advancing analytics, shipping AI capabilities, or expanding into new markets. This opportunity cost rarely appears in planning documents, but it shapes long-term velocity.

Teams often don’t gradually outgrow masking needs. They cross a threshold, and complexity increases all at once.

When Building In-House Can Still Make Sense

There are scenarios where internal masking tools are reasonable. Narrow datasets, limited regulatory exposure, minimal unstructured data, and no AI workloads can keep complexity manageable.

The key question is whether today’s scope will still describe your business in two years. Most organizations underestimate how quickly their data footprint grows.

What Mature Organizations Do Differently

Organizations that handle data masking well tend to treat it as a platform capability rather than a script. They integrate discovery, transformation, access control, and auditability from the start. They assume regulations will evolve and design for adaptability instead of short-term simplicity.

Some build this internally with dedicated teams. Many rely on specialized platforms that already encode these lessons. In both cases, the decision is intentional and informed.

How Protecto Reduces These Hidden Costs

Protecto is designed to address the long-term challenges internal tools struggle with.

It provides continuous discovery across structured and unstructured data, context-aware masking and tokenization, consistent enforcement across environments, and built-in lineage and audit logs. Policy-based access controls ensure purpose limitation, while early-stage protection prevents sensitive data from inadvertently entering analytics and AI pipelines.

For teams that have already attempted to build internally, Protecto often replaces years of accumulated complexity with a single, coherent layer. It absorbs those long-tail costs so they don’t accumulate across engineering, compliance, and operations.

Let’s walk through how that happens, cost by cost.

- Eliminates Ongoing Cost of Data Discovery: Continuously discovers and classifies sensitive data across structured databases, logs, documents, support systems, and AI inputs.

- Handles Unstructured Data Smartly: Applies context-aware detection to unstructured text. It understands the meaning to identify names, identifiers, health data, and sensitive relationships even when formats are inconsistent.

- Consistency Across Environments: Protecto applies a single policy layer across environments. Same rules apply consistently whether data is flowing into production systems, test environments, analytics platforms, or AI pipelines.

- Keeps Up With Regulatory Change: Decouples regulatory logic from application code. Updates to masking behavior, retention rules, access controls, or scope at policy level.

- Provides Built-In Lineage and Audit Evidence: Protecto records data lineage automatically. It tracks where sensitive data originated, how it was transformed, where it flowed, and who accessed it.

- Avoiding the Complexity of Building Controls: Protecto includes policy-based access controls. Detokenization is tied to role, purpose, and workflow. Every access is logged.

- Protects AI Pipelines Without Re-Architecting Them: Integrates at ingestion points, ensuring sensitive data is masked or tokenized before it reaches AI systems to prevent accidental exposure and eliminates costly cleanups.

- Reducing Long-Term Maintenance Load: Protecto centralizes discovery, masking, access control, logging, and reporting into a single system. Monitoring is built in. Documentation and reports are generated automatically.

The hidden costs of building your own data masking tool rarely appear in the first sprint or even the first year. They surface gradually, in maintenance overhead, compliance risk, architectural rigidity, and lost momentum.

Before committing to an internal build, it’s worth asking not only whether you can build it and if you want to own that responsibility indefinitely. For many teams, clarity on that question is what prevents a small utility from becoming a long-term liability.

elementor-template id=”15760″