AI assistants and search tools are woven into daily work, but providers do not all handle your prompts, files, or transcripts the same way. Small policy details determine whether your data trains future models, how long it’s kept, and what an auditor will see. If you use these tools in regulated environments, the safest choice often depends on the specific channel you use: consumer app, enterprise account, or API.

Below is a clear, like-for-like view of four popular products: OpenAI (ChatGPT and API), Anthropic’s Claude, Otter.ai, and Perplexity AI.

Quick comparison at a glance

| Provider | Default training on consumer chats | API or enterprise data used for training | Typical retention signals | Notable privacy notes |
| --- | --- | --- | --- | --- |
| OpenAI | Consumer ChatGPT chats may be used for model improvement depending on user settings, and may be retained longer under legal holds; users have controls. | API and enterprise: not used for training by default. | Enterprise offers retention controls; separate disclosure about a 2025 court order requiring temporary retention of some content. | Enterprise privacy page confirms no training on business data by default; consumer privacy and help pages explain opt-in paths and retention context. |
| Anthropic Claude | Yes, as of fall 2025, consumer chats train models unless you opt out in settings. | Claude for Work, Claude Gov, and API deployments excluded. | If you allow training, retention may extend up to five years; otherwise a shorter window applies. | Policy change rolled out with in-product controls; media and company notices document timelines and scope. |
| Perplexity AI | Consumer features may use activity to improve services; details vary by feature. | Sonar API advertises zero data retention and no training on customer data. | API requests claim no retention; consumer features like Personal Search may use data for improvement. | Perplexity’s docs outline a zero-retention API; third-party reviews highlight different defaults for consumer features. |
| Otter.ai | Otter states that recordings are de-identified and used to train models automatically; user control is primarily about sharing and access. | Not an LLM API, but data flows through cloud processing. | Policy effective 2024 describes controller role and training on de-identified data. | Recent legal analysis flags risk around reliance on de-identification for training. Validate contract terms if using in regulated workflows. |

Notes

- Provider policies often distinguish between consumer chat, business subscriptions, and API usage. Always check which channel your team is actually using.

- Retention windows can be reset by legal holds, safety investigations, or product changes; watch provider updates and legal notices.

Deep dive: where the differences really show

OpenAI

Enterprise and API: the company states it does not use your business data to train models by default, with opt-in pathways if you want model improvement. This is the safest path for regulated teams using OpenAI today.

Consumer ChatGPT: OpenAI explains how user content can help improve models, and in June 2025 disclosed a court order requiring retention of some consumer and API content pending litigation. Teams should treat consumer accounts as out of scope for sensitive data.

Anthropic Claude

Policy shift in 2025: Anthropic announced that consumer chats will be used for training unless users opt out; allowing training extends retention significantly, to as long as five years. Enterprise, government, and API channels remain excluded. Confirm your tenant type before sharing sensitive data.

Perplexity AI

Sonar API: the documentation advertises zero data retention and no training on customer data. This is well suited to programmatic use where logs are a liability.

Consumer features: privacy materials and independent reviews note that some consumer features, such as Personal Search, may use activity to improve services. Avoid sending secrets through consumer UIs.

Otter.ai

Training on de-identified recordings: Otter says it uses a proprietary de-identification method to train models and that training data is encrypted. Legal commentary in 2025 underscores that de-identification does not eliminate all AI risks, especially under biometric or state privacy laws. If you record regulated calls, put explicit consent and retention limits in writing.

Practical guidance for teams

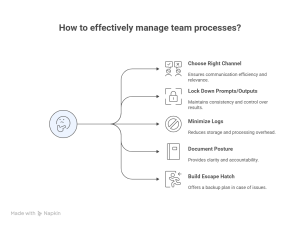

Choose the right channel

Use enterprise or API offerings for anything sensitive. For OpenAI and Anthropic, that route avoids training by default and gives you admin-level controls.
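One way to make the channel choice enforceable is an egress allow list that only lets traffic reach API hosts. The sketch below is illustrative: the host set is an assumption you should verify against each provider's current documentation and your own contracts, and `is_allowed` is a hypothetical helper, not part of any vendor SDK.

```python
from urllib.parse import urlparse

# Assumed API hosts for illustration; confirm against provider docs before use.
ALLOWED_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "api.perplexity.ai",
}

def is_allowed(url: str) -> bool:
    """Return True only when the URL's host is on the API allow list."""
    host = urlparse(url).hostname or ""
    return host in ALLOWED_HOSTS
```

Anything that is not explicitly listed, including consumer web apps, is rejected by default, which is the safer failure mode for sensitive workflows.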

Lock down prompts and outputs

Even with provider promises, add your own pre-prompt redaction and output filtering so raw identifiers never reach a model or UI. This is especially important if you experiment in consumer products.
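As a minimal sketch of pre-prompt redaction and output filtering, the wrapper below scrubs a few identifier types before a prompt is sent and again before a reply is shown. The patterns are deliberately simple assumptions for illustration and will miss many real-world formats; `call_model` is a hypothetical callable standing in for whatever client you use.

```python
import re

# Illustrative patterns only; production redaction needs a broader, tested set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

def redact(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

def guarded_call(prompt: str, call_model) -> str:
    """Redact the prompt before the model sees it and the reply before the UI does."""
    reply = call_model(redact(prompt))
    return redact(reply)
```

Running the same filter on both sides means an identifier that slips into a model reply, for example from retrieved documents, is still masked before it reaches a user.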

Minimize logs

Favor providers and modes that allow short retention or zero-retention operation, particularly for API calls in finance, healthcare, and public sector contexts. Perplexity’s Sonar API is one example; OpenAI enterprise provides retention controls and separate data handling.
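Provider-side retention is only half the picture; your own logs can keep identifiers long after the provider has dropped them. The sketch below assumes a hypothetical in-house call log that stores metadata only (never the prompt text) and purges records past a retention window. The record fields and function names are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class CallRecord:
    provider: str
    channel: str
    prompt_chars: int   # size only; the prompt text itself is never stored
    ts: datetime

def record_call(provider: str, channel: str, prompt: str, now=None) -> CallRecord:
    """Build a metadata-only record for one model call."""
    return CallRecord(provider, channel, len(prompt), now or datetime.now(timezone.utc))

def purge_expired(records, retention_days: int, now=None):
    """Keep only records that are still inside the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if r.ts >= cutoff]
```

Running `purge_expired` on a schedule with a short `retention_days` value keeps the local audit trail useful without turning it into a second copy of sensitive content.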

Document your posture

Keep a one-pager per provider: which channel you use, training defaults, retention settings, who can access logs, and how you respond to rights requests. Update it when providers change terms.
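The one-pager can also live as structured data so it is easy to diff and to flag when it goes stale. This is a sketch under assumptions: the field names, the `needs_review` helper, and the 90-day default are all hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

@dataclass
class ProviderPosture:
    provider: str
    channel: str                  # "consumer", "enterprise", or "api"
    trains_by_default: bool
    retention_setting: str        # free text copied from your contract or admin console
    log_access: List[str]         # roles or people who can read provider-side logs
    rights_request_contact: str   # who handles access and deletion requests
    last_reviewed: date

    def needs_review(self, today: date, max_age_days: int = 90) -> bool:
        """Flag the record once it is older than the review interval."""
        return (today - self.last_reviewed).days > max_age_days
```

Keeping one record per provider and channel, rather than per provider, reflects the point that consumer, enterprise, and API terms often differ.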

Build an escape hatch

Use a privacy or security gateway that can swap underlying models without changing your controls. That way, a sudden policy update from any provider will not stall your roadmap.
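A rough sketch of the idea: keep the controls in one wrapper and treat each provider as a pluggable function, so switching vendors does not touch the redaction or filtering logic. The `Gateway` class and its method names are hypothetical and do not describe any particular product's implementation; each provider function would wrap a real client in practice.

```python
from typing import Callable, Dict, Optional

ModelFn = Callable[[str], str]

class Gateway:
    """Applies the same input and output controls regardless of provider."""

    def __init__(self, pre: Callable[[str], str], post: Callable[[str], str]):
        self._pre = pre      # control applied to every prompt
        self._post = post    # control applied to every reply
        self._providers: Dict[str, ModelFn] = {}
        self._active: Optional[str] = None

    def register(self, name: str, fn: ModelFn) -> None:
        self._providers[name] = fn

    def use(self, name: str) -> None:
        if name not in self._providers:
            raise KeyError(f"unknown provider: {name}")
        self._active = name

    def complete(self, prompt: str) -> str:
        if self._active is None:
            raise RuntimeError("no active provider")
        model = self._providers[self._active]
        return self._post(model(self._pre(prompt)))
```

Application code calls `complete` only, so rotating vendors after a policy change becomes a single `use` call rather than a rewrite.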

Common buyer questions, answered

Do these companies sell my data?

Across the four vendors reviewed, public materials emphasize that they do not sell personal data. The bigger risk is training and retention, not sales. Check the exact wording in your contract.

Is enterprise always safer than consumer?

For OpenAI and Anthropic, yes: enterprise or API channels have stronger guarantees against training by default and clearer retention controls. Perplexity’s Sonar API is similarly strict. Otter focuses on consumer and business teams, not developer APIs; treat it like a collaboration tool with recordings rather than a model endpoint.

What about data residency?

Most providers offer region routing for enterprise or through cloud partners, but specifics vary and may depend on the platform where you deploy the model. Validate residency, sub-processor lists, and retention in your order form or DPA.

How do legal holds affect us?

Any provider can be compelled to retain data. OpenAI disclosed such a requirement in 2025. Your best defense is to avoid sending secrets to consumer services and to keep enterprise/API data minimized and encrypted.

A simple selection checklist

- Channel selection: Use enterprise or API. Avoid consumer apps for sensitive data.

- Training default: Confirm default stance and your opt-out or opt-in setting.

- Retention: Target short or zero-retention modes for API traffic.

- Logging and audit: Ensure exportable logs and granular access controls.

- Residency and vendors: Verify data region and sub-processors in writing.

- Exit plan: Abstract models behind your own privacy gateway so you can rotate vendors.

One control plane, four vendors

Protecto sits between your users/apps and each AI provider as a policy-aware gateway plus pipeline SDKs. It inspects inputs and outputs in real time, enforces purpose, role, and region rules, and leaves an audit trail that proves what happened.

Quick map of controls by provider

| Provider and channel | What can go wrong | Protecto controls that neutralize the risk |
| --- | --- | --- |
| OpenAI Enterprise or API | Sensitive data sent in prompts or retrieved via tools; over-broad API responses; logs retaining identifiers | Pre-prompt redaction, output filtering, tool allow lists; response schema and scope enforcement; short-retention log redaction; region routing and egress allow lists; deterministic tokenization at ingestion so identifiers never reach the model |
| OpenAI consumer apps | Consumer features and legal holds can retain data | Hard block for sensitive workflows; allow only enterprise/API tenants; client-side scrubber for accidental use; continuous discovery to ensure no consumer endpoints in production traffic |
| Anthropic Claude Enterprise/API | Similar to OpenAI: prompts may carry PII/PHI; retrieval may surface raw text | Same gateway rules as above; retrieval redaction before indexing; policy tags for purpose and residency; lineage linking user, dataset, and policy version |
| Anthropic consumer web/app | Consumer chats used for training unless opted out | Block by policy for protected data; route users to managed enterprise tenant; redact locally if a team must explore features |
| Perplexity Sonar API | Good defaults but you can still overshare in prompts or get rich answers with hidden identifiers | Pre-prompt redaction; output scrubbing; per-feature response whitelists; egress allow lists so only Sonar API is reachable; lineage and zero-retention logs in your environment |
| Perplexity consumer features (Personal Search, etc.) | Activity may be used to improve services | Enforce tenant and feature allow lists; automatic masking of PII; optional proxy mode that forbids consumer features entirely |
| Otter.ai meetings/transcripts | Raw audio/video contains names, faces, account numbers; transcripts stored with PHI/PII; shared links leak context | Ingest pipeline that applies face blurring and toneprint removal where required; real-time audio redaction for names, numbers, IDs; transcript entity redaction and deterministic tokenization; link-sharing DLP guard; retention timers and vault-based re-identification under strict runbooks |
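Deterministic tokenization, mentioned in the table above, can be illustrated with a keyed hash: the same identifier always maps to the same token, so joins and analytics still work, while the raw value stays in a vault. This is a generic sketch using HMAC-SHA256, not a description of Protecto's implementation; `tokenize`, `Vault`, and the token format are hypothetical.

```python
import hashlib
import hmac

def tokenize(value: str, key: bytes, prefix: str = "tok") -> str:
    """Map a value to a stable token; the same key and value always give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:16]}"

class Vault:
    """In-memory stand-in for a secured token vault (illustration only)."""

    def __init__(self, key: bytes):
        self._key = key
        self._store = {}

    def protect(self, value: str) -> str:
        token = tokenize(value, self._key)
        self._store[token] = value   # kept only inside the vault
        return token

    def reveal(self, token: str) -> str:
        """Re-identify a token; in practice this would sit behind strict access controls."""
        return self._store[token]
```

Because the mapping is keyed, rotating or destroying the key changes every token, which is worth planning for before tokens are written into long-lived datasets.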
