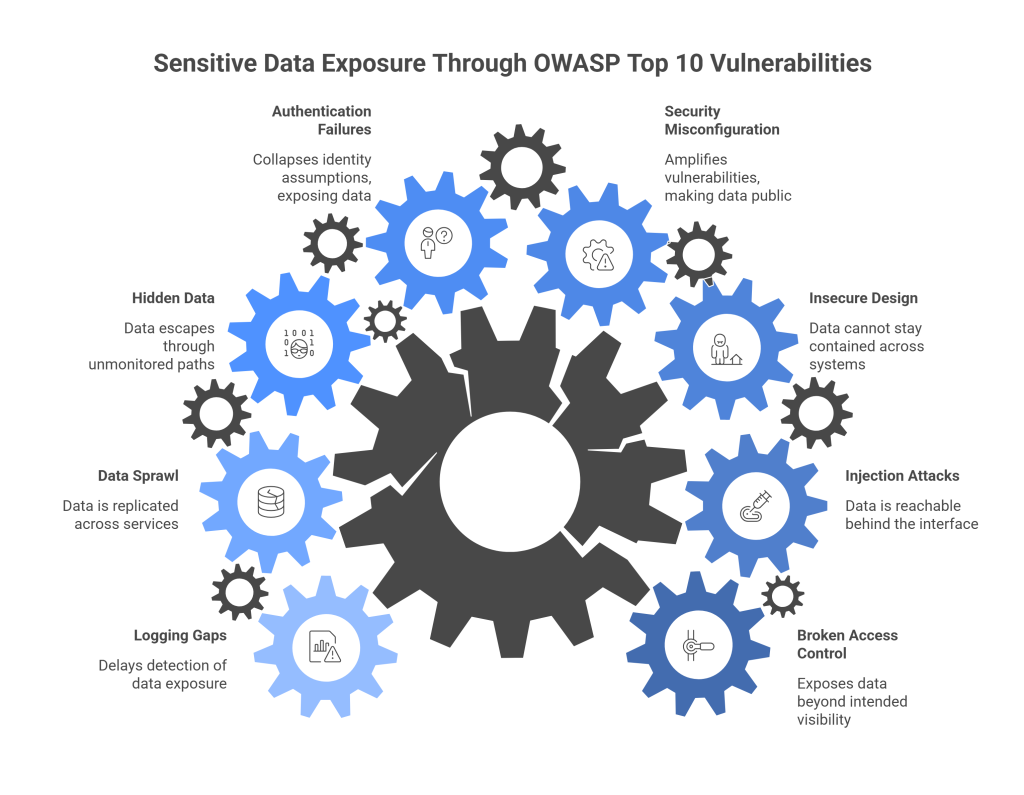

The OWASP Top 10 is usually presented as a list of technical failures. The usual suspects include broken access controls, prompt injection, insecure design, and misconfigurations.

Each category points to something that went wrong in the application without explicitly highlighting what’s at risk or what went wrong. In most cases, the answer points to the data.

Sensitive data is the reason attackers care about OWASP failures in the first place. The OWASP Top 10 describes how systems break. Sensitive data explains why those breaks matter.

Data is the incentive behind almost every OWASP failure

Attackers are not interested in whether your application follows best practices but looking for data. Sometimes it’s obvious like database dumps, leaked API responses, or misconfigured storage buckets.

Other times it’s subtle like session metadata, email addresses, internal identifiers, or partial records that can be correlated later.

The OWASP Top 10 catalogs common weaknesses tied together in a way each one provides a path, direct or indirect, to sensitive data.

Wihout valuable data behind these systems, most OWASP issues would be academic.

Broken access control is all about data visibility

Broken Access Control is often framed as a permissions problem. A user sees something they shouldn’t. But the real question always boils down to what data did that access decision expose?

In modern systems, access control governs far more than pages or endpoints like:

- Which rows a user can query

- Which fields are returned by an API

- Which logs can be viewed

- Which prompts an AI system can retrieve context for

When access control fails, it exposes data – often more data than intended. For example, role misconfigurations can turn masked views into raw records, forgotten internal endpoints can return full objects instead of filtered responses, and support tools can bypass application-layer checks entirely.

The OWASP category names the failure while the incident report describes the data that was accessed.

Injection attacks succeed because data is reachable

Injection vulnerabilities are typically explained as a way to execute unintended commands. That’s partially true as injection works because data is already reachable behind the interface.

SQL injection leaks valuable records, template injection compromises template configurations, and prompt injection tricks AI systems to leak confidential data by removing the guardrails.

In AI systems, prompt injection attacks just need to convince the system to retrieve data on the attacker’s behalf to get direct access to a database. The model simply follows instructions, resulting in data disclosure.

Insecure design creates systems where data cannot stay contained

Insecure design is the least concrete OWASP category, and often the most dangerous. More often than not, the main culprit are the decisions that quietly assume sensitive data will always be handled correctly later.

These decisions usually sound reasonable at the time:

- “We’ll store raw data for now and clean it up later.”

- “Downstream services will enforce masking.”

- “Only internal users will ever see this.”

- “This data won’t leave the system.”

Insecure design doesn’t immediately expose data. It guarantees that when something else fails, data exposure will be unavoidable.

Design choices determine how widely data is copied, retention deadline, number of systems with access, and audit difficulty level. Once sensitive data is deeply embedded across systems, every OWASP failure becomes more costly.

Security misconfiguration decides how public the data becomes

Misconfiguration does not directly lead to data leakages, but acts as an amplifier. Storage buckets without proper restrictions, API gateways with default settings, or IAM roles that’s broader than required all add the vulnerabilities. These misconfigurations determine who can reach sensitive data.

Misconfiguration combined with broken access control or insecure design puts internal data at risk of becoming externally accessible. This is why so many incidents involve “unexpected exposure.” The system functioned and controls were assumed to be correct but a single configuration change turns a private dataset into a public one.

The OWASP category describes the setup error. The fallout is measured in records exposed.

Authentication failures poison every data decision downstream

Authentication failures are often measured in terms of account compromise. The connection between identity and data handling is often ignored.

Every data control assumes identity is correct. This includes the rows you can query, fields you can see, logs you can access, and which prompts retrieve which context. When authentication fails, those assumptions collapse. This is especially dangerous in systems that rely on delegated identity like API keys reused across services, agents acting on behalf of users, or background jobs impersonating roles.

Once identity is wrong, data exposure is not a side effect. It is the system working as designed, for the wrong entity.

The OWASP category focuses on authentication. The damage shows up in data access patterns.

Sensitive data hides in places teams forget to look

One reason sensitive data is so often involved in OWASP incidents is that it rarely lives in single, obvious locations. It shows up in logs, analytics events, error traces, browser storage, client-side scripts, and third-party integrations.

Vulnerable or outdated components often leak data because they observe more than expected. For example, telemetry SDKs capture full request bodies, analytics tools record user identifiers, and client-side script executes with access to page context.

When these components become vulnerable, data escapes through paths teams aren’t monitoring. The OWASP category names the dependency issue while the exposure happens somewhere else entirely.

OWASP failures reveal how widely data has spread

One uncomfortable truth that OWASP incidents surface is how far sensitive data travels inside modern systems.

By the time a vulnerability is exploited, data is often replicated across services, cached in intermediate layers, logged in multiple formats, and embedded in analytics or monitoring tools.

Teams discover not just where data was exposed, but how many places it existed, making scoping incidents a major challenge.

OWASP vulnerabilities reveal the data sprawl.

Logging and monitoring gaps delay data exposure detection

Insufficient logging is often treated as a detection problem. In reality, it’s a data governance problem.

If you don’t know:

- Which data fields are returned by APIs

- Which scripts execute in user browsers

- Which identities access which records

- Which prompts retrieve which context

Then you don’t know where sensitive data is flowing.

Many data exposure incidents aren’t discovered through alerts. They are found through customer reports, third-party disclosures, or audits. By the time teams look, logs are incomplete, context goes missing, and scope lacks clarity.

The OWASP category points to missing visibility. The consequence is delayed recognition of data loss.

Sensitive data gives OWASP issues real-world consequences

Without sensitive data, many OWASP vulnerabilities would still matter. They would still be bugs and have flaws. However, they wouldn’t trigger regulatory action, customer notifications, or reputational damage.

Sensitive data changes the stakes. An injection flaw that exposes test data is an issue, but the same flaw exposing customer records is an incident. A misconfiguration that leaks metadata is a warning, but the same misconfiguration leaking PII is a breach.

This is why OWASP issues feel theoretical until data is involved. Sensitive data turns technical debt into business risk.

OWASP doesn’t explicitly say “data,” but it always implies it

The OWASP Top 10 is written to be broadly applicable. It avoids prescribing what kind of data systems handle. However, in practice, almost every system processes something sensitive like credentials, identifiers, behavioral data, proprietary information, and more.

OWASP categories describe failure modes that matter precisely because sensitive data is present. When teams separate “AppSec issues” from “data risk,” they miss this connection. They fix vulnerabilities without reducing exposure.

Where Protecto fits in this picture

If sensitive data is the common thread across most OWASP Top 10 issues, then reducing risk is more than just fixing vulnerabilities. It’s about changing what those vulnerabilities can expose.

This is where Protecto operates.

Most OWASP failures do not create new data. They reveal what was already there. Raw values moving freely through systems. Copied into logs. Passed into tools. Retrieved automatically by services and agents.

Protecto changes that default.

Instead of letting sensitive data flow everywhere and relying on every control to work perfectly, Protecto replaces meaning at the source. Tokens move through systems in place of real values. They preserve structure and referential integrity, but carry no semantics of their own.

When access control breaks, there is nothing meaningful to see.

When injection succeeds, there is nothing interpretable to extract.

When design assumptions fail, the blast radius is limited by default.

But with Protecto in place, those failures stop being synonymous with data exposure. The system can fail without the data failing with it. That shift doesn’t eliminate the OWASP Top 10. It changes what’s at stake when something on that list inevitably goes wrong.