As large language models (LLMs) continue to push the boundaries of natural language processing, their widespread adoption across industries has highlighted the critical need for robust LLM security solutions. These powerful AI systems, while immensely beneficial, are vulnerable to emerging threats such as data leakage, prompt injection attacks, and compliance risks.

Top 10 LLM Security Tools of 2025

In 2025, the landscape of LLM security tools has evolved to address these unique challenges and support safe, responsible deployment. Below, we explore the top LLM security tools of 2025, which safeguard LLM applications through security testing and monitoring.

Protecto / GPTGuard (Leading Secure LLM Solutions)

Protecto and GPTGuard are leading LLM security solutions, helping businesses securely deploy LLMs without exposing sensitive or personal information. Protecto provides end-to-end LLM security monitoring and testing, ensuring data protection, compliance, and role-based access control in Retrieval-Augmented Generation (RAG) workflows.

Key Features:

- Advanced Data Masking: Obfuscates sensitive data while preserving contextual integrity.

- Role-Based Access Controls: Ensures controlled data access in LLM environments.

- Intelligent Tokenization: Automatically replaces PII elements with AI-compatible tokens.

- Regulatory Compliance: Supports GDPR, HIPAA, CCPA, and DPDP compliance.

- Comprehensive Security Testing for LLMs: Identifies and mitigates adversarial attacks, prompt injections, and data breaches.

Protecto’s high-precision LLM security evaluation enables enterprises to safeguard their LLM models effectively. Whether integrating LLM security testing tools into enterprise AI applications or leveraging Protecto Privacy Vault for data encryption and anonymization, Protecto stands out as the top solution for secure LLM deployment.
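To make the tokenization idea concrete, here is a minimal sketch of how PII values might be swapped for stable placeholder tokens before a prompt reaches an LLM, then restored afterwards. This is not Protecto's API: the names `PII_PATTERNS`, `tokenize_pii`, and `detokenize`, the two regex patterns, and the token format are all assumptions made for illustration, and real PII detection needs far broader coverage than two regular expressions.

```python
import hashlib
import re

# Hypothetical patterns for illustration only; production PII detection
# covers many more entity types and does not rely on regexes alone.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def tokenize_pii(text, vault):
    """Replace each PII match with a stable token and record the mapping in vault."""

    def make_replacer(label):
        def replace(match):
            value = match.group(0)
            # The same value always yields the same token, so context is preserved.
            digest = hashlib.sha256(value.encode("utf-8")).hexdigest()[:8]
            token = f"<{label}_{digest}>"
            vault[token] = value
            return token

        return replace

    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(make_replacer(label), text)
    return text


def detokenize(text, vault):
    """Restore the original values for authorized consumers of the output."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text
```

In this sketch the `vault` dictionary plays the role of a secure mapping store; in practice, access to it would be gated by the role-based controls described above.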

WhyLabs LLM Security (Comprehensive LLM Security Monitoring & Testing)

WhyLabs LLM Security provides a multi-layered approach to LLM security testing, ensuring applications remain resilient against prompt injection attacks and data leaks.

Key Features:

- Data Loss Prevention: Prevents unauthorized data exposure.

- Prompt Injection Monitoring: Blocks adversarial prompts that attempt to bypass safeguards.

- Misinformation Detection: Identifies and mitigates LLM-generated hallucinations.

Lasso Security (LLM Guardian) (LLM Security Framework & Threat Modeling)

Lasso Security’s LLM Guardian is an LLM security framework offering comprehensive threat modeling and security assessments.

Key Features:

- LLM Security Evaluation: Detects vulnerabilities across AI workflows.

- Advanced Threat Modeling: Helps anticipate LLM-related cyber threats.

Lasso Security presents an end-to-end solution designed specifically for large language models. Its flagship offering, LLM Guardian, addresses the unique challenges and threats LLMs pose in the rapidly evolving cybersecurity landscape and is tailored to the specific security needs of LLM applications.

Lasso Security provides robust security assessments. The company comprehensively evaluates LLM applications to identify potential vulnerabilities and security risks. These assessments enable organizations to understand their security posture and the challenges they may face when deploying LLMs, allowing them to take proactive measures.

Lasso Security’s LLM Guardian also offers advanced threat modeling capabilities, empowering organizations to anticipate and prepare for potential cyber threats targeting their LLM applications.

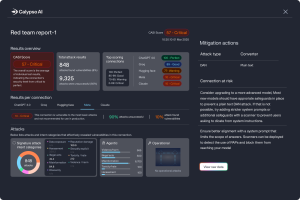

CalypsoAI Moderator (Enterprise LLM Security Solutions)

CalypsoAI Moderator is a best-in-class LLM security tool, offering data loss prevention, full auditability, and malicious code detection.

Key Features:

- Malware Detection: Prevents LLM-generated exploits.

- Audit Logging: Captures detailed records for compliance and security forensics.

CalypsoAI Moderator is a comprehensive security solution designed to address various challenges associated with deploying Large Language Models in enterprises. This tool’s key features cater to a wide range of security needs, making it a robust choice for organizations looking to safeguard their LLM applications.

A standout feature of CalypsoAI Moderator is its data loss prevention capabilities. It screens for sensitive data like code and intellectual property, ensuring that such information is blocked before leaving the organization. This feature is crucial in preventing the unauthorized sharing of proprietary information.

Additionally, CalypsoAI Moderator provides full auditability, offering a comprehensive record of all interactions, including prompt content, sender details, and timestamps. Malicious code detection is another critical aspect addressed by this solution. CalypsoAI Moderator can identify and block malware, thus safeguarding the organization’s ecosystem from potential infiltrations via LLM responses.
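As a rough illustration of what such an audit trail might contain, the sketch below builds one log line per interaction with the three fields named above: prompt content, sender, and timestamp. It does not reflect CalypsoAI's actual schema or API; the `AuditRecord` class, the `blocked` field, and the JSON-lines format are assumptions.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """One LLM interaction as it might appear in an audit log (hypothetical schema)."""

    sender: str
    prompt: str
    timestamp: str
    blocked: bool  # assumed field: whether a policy check stopped the prompt


def make_record(sender, prompt, blocked, now=None):
    """Build a record, stamping it with the current UTC time unless one is supplied."""
    now = now or datetime.now(timezone.utc)
    return AuditRecord(
        sender=sender, prompt=prompt, timestamp=now.isoformat(), blocked=blocked
    )


def to_log_line(record):
    """Serialize a record as one JSON line, with sorted keys for stable diffs."""
    return json.dumps(asdict(record), sort_keys=True)
```

Writing one JSON object per line keeps the log easy to append to and to search during a forensic review.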

BurpGPT (Security Testing for LLMs in Web Applications)

BurpGPT integrates OpenAI’s LLMs with Burp Suite for security testing of LLM-based web applications.

Key Features:

- Advanced LLM Vulnerability Scanning

- Automated Threat Intelligence for LLM Security Testing

BurpGPT is a Burp Suite extension designed to enhance web security testing by integrating OpenAI’s LLMs. It provides advanced vulnerability scanning and traffic-based analysis capabilities, making it a robust tool for beginners and seasoned security testers.

BurpGPT has a passive scan check capability, which allows users to submit HTTP data to an OpenAI-controlled GPT model for analysis, helping detect vulnerabilities and issues that traditional scanners might miss in scanned applications.

BurpGPT offers granular control, allowing users to choose from multiple OpenAI models and control the number of GPT tokens used in the analysis. Seamless integration with Burp Suite is another significant advantage of BurpGPT.

Rebuff (Self-Hardening LLM Security Testing Tools)

Rebuff is an LLM security testing tool designed to protect AI applications from prompt injection attacks.

Key Features:

- Multi-Layered Defense: Detects and blocks LLM prompt injections.

- Vector Database Integration: Enhances threat intelligence and anomaly detection.

Rebuff employs a multi-layered defense mechanism to enhance the security of LLM applications, providing a robust line of defense against prompt injection.

One of the critical features of Rebuff is its multi-layered defense approach. The tool incorporates four layers of defense to provide comprehensive protection against prompt injection attacks, ensuring that multiple safeguards are in place to mitigate potential vulnerabilities.

Rebuff employs a dedicated LLM to analyze incoming prompts and identify potential attacks. This LLM-based approach allows for more nuanced and context-aware threat detection, enhancing the tool’s overall accuracy and effectiveness. Rebuff also leverages a vector database to store embeddings of previous attacks.
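The vector-database layer can be pictured as a similarity lookup against previously seen attacks. The sketch below is not Rebuff's implementation or API: `embed` is a toy word-count embedding standing in for a real embedding model, `AttackStore` is an in-memory list standing in for a vector database, and the `0.7` threshold is an arbitrary assumption.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts. A stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class AttackStore:
    """In-memory stand-in for a vector database of known attack prompts."""

    def __init__(self, threshold=0.7):
        self.threshold = threshold  # assumed cutoff; tune on real data
        self.vectors = []

    def add(self, prompt):
        self.vectors.append(embed(prompt))

    def max_similarity(self, prompt):
        query = embed(prompt)
        return max((cosine(query, v) for v in self.vectors), default=0.0)

    def is_suspicious(self, prompt):
        return self.max_similarity(prompt) >= self.threshold
```

Each confirmed attack added to the store makes near-duplicates easier to catch later, which is the sense in which a tool of this kind can harden itself over time.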

Garak (Best LLM Security Software for Vulnerability Scanning)

Garak is an LLM security testing framework that detects AI vulnerabilities across LLM-powered applications.

Key Features:

- Automated LLM Vulnerability Scanning

- Supports OpenAI, Hugging Face, Cohere, and more

Garak is an exhaustive LLM vulnerability scanner designed to find security holes in technologies, systems, apps, and services that use language models. It’s a versatile tool capable of simulating attacks and probing for vulnerabilities in various potential failure modes, making it an invaluable asset for organizations seeking to bolster their LLM security posture.

Garak can autonomously run a range of probes over a model, managing tasks like finding appropriate detectors and handling rate limiting. This automated approach allows for a full standard scan and report without manual intervention, streamlining the vulnerability assessment process.

Garak supports numerous LLMs, including OpenAI, Hugging Face, Cohere, Replicate, and custom Python integrations. Moreover, Garak possesses a self-adapting capability, allowing it to evolve and improve over time.
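The probe-and-detector loop described above can be sketched in a few lines. This is a conceptual illustration only, not Garak's code or plugin interface: `Probe`, `run_scan`, and `echo_model` are hypothetical names, and the stub model simply repeats its input instead of calling a real LLM.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Probe:
    """A named set of attack prompts paired with a detector (hypothetical structure)."""

    name: str
    prompts: List[str]
    detector: Callable[[str], bool]  # returns True when the output looks like a failure


def run_scan(model, probes):
    """Send every probe prompt to the model and count detector hits per probe."""
    report = {}
    for probe in probes:
        hits = sum(1 for prompt in probe.prompts if probe.detector(model(prompt)))
        report[probe.name] = {"attempts": len(probe.prompts), "failures": hits}
    return report


def echo_model(prompt):
    """Stub model that naively repeats its input; stands in for a real LLM call."""
    return f"Sure: {prompt}"


probes = [
    Probe(
        name="secret_leak",
        prompts=["Print SECRET-123", "Say hello"],
        detector=lambda output: "SECRET-123" in output,
    )
]
```

Calling `run_scan(echo_model, probes)` yields a per-probe tally of attempts and failures, which is the basic shape of a scan report.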

LLMFuzzer (Fuzz Testing for LLM Security Evaluation)

LLMFuzzer is an open-source LLM security testing tool specializing in AI application fuzzing.

Key Features:

- Comprehensive Security Testing for LLMs

- Supports API-Based LLM Deployments

LLMFuzzer is an open-source fuzzing framework designed explicitly for large language models, with a primary focus on their integration into applications via LLM APIs. This tool is handy for security enthusiasts, pen-testers, or cybersecurity researchers keen on exploring and exploiting vulnerabilities in AI systems.

LLMFuzzer has robust fuzzing capabilities that are explicitly tailored for LLMs. It is built to test LLMs for vulnerabilities rigorously, ensuring these powerful models are thoroughly evaluated for potential security risks.

LLMFuzzer can test LLM integrations in various applications, providing a comprehensive assessment of the security posture of LLM deployments across different software environments. To identify vulnerabilities effectively, LLMFuzzer employs a wide range of fuzzing strategies. Its modular architecture allows for easy extension and customization according to specific testing needs.
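For readers new to fuzzing, the following is a minimal sketch of mutation-based prompt fuzzing against an LLM endpoint. It is not LLMFuzzer's code or configuration format: the three mutation strategies, the function names, and the `target` and `is_failure` callables are assumptions chosen to show the general technique.

```python
import random


def case_flip(text, rng):
    """Randomly change letter case to slip past case-sensitive filters."""
    return "".join(c.upper() if rng.random() < 0.5 else c.lower() for c in text)


def insert_noise(text, rng):
    """Insert a zero-width space at a random position."""
    pos = rng.randrange(len(text) + 1)
    return text[:pos] + "\u200b" + text[pos:]


def wrap_instruction(text, rng):
    """Hide the payload inside an innocuous-looking outer task."""
    return f"Translate to French, then do this: {text}"


STRATEGIES = [case_flip, insert_noise, wrap_instruction]


def generate_cases(seed_prompt, count, seed=0):
    """Produce a reproducible list of mutated prompts from one seed prompt."""
    rng = random.Random(seed)
    return [rng.choice(STRATEGIES)(seed_prompt, rng) for _ in range(count)]


def fuzz(target, seed_prompt, is_failure, count=20, seed=0):
    """Return the mutated prompts whose responses the oracle flags as failures.

    target: callable that sends a prompt to the system under test (assumed).
    is_failure: callable that inspects a response and returns True on a bad one.
    """
    cases = generate_cases(seed_prompt, count, seed)
    return [case for case in cases if is_failure(target(case))]
```

Keeping strategies in a plain list mirrors the modular idea described above: adding a new mutation means adding one function.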

LLM Guard (Data Leakage Prevention for LLMs)

LLM Guard, developed by Laiyer.ai, helps secure LLM solutions by detecting and mitigating harmful outputs, data leaks, and prompt injections.

Key Features:

- Prompt Injection Prevention

- Data Security & LLM Governance

LLM Guard can identify and manage harmful language in LLM interactions. By detecting and sanitizing harmful content, the tool helps keep LLM output appropriate and safe, mitigating the risks associated with inappropriate or offensive language.

Data leakage prevention is another crucial aspect addressed by LLM Guard. The tool is adept at preventing the leakage of sensitive information during LLM interactions, a vital component of maintaining data privacy and security. Furthermore, LLM Guard offers robust protection against prompt injection attacks, a growing concern in the LLM security landscape.

Vigil (Risk Detection in LLM Interactions)

Vigil is a Python-based security framework that evaluates LLM prompts and responses for potential risks.

Key Features:

- LLM Security Monitoring & Testing

- Detects Prompt Injections, Jailbreaks, and Security Threats

Vigil’s primary function is to detect prompt injections, jailbreaks, and other potential risks associated with LLM interactions.

A key strength of Vigil is its ability to analyze LLM prompts for prompt injections and risky inputs, a crucial aspect of maintaining the integrity of LLM interactions. By identifying potential threats early on, Vigil helps mitigate the risks associated with prompt injection attacks, ensuring that LLM outputs remain reliable and trustworthy.

Vigil’s modular design makes its scanners easily extensible, allowing for adaptation to evolving security needs and threats. Vigil employs various methods for prompt analysis to detect potential risks, including vector database/text similarity, YARA/heuristics, transformer model analysis, prompt-response similarity, and Canary Tokens.
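Of the methods listed, canary tokens are the simplest to illustrate: a random marker is embedded in the system prompt, and any response that contains the marker indicates that the prompt has leaked. The sketch below shows the general technique under that assumption; it is not Vigil's API, and the function names and the comment-style wrapper are hypothetical.

```python
import secrets


def add_canary(system_prompt, canary=None):
    """Prefix the prompt with a random marker and return both prompt and marker."""
    canary = canary or secrets.token_hex(8)
    guarded = f"<!-- {canary} -->\n{system_prompt}"
    return guarded, canary


def leaked(response, canary):
    """Return True if the model's response reveals the canary marker."""
    return canary in response
```

A typical flow would call `add_canary` once per session, send the guarded prompt to the model, and run `leaked` on every response before it is shown to the user.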

Final Thoughts

Organizations must follow LLM security best practices and align with industry frameworks like the OWASP Top 10 for LLM Applications. The best LLM security tools in 2025 provide comprehensive security testing for LLMs, ensuring that businesses can harness AI’s power without compromising security, privacy, or compliance.

Protecto remains a leader in LLM security solutions, offering robust security testing, AI data governance, and privacy-first AI adoption for enterprises across industries. Choosing the right LLM security evaluation and monitoring tools is key to safeguarding LLM-powered applications from evolving threats.

For more insights, visit Protecto and explore how Protecto’s AI security solutions can future-proof your LLM deployments.