Summary: Copying production data to development, quality assurance, and staging environments can pose significant security and privacy risks because it exposes sensitive information. Traditional approaches such as tokenization or redaction can render data useless, while manually generating test data is often impractical and time-consuming. Protecto offers an intelligent tokenization approach that ensures tokenized data closely matches the production environment while protecting sensitive information. Our solution saves time and effort in creating data for development and testing while maintaining compliance with data protection regulations.

—

High-quality production-like test data is crucial for ensuring applications and systems work as intended, identifying and fixing bugs, and complying with data protection regulations. Without it, businesses risk poor user experiences, lost revenue, and reputational damage.

Generating high-quality production-like test data is difficult because of the complex and large-scale data environments in modern systems. If the data is inaccurate or incomplete, the results may be incorrect, and copying production data to non-production environments could lead to serious security and privacy risks. Moreover, creating test data that accurately mimics the production environment can be time-consuming and require a lot of effort. All these challenges make it hard for companies to get the necessary high-quality test data to ensure their applications and systems are working correctly.

Tokenization: Not Effective for Complex Data

Tokenization replaces sensitive data with randomly generated tokens that retain the original data’s format but have no meaningful value on their own. This technique reduces privacy and security risks by keeping sensitive data confidential. Traditional tokenization and redaction approaches can be adequate for generating simple test data. However, they often render the data useless by removing essential information or altering the data’s structure, which makes it hard to use for data analytics, AI/ML, and other complex development and testing.

In addition, redaction can introduce data inconsistencies that compromise the data’s usefulness. Inaccurate or incomplete data can hinder joining and merging data from various sources. Therefore, it is essential to use a more sophisticated approach that can preserve data integrity while mitigating security and privacy risks.
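To make the idea of a token that "retains the original data's format" concrete, here is a minimal Python sketch. It is an illustration only, not Protecto's implementation: the function name `format_preserving_token` is hypothetical, and the rule it applies (swap each digit for a random digit and each ASCII letter for a random letter of the same case, leaving separators untouched) is an assumption chosen for simplicity.

```python
import secrets
import string


def format_preserving_token(value: str) -> str:
    """Hypothetical helper: randomize letters and digits, keep the layout."""
    out = []
    for ch in value:
        if ch in string.digits:
            out.append(secrets.choice(string.digits))
        elif ch in string.ascii_uppercase:
            out.append(secrets.choice(string.ascii_uppercase))
        elif ch in string.ascii_lowercase:
            out.append(secrets.choice(string.ascii_lowercase))
        else:
            # Separators such as '-', '@', and '.' are kept so the
            # token still looks like the original field.
            out.append(ch)
    return "".join(out)


ssn_token = format_preserving_token("123-45-6789")
email_token = format_preserving_token("Jane.Doe@example.com")
print(ssn_token, email_token)
```

The output changes on every run, but a nine-digit identifier still looks like a nine-digit identifier, so downstream validation rules and column types keep working. Note that this naive version produces a different token each time the same value is seen, which is exactly the kind of inconsistency that makes joins across sources difficult.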

Protecto’s Intelligent Tokenization: Creates Production-Like Data

Protecto’s advanced tokenization technology offers a secure and efficient solution for replacing Personally Identifiable Information (PII) and other sensitive data with tokens without compromising privacy or security. Our solution closely matches the structure, length, and format of the production data, ensuring that the tokenized data is highly representative of the original data.

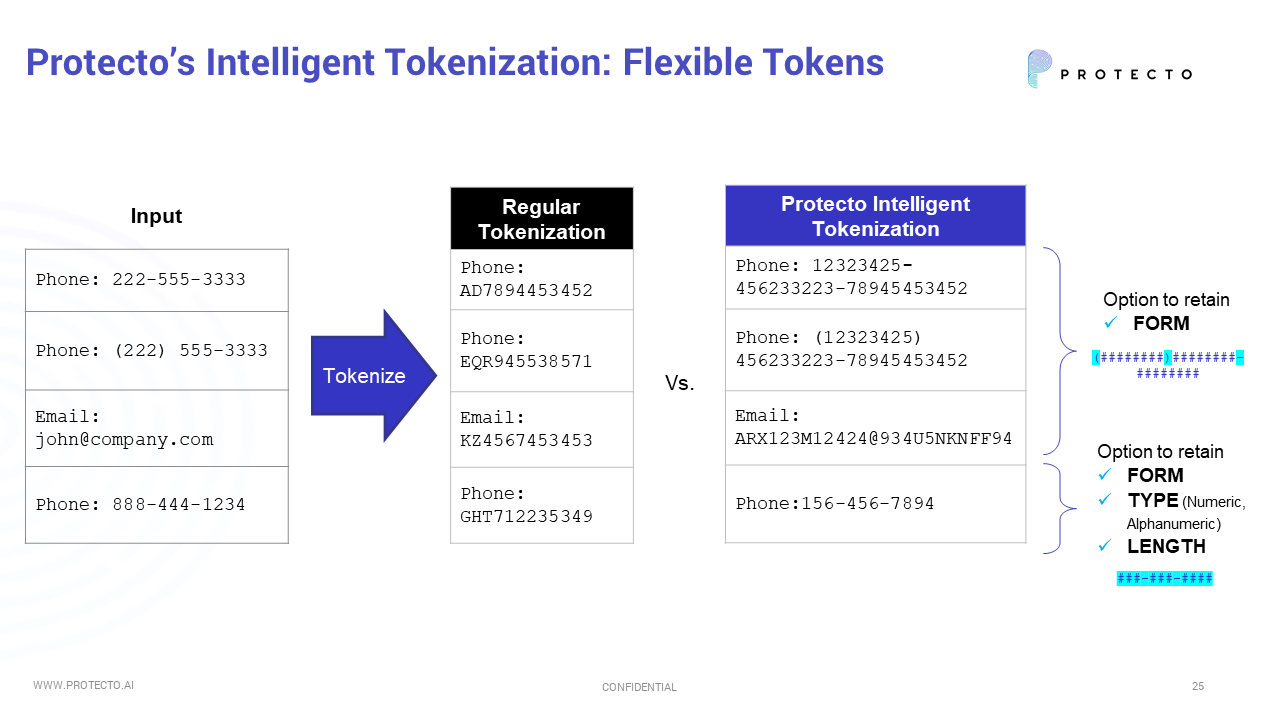

One of the key advantages of Protecto’s intelligent tokenization approach is its flexibility, which allows customers to maintain the form, size, and type of their data. For example, customers can choose how phone numbers are tokenized, including keeping the same length and character type so that the tokens mirror the original values.

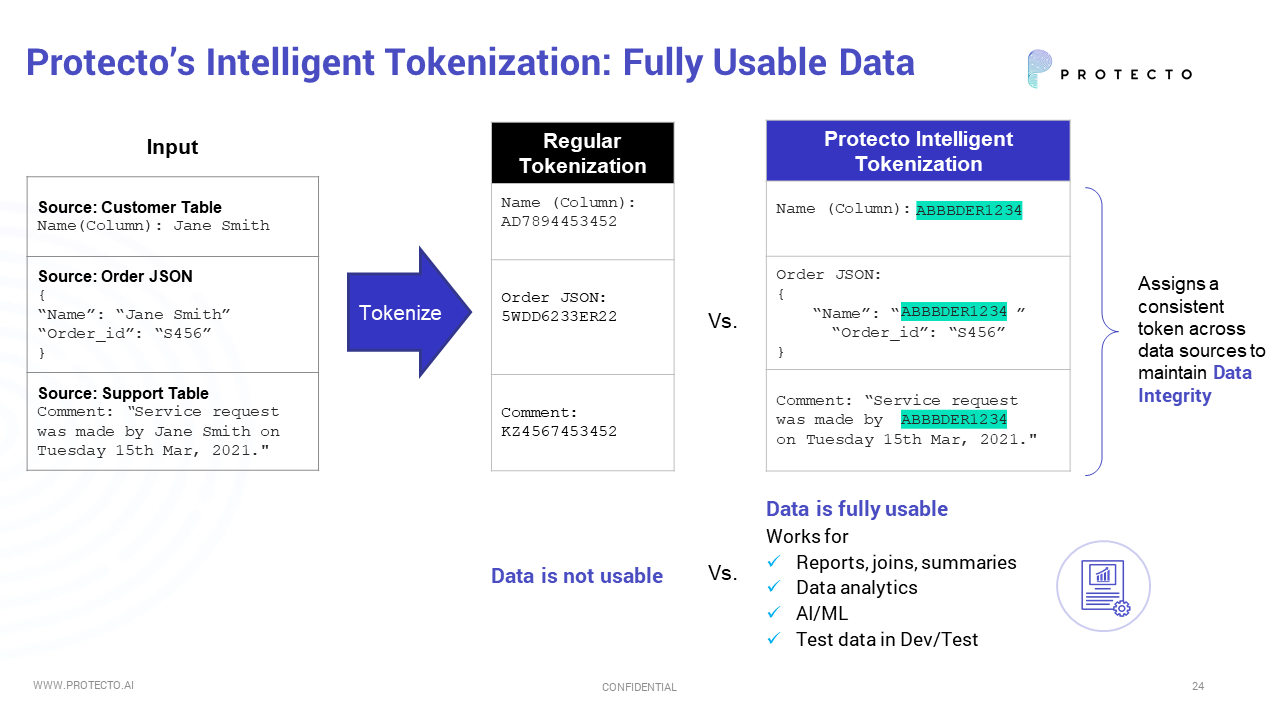

Protecto’s technology offers another significant advantage: providing consistent tokens across multiple data sources. Consistent tokens mean that tokenized data can be easily merged, summarized, analyzed, and even fed into AI/ML models without any issues. With Protecto, organizations can enjoy the benefits of tokenization without compromising on the quality or usability of their data.
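The value of consistent tokens is easiest to see with a join. The sketch below assumes a simple in-memory mapping (the hypothetical `TokenVault` class) that issues a random token the first time it sees a value and returns the same token afterwards. It is not Protecto's implementation, and the sample records are invented for the example.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault: the same input always gets the same token."""

    def __init__(self):
        self._forward = {}   # original value -> token
        self._issued = set()  # tokens already handed out

    def tokenize(self, value):
        if value not in self._forward:
            token = "tok_" + secrets.token_hex(8)
            while token in self._issued:  # extremely unlikely, but avoid collisions
                token = "tok_" + secrets.token_hex(8)
            self._forward[value] = token
            self._issued.add(token)
        return self._forward[value]


vault = TokenVault()

# Two separate sources that share a sensitive key (sample data for illustration).
crm = [{"email": "ana@example.com", "plan": "pro"},
       {"email": "bo@example.com", "plan": "free"}]
billing = [{"email": "bo@example.com", "amount": 0},
           {"email": "ana@example.com", "amount": 120}]

crm_tok = [{**row, "email": vault.tokenize(row["email"])} for row in crm]
billing_tok = [{**row, "email": vault.tokenize(row["email"])} for row in billing]

# Join on the tokenized key; no original email is needed.
amount_by_token = {row["email"]: row["amount"] for row in billing_tok}
joined = [{**row, "amount": amount_by_token[row["email"]]} for row in crm_tok]
print(joined)
```

Because both sources were tokenized through the same mapping, the join lines up the `pro` customer with the 120 charge even though neither table contains a real email address any longer.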

Protecto’s intelligent tokenization makes the data fully usable across reporting, analytics, and AI/ML.

Moreover, our tokenization process is highly secure and is designed so that sensitive information is never exposed. It uses advanced algorithms to generate random tokens that cannot be reverse engineered. This makes it virtually impossible for unauthorized individuals to re-identify sensitive data using brute force or to uncover original data from identifiable patterns. Additionally, Protecto uses encryption and other security measures to keep the data secure.

The tokenized data is compliant with data protection regulations, making it an ideal solution for organizations that need to comply with strict data protection requirements.

Experience the benefits of Protecto for yourself with a free trial! Get started now – no complex installation or implementation is required.