(Also watch this video: Introduction to Modern Data Stack)

Data is truly transformative. However, organizations can uncover value only if they can effectively manage data through its lifecycle. A modern data stack is a collection of pluggable tools that help enterprises collect, store, process, share, distribute, and protect data, ultimately helping companies extract more value from their data.

As businesses evolve, what data is collected and how it is used are changing. Data’s growing role creates many challenges, including delivering scalable infrastructure, ensuring quality, and protecting data. However, by modernizing their data stack, organizations can address these new and unique data challenges in a way that powers innovation and enhances business productivity.

What is a Modern Data Stack?

A modern data stack is a system of tools and technologies that together provide a comprehensive solution for collecting, storing, processing, and analyzing data. It is designed to enable organizations to access and gain insights from their data quickly, no matter the size or complexity. By utilizing the latest advances in data storage and processing techniques, a modern data stack can provide a secure, scalable, and cost-effective way to manage data.

A modern data stack is comprised of four distinct layers—collection, storage, processing, and analysis. The collection layer is responsible for gathering data from a variety of sources, such as websites, mobile apps, and IoT devices. The storage layer stores the data in a secure and reliable format, such as a database or cloud storage. The processing layer then takes the data and prepares it for analysis by transforming and aggregating it into a usable format. Finally, the analysis layer is used to uncover insights from the data and make informed decisions.

Why is the Modern Data Stack Important?

The modern data stack is an essential asset for businesses as it provides a comprehensive, unified perspective of the data. By integrating data from multiple sources, storing it in a central location, and providing an array of tools and services to make the data easier to understand and analyze, the modern data stack facilitates businesses to gain invaluable insights into their customers, optimize their operations, and make informed decisions.

The modern data stack also helps ensure data accuracy and reliability, as it consolidates data from multiple sources for comparison and analysis. With the modern data stack, businesses can make better use of their data, as well as identify opportunities for improvement and growth. Ultimately, the modern data stack helps businesses stay competitive in an ever-evolving market.

Characteristics of a Modern Data Stack

Here are the three characteristics of a modern data stack.

1. Scalable

Perhaps the most fundamental difference between a legacy and a modern data stack is that a modern data stack is hosted in the cloud. So, organizations can quickly address growing data needs without bearing the financial burdens or long lead time typically associated with traditional scaling options.

2. Easy to deploy

In addition, a modern data stack requires very little or basic technical configuration by the user. As a result, teams can scale and immediately get to speed with different stack layers.

3. Plug and play

One of the key characteristics of a modern data stack is that it is a pluggable stack of tools. Companies can pick the best-of-breed services to address their needs.

Layers of a modern data stack

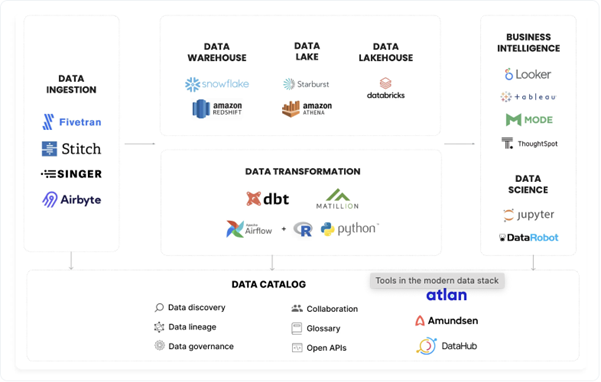

Let’s look at the key components of a modern data stack. Here is a simple rundown of a modern data stack’s core tools and capabilities. (Please note: The stack is evolving, and new layers are added.)

Data Ingestion

This includes pipelines to bring data from various sources, including websites, transaction systems, and various apps, into your central data storage.

> ETL

Raw data must go through a set of processes, including cleaning, normalizing, joining, and summarizing, to make it consumable across the company. This layer offers frameworks and common features to extract, transform, and load data.

> Data storage

Many cloud solutions, such as Snowflake, AWS Redshift, Databricks, etc., offer data warehouses, data lakes, and lakehouses that help businesses to store and process data.

> Semantic or Metric Layer

Instead of each BI tool writing SQL queries to fetch data, which could lead to different diverging definitions, the semantic layer provides defined APIs that provide the data objects. Semantic Layer provides APIs that convert metric computation requests into SQL queries and run them against the data warehouse. The semantic layer achieves consistent reporting by sitting between data models and BI tools.

> Reverse ETL

These tools move transformed data from data storage back to third-party business tools such as sales operations and CRMs. Delivering insights back to operational tools improves the velocity and quality of operational decisions.

> Business Intelligence Tools

The visualization layer helps teams build and deliver insights into various parts of the business. This layer includes tools that generate reports, dashboards, and alerts.

> Data Quality Management

It helps data engineers to observe data in motion and at rest to ensure data quality. Manage data catalog, lineage, and other metadata to manage data lifecycle.

> Privacy and governance

With growing data privacy laws, businesses must invest in tools that help them protect data privacy and security. Tools like Protecto can help you discover sensitive data and identify data privacy and security issues, such as who is accessing personal data, how the data is used, and what data is overexposed, so that they can take remedial steps in addressing privacy risks.

How A Modern Data Stack Impacts your organization

A modern data stack provides organizations with a number of key advantages in terms of accessing and analyzing their data more effectively. By enabling efficient data sharing across various systems, platforms, and applications, businesses can benefit from improved insights and decisions. This can result in better operational performance and strategic planning. Furthermore, with a modern data stack, it’s possible to connect multiple data sources, allowing for a more comprehensive view of customer data and market trends.

It also allows for the easy integration of new data sources, creating a more dynamic data infrastructure. Additionally, a modern data stack facilitates faster data processing, enabling businesses to react quickly to changing market conditions and customer needs. Ultimately, a modern data stack provides an invaluable resource to organizations looking to make the most of their data.

Difference Between Traditional and Modern Data Stacks

A traditional data stack typically consists of a database, a data warehouse, and a range of associated tools used to analyze and interpret the data. This setup has been in use for some time and has been proven to work well in certain scenarios. However, modern data stacks are now becoming increasingly popular due to their cloud-native technologies, such as cloud databases, big data analytics tools, and distributed storage solutions. This allows for greater scalability, flexibility, and faster access to data for analysis and reporting.

Furthermore, modern data stacks offer more sophisticated data processing capabilities, such as machine learning and artificial intelligence, which can provide insights that more traditional approaches may struggle to uncover. Such advanced analytics can help to uncover hidden patterns in the data, which can be used to inform decisions and strategies. Additionally, the data can be accessed from anywhere in the world, making it easier for organizations to collaborate and share data with other parties in a timely manner. All in all, modern data stacks offer organizations the chance to leverage their data in ways that were not previously possible.

Modern Data Stacks: FAQ

Q: What is the role of data visualization in modern data stacks?

A: Data visualization is an important part of modern data stacks as it allows users to quickly and easily identify patterns, trends, and correlations in their data. Visualizations can present complex data sets in a way that is easier to understand, making it easier for users to make informed decisions.

Q: How does Big Data fit into the modern data stack?

A: Big Data can play an important role in a modern data stack. It can provide a variety of useful insights, from identifying customer patterns and trends to uncovering new opportunities for product innovation. Additionally, Big Data can be used to identify areas of improvement or areas where a company may be falling short. Big Data can also be used to understand customer behavior and preferences better, leading to more effective marketing and customer service initiatives.

Interested in knowing more about how to supercharge your data protection and privacy compliance? Get in touch with us to request a demo.