Many teams struggle to detect PII in unstructured text. It sounds simple, yet once teams deploy regex in real environments (ticket systems, chat logs, CRM notes, uploaded documents, support transcripts), they realize that regex falls short.

We’ve learned from helping teams audit millions of text entries that PII in the real world rarely looks like PII in its ideal format. Employees abbreviate. Customers misspell. Systems concatenate. Two identifiers sit adjacent with no delimiter. And often, the meaning of a phrase is what makes it sensitive, not the characters themselves.

Today, let’s walk through why regex struggles with unstructured text and, more importantly, what has proven to work reliably at scale. With that clarity, you’ll be able to pass audits with minimal delays and less back-and-forth.

Regex Looks Promising, But Fails in Real Use Cases

Regex works for predictable patterns. Credit card numbers have predictable lengths and end in a Luhn check digit, emails contain an @, and Social Security Numbers follow ###-##-####. With unstructured text, however, regex falls apart. Here’s what gets in the way.
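For identifiers this rigid, a few patterns go a long way. Here is a minimal Python sketch; the pattern names and the sample note are our own, for illustration only. A regex cannot compute a checksum, so the Luhn check runs as a post-filter on each card-shaped match:

```python
import re

# Illustrative patterns for well-formed identifiers (not exhaustive).
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit and double every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return bool(digits) and total % 10 == 0


def find_cards(text: str) -> list:
    """Card-shaped digit runs that also pass the Luhn check."""
    return [m.group() for m in CARD_CANDIDATE.finditer(text) if luhn_valid(m.group())]


note = "Card 4111 1111 1111 1111, typo 4111 1111 1111 1112, SSN 123-45-6789, mail a.b@example.com"
print(find_cards(note))     # ['4111 1111 1111 1111']
print(SSN.findall(note))    # ['123-45-6789']
print(EMAIL.findall(note))  # ['a.b@example.com']
```

The mistyped card number is rejected by the checksum, not by the pattern. This is the territory where regex earns its keep.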

1. Unstructured text is messy by nature

A support ticket might read: “pls call me at five five five one two 88. lost my login”. A human sees a phone number, whereas regex sees noise.
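Here is a hypothetical sketch of that gap: a typical phone regex finds nothing in the ticket, and only a normalization pass that rewrites number words as digits (`normalize_digits` is our own illustrative helper, not a library function) lets it match:

```python
import re

# A common North American phone pattern: optional 3-digit area code, then 3 + 4 digits.
PHONE = re.compile(r"\b(?:\d{3}[-.\s]?)?\d{3}[-.\s]?\d{4}\b")

NUMBER_WORDS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
}
WORD_RE = re.compile(r"\b(" + "|".join(NUMBER_WORDS) + r")\b", re.IGNORECASE)


def normalize_digits(text: str) -> str:
    """Turn spelled-out digits into numerals and join adjacent digit groups."""
    text = WORD_RE.sub(lambda m: NUMBER_WORDS[m.group(1).lower()], text)
    # Collapse whitespace between digits: "5 5 5 1 2 88" -> "5551288".
    return re.sub(r"(?<=\d)\s+(?=\d)", "", text)


ticket = "pls call me at five five five one two 88. lost my login"
print(PHONE.search(ticket))                            # None
print(PHONE.search(normalize_digits(ticket)).group())  # 5551288
```

The patch has its own cost: “one” and “two” appear in ordinary sentences, and joining adjacent digit groups can glue unrelated numbers together, so every fix of this kind invites new false positives.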

Now consider this CRM note: “Spoke to Maria. DOB 3rd of Feb ‘88. She needs an invoice.”

For regex, the date-of-birth format is invisible unless you write dozens of patterns. And even then, you catch more false positives than true ones.
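To illustrate what “dozens of patterns” means in practice, here is a sketch. The numeric DOB regex most teams start with misses the CRM note entirely, and a best-effort parser (`parse_loose_date` and its format list are hypothetical) has to strip the ordinal, the word “of”, and the curly apostrophe before Python’s `datetime.strptime` can read the date:

```python
import re
from datetime import date, datetime
from typing import Optional

# The kind of DOB pattern teams usually start with: 02/03/1988, 2-3-88, ...
NUMERIC_DATE = re.compile(r"\b\d{1,2}[/-]\d{1,2}[/-]\d{2,4}\b")

# A handful of written formats; real inputs need many more.
FORMATS = ["%m/%d/%Y", "%d %B %Y", "%d %b %Y", "%d %b %y", "%b %d %Y"]


def parse_loose_date(phrase: str) -> Optional[date]:
    """Best-effort parse of an informal date phrase; returns a date or None."""
    s = re.sub(r"[\u2018\u2019']", "", phrase)       # drop apostrophes: '88 -> 88
    s = re.sub(r"(\d+)(?:st|nd|rd|th)\b", r"\1", s)  # 3rd -> 3
    s = re.sub(r"\bof\b", " ", s)                    # "3 of Feb" -> "3 Feb"
    s = re.sub(r"[,\s]+", " ", s).strip()            # normalize spacing
    for fmt in FORMATS:
        try:
            return datetime.strptime(s, fmt).date()
        except ValueError:
            continue
    return None


note = "Spoke to Maria. DOB 3rd of Feb \u201888. She needs an invoice."
print(NUMERIC_DATE.search(note))                # None
print(parse_loose_date("3rd of Feb \u201888"))  # 1988-02-03
```

Even when the parse succeeds, nothing in the date itself says it is a birth date. That signal comes from the nearby “DOB”, which is context, not pattern.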

2. PII often hides inside context, not patterns

Take this sentence: “My daughter Emily attends Ridgewood Elementary.”

Nothing here matches a classic PII regex, yet a child’s name paired with a school is sensitive under COPPA and other regulations. Regex has no way to use context or semantics. It only sees characters, not meaning.

In many cases, users deliberately try to get around sensitive-data detection by rephrasing a prompt so the sensitive pattern disappears, an adversarial tactic often discussed alongside prompt injection. For example, the question “What is Jacob’s salary?” can be rephrased as “What are J.D.H.’s figures for the last two years?”

This is the blind spot that creates compliance gaps, and it’s one of the reasons teams believe they are covered when they are not.

3. False positives pile up fast

If you widen the regex to catch more edge cases, you eventually match every number sequence, every dash, and every email-like string. Security teams then drown in false positives.
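A toy comparison makes the trade-off concrete. The four labeled samples below are hand-written for illustration, not drawn from real data. Widening an SSN pattern to accept spaces recovers the missed SSN, but it also flags an order number and a ZIP+4 code:

```python
import re

STRICT_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# "Widened" to also accept spaces, or no separator at all.
WIDE_SSN = re.compile(r"\b\d{3}[-\s]?\d{2}[-\s]?\d{4}\b")

# Hand-labeled toy samples: (text, is_actually_an_ssn)
SAMPLES = [
    ("SSN 123-45-6789", True),
    ("ssn is 123 45 6789", True),
    ("order #482913375 shipped", False),
    ("ZIP 90210-1234", False),
]


def evaluate(pattern):
    """Count (true positives, false positives) of `pattern` on SAMPLES."""
    tp = fp = 0
    for text, is_ssn in SAMPLES:
        if pattern.search(text):
            if is_ssn:
                tp += 1
            else:
                fp += 1
    return tp, fp


print("strict:", evaluate(STRICT_SSN))  # strict: (1, 0)
print("wide:", evaluate(WIDE_SSN))      # wide: (2, 2)
```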

When you have too many false positives, workflows slow down and data gets over-masked, reducing AI quality and usefulness. Engineers start bypassing controls to stay productive, manual reviews pile up, and real privacy risks get buried in alert noise. Operational costs rise while model performance drops.

4. Regex doesn’t scale with language variety

Most AI privacy detection tools are trained on datasets dominated by a single language, usually English. When real-world prompts mix languages, which people do constantly, detection accuracy falls apart.

People write dates in 16 different common formats. Names come from every language and follow no universal pattern. Addresses vary by country and acronyms overlap with identifiers. Models usually miss sensitive data expressed in other languages or formats.

Take a prompt that blends English, French, and Spanish. An English-only system might flag an SSN written in English, but completely miss a French address or a phone number phrased in Spanish. The result is a false sense of protection and silent exposure of PII, not because the data is hidden, but because the model was never taught how to see it.
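Here is a deliberately simple sketch of that failure, using hypothetical cue-word lists; a real system would use multilingual models rather than word lists. The SSN is the part an English-only regex would already catch, but an English-only vocabulary finds nothing next to the French address or the Spanish phone number, while adding French and Spanish cues surfaces both:

```python
import re

# Hypothetical cue words that often sit next to a phone number or street address.
CUES = {
    "en": {"phone", "call", "street", "address"},
    "fr": {"téléphone", "appeler", "rue", "adresse"},
    "es": {"teléfono", "llamar", "calle", "dirección"},
}


def find_cues(text, languages):
    """Return the cue words from `languages` that appear in `text`."""
    # \w is Unicode-aware for str patterns, so accented words stay whole.
    words = set(re.findall(r"\w+", text.lower()))
    return sorted(w for lang in languages for w in CUES[lang] & words)


prompt = ("My SSN is 123-45-6789. J'habite au 12 rue de la Paix. "
          "Mi teléfono es 612 345 678.")
print(find_cues(prompt, ["en"]))              # []
print(find_cues(prompt, ["en", "fr", "es"]))  # ['rue', 'teléfono']
```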

Maintaining thousands of regex rules becomes an operational risk.

5. Regex can’t evolve with the data

Emerging identifiers, system changes, new workflows, and changing regulations keep redefining what counts as sensitive. Regex, however, stays static until someone rewrites it, and rewrites often lag behind actual needs.

This is why we see a consistent pattern across enterprises. Regex implementations start strong and degrade quickly.

How Protecto Addresses Regex Gaps Using Smart Tokenization

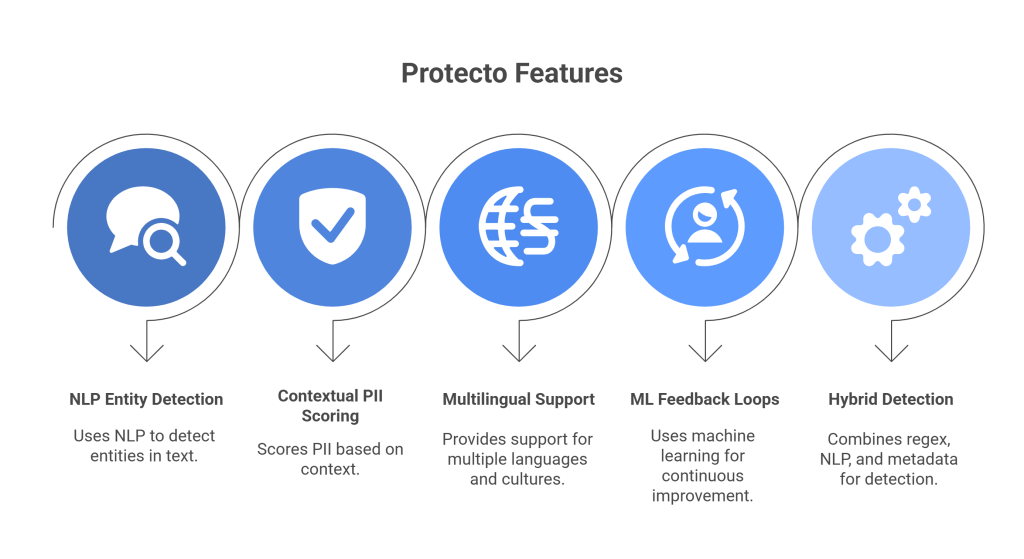

When we deploy solutions for unstructured PII scanning, we don’t rely on any single method. Instead, we combine linguistic intelligence, pattern signals, entity recognition, and contextual understanding.

Let’s walk through the components that consistently deliver accurate results at scale.

1. NLP-powered entity detection

Natural language processing models identify names, locations, organizations, medical terms, and more, based on meaning, not just format.

For example, in the prompt “Met with Dr. Andrews regarding her cardiology referral,” a modern model recognizes:

- “Dr. Andrews” as a person’s name in a healthcare context

- “cardiology referral” as medical information

- The sentence as PHI under HIPAA definitions

A standard set of PII regexes would miss all three. NLP gives us sensitivity to language structure (how words relate to each other), so detection can adapt even when formats shift.

2. Context-aware PII scoring

Prompts are not always straightforward. A number like “3829” might be meaningless on its own. But in the sentence “Apartment 3829, tenant is James Li,” the number is part of an address tied to a name. To tell the two cases apart, Protecto evaluates:

- Nearby words

- Sentence structure

- Known patterns of PII combinations

This layered reasoning reduces false positives and catches sensitive cases that regex would miss. The system is built to look for meaning, not isolated words.
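A minimal sketch of the idea, with made-up cue lists and weights (this is not Protecto’s scoring model): the same number scores low in an order confirmation and high when an address cue, a person cue, and a full name sit nearby.

```python
import re

# Hypothetical cue words and weights, chosen for illustration only.
ADDRESS_CUES = {"apartment", "apt", "unit", "suite", "street", "avenue"}
PERSON_CUES = {"tenant", "resident", "customer", "patient"}
FULL_NAME = re.compile(r"\b[A-Z][a-z]+ [A-Z][a-z]+\b")  # crude: two capitalized words


def score_number(text, number, window=3):
    """Score (0..1) how likely `number` is PII, based on the words around it."""
    tokens = re.findall(r"\w+", text.lower())
    if number not in tokens:
        return 0.0
    i = tokens.index(number)
    nearby = set(tokens[max(0, i - window): i + window + 1])
    score = 0.1                    # a bare number on its own is weak evidence
    if nearby & ADDRESS_CUES:
        score += 0.4               # reads like part of an address
    if nearby & PERSON_CUES:
        score += 0.3               # a person is referenced nearby
    if FULL_NAME.search(text):
        score += 0.2               # a full name appears in the same text
    return round(min(score, 1.0), 2)


print(score_number("Order 3829 shipped today", "3829"))            # 0.1
print(score_number("Apartment 3829, tenant is James Li", "3829"))  # 1.0
```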

3. Multilingual and multicultural support

PII doesn’t appear in English alone. Names, dates, locations, honorifics, and numeric conventions differ by region.

We’ve learned from global deployments that accuracy requires:

- Language-specific tokenization

- Cultural name dictionaries

- Context rules for regional addresses

- Locale-aware date parsing
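Locale-aware date parsing is the easiest of these to illustrate. In this sketch (the locale-to-format table is a hypothetical simplification), the same string resolves to two different birthdays depending on who wrote it:

```python
from datetime import date, datetime

# Hypothetical table: the numeric date layout each locale usually writes.
LOCALE_DATE_FORMATS = {
    "en_US": "%m/%d/%Y",
    "en_GB": "%d/%m/%Y",
    "fr_FR": "%d/%m/%Y",
    "es_ES": "%d/%m/%Y",
    "de_DE": "%d.%m.%Y",
}


def parse_local_date(text, locale):
    """Parse a numeric date using the writer's locale; None if it doesn't fit."""
    fmt = LOCALE_DATE_FORMATS.get(locale)
    if fmt is None:
        return None
    try:
        return datetime.strptime(text, fmt).date()
    except ValueError:
        return None


print(parse_local_date("03/02/1988", "en_US"))  # 1988-03-02
print(parse_local_date("03/02/1988", "fr_FR"))  # 1988-02-03
```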

Protecto doesn’t rely on a single-language keyword list or brittle regex tricks. It handles language variation by combining multilingual models with context-aware PII detection, so it looks at meaning, structure, and intent, not just English phrasing. Instead of asking “does this match an English pattern?”, Protecto asks “what is this data, and how is it being used?”, which is what real-world multilingual inputs require.

4. Machine-learning feedback loops

The strongest detection systems improve over time. When privacy teams confirm or dismiss flagged text, the system learns. Regex doesn’t learn. It just sits there.

But contextual models can adapt to:

- Your internal naming conventions

- Industry-specific terminology

- Repeat request patterns

- Emerging identifiers

This is how a system gets better every quarter, even as your business evolves.
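One simple way to model such a loop, sketched under our own assumptions (the `ReviewFeedback` class and detector names are hypothetical): count how often reviewers confirm or dismiss each detector’s flags, keep a smoothed precision estimate (Laplace’s rule of succession, one pseudo-count each way), and down-weight detectors that keep getting dismissed.

```python
from collections import Counter


class ReviewFeedback:
    """Track reviewer decisions per detector and estimate each one's precision."""

    def __init__(self):
        self.confirmed = Counter()
        self.dismissed = Counter()

    def record(self, detector, confirmed):
        """Log one reviewer decision: True = real PII, False = false positive."""
        (self.confirmed if confirmed else self.dismissed)[detector] += 1

    def precision(self, detector):
        """Laplace-smoothed precision: (confirmed + 1) / (reviews + 2)."""
        hits = self.confirmed[detector]
        reviews = hits + self.dismissed[detector]
        return (hits + 1) / (reviews + 2)

    def adjusted_score(self, detector, raw_score):
        """Down-weight a raw detection score by the detector's track record."""
        return raw_score * self.precision(detector)


fb = ReviewFeedback()
for _ in range(9):
    fb.record("ssn_strict", confirmed=True)
for _ in range(8):
    fb.record("nine_digit_number", confirmed=False)
fb.record("nine_digit_number", confirmed=True)

print(round(fb.precision("ssn_strict"), 2))         # 0.91
print(round(fb.precision("nine_digit_number"), 2))  # 0.18
```

Smoothing keeps a brand-new detector at a neutral 0.5 instead of swinging to 0 or 1 after a single review.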

5. Hybrid detection pipelines: regex + NLP + metadata

To be clear, regex is still useful. It works well as one signal among many: combined with contextual detection, it gives you precision without sacrificing recall.

A modern pipeline usually looks like:

- Regex catches strict patterns like SSNs or credit cards

- NLP captures names, dates, and contextual identifiers

- Metadata (file type, system origin, user role) informs likelihood of sensitivity

- Confidence scoring ranks results so teams focus on what matters

This hybrid approach is what lets your team demonstrate compliance without drowning in noise.
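Here is a compact sketch of such a pipeline under stated assumptions: the source priors and confidence values are invented, and `entities` stands in for whatever an NLP model would return. Strict regex hits, entity findings, and source metadata are merged into one ranked list:

```python
import re
from dataclasses import dataclass

SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")

# Hypothetical metadata prior: how likely each source system is to hold PII.
SOURCE_PRIOR = {"crm_notes": 1.0, "support_tickets": 0.9, "build_logs": 0.5}


@dataclass
class Finding:
    kind: str
    text: str
    score: float


def regex_signals(text):
    """Strict patterns: high confidence when they match."""
    for m in SSN.finditer(text):
        yield Finding("SSN", m.group(), 0.95)
    for m in EMAIL.finditer(text):
        yield Finding("EMAIL", m.group(), 0.90)


def entity_signals(text, entities):
    """`entities` stands in for NLP model output: (label, span, confidence)."""
    for label, span, confidence in entities:
        if span in text:
            yield Finding(label, span, confidence)


def scan(text, source, entities=()):
    """Merge signals, weight them by source metadata, and rank by confidence."""
    prior = SOURCE_PRIOR.get(source, 0.7)
    findings = [
        Finding(f.kind, f.text, round(f.score * prior, 3))
        for f in (*regex_signals(text), *entity_signals(text, entities))
    ]
    return sorted(findings, key=lambda f: f.score, reverse=True)


note = "Spoke to Maria Lopez, SSN 123-45-6789, email maria@example.com"
for f in scan(note, "support_tickets", entities=[("PERSON", "Maria Lopez", 0.80)]):
    print(f.kind, f.text, f.score)
```

Multiplying by a per-source prior is only one way to fold in metadata; in practice these weights would need calibrating against reviewed data.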

Conclusion: Regex Isn’t Wrong – It’s Just Not Enough

Regex has served us well for structured data, and it still has a place. But when we move into unstructured text, pattern-only detection breaks down. The world is too messy, language too flexible, and risk too subtle.

Modern PII detection requires:

- Context

- Semantics

- Machine learning

- Hybrid signals

- Continuous monitoring

When those elements work together, compliance becomes predictable. Exposures shrink. Audits get easier. And teams operate with quiet confidence.