As the adoption of large language models (LLMs) continues to surge, ensuring their security has become a top priority for organizations leveraging AI-powered applications. The OWASP LLM Top 10 for 2025 serves as a critical guideline for understanding and mitigating vulnerabilities specific to LLMs. This framework, modeled after the OWASP Top 10 for web security, highlights the most pressing threats associated with LLM-based applications and provides best practices for securing AI-driven systems.

In this blog, we will explore the OWASP LLM Top 10 for 2025, discuss the LLM vulnerabilities that pose risks to businesses, and outline mitigation strategies to enhance AI security.

What is OWASP and Why Does It Matter for LLMs?

The Open Web Application Security Project (OWASP) is a globally recognized nonprofit organization focused on improving software security. While OWASP originally targeted web applications, the rise of generative AI and LLM-powered applications has prompted a need for security best practices tailored to these advanced models.

The OWASP LLM Top 10 for 2025 identifies the primary threats that organizations face when deploying LLMs, helping businesses secure AI applications against data breaches, adversarial attacks, and model manipulation.

Interested Read: The Evolving Landscape of LLM Security Threats: Staying Ahead of the Curve

OWASP LLM Top 10 for 2025: Key Vulnerabilities & Mitigation Strategies

The OWASP LLM Top 10 for 2025 highlights the most critical security risks in large language model (LLM) applications, including prompt injections, data leakage, and model manipulation. This guide outlines key vulnerabilities and provides effective mitigation strategies to enhance the security and reliability of LLM-based systems.

1. Prompt Injection (LLM-01)

Threat: Attackers craft malicious prompts that manipulate the model’s responses, potentially leading to data leaks, misinformation, or unauthorized access.

Mitigation:

- Implement input validation and filtering to block harmful prompts.

- Use structured prompting techniques to prevent arbitrary instruction execution.

- Enforce role-based access control (RBAC) to limit user permissions.

2. Insecure Plugin & API Integrations (LLM-02)

Threat: LLMs often integrate with third-party plugins and APIs, which can introduce vulnerabilities if not properly secured.

Mitigation:

- Vet third-party integrations for security compliance.

- Implement API authentication mechanisms such as OAuth 2.0.

- Monitor API calls for anomalous behavior.

3. Training Data Poisoning (LLM-03)

Threat: Adversaries can manipulate training data to inject biases, alter model behavior, or introduce backdoors.

Mitigation:

- Establish strict data validation pipelines.

- Regularly audit training data for anomalies.

- Use differential privacy techniques to protect sensitive information.

4. Model Inversion Attacks (LLM-04)

Threat: Attackers attempt to extract training data from LLMs, which can lead to privacy violations, especially when sensitive or proprietary information is involved.

Mitigation:

- Implement rate limiting on queries.

- Use synthetic data augmentation to reduce memorization of sensitive data.

- Apply homomorphic encryption to safeguard model outputs.

5. LLM Supply Chain Risks (LLM-05)

Threat: AI models often rely on open-source repositories, making them susceptible to malicious code injections or compromised dependencies.

Mitigation:

- Maintain strict access controls over model development.

- Regularly audit dependencies for security vulnerabilities.

- Use trusted repositories for model development and deployment.

6. Excessive Output Disclosure (LLM-06)

Threat: LLMs can unintentionally expose sensitive data in their responses, leading to data leaks and regulatory violations.

Mitigation:

- Implement output filtering to block sensitive data exposure.

- Enforce data masking techniques to redact confidential information.

- Utilize context-aware monitoring to detect potential leaks.

7. Hallucinated Content & Misinformation (LLM-07)

Threat: LLMs may generate inaccurate or misleading responses, which can harm reputations or mislead users.

Mitigation:

- Use fact-checking models to verify outputs.

- Train LLMs with validated, high-quality datasets.

- Deploy human-in-the-loop review processes for critical responses.

8. Insecure Model Hosting & Deployment (LLM-08)

Threat: Improperly secured cloud-hosted or self-hosted LLMs can be vulnerable to unauthorized access and exploits.

Mitigation:

- Enforce multi-factor authentication (MFA) for admin access.

- Deploy LLMs in isolated, containerized environments.

- Conduct regular security audits on deployed models.

9. Bias & Fairness Concerns (LLM-09)

Threat: AI models trained on biased data may produce discriminatory outputs, leading to ethical and legal challenges.

Mitigation:

- Continuously evaluate models for bias using fairness metrics.

- Implement bias mitigation techniques during model training.

- Use diverse datasets to improve model generalization.

10. LLM Abuse & Social Engineering Attacks (LLM-10)

Threat: LLMs can be exploited for phishing, impersonation, or generating deceptive content.

Mitigation:

- Restrict LLM-generated automated content dissemination.

- Implement usage monitoring to detect abuse patterns.

- Train security teams to identify AI-driven social engineering threats.

Interested Read: LLM Security: Top Risks and Best Practices

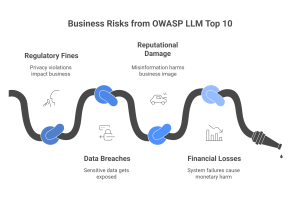

The Impact of OWASP LLM Top 10 on Businesses

As LLMs become integral to business operations, addressing these vulnerabilities is essential for maintaining security, compliance, and user trust. Organizations that fail to mitigate LLM risks face potential consequences such as:

- Regulatory fines due to privacy violations.

- Data breaches expose sensitive customer information.

- Reputational damage from AI-generated misinformation.

- Financial losses due to AI system failures or adversarial attacks.

By proactively implementing OWASP LLM Top 10 recommendations, businesses can enhance AI security and stay ahead of emerging threats in the competitive AI landscape.

Conclusion

The OWASP LLM Top 10 for 2025 serves as an essential security framework for businesses leveraging large language models. From prompt injection attacks to model inversion threats, these vulnerabilities highlight the growing risks associated with AI-powered applications. Organizations must adopt robust security measures to safeguard their AI models, ensure compliance with data privacy regulations, and maintain trust with users.

By following OWASP guidelines, businesses can mitigate risks, improve AI safety, and future-proof their LLM applications in an era of rapid AI advancements.

For more insights into securing LLMs, check out the official OWASP LLM Top 10 resources and stay informed on the latest AI security best practices.