Organizations worldwide are racing to deploy large language models for competitive advantage. Yet many executives remain unaware of the hidden security risks lurking within their AI systems. A single misconfigured LLM can expose customer data, violate regulations, and destroy years of trust-building efforts.

Securing sensitive data in LLM applications requires more than traditional cybersecurity approaches. These AI systems present unique vulnerabilities that demand specialized protection strategies. Breaches can occur through subtle prompt manipulations, training data memorization, or inference attacks that bypass conventional security controls.

Security teams need practical guidance for protecting confidential information across the entire LLM lifecycle. From initial data collection through model deployment and ongoing operations, every phase introduces specific risks that organizations must address systematically.

Understanding the Threats to Sensitive Data in LLMs

Large language models present unique security challenges when processing confidential information. Unlike traditional applications, LLMs can memorize and potentially reproduce sensitive data from their training sets. This creates unprecedented risks for organizations handling personally identifiable information, protected health information, financial records, and trade secrets.

Traditional security measures often fall short with AI systems. Standard perimeter defenses cannot prevent LLMs from leaking information through seemingly innocent responses. The models themselves become potential attack vectors, requiring new approaches to securing sensitive data in LLM applications.

Prompt Injection

Prompt injection attacks manipulate LLM inputs to extract confidential information or bypass security controls. Attackers craft prompts that trick models into revealing training data or ignoring safety instructions.

Direct prompt injection involves malicious instructions embedded in user inputs. Indirect prompt injection occurs when attackers manipulate external data sources that the LLM processes.

Detection methods include:

- Input validation and sanitization

- Pattern recognition for suspicious prompts

- Rate limiting and behavioral analysis

- Response monitoring for unusual outputs
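
The input validation and pattern recognition steps above can be sketched as a small pre-screening function. This is a minimal illustration, not a complete defense: the pattern list, the `MAX_PROMPT_CHARS` limit, and the `screen_prompt` name are all assumptions made for the example, and attackers can rephrase around any fixed list.

```python
import re

# Hypothetical, deliberately small pattern list; a real deployment would
# tune and extend it and combine it with other controls.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your |the )?(system prompt|hidden instructions)", re.IGNORECASE),
    re.compile(r"you are now (in )?(developer|unrestricted) mode", re.IGNORECASE),
]

MAX_PROMPT_CHARS = 4000  # assumed limit, chosen only for illustration


def screen_prompt(prompt: str) -> list[str]:
    """Return the reasons a prompt looks suspicious (empty list if none)."""
    reasons = []
    if len(prompt) > MAX_PROMPT_CHARS:
        reasons.append("prompt exceeds length limit")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            reasons.append(f"matched pattern: {pattern.pattern}")
    return reasons
```

A caller might block, log, or route a prompt for review whenever the returned list is non-empty. Pattern matching of this kind catches only known phrasings, which is why the list above pairs it with rate limiting and response monitoring.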

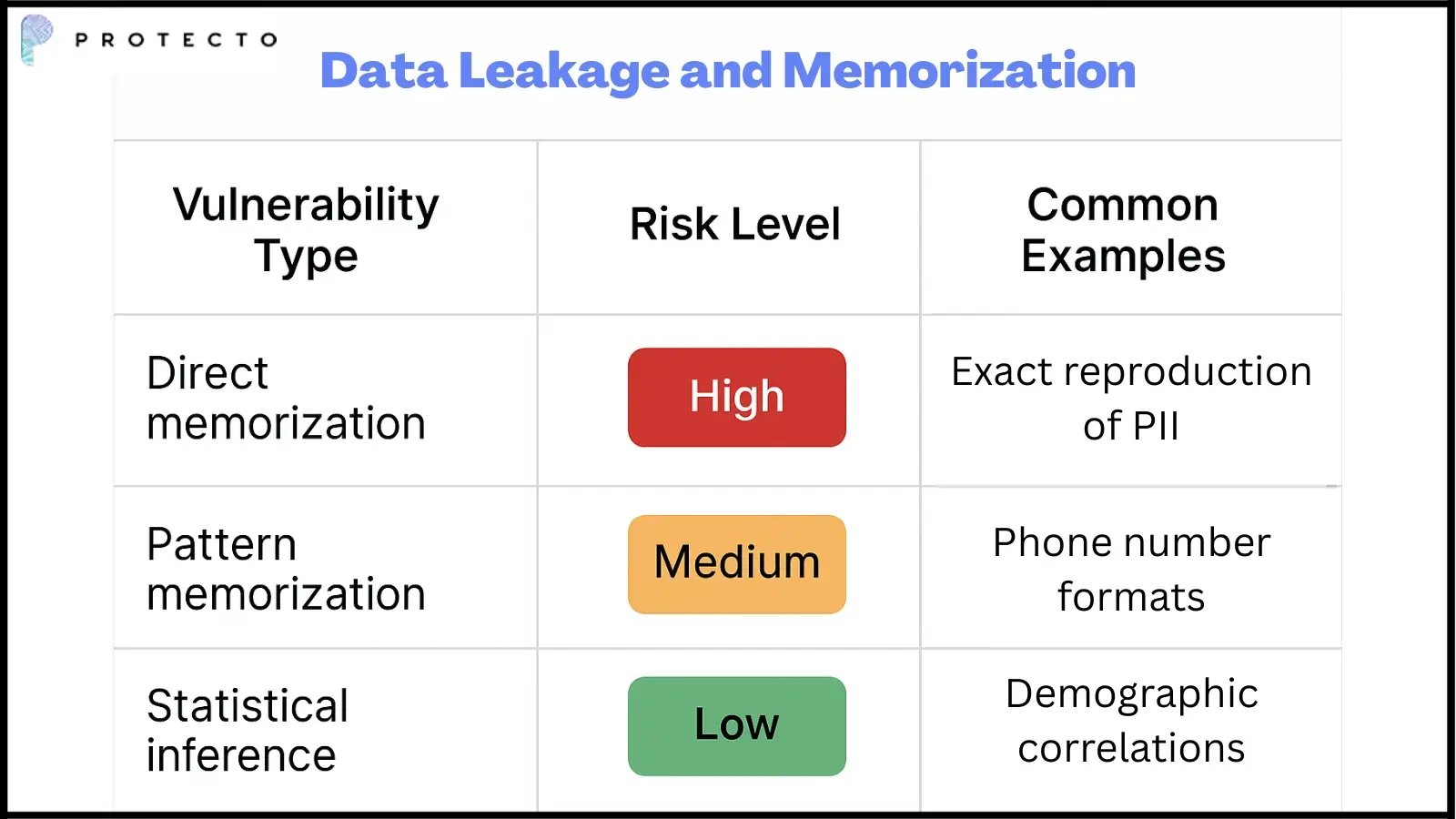

Data Leakage and Memorization

LLMs can memorize specific information from training data and reproduce it during inference. Training data extraction attacks use carefully designed queries to recover sensitive information the model learned during training.

Recent research shows models can memorize email addresses, phone numbers, and even entire documents. This poses significant risks for organizations using LLMs trained on proprietary data.
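
One way to limit the impact of memorized contact details is to scan model responses before they reach the user. The sketch below assumes simple regular expressions for email addresses and US-style phone numbers; the `redact_response` name and both patterns are illustrative assumptions and will miss many real-world formats.

```python
import re

# Simplified patterns for illustration; they do not cover every valid format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")  # US-style numbers only


def redact_response(text: str) -> tuple[str, int]:
    """Replace emails and phone numbers; return the redacted text and hit count."""
    text, email_hits = EMAIL_RE.subn("[EMAIL]", text)
    text, phone_hits = PHONE_RE.subn("[PHONE]", text)
    return text, email_hits + phone_hits
```

A non-zero hit count is also a useful signal in its own right, since repeated hits for one client may indicate an extraction attempt.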

Model Theft and Inference Attacks

Model theft attacks attempt to replicate proprietary LLMs through systematic querying. Inference attacks extract sensitive information about training data using only the model's outputs, without direct access to the training data itself.

These attacks can reveal whether specific individuals were included in training datasets or extract statistical properties of the training data.

Warning signs of potential inference attacks:

- Unusual query patterns targeting specific demographics

- Repeated requests for edge cases or rare scenarios

- Systematic probing of model boundaries

- High-frequency API calls from single sources
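
The last warning sign, high-frequency calls from a single source, is the easiest to detect automatically. Below is a minimal sliding-window sketch; the `QueryRateMonitor` class, its default thresholds, and the idea of keying on a `client_id` string are assumptions for illustration rather than a recommended configuration.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flags clients that exceed a request budget within a sliding time window."""

    def __init__(self, max_requests: int = 100, window_seconds: float = 60.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # client_id -> recent request timestamps

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client is over the limit."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop timestamps that have aged out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests
```

Timestamps are passed in explicitly so the monitor can be driven from log replay as well as live traffic. The other warning signs, such as demographic targeting or boundary probing, need content-level analysis that a counter like this cannot provide.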

Key Compliance Frameworks and Regulations for LLM Security

Organizations deploying LLMs must navigate complex regulatory requirements. Privacy compliance laws directly impact how AI systems collect, process, and store sensitive information.

Most existing privacy regulations were not designed with AI in mind. This creates interpretation challenges and potential compliance gaps that organizations must address proactively.

GDPR Considerations

The General Data Protection Regulation establishes strict requirements for processing personal data in AI systems. Data minimization principles require organizations to limit data collection to what is necessary for specific purposes.

The right to be forgotten poses particular challenges for LLMs. Once trained on personal data, models cannot easily “forget” specific information without complete retraining.

Key GDPR requirements for LLM deployments:

- A lawful basis for data processing, such as consent

- Clear purpose limitation statements

- Technical measures for data protection

- Regular impact assessments

- Breach notification procedures

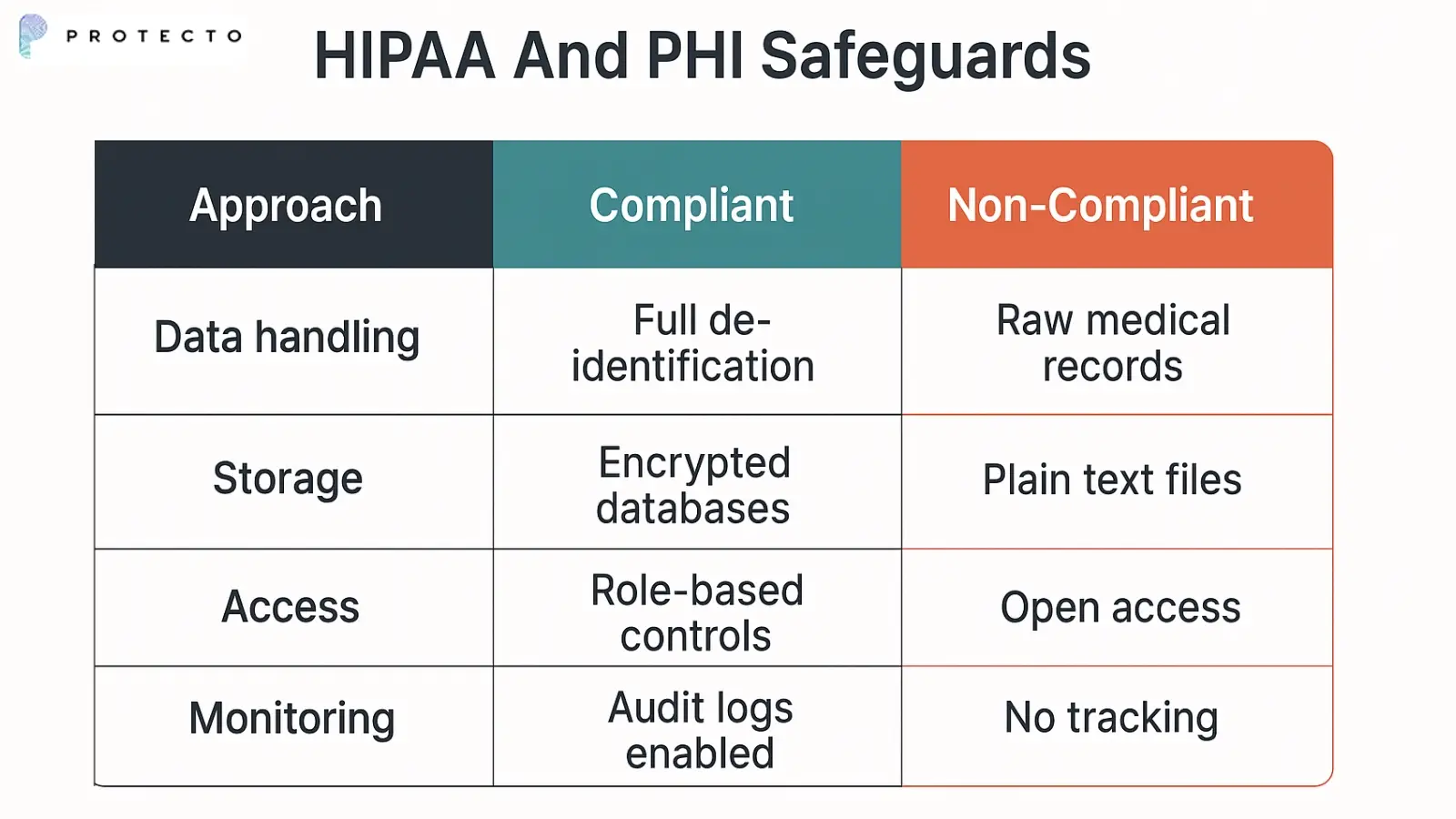

HIPAA and PHI Safeguards

Healthcare organizations using LLMs must comply with strict HIPAA requirements for protected health information. De-identification techniques become crucial when training models on medical data.

LLMs trained on healthcare data can inadvertently memorize patient information. This creates significant liability risks for covered entities.

CCPA and Global Data Privacy Laws

The California Consumer Privacy Act and similar laws grant consumers specific rights over their personal data, including data processed by AI systems. Organizations must implement mechanisms for handling data subject requests, including deletion.

Global privacy laws create complex compliance matrices for multinational deployments. Different jurisdictions have varying requirements for AI governance.

Key compliance requirements across jurisdictions:

- Consumer rights to data access and deletion

- Transparency in automated decision-making

- Data localization requirements

- Cross-border transfer restrictions

- Algorithmic accountability measures

Protecting Confidential Data Across the LLM Lifecycle

Security must be embedded throughout the entire LLM development and deployment process. Each phase presents unique risks that require specific controls and safeguards.

Organizations need systematic approaches that address security from initial data collection through model retirement.

1. Securing Data Collection

Data classification forms the foundation of effective LLM security. Organizations must identify and label sensitive information before it enters training pipelines.

Proper governance processes prevent sensitive data from reaching training environments. This requires automated scanning and human review processes.

Key security controls for data collection:

- Automated PII detection and flagging

- Data source authentication and validation

- Chain of custody documentation

- Regular data quality assessments

- Secure transfer protocols
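
Automated PII detection and flagging can start with simple field-level checks that run before records enter a training pipeline. The sketch below flags US Social Security number patterns and payment card numbers that pass the Luhn checksum. The function names, labels, and regular expressions are assumptions for illustration, and a production scanner would cover far more data types.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Check the Luhn checksum used by payment card numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    if len(digits) < 13:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def flag_record(record: dict[str, str]) -> dict[str, list[str]]:
    """Map each field containing suspected PII to a list of labels."""
    flags = {}
    for field, value in record.items():
        labels = []
        if SSN_RE.search(value):
            labels.append("ssn")
        if any(luhn_valid(m.group()) for m in CARD_RE.finditer(value)):
            labels.append("payment_card")
        if labels:
            flags[field] = labels
    return flags
```

Flagged records can then be quarantined for the human review step described above instead of being dropped silently.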

2. Hardening Training Environments

Training environments require enhanced security controls to protect sensitive data during model development. Isolated computing infrastructure prevents unauthorized access to training data.

Access controls should follow the principle of least privilege. Only authorized personnel should access training environments and associated data.

Essential security controls for training environments:

- Network segmentation and isolation

- Multi-factor authentication for access

- Encrypted storage for all training data

- Regular security assessments and penetration testing

- Comprehensive logging and monitoring

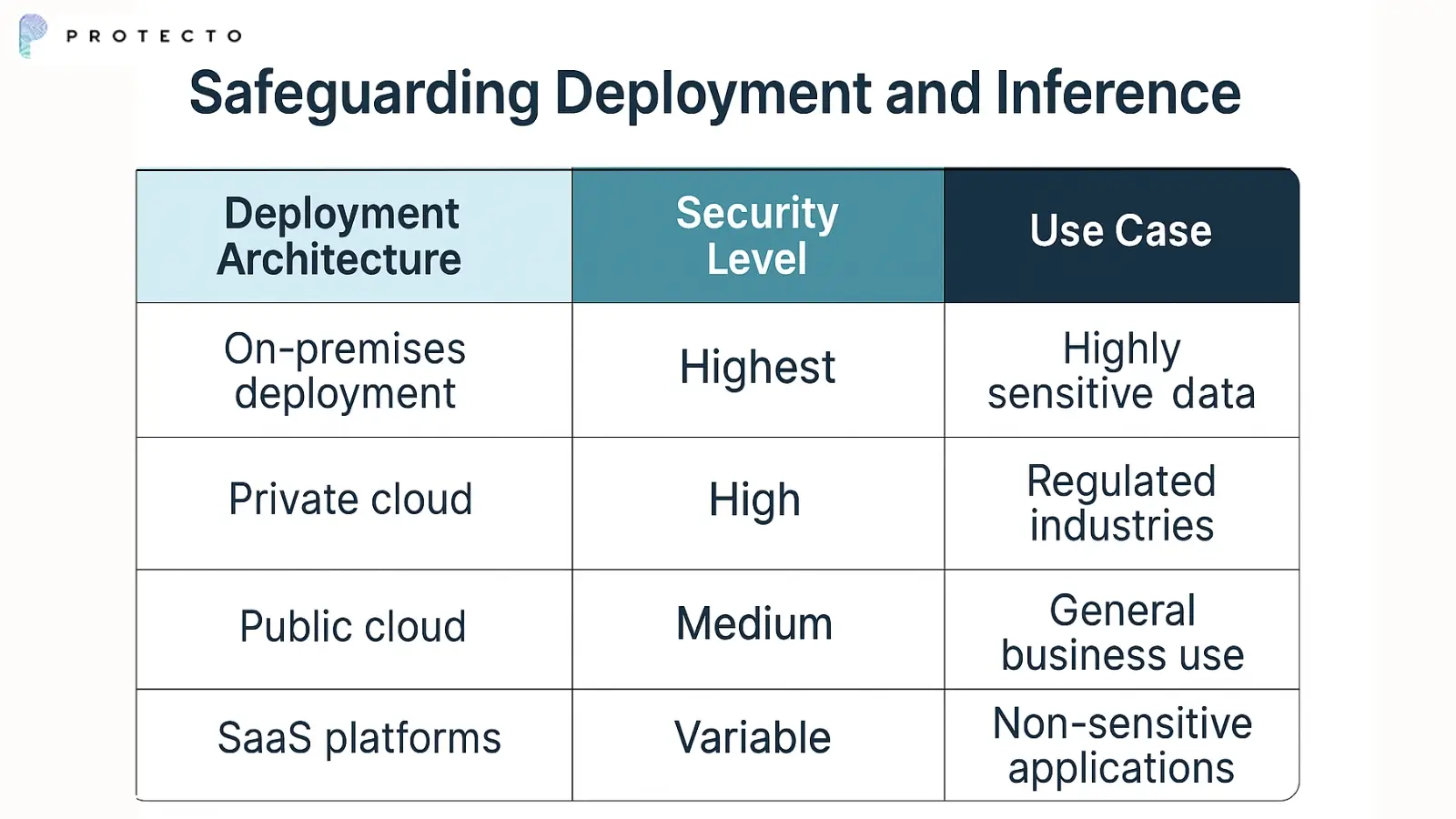

3. Safeguarding Deployment and Inference

Production LLM deployments face security challenges that differ from those in training environments. API integration points become potential attack vectors that require careful protection.

Container security and runtime protection help prevent unauthorized access to deployed models. Organizations must balance security with performance requirements.

4. Continuous Monitoring and Auditing

Ongoing monitoring detects anomalous behavior and potential security incidents. Real-time alerting systems can identify attacks as they occur.

Log analysis reveals patterns that might indicate security breaches or compliance violations. Organizations need comprehensive logging strategies that capture relevant security events.

Key metrics to monitor:

- Unusual query patterns or volumes

- Authentication failures and access attempts

- Sensitive data disclosure incidents

- Model performance degradation

- System resource utilization anomalies

Proven Strategies to Minimize Data Leakage

Practical techniques can significantly reduce the risk of sensitive data exposure through LLM applications. These strategies focus on preventing data leakage rather than detecting it after the fact.

LLM data security requires layered approaches that address multiple potential failure points.

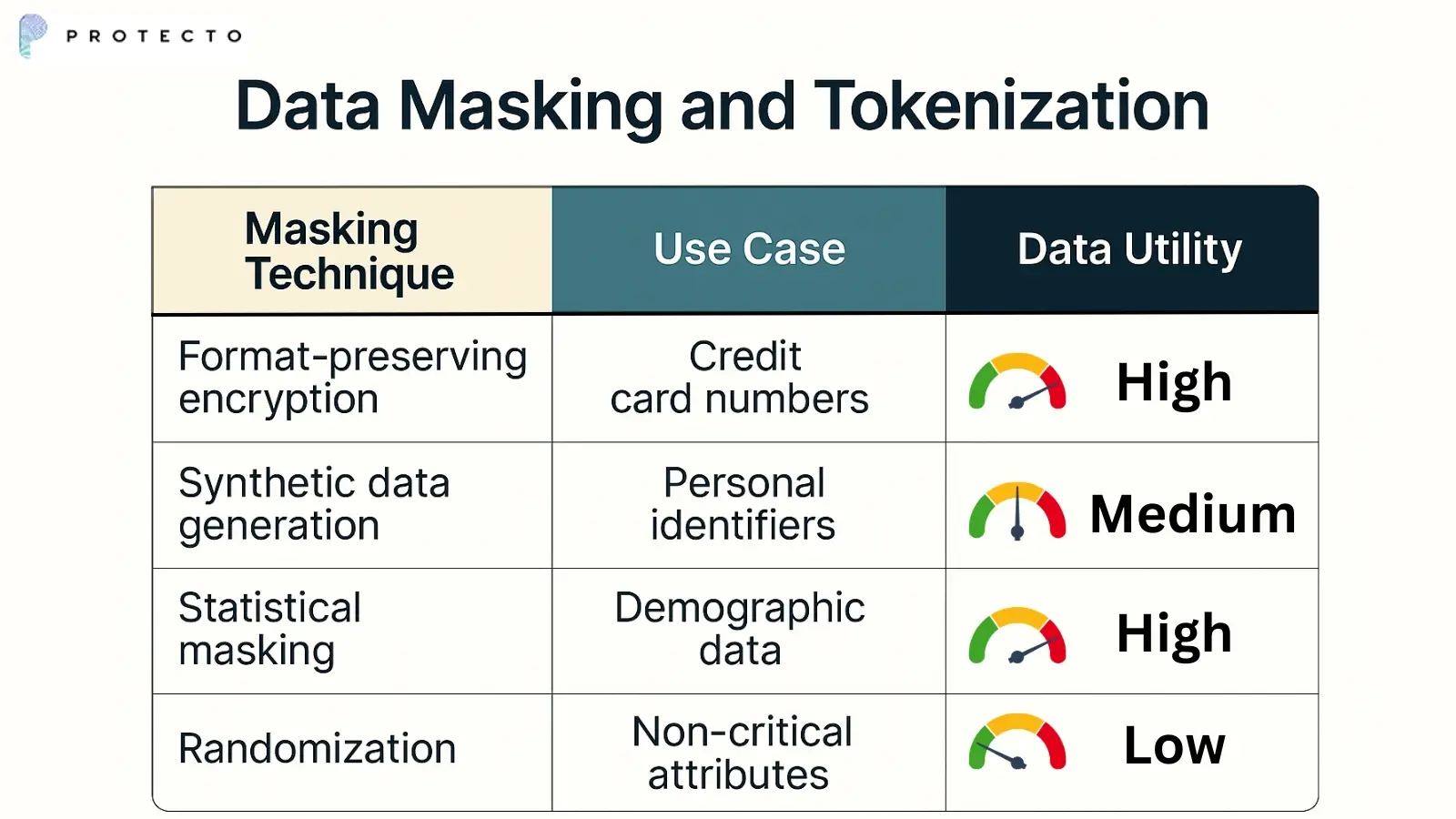

1. Data Masking and Tokenization

Data masking replaces sensitive information with realistic but fictional data during training. Tokenization substitutes sensitive values with non-sensitive tokens that preserve properties such as format, uniqueness, and value frequency.

These techniques allow organizations to train effective models without exposing actual sensitive data. Implementation requires careful attention to maintaining data utility while providing protection.
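
As a rough sketch of both ideas, the example below uses a keyed hash (HMAC-SHA256) to produce deterministic tokens and a simple partial mask for email addresses. This is one possible approach, not the only one: the function names, the `tok` prefix, and the 16-character truncation are assumptions, and the tokens here are one-way, so recovering original values would require a separate lookup vault.

```python
import hashlib
import hmac


def tokenize(value: str, secret_key: bytes, prefix: str = "tok") -> str:
    """Deterministically map a sensitive value to a non-reversible token."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; shorter tokens raise the collision risk.
    return f"{prefix}_{digest[:16]}"


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        return "***"
    return f"{local[:1]}***@{domain}"
```

Because the same input and key always yield the same token, joins and frequency counts still work on tokenized data, which helps preserve utility for training.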

2. Encryption and Secure Storage

Encryption protects sensitive data at rest and in transit throughout the LLM lifecycle. Key management practices determine the effectiveness of encryption implementations.

Organizations must encrypt training data, model parameters, and inference results. This requires comprehensive key management and secure key storage.

Encryption standards and protocols:

- AES-256 for data at rest

- TLS 1.3 for data in transit

- Hardware security modules for key storage

- Regular key rotation schedules

- Multi-party key management for critical systems
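
For the data-in-transit item, a client can refuse anything older than TLS 1.3 using Python's standard `ssl` module. This is a minimal sketch; the `make_tls13_client_context` name is an assumption, and it requires a Python build linked against an OpenSSL version that supports TLS 1.3.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that only negotiates TLS 1.3 or newer."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context
```

The returned context can be passed to standard library clients that accept one, for example `urllib.request.urlopen(url, context=context)`. Encryption at rest and key storage need separate tooling and are not covered by this sketch.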

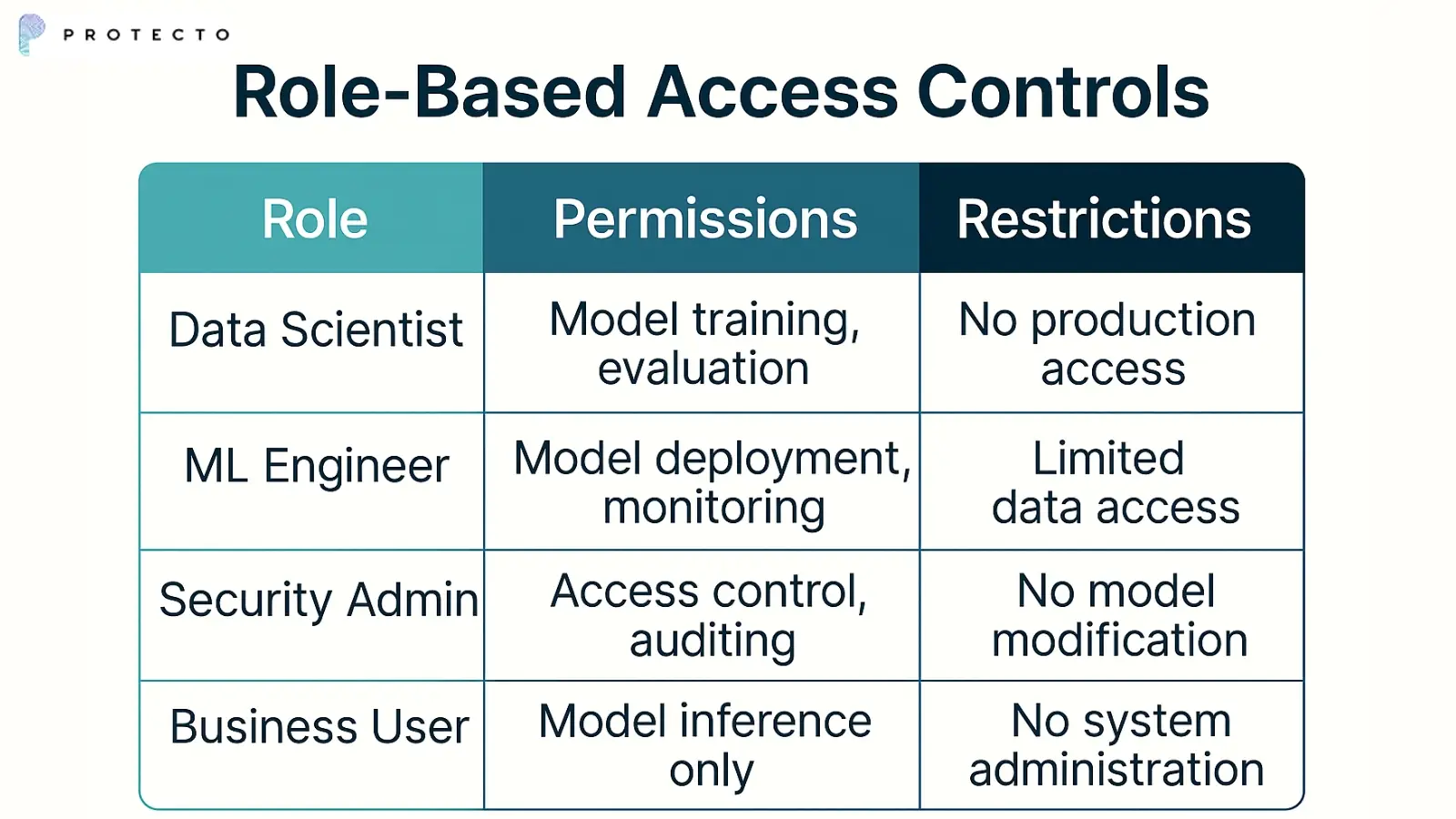

3. Role-Based Access Controls

Role-based access controls limit system access based on job responsibilities and business needs. The principle of least privilege ensures users receive only necessary permissions.

AI systems require specialized roles that traditional IT systems may not address. Organizations need new role definitions for AI developers, data scientists, and model operators.
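
A deny-by-default permission check for such roles might look like the sketch below. The role names follow the ones mentioned above, but the specific permissions and their assignment to roles are assumptions for illustration only.

```python
from enum import Enum


class Permission(Enum):
    READ_TRAINING_DATA = "read_training_data"
    TRAIN_MODEL = "train_model"
    DEPLOY_MODEL = "deploy_model"
    QUERY_MODEL = "query_model"


# Hypothetical role-to-permission mapping; each role gets only what it needs.
ROLE_PERMISSIONS = {
    "data_scientist": {Permission.READ_TRAINING_DATA, Permission.TRAIN_MODEL},
    "ai_developer": {Permission.QUERY_MODEL},
    "model_operator": {Permission.DEPLOY_MODEL, Permission.QUERY_MODEL},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Keeping the mapping in one place makes it easier to review during access audits and to tighten as roles evolve.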

4. Logging and Real-Time Alerting

Comprehensive logging strategies capture security-relevant events across LLM systems. Real-time alerting enables rapid response to potential security incidents.

Organizations must balance comprehensive logging with storage costs and privacy requirements. Log retention policies should align with regulatory requirements.

Critical events that should always be logged:

- All authentication attempts and outcomes

- Data access and modification events

- Model training and deployment activities

- Inference requests and responses

- System configuration changes
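
Logging inference requests and responses can itself create a store of sensitive data. One way to balance the two concerns, sketched below, is to log a hash and length of each prompt and response instead of the raw text. The field names and the `build_audit_event` function are assumptions for illustration; some regulatory or forensic requirements may call for retaining full content under stricter access controls instead.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_event(event_type: str, actor: str, prompt: str, response: str) -> str:
    """Build a JSON audit record that avoids storing raw prompt or response text."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": event_type,
        "actor": actor,
        # Hashes let investigators match known content without storing it.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "response_chars": len(response),
    }
    return json.dumps(event, sort_keys=True)
```

Each returned string is a single JSON line, which suits append-only log files and most log aggregation tools.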

Handling Third-Party Integrations and Vendor Risks

Third-party LLM services introduce additional security considerations. Vendor risk management for LLMs requires specialized assessment criteria and ongoing monitoring.

Organizations must evaluate vendor security practices and contractual protections. Due diligence processes should address AI-specific risks.

1. Vendor Contracts and Privacy Compliance

Vendor contracts must address data processing responsibilities and security requirements. Data processing agreements should specify how vendors handle sensitive information.

Security certifications provide some assurance but require ongoing validation. Organizations should verify vendor claims through independent assessments.

Critical contract clauses:

- Data residency and cross-border transfer limitations

- Incident notification and response requirements

- Security audit rights and frequencies

- Data deletion and return procedures

- Liability allocation for security breaches

2. Secure API Integration

API integration with third-party LLM services requires robust authentication and authorization mechanisms. Rate limiting prevents abuse and potential data extraction attacks.

Input validation becomes crucial when sending data to external services. Organizations must sanitize inputs and validate responses.

API security best practices:

- Strong authentication mechanisms (OAuth 2.0, API keys)

- Request signing and integrity verification

- Input sanitization and output validation

- Traffic encryption and certificate pinning

- Comprehensive request and response logging
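
Request signing and integrity verification are often implemented with an HMAC over the request's key fields plus a timestamp to limit replay. The sketch below assumes a shared secret and a made-up canonical message layout; real providers define their own signing schemes, so treat the function names and field order as hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign_request(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """Return a hex HMAC-SHA256 signature over the method, path, time, and body hash."""
    message = b"\n".join([
        method.upper().encode("utf-8"),
        path.encode("utf-8"),
        str(timestamp).encode("utf-8"),
        hashlib.sha256(body).hexdigest().encode("utf-8"),
    ])
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret: bytes, method: str, path: str, body: bytes,
                   timestamp: int, signature: str,
                   now: Optional[int] = None, max_skew_seconds: int = 300) -> bool:
    """Reject stale requests, then compare signatures in constant time."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > max_skew_seconds:
        return False
    expected = sign_request(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)
```

Using `hmac.compare_digest` avoids leaking information through comparison timing, and the timestamp check narrows the window in which a captured request can be replayed.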

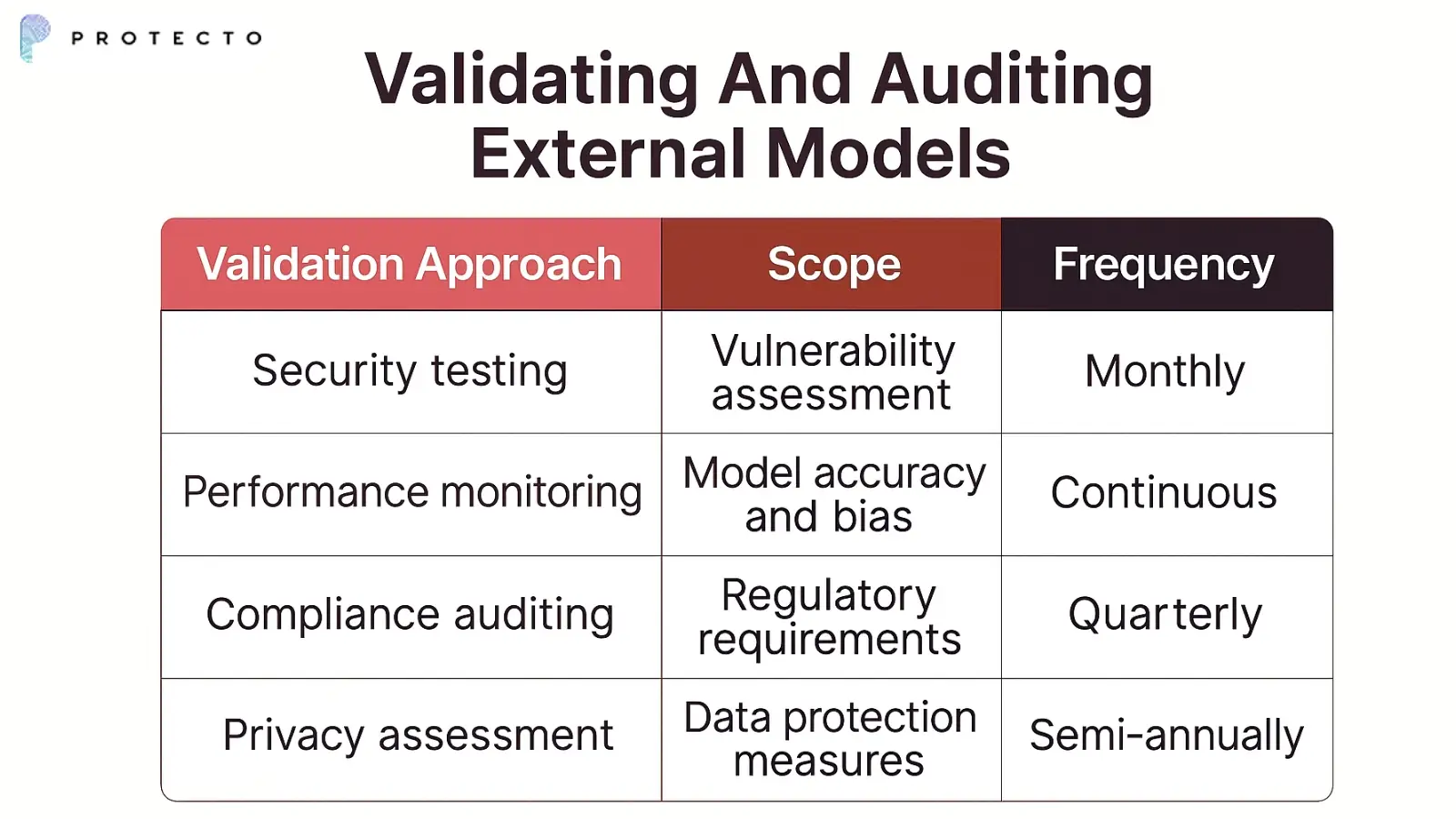

3. Validating and Auditing External Models

Third-party model validation requires specialized testing methodologies. Organizations should assess models for potential data leakage and security vulnerabilities.

Ongoing monitoring of external models helps detect changes in behavior that might indicate security issues.

Balancing Privacy with AI Innovation

Organizations face pressure to innovate with AI while maintaining strong privacy protections. AI privacy protection techniques enable both goals simultaneously.

Differential privacy adds calibrated noise so that results carry a mathematical bound on how much they can reveal about any single record. Federated learning allows model training without centralizing sensitive data. Synthetic data generation creates realistic datasets without exposing real information.
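
The simplest instance of differential privacy is the Laplace mechanism applied to a counting query, sketched below. It is shown only to make the idea concrete: the function names are assumptions, the sensitivity of 1 holds for counts specifically, and training an LLM with differential privacy relies on more involved methods such as noisy gradient updates.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    """Release a count with epsilon-differential privacy (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    # Adding or removing one person changes a count by at most 1,
    # so the noise scale is sensitivity / epsilon = 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of `epsilon` give stronger privacy but noisier answers, which is the same privacy and utility trade-off discussed in this section.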

Privacy-enhancing technologies reduce the tension between data protection and model performance. These approaches enable innovation while maintaining compliance with regulatory requirements.

Modern data minimization strategies focus on extracting maximum value from minimal data exposure. This aligns business objectives with privacy requirements.

Strengthening Trust and Compliance in LLM Deployments

Trust in LLM applications requires transparency, accountability, and demonstrated security practices. Organizations must build comprehensive governance programs that address stakeholder concerns.

LLM security best practices evolve rapidly as new threats emerge. Staying current requires ongoing investment in security research and professional development.

Transparency initiatives help stakeholders understand how organizations protect their data. Regular security assessments and public reporting build confidence in AI deployments.

Organizations should implement comprehensive monitoring and auditing capabilities. These systems provide evidence of security effectiveness and regulatory compliance.

Building trust requires consistent demonstration of security practices over time. Organizations must invest in long-term security programs rather than one-time implementations.


FAQs About LLM Security

How do I assess if my existing AI pipeline is secure?

Conduct a comprehensive security assessment examining data flows, access controls, and monitoring capabilities across your entire AI pipeline, from data collection to model deployment.

How do I retroactively remove memorized PII from a deployed LLM?

Apply techniques such as model editing or knowledge unlearning. In severe cases, retrain the model on properly sanitized datasets. In either case, keep monitoring outputs to detect residual data leakage.